You’ve just been denied a mortgage by an AI system. No human reviewed your case, no appeal is possible, and your consolation prize is a clammy explanation: “Your credit utilization ratio triggered a negative outcome.” Feeling reassured? Didn’t think so.

The more we offload to the machines, the louder we demand explainable AI (XAI)—tools that’ll break the bots and pull us out of the web of black boxes. It’s programmatic perfection! A one-stop solution to soothe our technophobia and keep the machines in check.

Except it’s not.

Explainable AI doesn’t simplify the chaos; it just rebrands it.

What we get is a gold-plated illusion: billions poured into decoding systems while the actual problems—bias, misuse, overreach—remain untouched.

The price? A lot more than dollars.

XAI 101: Decodes Influence, Not Intention

Current XAI relies on methods like SHAP (SHapley Additive exPlanations) and LIME (Local Interpretable Model-Agnostic Explanations), which surface correlation rather than causation. SHAP leans on cooperative game theory to apportion “blame” to key inputs (income level, credit history, pixel clusters), mapping out the variables that tipped the scales. LIME perturbs the input data to see how predictions change, then fits a simplified model that explains which features mattered most for a specific decision. Image-heavy applications resort to saliency mapping, which uses gradient-based analysis to determine which pixels caught the AI’s eye. Higher-stakes scenarios like healthcare and hiring resort to counterfactual explanations that dream up alternate realities to reveal how the outcome would change if an input were plausibly changed, e.g., “What if the tumor were 2 mm smaller?”
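To make the game-theory bit concrete, here is a minimal sketch of an exact Shapley computation. Everything in it is an assumption for illustration: `toy_score` is a made-up scoring function, the `baseline` applicant is hand-picked, and "missing" features are simply swapped for their baseline values. It is the textbook formula, not the `shap` library's API or its approximations.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions for one prediction.

    Features outside a coalition take their baseline value, which is a
    simplifying assumption of this sketch.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return predict(mixed)

    phi = {}
    for name in names:
        others = [k for k in names if k != name]
        total = 0.0
        for size in range(n):
            # Shapley weight for a coalition of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                gain = value(set(subset) | {name}) - value(set(subset))
                total += weight * gain
        phi[name] = total
    return phi


def toy_score(x):
    """Hypothetical scoring function, not a real credit model."""
    score = 0.5 * x["income"] - 2.0 * x["utilization"]
    if x["utilization"] > 0.8 and x["late_payments"] > 0:
        score -= 1.0  # interaction between two features
    return score


applicant = {"income": 4.0, "utilization": 0.9, "late_payments": 2}
baseline = {"income": 5.0, "utilization": 0.3, "late_payments": 0}
attributions = shapley_values(toy_score, applicant, baseline)
```

The attributions always add up to the gap between the applicant's score and the baseline's score, and the interaction penalty gets split between the two features that caused it. Note the loop over every coalition: the cost doubles with each extra feature, which is why real tools approximate. And note what you get back: a ledger of influence, with no "why" in sight.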

If you’re willing to foot a hefty electricity bill, XAI can give a passable report of what matters. But why? Nada.

The Case Against Asking ‘Why’

“The 34th layer of my convolutional neural network registered a 12.3% pixel intensity anomaly in the upper-right quadrant, correlating with malignancy markers across 15-region datasets normalized for demographic and genetic covariance, yielding a confidence score exceeding the malignancy threshold established by Bayesian posterior calibration.”

This is what “why” looks like. Confusing? Most definitely. Comforting? Marginally.

But generating this level of explanation (which most will just chuck out along with the IKEA assembly manual) isn’t just costly; it’s disruptive. In a bad way. Explainability drains computational resources, slows innovation, and risks turning every new breakthrough into a bureaucracy of self-justification. While the promise of explainability feels noble, the trade-offs could stifle AI’s real potential. Is the goal of “why” worth derailing progress?

In the end, what matters isn’t how AI works—it’s whether it works *for us.*

Clarity Ain’t Cheap

To truly answer why a decision was made, researchers are developing approaches like neuro-symbolic AI, contrastive explanations, and causal inference models. Neuro-symbolic methods in particular rely on hybrid architectures that blend deep learning’s pattern recognition with symbolic reasoning’s rule-based logic. Training such systems requires significantly more compute, as these models must simultaneously process unstructured data (e.g., images or text) and structured logic frameworks, introducing combinatorial complexity that balloons with the scale of the task.

But the real challenge lies in the hardware. Current GPUs and TPUs, like NVIDIA’s H100 or Google’s TPU v5, are designed to maximize throughput for training and inference—not for the iterative, gradient-heavy computations needed for XAI. Generating advanced explanations, such as causal attributions or attention visualizations, requires chips optimized for gradient replay, dynamic memory access, and low-latency parallelism. XAI workloads demand fundamentally different hardware, especially for real-time applications like autonomous vehicles or medical diagnostics, where interpretability must happen alongside predictions. Look at how much the big guys are dishing out for chips to power LLMs. The cost of developing XAI-specific chips would likely surpass that due to the need for new layers of computational overhead. Engineering challenge? More like a financial nightmare.

Peace Of Mind VS Progress Paradox

Building AI is already a high-wire act of experimentation and optimization. Add explainability into the mix, and you’re not just walking the tightrope—you’re doing it with a refrigerator on your back.

Explainability demands re-architecting models to generate interpretable outputs. High-performing systems like vision transformers (ViTs) thrive on complexity, scanning massive datasets to extract nuanced patterns, but making them explainable often means bolting on extra attribution passes or surrogate models, which siphon computational power and can tank performance. In reinforcement learning, developers could be forced to simplify reward structures or design decipherable policies, kneecapping the agent’s optimization potential. The same complexity that fuels groundbreaking results becomes the villain in a system shackled by transparency requirements.

The dev pipeline also gets a good shaking. Explainability techniques like counterfactuals or causal modeling demand repeated evaluations on perturbed datasets and add layer upon layer of post-hoc validations to the current grind of iteration (think refining hyperparameters, tweaking loss functions, and running inference at scale). This isn’t a few extra steps; it’s a marathon at every stage, turning what should be a sprint toward breakthroughs into a bureaucratic slog. The compute load? Explanations eat cycles like they’re free, slowing down already glacial training processes in advanced fields like multi-modal or generative AI.

Huzzah, you’ve made it! Not so fast: regulation steps in as the final boss. Industries like healthcare and finance are mandating explainability for deployment, but these requirements often feel like asking Lewis Hamilton to justify every twitch of his wrist before he crosses the finish line. Developers spend more time proving their models are interpretable than ensuring they work optimally. Punched out an AI-driven cancer diagnostic tool? Awesome. Now go explain every gradient and weight to a room of spectacled regulators. By the time you’re done, the tech’s probably obsolete, and the innovation you were chasing is stuck in compliance purgatory.

Explainability warps priorities. Instead of pushing the boundaries of what AI can achieve, teams are forced to tiptoe around transparency demands. Startups and researchers alike might retreat from bold ideas, choosing compliance-friendly vanilla iterations over moonshots. Progress stalls, ambition fizzles, and the field inches forward when it should be sprinting.

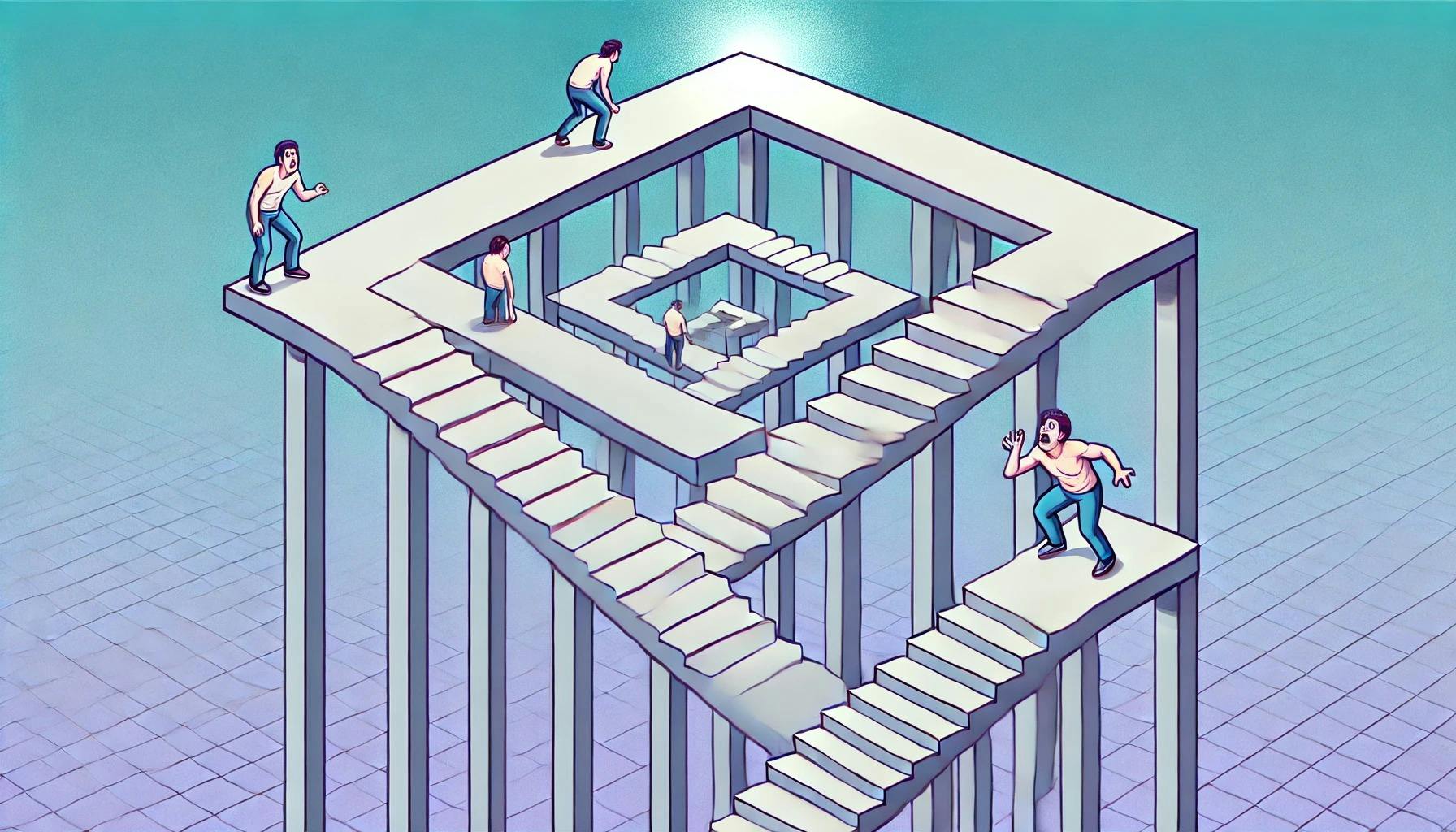

The Russian Doll Problem: Explanations Demand Explanations

Explainable AI claims to simplify the inscrutable, but the explainer is no saint. Any tool smart enough to unravel an advanced model is smart enough to create its own mysteries. The explainer requires an interpreter, the interpreter will need a translator, and you get the point. Recursion dished out as revelation, leaving us none the wiser.

Take counterfactual explanations, the guys that simulate alternate realities—e.g. what if you saved instead of splurging on avocado toast—to show how outcomes would change. But these scenarios rely on optimization assumptions that rarely hold true, like the independence of features or a linear relationship between inputs. When those assumptions fail, the explanation becomes another inscrutable abstraction. And to fix that? A second layer of causal models or saliency maps, looping us deeper into a spiral where each new tool demands its own interpreter. Instead of breaking the black box, we’re just nesting smaller, equally opaque ones inside.
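The independence assumption is easy to spot once it is written down. A minimal sketch, assuming a hypothetical two-feature approval rule (`toy_approve`) and a naive one-feature search; real counterfactual tools use fancier optimizers, and none of these names come from an actual library.

```python
def toy_approve(x):
    """Hypothetical decision rule: approve when the score is non-negative."""
    score = 0.4 * x["savings"] - 3.0 * x["utilization"] + 1.1
    return score >= 0


def single_feature_counterfactual(decide, instance, feature, step, max_steps=100):
    """Nudge one feature until the decision flips; return the changed copy or None.

    Every other feature is held fixed, which silently assumes the
    features can move independently of one another.
    """
    original = decide(instance)
    candidate = dict(instance)
    for _ in range(max_steps):
        # Round to keep floating-point drift out of the step sequence.
        candidate[feature] = round(candidate[feature] + step, 10)
        if decide(candidate) != original:
            return candidate
    return None


applicant = {"savings": 2.0, "utilization": 0.9}
flipped = single_feature_counterfactual(
    toy_approve, applicant, "utilization", step=-0.05
)
```

The search happily lowers `utilization` while `savings` sits frozen, as if paying down a card never touched a bank balance. When the features are entangled in real life, the "what if" it returns describes a world nobody can actually move to.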

Multi-modal systems (AI models that process text, images, and audio all at once) are deliciously useful, but disgustingly convoluted. Explaining how these systems balance competing inputs is a Herculean task: it means decoding the fusion mechanisms, such as attention layers or cross-modal transformers, that weight and align features across vastly different data types. But these explanation tools themselves rely on complex optimization loops and parameter tuning, necessitating additional layers of analysis.

Ah, the delectable irony: XAI doesn’t demystify AI—it builds another machine, just as convoluted, to perform that illusion.

We’re not solving the black box; we’re fracturing it into infinite shards of partial clarity, each less comprehensible than the last.

The more we chase “why,” the more opaque and expensive AI becomes, leaving us tangled in a self-imposed paradox: AI so explainable, no one can explain it.

Safeguard, Or Sidearm?

SHAP and LIME might show you neat bar charts of what influenced a decision, but those charts are only as honest as the people designing them. Discriminatory outcomes can be reframed as logical, with innocuous variables like zip codes and spending habits getting the spotlight while the uglier biases (gender proxies, income clusters) lurk conveniently out of frame. In the wrong hands, transparency becomes theatre.

In high-stakes domains, organizations can produce outputs that align with strict regulatory requirements while obfuscating unethical practices or technical shortcuts just by editing a couple of parameters. Tweak the explainer, feed it the right narrative, and voilà: plausible deniability in code form. Decisions driven by biased datasets or flawed objectives can be soaked in sanitized logic, transforming explainability into a shield against scrutiny rather than a pathway to accountability: an immaculate layer of pseudo-logic designed to stop questions before they start.

“Why” Was Never The Problem

Instead of throwing billions into making AI explain itself, we should focus on making it better. The real problem with AI isn’t that we don’t know why it does what it does; it’s that it does the wrong things in the first place. Bias in decisions? Instead of 50-layer explanations about why takeout orders and surnames got a loan rejected, invest in algorithmic bias mitigation at the source: re-weight datasets, apply fairness constraints during training, or use adversarial techniques to expose and remove hidden bias. Fix the rot before it gets baked into the model.
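Re-weighting a dataset sounds grander than it is. A minimal sketch of one common recipe, assuming a single protected attribute and binary labels: each example is weighted by P(group) × P(label) / P(group, label), so that group and label look statistically independent to the learner. The toy data and the helper names are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Per-example weights that make group and label statistically independent.

    weight(g, y) = P(g) * P(y) / P(g, y), estimated from counts.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Hypothetical toy data: group "a" is approved (1) far more often than group "b".
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)


def weighted_approval_rate(group):
    """Approval rate for one group after applying the weights."""
    rows = [(w, y) for w, g, y in zip(weights, groups, labels) if g == group]
    return sum(w * y for w, y in rows) / sum(w for w, _ in rows)
```

On this toy data the raw approval rates are 75% and 25%; after weighting, `weighted_approval_rate` gives the same rate for both groups. The weights can then be passed to any trainer that accepts per-sample weights. No explainer required: the skew is handled before the model ever sees it.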

Explainability also won’t solve reliability. Instead of backtracking with tools like LIME or SHAP to justify errors, use robust optimization techniques that make models less sensitive to noisy or adversarial inputs. Better regularization, outlier detection, and calibration methods—like temperature scaling or Bayesian uncertainty models—can ensure predictions are not only accurate but dependable. This approach skips the messy middle layer of over-explaining bad decisions and focuses on making better ones.
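Temperature scaling, for one, fits in a few lines. A minimal sketch, assuming a classifier that emits logits and a held-out validation set; the logits below are invented, and a plain grid search stands in for the gradient-based fit usually used in practice.

```python
import math


def softmax(logits, temperature=1.0):
    """Softmax over logits divided by a temperature."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def nll(logit_rows, labels, temperature):
    """Mean negative log-likelihood of the true labels at a given temperature."""
    losses = [
        -math.log(softmax(row, temperature)[y])
        for row, y in zip(logit_rows, labels)
    ]
    return sum(losses) / len(losses)


def fit_temperature(logit_rows, labels, candidates):
    """Pick the candidate temperature with the lowest validation NLL."""
    return min(candidates, key=lambda t: nll(logit_rows, labels, t))


# Hypothetical validation logits from an overconfident classifier:
# every row is near-certain, yet one of the four predictions is wrong.
val_logits = [[6.0, 0.0], [6.0, 0.0], [0.0, 6.0], [6.0, 0.0]]
val_labels = [0, 0, 1, 1]
grid = [0.5 + 0.1 * i for i in range(96)]  # candidate temperatures 0.5 to 10.0
temperature = fit_temperature(val_logits, val_labels, grid)
```

Dividing by the fitted temperature leaves every predicted class unchanged and only deflates the confidence, here pulling it from near-certainty down toward the 75% accuracy the model actually earned on the validation rows. The model doesn't explain itself any better; it just stops bluffing.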

Regulation is another frontier that doesn’t need XAI’s convoluted gymnastics. Trust doesn’t necessitate that AI bare its soul, just that AI works consistently. Instead of mandating explainability as a vague standard, push for rigorous testing frameworks for worst-case and edge scenarios, backed by auditing pipelines. Think of it like crash tests for cars: no one needs to understand the mechanics of airbag deployment; we just need to know it works. Why should AI be any different?

“Why” is a distraction. The better question is “what”—what can we do to make AI fairer, safer, and more reliable?

The world doesn’t need a 100-step explanation of what went wrong.

It needs systems designed to get things right in the first place.

Final Thoughts: Freak Out, But Don’t Be A Fool

If AI doesn’t have you clutching the nearest Xanax, you’re either a hermit, in denial, or blueprinting anarchy. AI is effing terrifying. But we can’t let existential dread push us into placebo solutions as convoluted as the chaos we’re trying to solve.

Funny, the best way to handle AI might be to lean on it less. Not every problem needs a machine-learning fix, and sometimes, plain old human judgment works just fine. Freak out. Stay scared. Paranoia powers progress.