Table of links

Abstract

1 Introduction

2 Related Work

2.1 Fairness and Bias in Recommendations

2.2 Quantifying Gender Associations in Natural Language Processing Representations

3 Problem Statement

4 Methodology

4.1 Scope

4.2 Implementation

4.3 Flag

5 Case Study

5.1 Scope

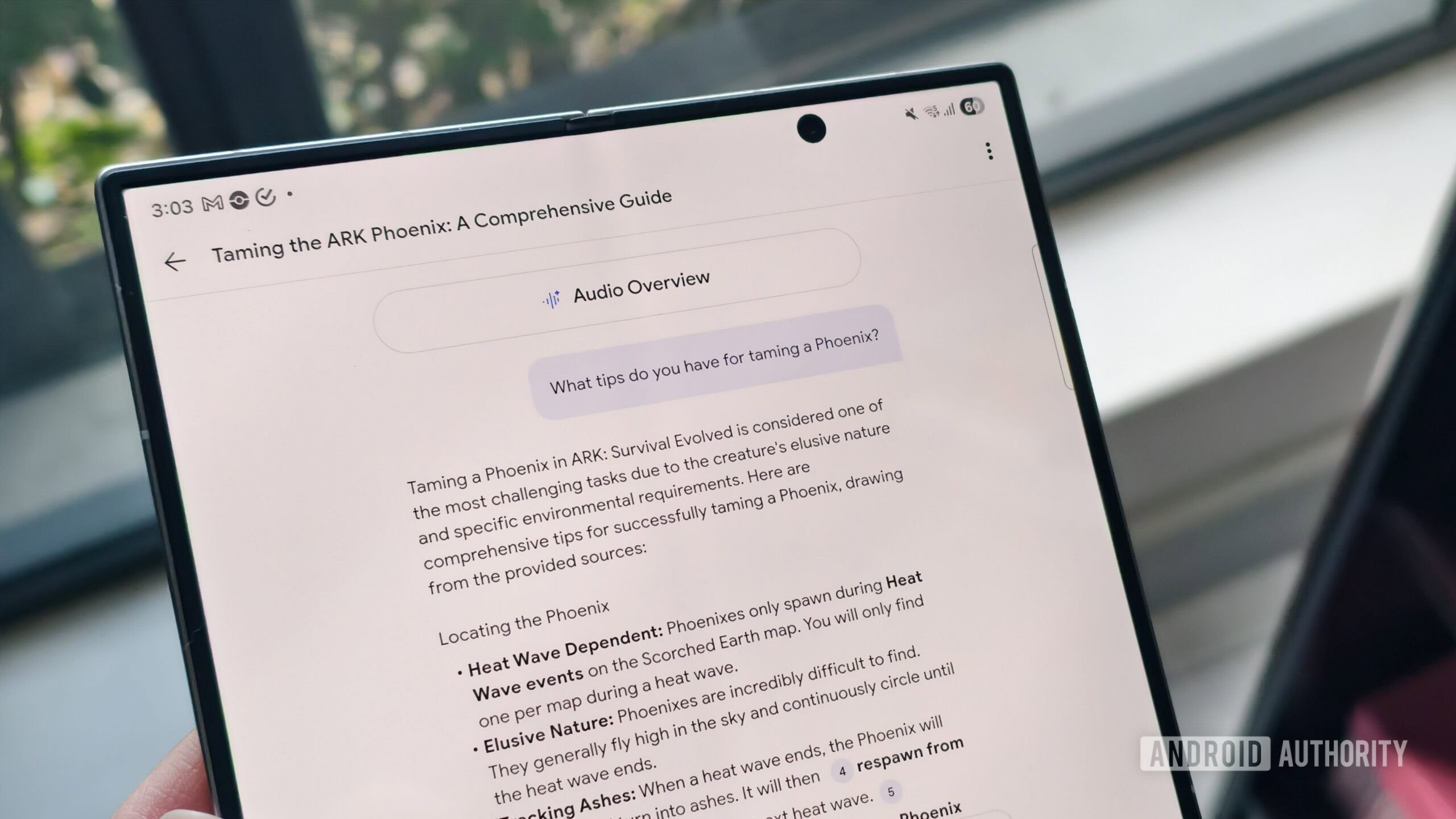

5.2 Implementation

5.3 Flag

6 Results

6.1 Latent Space Visualizations

6.2 Bias Directions

6.3 Bias Amplification Metrics

6.4 Classification Scenarios

7 Discussion

8 Limitations & Future Work

9 Conclusion and References

3 Problem Statement

Research on disentangled latent factor recommendation has become increasingly popular because LFR algorithms have been shown to entangle model attributes in their trained user and item embeddings, which leads to unstable and inaccurate recommendation outputs [44, 58, 62, 65]. However, most of this research is outcome-focused. It offers mitigation methods that improve performance but does not address the potential for representation bias in the latent space. As a result, few existing evaluation techniques analyze how attributes are captured in the recommendation latent space, whether explicitly (because they are used directly as model attributes) or implicitly. The few metrics that do exist focus on measuring the degree of disentanglement for explicitly used, independent model attributes. They do not investigate possible implicit bias associations between entity vectors and sensitive attributes, or systematic bias captured within the latent space [44]. Latent representation bias is well studied in other areas of representation learning, such as natural language and image processing. In recommendation, however, it remains under-examined compared with the large body of research on exposure and popularity bias [23].
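To make "implicit association between entity vectors and a sensitive attribute" concrete, the sketch below shows one simple, generic way such an association could surface. It is not the evaluation framework proposed in this paper. It borrows a centroid-difference "bias direction," a technique of the kind used in NLP embedding-bias work. The function names, the toy 2-D embeddings, and the binary attribute labels are all hypothetical assumptions chosen for illustration. The direction points from one user group's mean embedding to the other's, and each item's projection onto it indicates which group's region of the latent space the item sits closer to. This happens even though the attribute was never a model input.

```python
import numpy as np

def centroid_bias_direction(user_vecs: np.ndarray, is_group_a: np.ndarray) -> np.ndarray:
    """Hypothetical helper: unit vector from group B's centroid to group A's centroid.

    Assumes both groups are non-empty and their centroids differ.
    """
    mean_a = user_vecs[is_group_a].mean(axis=0)
    mean_b = user_vecs[~is_group_a].mean(axis=0)
    direction = mean_a - mean_b
    return direction / np.linalg.norm(direction)

def association_scores(item_vecs: np.ndarray, direction: np.ndarray) -> np.ndarray:
    """Hypothetical helper: scalar projection of each item vector onto the direction.

    Positive scores lean toward group A, negative scores toward group B.
    """
    return item_vecs @ direction

# Toy data (assumed, not from the case study): 4 users and 3 items in 2-D.
users = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
group_a = np.array([True, True, False, False])  # hypothetical binary attribute
items = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])

d = centroid_bias_direction(users, group_a)
scores = association_scores(items, d)
print(np.round(scores, 3))  # roughly [0.707, -0.707, 0.0]
```

A single mean-difference direction only captures a linear, binary association. A real audit would need to handle non-binary attributes, check the statistical significance of the scores, and consider non-linear structure.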

The work presented in this paper aims to close the current research gap in evaluating representation bias in LFR algorithms by providing a framework for evaluating attribute association bias. Identifying attribute association bias encoded in user and item (entity) embeddings is essential when those embeddings become downstream features in hybrid multi-stage recommendation systems, which are common in industry settings [6, 14]. Evaluating the compositional fairness of such systems is challenging without first understanding how this type of bias arises within an individual component [59]. Compositional fairness here means the potential for bias in one component to amplify in downstream components. Understanding the current state of bias is imperative when auditing and investigating a system before mitigation in practice [9]. Our proposed methods seek to lower the barrier for practitioners and researchers who want to understand how attribute association bias can infiltrate their recommendation systems. These evaluation techniques will help practitioners determine more accurately which attributes to disentangle during mitigation. They will also provide baselines for judging whether the mitigation succeeded.

We apply these methods to an industry case study that assesses user gender attribute association bias in an LFR model for podcast recommendations. Prior research has primarily focused on evaluating provider gender bias, owing to the lack of publicly available data for studying user gender bias. To the best of our knowledge, our work provides one of the first looks at quantifying user gender bias in podcast recommendations. We hope that our observations help other industry practitioners evaluate user gender and other sensitive attribute association bias in their systems. We also hope they provide quantitative insights into podcast listening beyond earlier qualitative user studies, and encourage future discussion of, and greater transparency around, sensitive topics within industry systems.

:::info

Authors:

- Lex Beattie

- Isabel Corpus

- Lucy H. Lin

- Praveen Ravichandran

:::

:::info

This paper is available on arXiv under the CC BY 4.0 DEED (Attribution 4.0 International) license.

:::