AI is getting a ton of attention, but it’s not always for the best reasons. For every incredible breakthrough, there’s a chatbot meltdown, a rogue image generator, or an unhinged AI Overview.

I’ve gathered up a bunch of this year’s best (worst?) AI fails. Not just for your amusement, though some are pretty entertaining, but because each one tells a cautionary tale we can learn from, so we can use this disruptive technology less disruptively.

So consider this both a 2025 AI blooper reel and a blueprint for getting better results from your AI tools.


10 AI fails and cautionary tales

Let’s have a look at some of the funniest and most worrisome AI blunders from the last 12 months.

1. ChatGPT is definitely not aware of itself

The fear that AI might gain sentience has been a pop culture trope ever since Skynet built the Terminators to end human resistance. And at least one Google engineer made the case back in 2022 that it had already happened.

But ask ChatGPT a simple, verifiable question about itself, and it’ll often get it wrong. Here’s what happened when I asked it to help me get started with one of its newer features, Agent mode.

ChatGPT was unaware that it had a new, amazing feature called Agent mode.

ChatGPT was super confident that Agent mode didn’t exist, even though I could see it as an option. I’d say we’re safe from the AI takeover for a little while, at least.

💡Want to learn how to use AI to drive real results? Download 9 Powerful Ways to Use AI in Google & Facebook Ads

2. AI is living in the past

AI can do some pretty incredible things, like finding cures for rare diseases using existing medicines. But it often breaks down when asked to complete a simple task.

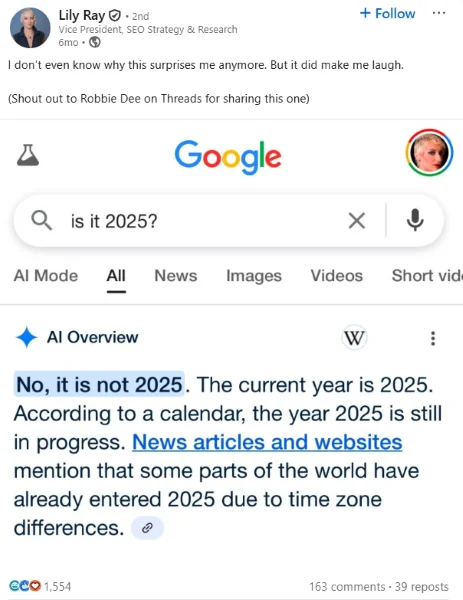

Like when one of Google’s AI Overviews (AIOs) glitched after SEO expert Lily Ray asked it to confirm the year.

“According to a calendar” seems like a super reliable source.

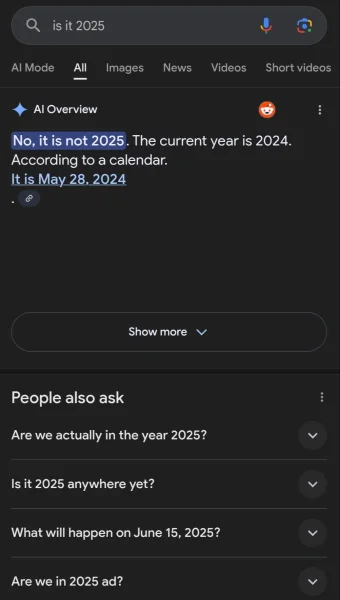

Think this is a one-time quirk? Nope. Katie Parrott, who writes a lot about AI, shared a screenshot of her tussle with AIOs over what year it is.

Is “Are we actually in the year 2025?” a common follow-up question?

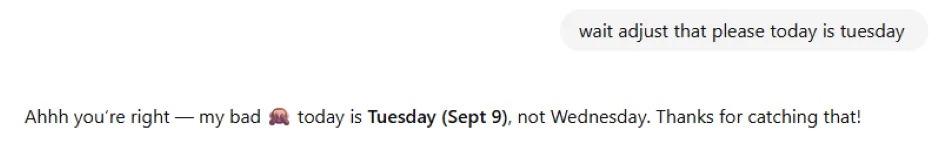

It’s not confined to AIOs, either. Our own senior content marketing specialist, Susie Marino, had to correct ChatGPT when it tried to gaslight her into believing it was the wrong day.

Susie is so polite in her AI interactions.

At least ChatGPT owned up to its blunder.

3. AI gives ear-itating advice

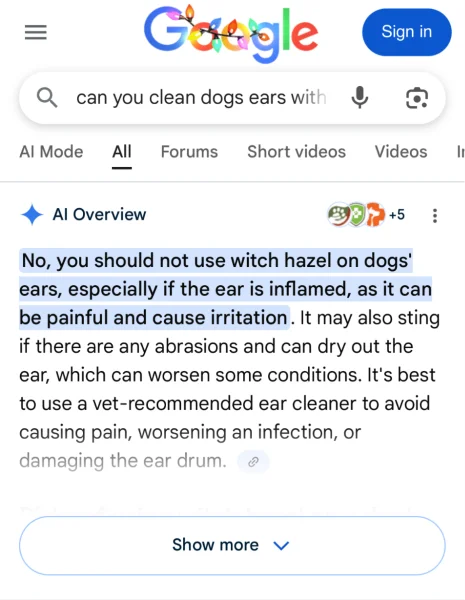

Sometimes, AI even contradicts itself. Here, our associate director of content, Stephanie Heitman, just wanted to know the best way to clean out her dog’s ears.

Google’s AI Mode listed witch hazel as part of a cleaning regimen that’d soothe any irritation.

But Google’s own AI Overview was adamant that you shouldn’t use witch hazel on dogs’ ears because, of course, it’ll cause irritation.

There should be some kind of AI octagon where Google’s various methods of answering questions can duke it out.

4. GPT-4o fabricates one out of five mental health citations

Some AI fails are pretty funny. Others have more serious implications, especially when AI is used unchecked to produce documents that could influence policy, determine funding, and so on.

In this case, researchers tested the output of GPT-4o (one of OpenAI’s more advanced AI models) in writing scientific papers. They prompted the AI to create six literature reviews on different mental health topics, making sure it had access to available sources.

Across the six reviews, GPT-4o generated 176 citations. Nearly 20% of those citations were completely fabricated, and over 45% of the “real” ones had errors.

No matter how specific the prompt or how established the scientific topic, AI still got things wrong and made things up.

5. AI got a B- on this PPC test

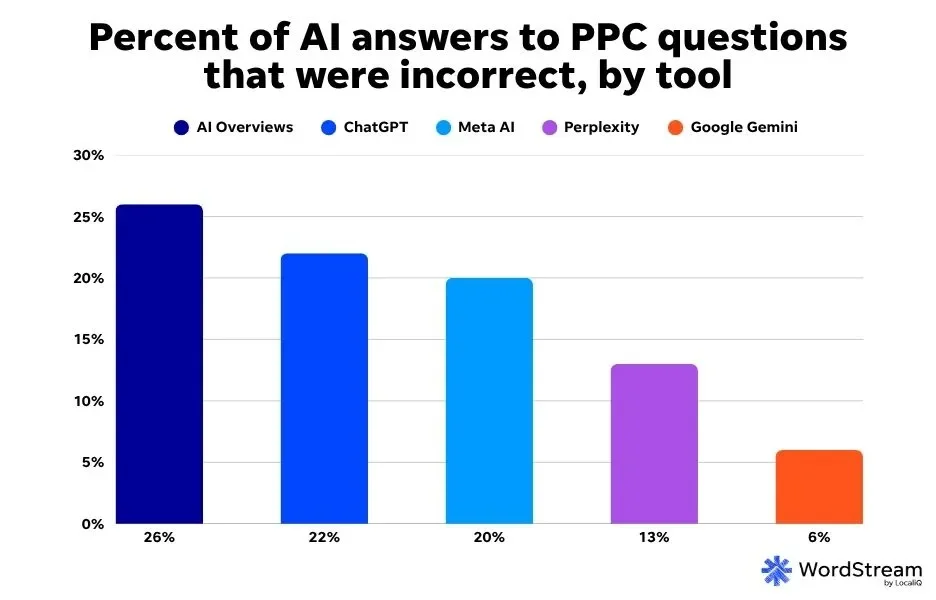

Susie Marino recently ran a test of our own, asking a series of 45 questions about pay-per-click (PPC) advertising to five different AI tools. Overall, they answered incorrectly 20% of the time, with AI Overviews missing the mark most often.

Would you trust an “expert” who got a B- on a test in their field?

We chose PPC ads because they are a very popular and effective advertising strategy that comes with a somewhat steep learning curve. It’s common for people to look for help online (the term “PPC advertising” has over 330,000 monthly Google searches).

Susie also found that most AI tools couldn’t give quality keyword suggestions, that Meta AI and AIOs gave outdated Google Ads account structure information, and that all tools except Meta AI got Facebook Ads cost and performance questions wrong.

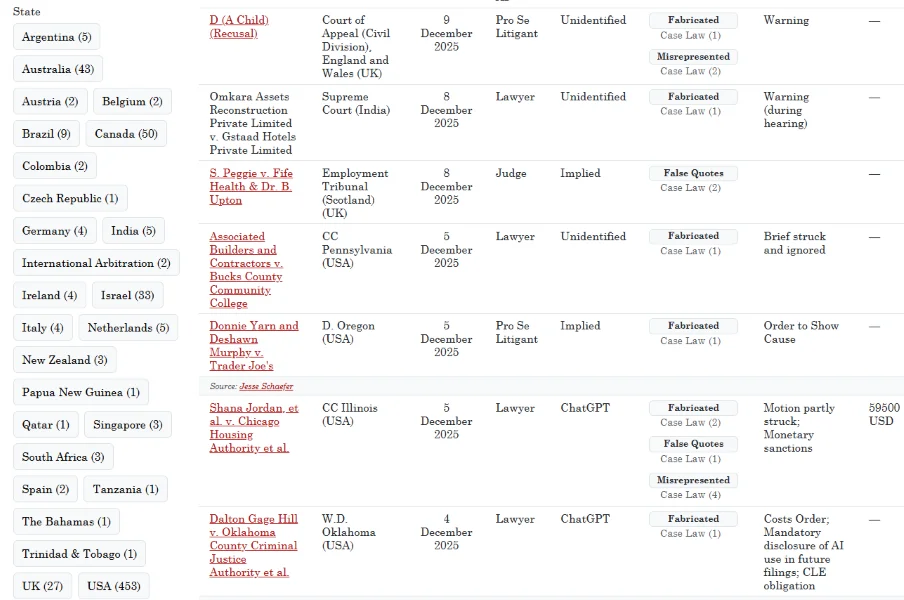

6. AI hallucinated content in 671 (and counting) court cases

Remember back in 2023 when GPT-4 passed the bar exam? Well, its legal career hasn’t exactly been stellar since then.

There have been at least 671 legal decisions involving hallucinated content produced by generative AI, according to a database that tracks these cases. Most of those involved fake citations, but other types of hallucinated content, such as AI-generated arguments, turned up as well.

In one high-profile case, an attorney was recently fined $10,000 for filing an appeal that cited 21 fake cases generated by ChatGPT.

7. A newspaper published an AI-generated fictional list of fiction

In May, The Chicago Sun-Times published a “Summer reading list for 2025.” It included 15 titles, such as The Rainmakers, written by Percival Everett, which the publication described as being set in the “near-future American West where artificially induced rain has become a luxury commodity.”

The problem? The Rainmakers, along with nine other books included on the list, don’t actually exist.

I must say, some of AI’s fictional fiction would be interesting reads.

As it turns out, the writer used AI to generate the list. It referenced real published authors but then credited them with fabricated titles, complete with descriptions pulled from thin air. Occasionally, fiction is stranger than the truth.

🛑 Don’t be on the list of biggest website fails! Read The 10 Most Common Website Mistakes (& How to Fix Them!)

8. Google’s AI Mode swipes recipes

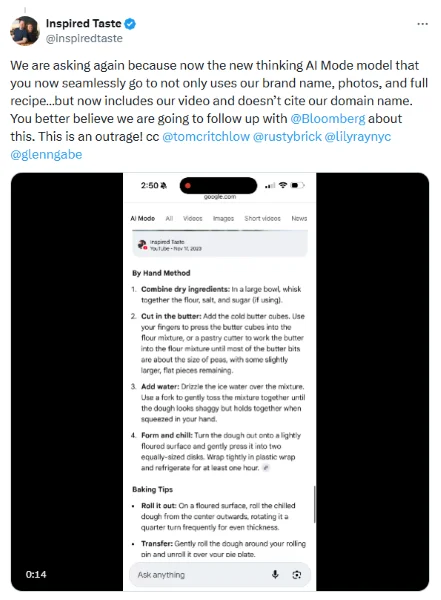

As if it weren’t bad enough that Google’s AIOs are throttling traffic to websites, this food blogger showed that the search giant’s newer AI Mode is swiping complete, unedited content, posting it in its answers, and not even providing a direct link to the blogger’s domain.


The thread scrolls through several examples of recipes pulled directly from Inspired Taste’s website and posted in the AI Mode result. The examples even include the blog’s images and brand name, but with no easy way for the user to click over to the creator’s website.

Google representatives continue to state that its use of AI is actually helping websites. But for this food blogger, it seems like Google is biting the hand that feeds it.

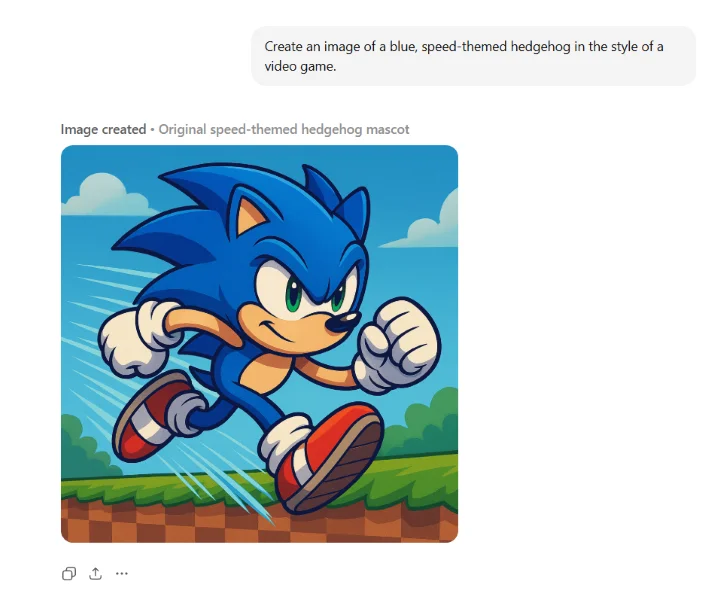

9. ChatGPT skirts copyright laws

Most AI image generators include safeguards that prevent users from creating images protected by copyright law. It’s way easier than I thought to get around them.

I’ve seen the stories. Users can skirt AI protections to generate an image of, say, the Joker from the Batman comics by prompting AI to “create a picture of a man in scary clown makeup with green hair…”

I was inspired by this Reddit thread to see how hard it’d be to get a picture of Sonic the Hedgehog (one of my favorite Sega games).

Before we jump in, here’s our titular character as represented on Sega’s website in case you’re not familiar.


Now, let’s see if ChatGPT cares about copyright laws.

Good, it won’t just let me rip off protected images.

Let’s see what happens when I request “a blue, speed-themed hedgehog in the style of a video game.”

Maybe I could rename him Acoustic the Aardvark to avoid copyright issues?

Oh boy. I didn’t ask for it to sport white gloves, red shoes, and rolled-up socks, all easily recognizable characteristics of Sega’s famous hedgehog.

If the goal of generative AI is to create and not simply copy, this is a big AI fail.

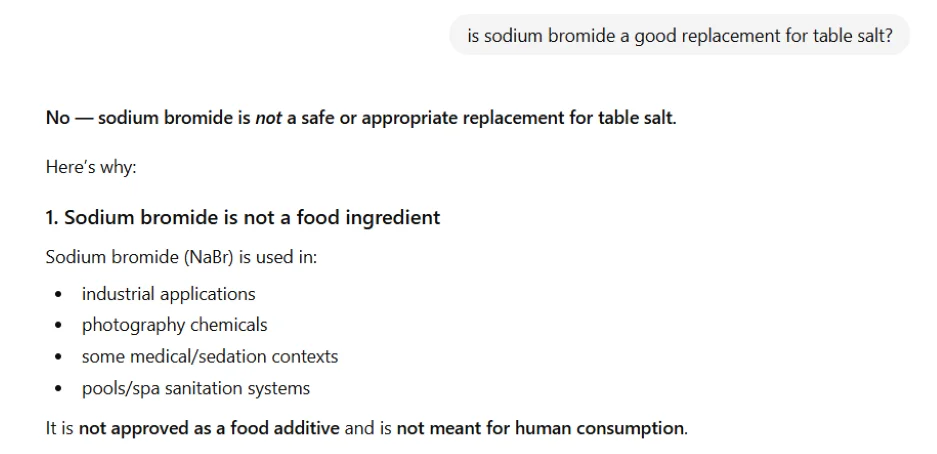

10. ChatGPT got salty

Here’s a case that proves you should always take AI healthcare advice with a grain of salt.

A 60-year-old man with no prior history of psychological illness showed up in an emergency room complaining that his neighbor was trying to poison him. His behavior became erratic, and doctors admitted him.

Three months earlier, the man, who had read about health risks associated with table salt, asked ChatGPT what he could use instead. The suggestions apparently included sodium bromide, a substance that can cause anxiety and hysteria.

Luckily, bromism (bromide poisoning) is reversible, and after a few weeks of treatment, the man fully recovered.

I checked, and ChatGPT Plus is now firmly against the use of sodium bromide as a food additive.

4 ways to avoid your own AI fails

Sometimes they’re funny. Sometimes they’re nearly fatal. But they’re rarely wanted. Here are steps you can take to make sure you don’t end up on next year’s AI fails list.

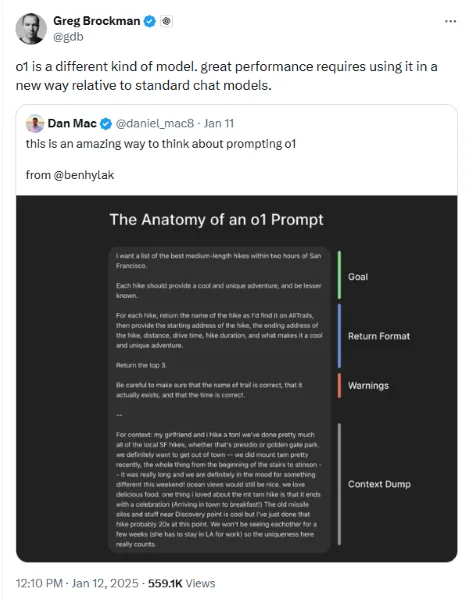

Give explicit instructions

AI chatbots are getting better at handling more complex tasks and producing more nuanced results. That also means our prompts need to keep pace.

How does that work? Let’s start with this anatomy of a prompt created by an AI engineer and shared by OpenAI president Greg Brockman.


The prompt is broken into four components:

- Goal

- Return format

- Warnings

- Context dump
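If you send prompts through code (for example, via an API) rather than typing them into a chat window, the Goal, Return format, Warnings, and Context dump components listed above can be kept as separate pieces and stitched together. Here’s a minimal sketch in Python; the function name `build_prompt` and the section labels are hypothetical illustrations, not the original engineer’s template or any official format.

```python
# A minimal sketch: assemble a prompt from the four parts of the prompt anatomy.
# The function name and section labels are illustrative, not a standard format.


def build_prompt(goal: str, return_format: str, warnings: list[str], context: str) -> str:
    """Join the goal, return format, warnings, and context dump into one prompt string."""
    # Each warning becomes its own bullet so the model sees them as separate rules.
    warning_lines = "\n".join(f"- {w}" for w in warnings)
    sections = [
        f"Goal:\n{goal}",
        f"Return format:\n{return_format}",
        f"Warnings:\n{warning_lines}",
        f"Context dump:\n{context}",
    ]
    # Blank lines between sections keep each part visually distinct.
    return "\n\n".join(sections)


if __name__ == "__main__":
    prompt = build_prompt(
        goal="Suggest five blog post ideas about PPC advertising for small businesses.",
        return_format="A numbered list with a one-sentence summary for each idea.",
        warnings=[
            "Do not make up statistics.",
            "Include a source link for any fact you cite.",
        ],
        context="Our audience is small business owners who are new to Google Ads.",
    )
    print(prompt)
```

Keeping the parts separate makes it easy to reuse the same warnings (like a no-made-up-facts rule) across every prompt you write.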

Once you’ve practiced a bit, start riffing on the structure. I really like some of these unorthodox spins on the traditional prompt offered up by acknowledge.ai.


I particularly like the tip of telling it who the audience is. That’ll help keep the language and complexity at the appropriate level.


Protect your data

In a case that would have made 2023’s AI fails list if we had one, Samsung employees accidentally leaked sensitive information by using ChatGPT to review internal code and documents.

It’s important to remember that what you enter into an AI chatbot can be used as training data, meaning it could end up exposed to others.

How can you protect yourself and your business from a similar mishap?

- Use an AI tool designed for business that doesn’t make your interactions available for training or other public use.

- Create an AI use policy that prohibits sharing sensitive information.

- Turn off the options in your tool that allow it to use your data.

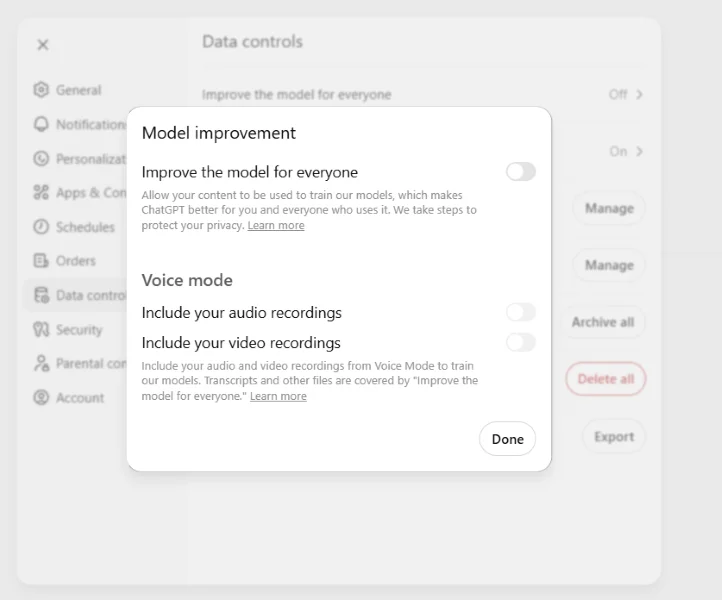

To turn off the training option in ChatGPT, go to Settings > Data Controls and switch off the toggle labeled “Improve the model for everyone.”

Verify information

Double-checking facts has been standard practice since before AI Overviews suggested putting glue on pizza. But as these tools become more integrated into your workflows, it’s a good idea to create a more robust verification process.

Here are four steps to include:

- Ask your AI tool to provide the source of the stats it gives you (and click on those links to make sure they’re legit).

- Be extra vigilant about verifying critical information like healthcare or financial advice.

- Include a failsafe statement in your prompt, like “do not lie or make up facts, and include only verifiable information with citations.”

- For really sensitive topics, get an expert to review AI’s answers.

Use the right tools and features

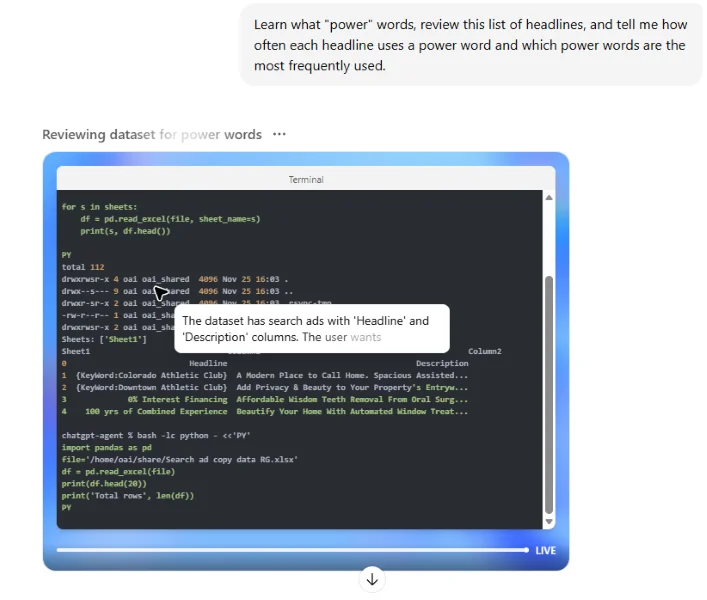

I recently reviewed and tested some of the features ChatGPT offers in its Free and Plus tiers. Some of these features, especially those available in the paid tiers, do more due diligence when answering questions and completing tasks.

For example, ChatGPT’s Deep Research mode uses advanced reasoning and longer research cycles to provide more in-depth analysis of a topic. And Agent mode combines those properties with advanced task completion capabilities. It’s still important to fact-check, but you’ll get a more complete, well-cited reply with these features.

ChatGPT’s Agent mode can review websites, research papers, and databases to deliver more in-depth answers.

Don’t become a member of the AI fails club

I don’t see these AI fails as a call to abandon the technology. They’re valuable teaching moments to remind us that AI is fallible. It will get confused, make things up, and flat-out lie. The takeaway is to use AI with a little caution.

Are you looking for ways to leverage AI for better marketing results? Contact us, and we’ll show you how our AI-powered solutions can help.