:::info

Authors:

(1) Frank Palma Gomez, from Boston University and the work done by him and Ramon during their internship in Google Research and Google DeepMind respectively;

(2) Ramon Sanabria, The University of Edinburgh and the work done by him and Frank during their internship in Google Research and Google DeepMind respectively;

(3) Yun-hsuan Sung, Google Research;

(4) Daniel Cer, Google Research;

(5) Siddharth Dalmia, Google DeepMind and Equal Advising Contributions;

(6) Gustavo Hernandez Abrego, Google Research and Equal Advising Contributions.

:::

Table of Links

Abstract and 1 Introduction

2 Method

3 Data and Tasks

4 Model

5 Experiments

6 Related Work

7 Conclusion

8 Acknowledgements and References

A Appendix

Abstract

Large language models (LLMs) are trained on text-only data that go far beyond the languages with paired speech and text data. At the same time, Dual Encoder (DE) based retrieval systems project queries and documents into the same embedding space and have demonstrated their success in retrieval and bi-text mining. To match speech and text in many languages, we propose using LLMs to initialize multi-modal DE retrieval systems. Unlike traditional methods, our system doesn’t require speech data during LLM pre-training and can exploit LLM’s multilingual text understanding capabilities to match speech and text in languages unseen during retrieval training. Our multi-modal LLMbased retrieval system is capable of matching speech and text in 102 languages despite only training on 21 languages. Our system outperforms previous systems trained explicitly on all 102 languages. We achieve a 10% absolute improvement in Recall@1 averaged across these languages. Additionally, our model demonstrates cross-lingual speech and text matching, which is further enhanced by readily available machine translation data.

1 Introduction

LLMs have demonstrated their effectiveness in modelling textual sequences to tackle various downstream tasks (Brown et al., 2020; Hoffmann et al., 2022; Chowdhery et al., 2023). This effectiveness has led to the development of powerful LLMs capable of modelling text in a wide range of languages. The abundance of textual data in different languages across the internet has fueled the progress of multi-lingual models (Johnson et al., 2017; Xue et al., 2020; Siddhant et al., 2022). On the other hand, speech technologies are prevalent in smartphones and personal assistants, but their language availability is relatively limited compared to the languages that LLMs support (Baevski et al., 2020; Radford et al., 2023).

Various efforts have explored solutions to the speech-text data scarcity problem (Duquenne et al., 2021; Ardila et al., 2019; Wang et al., 2020). Works such as SpeechMatrix (Duquenne et al., 2022) use separate speech and text encoders to mine semantically similar utterances that are neighbors in an embedding space. However, these approaches are limiting because they require speech and text encoders that have aligned representation spaces.

We posit that we can retrieve speech and text utterances by aligning both modalities within the embedding space built from a single pre-trained LLM. We take inspiration from previous works that use pre-trained LLMs to perform automatic speech recognition (ASR) and automatic speech translation (AST) (Rubenstein et al., 2023; Wang et al., 2023; Hassid et al., 2023). Our intuition is that we can perform the speech and text alignment leveraging the capabilities of text-only LLMs without requiring two separate models.

In this paper, we propose converting LLMs into speech and text DE retrieval systems without requiring speech pre-training and outperform previous methods with significantly less data. By discretizing speech into acoustic units (Hsu et al., 2021), we extend our LLMs embedding layer and treat the acoustic units as ordinary text tokens. Consequently, we transform our LLM into a retrieval system via a contrastive loss allowing us to match speech and text utterances in various languages. Our contributions are the following:

-

We build a speech-to-text symmetric DE from a pre-trained LLM. We show that our retrieval system is effective matching speech and text in 102 languages of FLEURS (Conneau et al., 2023 despite only training on 21 languages.

-

We show that our model exhibits cross-lingual speech and text matching without training on this type of data. At the same time, we find that cross-lingual speech and text matching is furthered improved by training on readily available machine translation data.

2 Method

We train a transformer-based DE model that encodes speech and text given a dataset D = {(xi , yi)}, where xi is a speech utterance and yi is its transcription. We denote the speech and text embeddings as xi = E(xi) and yi = E(yi), respectively, where E is a transformer-based DE that encodes speech and text.

2.1 Generating Audio Tokens

We convert raw speech into discrete tokens using the process in Lakhotia et al. (2021); Borsos et al. (2023). The process converts a speech query xi into an embedding using a pre-trained speech encoder. The output embedding is then discretized into a set of tokens using k-means clustering. We refer to the resulting tokens as audio tokens. We use the 2B variant of the Universal Speech Model (USM) encoder (Zhang et al., 2023) as the speech encoder and take the middle layer as the embedding for xi . Additionally, we generate audio tokens at 25Hz using k-means clustering, resulting in a set of 1024 possible audio tokens. We will refer to this as our audio token vocabulary.

2.2 Supporting Text and Audio Tokens

To support text and audio tokens in our LLM, we follow the formulation of Rubenstein et al. (2023). We extend the embedding layer of a transformer decoder by a tokens, where a represents the size of our audio token vocabulary. This modification leads to an embedding layer with size (t + a) × m, where t is the number of tokens in the text vocabulary and m is the dimensions of the embedding vectors. In our implementation, the first t tokens represent text and the remaining a tokens are reserved for audio. We initialize the embeddings layer from scratch when training our model.

3 Data and Tasks

Appendix A.3 details our training and evaluation datasets along with the number of languages in each dataset, the split we used, and the size of each dataset. We focus on the following retrieval tasks:

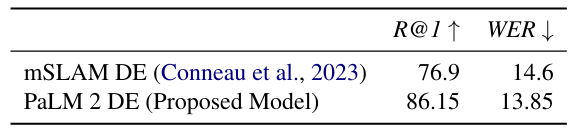

Speech-to-Text Retrieval (S2T) involves retrieving the corresponding transcription from a database given a speech sample. In S2T, we train on CoVoST-2 (Wang et al., 2021) speech utterances and their transcriptions. CoVoST-2 is a large multilingual speech corpus derived from Wikipedia expanding over 21 languages and provides translation to and from English. We use FLEURS (Conneau et al., 2023) to evaluate S2T performance on 102 languages. FLEURS is an n-way parallel dataset containing speech utterances from FLoRES-101 (Goyal et al., 2021) human translations. To evaluate S2T, we report recall at 1 (R@1) rates for retrieving the correct transcription for every speech sample and word error rate (WER).

Speech-to-Text Translation Retrieval (S2TT) attempts to retrieve the corresponding text translation of a speech sample. We use S2TT to measure the cross-lingual capabilities of our multi-modal DE retrieval system. We evaluate this capability zero-shot on X → En S2TT data of FLUERS and explore if we can further improve this capability by training on readily-available machine translation data from WikiMatrix (Schwenk et al., 2019). We pick French, German, Dutch, and Polish to English that are common across WikiMatrix and FLEURS and further discuss the amount of machine translation data used in Appendix A.3. For S2TT, we report 4-gram corpusBLEU (Post, 2018).

4 Model

Figure 1 shows an illustration of our model. We initialize our dual encoder from PaLM 2 XXS (Google et al., 2023) and append a linear projection layer after pooling the outputs along the sequence length dimension. The embedding and linear projection layers are initialized randomly. After initializing our model from PaLM 2, we use a contrastive loss (Hadsell et al., 2006). Appendix A.1 includes more details on our training setup. We will refer to our proposed model as PaLM 2 DE.

:::info

This paper is available on arxiv under CC BY 4.0 DEED license.

:::