Many data engineers still remember the early struggles of big data: fragile workflows and unpredictable crashes. Not long ago, running a basic filter on sales records often spiraled into a tangle of operations. A short script quickly grew, weighed down by custom retry logic and intricate dependencies. Engineers juggled failing tasks while fighting out-of-memory errors in the JVM, and nights went into troubleshooting what should have been straightforward jobs. The chaos felt unavoidable, almost routine: the hidden cost of processing massive datasets at scale. Now that is quietly changing, driven by a shift in how pipelines are designed. Declarative methods are gaining ground, simplifying work that once demanded constant oversight.

Recent advances in Apache Spark 4.1 signal a turning point, one where engineers spend less time fixing broken links and more time shaping insights. The past required stamina; the present is starting to reward foresight.

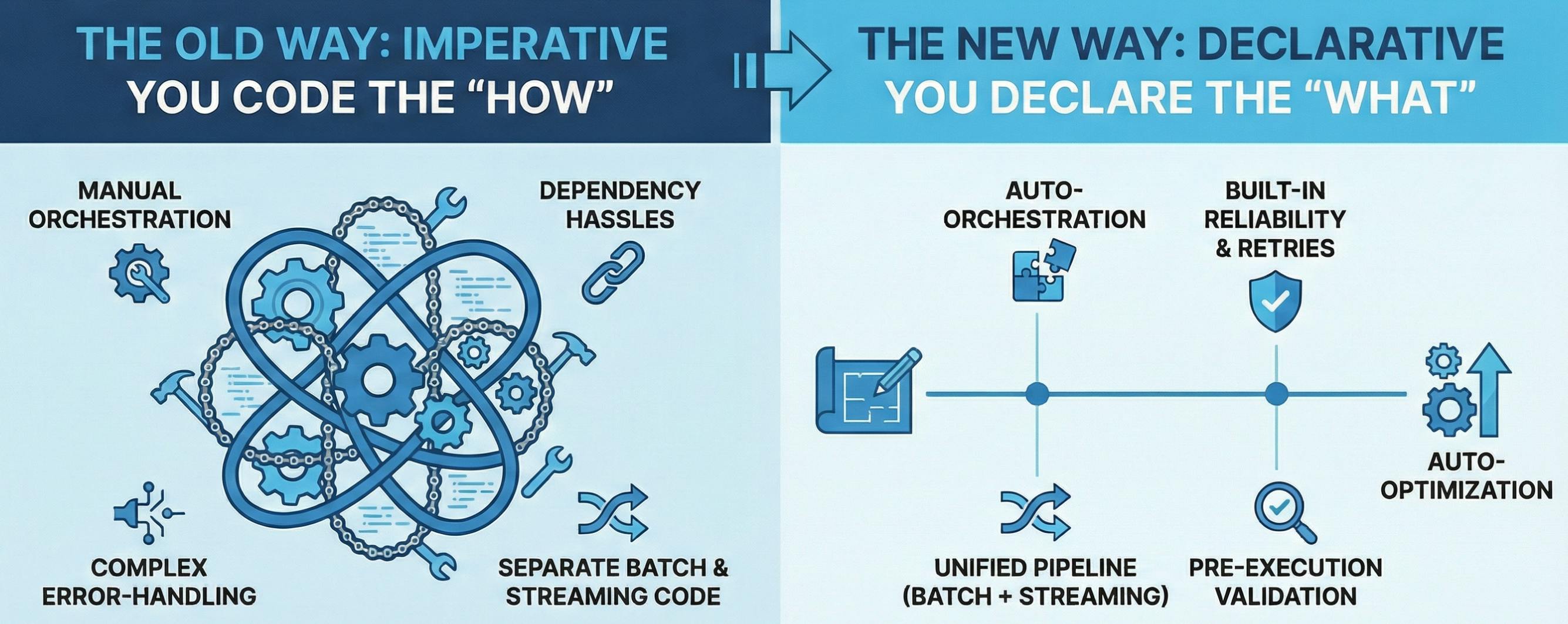

1. From “How” to “What”—The Declarative Mindset

Spark's evolution has steadily favored higher-level abstractions, and the 2025 Data+AI Summit marked a clear, sharp pivot in that direction. Under the SPIP tracked as SPARK-51727, the engine behind Delta Live Tables is now open source as Spark Declarative Pipelines (SDP). Instead of spelling out each step in order, users state which outcomes matter, and execution adapts behind the scenes. Where earlier styles demanded precision about process, this approach starts from intent.

“When I look at this code [RDDs] today, what used to seem simple is actually pretty complicated… One of the first things we did with Spark was try to abstract away some of these complexities for very common functions like doing aggregations, doing joins, doing filters.”

— Michael Armbrust, Databricks Distinguished Engineer

Spark's early abstractions reduced friction for basic operations such as aggregating, joining, and filtering datasets. SDP continues that impulse, removing boilerplate without sacrificing control: the desired outcome, not a hand-coded sequence of steps, drives the system. By defining the end state rather than the manual sequencing, we let the pipeline engine resolve dependencies between datasets and let Spark's Catalyst optimizer work out how each query executes in parallel. We are no longer writing glue code; we are designing data flows.
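To illustrate the shift from "how" to "what", here is a toy model in plain Python. It is not the SDP API: the `dataset` decorator, the `run_pipeline` function, and the sales records are hypothetical. Each dataset declares only its inputs and its logic; the execution order is derived from the dependency graph rather than written by hand.

```python
from graphlib import TopologicalSorter
from typing import Callable

Rows = list[dict]

# Hypothetical registry: dataset name -> (names of its inputs, compute function).
DATASETS: dict[str, tuple[list[str], Callable[..., Rows]]] = {}


def dataset(*inputs: str):
    """Declare a dataset and the datasets it reads from (toy stand-in, not SDP)."""
    def register(fn: Callable[..., Rows]) -> Callable[..., Rows]:
        DATASETS[fn.__name__] = (list(inputs), fn)
        return fn
    return register


# Declarations appear in an arbitrary order; nothing says what runs first.
@dataset("clean_sales")
def revenue_by_region(clean_sales: Rows) -> Rows:
    totals: dict[str, float] = {}
    for row in clean_sales:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return [{"region": r, "revenue": t} for r, t in sorted(totals.items())]


@dataset("raw_sales")
def clean_sales(raw_sales: Rows) -> Rows:
    return [row for row in raw_sales if row["amount"] > 0]


@dataset()
def raw_sales() -> Rows:
    return [
        {"region": "EU", "amount": 120.0},
        {"region": "US", "amount": -5.0},  # invalid row, filtered out by clean_sales
        {"region": "EU", "amount": 30.0},
        {"region": "US", "amount": 200.0},
    ]


def run_pipeline() -> dict[str, Rows]:
    """Derive the execution order from the declared dependencies, then run each dataset once."""
    graph = {name: set(inputs) for name, (inputs, _) in DATASETS.items()}
    results: dict[str, Rows] = {}
    for name in TopologicalSorter(graph).static_order():
        inputs, fn = DATASETS[name]
        results[name] = fn(*(results[i] for i in inputs))
    return results


if __name__ == "__main__":
    print(run_pipeline()["revenue_by_region"])
```

Reordering the three declarations does not change the result, because ordering comes from the graph, not from the file. A real engine applies the same principle at far larger scale and adds incremental execution, retries, and parallelism on top.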

2. Materialized Views—The End of “Stale Data” Anxiety

One of the most powerful tools in SDP is the materialized view (MV). Developers used to face a binary choice: streaming systems offered freshness at the cost of complexity, while batch jobs were simpler but delivered results late. Keeping data continuously fresh with real-time streams is often prohibitively expensive across very large datasets. MVs occupy the middle ground: not quite live, not quite static. Unlike a standard view, which recomputes on every query, an MV stores its results. Crucially, SDP can refresh these views incrementally, processing only what changed upstream.

The benefits of the MV data set type include:

- Automated Change Tracking: The system monitors upstream data changes without manual trigger logic.

- Cost Efficiency: By bypassing full recomputes and only processing new data, MVs provide high-performance access at a fraction of the compute cost.

- Simplicity for Complex Queries: They allow arbitrarily complex SQL to be materialized and updated according to a configurable cadence, eliminating the anxiety of manual table refreshes.
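The incremental-refresh idea can be sketched in plain Python. This is a hypothetical model, not how SDP maintains MVs internally: the `IncrementalSumView` class, its append-only source, and the row-offset bookkeeping are assumptions chosen to keep the example small. Each refresh folds in only the rows that arrived since the previous refresh, while queries read the stored result.

```python
from dataclasses import dataclass, field


@dataclass
class IncrementalSumView:
    """Toy MV for SUM(amount) GROUP BY region over an append-only source (hypothetical)."""
    totals: dict[str, float] = field(default_factory=dict)
    processed_upto: int = 0  # number of source rows already folded into totals
    rows_scanned: int = 0    # cumulative work done across all refreshes

    def refresh(self, source: list[dict]) -> None:
        # Change tracking in its simplest form: only rows past the last offset are new.
        new_rows = source[self.processed_upto:]
        for row in new_rows:
            self.totals[row["region"]] = self.totals.get(row["region"], 0.0) + row["amount"]
        self.rows_scanned += len(new_rows)
        self.processed_upto = len(source)

    def query(self, region: str) -> float:
        # Reads hit the stored result; nothing is recomputed at query time.
        return self.totals.get(region, 0.0)


def full_recompute(source: list[dict]) -> dict[str, float]:
    """What a plain view does on every query: rescan the whole source."""
    totals: dict[str, float] = {}
    for row in source:
        totals[row["region"]] = totals.get(row["region"], 0.0) + row["amount"]
    return totals


if __name__ == "__main__":
    sales = [{"region": "EU", "amount": 100.0}, {"region": "US", "amount": 50.0}]
    mv = IncrementalSumView()
    mv.refresh(sales)
    sales.append({"region": "EU", "amount": 25.0})
    mv.refresh(sales)  # scans only the single new row
    print(mv.query("EU"), mv.rows_scanned)
```

The assumption doing the heavy lifting is the append-only source. Real incremental maintenance must also cope with upstream updates and deletes, which is exactly why having the engine handle it is valuable.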

3. From 500 Lines down to 10 with Auto CDC

Building a Slowly Changing Dimension (SCD) Type 2 table for a "Gold layer" is a classic source of frustration. It typically means writing 300 to 500 lines of repetitive PySpark: complex merges, foreachBatch handlers, and hand-managed expiry of superseded records.

The create_auto_cdc_flow API, currently available only on Databricks rather than in open-source SDP, changes who can do this work. A task once reserved for specialists now takes about ten lines of configuration. The key is the sequence_by parameter: when it points to a column such as operation_date, the engine orders out-of-sequence change events correctly and closes out superseded record versions on its own.

With this setup, anyone comfortable with basic SQL can build pipelines that used to require specialists. Declaring the key columns and the ordering column is enough for the engine to keep the target table consistent with its source. Late-arriving data does not compromise accuracy; the history corrects itself without manual intervention.
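What sequence_by guarantees can be shown with a small model. The sketch below is not the Databricks create_auto_cdc_flow implementation; the `apply_scd2` function and the `valid_from`/`valid_to` column names are hypothetical, and deletes are ignored. It groups change events by key, orders each key's events by the sequence column regardless of arrival order, and closes each superseded version when a newer one starts.

```python
from collections import defaultdict


def apply_scd2(events: list[dict], keys: list[str], sequence_by: str,
               tracked: list[str]) -> list[dict]:
    """Rebuild SCD Type 2 history from change events that may arrive out of order.

    Hypothetical model; valid_from/valid_to are illustrative column names.
    """
    by_key: dict[tuple, list[dict]] = defaultdict(list)
    for event in events:
        by_key[tuple(event[k] for k in keys)].append(event)

    history: list[dict] = []
    for key, key_events in by_key.items():
        # Arrival order is ignored: the sequence column alone decides ordering.
        ordered = sorted(key_events, key=lambda e: e[sequence_by])
        versions: list[dict] = []
        for event in ordered:
            values = {c: event[c] for c in tracked}
            if versions and all(versions[-1][c] == values[c] for c in tracked):
                continue  # tracked columns unchanged: the current version stays open
            if versions:
                versions[-1]["valid_to"] = event[sequence_by]  # expire the superseded version
            versions.append({**dict(zip(keys, key)), **values,
                             "valid_from": event[sequence_by], "valid_to": None})
        history.extend(versions)
    return history


if __name__ == "__main__":
    events = [
        {"customer_id": 1, "city": "Paris", "operation_date": 3},
        {"customer_id": 1, "city": "Lyon", "operation_date": 1},  # arrives late
        {"customer_id": 2, "city": "Oslo", "operation_date": 2},
    ]
    for row in apply_scd2(events, keys=["customer_id"],
                          sequence_by="operation_date", tracked=["city"]):
        print(row)
```

Because each key's history is rebuilt from all of its events, a late arrival lands in the right place on the next run. A production engine would aim to reach the same result incrementally instead of reprocessing every event.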

4. Data Quality is No Longer an Afterthought (Expectations)

In the early days of big data, poor data quality usually surfaced late, often after a job had already run for hours. SDP brings validation forward with a feature called Expectations, which places data-quality rules directly inside the pipeline definition. What changes everything is catching problems early, ideally before execution begins.

At the planning stage, Spark analyzes the full dependency graph before anything runs, so a mismatch such as a query referencing customeridentifier when the upstream column is customerid is caught immediately instead of after hours of wasted compute. At run time, each expectation specifies what happens to records that violate it. Warn flags bad records but lets processing continue, which helps when tracking non-critical metadata or spotting anomalies. Drop discards failing records so downstream layers stay clean while the flow keeps moving, which suits reliable intermediate datasets. Fail stops the pipeline outright when rules around financial data or access control are broken, enforcing hard boundaries where they are needed.
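The value of checking the whole graph before launch can be shown with a stripped-down check. In SDP this work is done by Spark's analyzer; the `validate_plan` function, the `AnalysisError` class, and the schema dictionaries below are hypothetical stand-ins. Every column a downstream dataset references is resolved against its upstream schema, and all problems are reported before any data is read.

```python
import difflib


class AnalysisError(Exception):
    """Raised before execution when a declared query cannot be resolved."""


def validate_plan(schemas: dict[str, list[str]],
                  queries: dict[str, tuple[str, list[str]]]) -> None:
    """Resolve every referenced column up front (hypothetical model of plan-time analysis).

    schemas: upstream dataset name -> its column names
    queries: downstream dataset name -> (upstream dataset, columns it references)
    """
    problems: list[str] = []
    for target, (source, columns) in queries.items():
        if source not in schemas:
            problems.append(f"{target}: unknown input dataset '{source}'")
            continue
        available = schemas[source]
        for col in columns:
            if col in available:
                continue
            # Suggest the closest existing column name to speed up the fix.
            close = difflib.get_close_matches(col, available, n=1)
            hint = f" (did you mean '{close[0]}'?)" if close else ""
            problems.append(f"{target}: column '{col}' not found in {source}{hint}")
    if problems:
        raise AnalysisError("; ".join(problems))


if __name__ == "__main__":
    schemas = {"bronze_orders": ["customerid", "amount", "order_ts"]}
    queries = {"silver_orders": ("bronze_orders", ["customeridentifier", "amount"])}
    try:
        validate_plan(schemas, queries)
    except AnalysisError as err:
        print(f"Rejected before execution: {err}")
```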

| Action | Impact on Pipeline | Use Case |
|---|---|---|
| Warn | Records are flagged; processing continues | Tracking non-critical metadata or logging anomalies. |
| Drop | Failed records are discarded; rest proceed | Maintaining a clean Silver layer without halting the flow. |
| Fail | Entire pipeline stops immediately | Ensuring critical financial or security constraints are met. |
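The three actions in the table can be modeled in a few lines. This is a hypothetical sketch of the semantics, not the SDP expectations API: `Expectation`, `Action`, `ExpectationFailed`, and `apply_expectations` are invented names, and a real engine would report violation counts through its own monitoring rather than a returned dictionary.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable


class Action(Enum):
    WARN = "warn"  # keep the row, count the violation
    DROP = "drop"  # discard the row, keep the pipeline running
    FAIL = "fail"  # stop processing at the first violation


class ExpectationFailed(Exception):
    """Raised when a FAIL expectation is violated."""


@dataclass(frozen=True)
class Expectation:
    name: str
    predicate: Callable[[dict], bool]  # True means the row satisfies the rule
    action: Action


def apply_expectations(rows: list[dict],
                       expectations: list[Expectation]) -> tuple[list[dict], dict[str, int]]:
    """Return the rows that survive plus a violation count per expectation."""
    kept: list[dict] = []
    violations = {exp.name: 0 for exp in expectations}
    for row in rows:
        keep = True
        for exp in expectations:
            if exp.predicate(row):
                continue
            violations[exp.name] += 1
            if exp.action is Action.FAIL:
                raise ExpectationFailed(f"expectation '{exp.name}' violated by {row!r}")
            if exp.action is Action.DROP:
                keep = False
            # Action.WARN: the row stays; only the counter records the problem.
        if keep:
            kept.append(row)
    return kept, violations


if __name__ == "__main__":
    rows = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": -3.0}]
    checks = [Expectation("positive_amount", lambda r: r["amount"] > 0, Action.DROP)]
    print(apply_expectations(rows, checks))
```

Attaching several expectations with different actions to one dataset mirrors the table: a missing country might only warn, a negative amount gets dropped, and a null primary key halts the run.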

5. Spark as the “GPU for Airflow”

A common assumption is that SDP replaces orchestrators such as Airflow. It is better understood as the GPU to Airflow's CPU. General-purpose orchestrators are heavyweight: they tend to launch tasks slowly, make local testing clumsy, and have no awareness of individual SQL columns. Airflow excels at coarse sequencing, such as running a Spark job and then sending a Slack notification, but it cannot track fine-grained dependencies between specific data fields. Because SDP runs inside Spark's own query engine, it manages internal parallelism, tracks dataset state, and retries failures, all of which are invisible to workflow engines built for coarser control.
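Column-level awareness is what separates the engine's view from a task-level orchestrator's. The toy model below uses a hand-written lineage map (hypothetical; an engine would derive it from analyzed query plans) and walks it to find which downstream columns a single upstream change actually affects. A task-level DAG only knows that one job follows another, so it cannot narrow the impact this way.

```python
from collections import deque

Column = tuple[str, str]  # (dataset, column)

# Hypothetical lineage map: each downstream column -> the upstream columns it is computed from.
LINEAGE: dict[Column, set[Column]] = {
    ("silver_orders", "customer_id"): {("bronze_orders", "customerid")},
    ("silver_orders", "amount_eur"): {("bronze_orders", "amount"), ("fx_rates", "eur_rate")},
    ("gold_revenue", "revenue_eur"): {("silver_orders", "amount_eur")},
    ("gold_revenue", "customer_id"): {("silver_orders", "customer_id")},
}


def impacted_columns(changed: Column) -> set[Column]:
    """Return every downstream column that depends, directly or transitively, on `changed`."""
    # Invert the map so the walk goes from sources toward their consumers.
    consumers: dict[Column, set[Column]] = {}
    for target, sources in LINEAGE.items():
        for src in sources:
            consumers.setdefault(src, set()).add(target)
    seen: set[Column] = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for nxt in consumers.get(node, set()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


if __name__ == "__main__":
    # Only the revenue path depends on the exchange rate; customer columns are untouched.
    print(sorted(impacted_columns(("fx_rates", "eur_rate"))))
```

A task-level orchestrator would typically rerun every job downstream of the changed table; column lineage narrows the blast radius to the paths that actually read the changed field.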

The Future of the Data Architect

Moving beyond hand-built pipelines is not merely a change of tools; it reshapes how the work gets done. Instead of patching broken flows step by step, engineers focus on designing outcomes. With Spark 4.1's open declarative framework and incremental change tracking in the Lakehouse, effort goes where it matters, while heavy routine work fades into the background.