I intend this article as a thought-provoking piece on a topic that has intrigued me for quite some time.

In early 2024, the BitNet b1.58 paper was published, and I wrote a short article about it. The core idea behind this architecture is that highly efficient large language models (LLMs) can be built using only three possible weight values: -1, 0, and 1. (The name comes from the fact that log2(3) ≈ 1.58, the number of bits needed to encode three values.) This is particularly exciting because such a network does not require multiplications, only conditional additions and subtractions. Architectures like this can run efficiently without GPUs, or on ASICs built for them, which can execute them much more efficiently than GPUs.
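
To see why no multiplications are needed: multiplying an input by 1, 0, or -1 is just a copy, a skip, or a sign flip. The minimal sketch below computes a matrix-vector product with ternary weights using only additions and subtractions. The function names are my own for illustration; real BitNet kernels are far more optimized than this.

```python
from typing import Sequence


def ternary_dot(weights: Sequence[int], activations: Sequence[float]) -> float:
    """Dot product for weights restricted to {-1, 0, 1}, using no multiplications."""
    total = 0.0
    for w, x in zip(weights, activations):
        if w == 1:
            total += x  # +1: add the activation
        elif w == -1:
            total -= x  # -1: subtract the activation
        elif w != 0:
            raise ValueError(f"weight {w} is not ternary")
        # 0: the activation is skipped entirely
    return total


def ternary_matvec(matrix: Sequence[Sequence[int]], activations: Sequence[float]) -> list[float]:
    """Apply a ternary weight matrix (one row per output) to an activation vector."""
    return [ternary_dot(row, activations) for row in matrix]


if __name__ == "__main__":
    W = [[1, 0, -1], [-1, 1, 1]]
    x = [0.5, 2.0, 1.5]
    print(ternary_matvec(W, x))  # [-1.0, 3.0]
```

In hardware, the `if` branches become a multiplexer selecting between `+x`, `-x`, and nothing, which is much cheaper than a floating-point multiplier.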

The only problem with this approach is that such a network cannot be trained directly with gradient descent, because rounding weights to ternary values has no useful derivative. Training still relies on floating-point numbers: the network keeps full-precision weights during training, these are quantized to ternary values, and only the quantized weights are used for inference. This made me wonder for the first time: could there be a point where gradient-free methods make training more efficient than gradient descent?
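
As I understand the BitNet b1.58 paper, the quantization step is an "absmean" scheme: divide the weights by their mean absolute value, round to the nearest integer, and clip to [-1, 1]. The sketch below shows that mapping for a flat list of weights. The `eps` default is my assumption, and note that Python's `round` uses round-half-to-even, which only matters for values exactly halfway between integers.

```python
def absmean_quantize(weights: list[float], eps: float = 1e-5) -> tuple[list[int], float]:
    """Map full-precision weights to {-1, 0, 1} using the mean absolute value as the scale.

    Returns the ternary weights and the scale gamma; gamma * ternary approximates the input.
    """
    gamma = sum(abs(w) for w in weights) / len(weights)
    # Scale, round to the nearest integer, then clip into [-1, 1].
    ternary = [max(-1, min(1, round(w / (gamma + eps)))) for w in weights]
    return ternary, gamma


if __name__ == "__main__":
    full_precision = [0.9, -0.05, -1.3, 0.4]
    print(absmean_quantize(full_precision))  # ternary part: [1, 0, -1, 1]
```

During training, the gradient updates are applied to the full-precision copy, and this mapping is reapplied to obtain the ternary weights; that full-precision copy is exactly the floating-point dependency described above.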

But even if we set aside these extremely quantized networks for a moment, there is still a noticeable trend: the gap between the networks used for training and those used for inference keeps growing. Training an LLM costs several million dollars at minimum. DeepSeek-V3 currently holds the “budget-friendly” record at roughly $6 million. Models like this require massive GPU clusters for both training and serving.

However, through distillation and quantization, much smaller models can be derived from these large ones. These smaller models perform almost as well but can run locally. Recently, Exolabs showcased a demo in which they ran an LLM on an old Windows 98 computer. LLMs seem to be becoming the new Doom: developers are now competing to see who can run an LLM on the lowest-performance devices.

Of course, Exolabs’ LLM is nowhere near the level of DeepSeek, but the trend is clear. On the training side, parameter counts keep increasing, ever-larger clusters are used, and ever-greater sums of money are burned to “breed” increasingly advanced LLMs. From these models, smaller ones are then derived through distillation and quantization, offering comparable performance at a fraction of the computational cost.

The gap between training and inference continues to grow steadily.

This raises an obvious question: couldn’t we produce the small model directly, instead of training a large one for millions of dollars and then shrinking it? The root of the problem lies in gradient descent, which does not work nearly as well when applied directly to these small models.

Gradient descent is the method without which today’s deep neural networks would not exist. In essence, it computes the derivative of a loss function with respect to each parameter and nudges every parameter in the direction that reduces the loss, so that the network’s behavior moves closer to what we expect. I won’t go into more detail here, as I’ve written a full article on the topic for those interested in a deeper dive.
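
For readers who have not seen it in code, here is a minimal sketch of gradient descent on the smallest possible "network": a single weight `w` in the model `y = w * x`, trained with mean squared error. The data, learning rate, and step count are arbitrary choices for illustration.

```python
def mse_loss(w: float, xs: list[float], ys: list[float]) -> float:
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def mse_grad(w: float, xs: list[float], ys: list[float]) -> float:
    """Derivative of mse_loss with respect to w: the mean of 2 * (w * x - y) * x."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def gradient_descent(xs: list[float], ys: list[float], w: float = 0.0,
                     lr: float = 0.05, steps: int = 200) -> float:
    """Repeatedly move w a small step against the gradient of the loss."""
    for _ in range(steps):
        w -= lr * mse_grad(w, xs, ys)
    return w


if __name__ == "__main__":
    xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]  # toy data generated by y = 2x
    w = gradient_descent(xs, ys)
    print(w, mse_loss(w, xs, ys))  # w ends up very close to 2.0
```

The derivative tells the optimizer not just whether a change helps, but in which direction and roughly how much to move; that is the information gradient-free methods have to do without.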

Gradient descent is so fundamental that machine learning libraries (e.g., JAX) differ from general numerical libraries (e.g., NumPy) primarily in their ability to compute derivatives of arbitrary operations automatically, which is a critical requirement for gradient descent. It is the cornerstone of these systems.
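
What "computing derivatives automatically" means can be illustrated with dual numbers, the simplest form of forward-mode automatic differentiation. This is only a toy: JAX (for example `jax.grad`) uses much more general machinery, including reverse-mode differentiation, and the `Dual` class and `derivative` helper below are my own illustrative names. Each operation carries its derivative along with its value, applying the sum, product, and chain rules as it goes.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Dual:
    """A value paired with its derivative: represents val + dot * eps, where eps**2 == 0."""
    val: float
    dot: float = 0.0

    @staticmethod
    def lift(x: "Dual | float") -> "Dual":
        # Plain numbers are constants, so their derivative is 0.
        return x if isinstance(x, Dual) else Dual(float(x))

    def __add__(self, other: "Dual | float") -> "Dual":
        other = Dual.lift(other)
        return Dual(self.val + other.val, self.dot + other.dot)  # sum rule

    def __mul__(self, other: "Dual | float") -> "Dual":
        other = Dual.lift(other)
        # Product rule: (u * v)' = u' * v + u * v'
        return Dual(self.val * other.val, self.dot * other.val + self.val * other.dot)

    __radd__ = __add__
    __rmul__ = __mul__


def sin(x: Dual) -> Dual:
    # Chain rule: (sin u)' = cos(u) * u'
    return Dual(math.sin(x.val), math.cos(x.val) * x.dot)


def derivative(f, x: float) -> float:
    """Evaluate f at x with the seed dot = 1; the result's dot is f'(x)."""
    return f(Dual(x, 1.0)).dot


if __name__ == "__main__":
    f = lambda x: 3 * x * x + sin(x)  # analytically, f'(x) = 6x + cos(x)
    print(derivative(f, 2.0))  # equals 12 + cos(2)
```

A plain numerical library would only return `f(2.0)`; the extra bookkeeping on every operation is what a machine learning library adds.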

There are, however, gradient-free methods as well, such as genetic algorithms or even simple random search. While they are typically much less efficient than gradient descent, they have their advantages. The most obvious one is that they can be used where derivatives cannot be computed, for example in the 1.58-bit neural networks mentioned earlier.
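
A minimal sketch of the idea, assuming a toy ternary linear model rather than a real network: a random local search (a simple hill climber that mutates one weight and keeps the change if the loss does not get worse). This is one of the simplest gradient-free methods, not the specific method BitNet or anyone else uses. The key point is that the search only ever *evaluates* the loss; it never differentiates it, so the weights can stay ternary throughout.

```python
import random

TERNARY = (-1, 0, 1)


def predict(weights: list[int], x: list[float]) -> float:
    """Ternary linear model: add, subtract or skip each input (no multiplications)."""
    return sum(xi if w == 1 else -xi if w == -1 else 0.0 for w, xi in zip(weights, x))


def loss(weights: list[int], xs: list[list[float]], ys: list[float]) -> float:
    """Mean squared error. The search only evaluates this; it never differentiates it."""
    return sum((predict(weights, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def random_local_search(xs, ys, n_weights: int, steps: int = 2000, seed: int = 0):
    rng = random.Random(seed)
    weights = [rng.choice(TERNARY) for _ in range(n_weights)]
    best = loss(weights, xs, ys)
    for _ in range(steps):
        candidate = weights.copy()
        i = rng.randrange(n_weights)
        # Mutate one weight to one of the other two ternary values.
        candidate[i] = rng.choice([v for v in TERNARY if v != weights[i]])
        score = loss(candidate, xs, ys)
        if score <= best:  # keep the candidate only if it is not worse
            weights, best = candidate, score
    return weights, best


if __name__ == "__main__":
    rng = random.Random(1)
    hidden = [1, -1, 0, 1, -1, 0]  # weights the search is supposed to rediscover
    xs = [[rng.gauss(0.0, 1.0) for _ in hidden] for _ in range(50)]
    ys = [predict(hidden, x) for x in xs]
    print(random_local_search(xs, ys, n_weights=len(hidden)))
```

Each step costs one forward pass, which is why the cost of a single forward pass dominates the economics of these methods.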

Without gradients, finding good weights for a network becomes significantly harder, and gradient-free methods might need 100x or even 1,000x more steps. However, if each individual step is much faster, training could still end up more efficient overall.

Imagine if we repurposed the transistors in a GPU cluster and, instead of GPUs, built ASICs designed specifically to run 1.58-bit networks efficiently. In the time a GPU cluster needs for a single training step on a large network, an ASIC cluster could execute 10,000 training steps simultaneously on a much smaller network.

Even if a gradient-free method required 1,000x more steps, training could still be 10x faster overall. Such a system would also likely scale better and consume less energy.
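
The arithmetic behind this estimate, under a deliberately simple model (my assumption; it ignores communication and other overheads): gradient descent needs $N$ steps of time $t$ each, while the gradient-free method needs $kN$ steps but completes them $s$ times faster thanks to cheaper, massively parallel hardware.

$$
\text{speedup} = \frac{N \cdot t}{kN \cdot t / s} = \frac{s}{k} = \frac{10{,}000}{1{,}000} = 10
$$

The approach only pays off while $s > k$: the hardware advantage has to grow faster than the number of extra steps the search needs.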

Beyond that, 1.58-bit networks could open up an entirely new world of possibilities. For instance, instead of ASICs, we could imagine highly specialized analog or even optical (light-based) circuits that might be 100x or even 1,000x more efficient than ASICs.

A gradient-free method might take 1,000x more steps to find the optimal weights, but if each step can be executed 10,000x faster, the training process would still be 10x faster overall and likely more energy-efficient as well.

Another very exciting area is decentralization. For the reasons mentioned earlier, training large neural networks is increasingly becoming the privilege of major corporations, as it requires massive GPU clusters and millions of dollars. However, the small models derived from these networks can run efficiently on desktops or even mobile devices.

The latest processors increasingly come with dedicated neural processing units (NPUs) designed for running neural networks, which makes this even more feasible.

If we use gradient-free methods for training, any device capable of running the neural network can take part in training, since these methods only need to run the network, not compute its gradients.

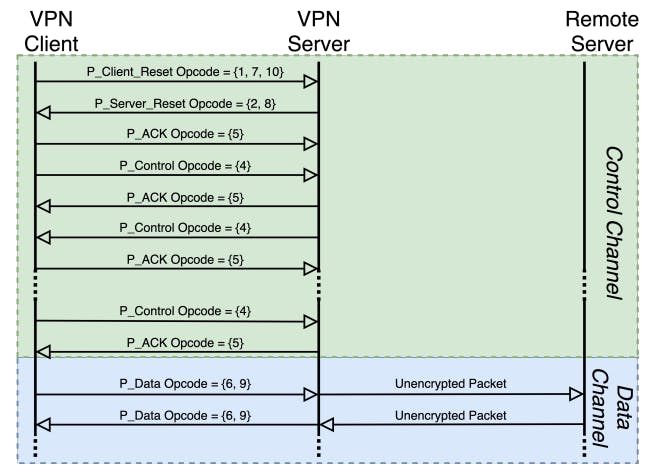

In gradient-free methods such as evolutionary algorithms, the procedure is straightforward: randomly alter a few parameters, then simply run the network and measure how well it performs. After several attempts, the better-performing parameter sets are kept, the poorer ones are discarded, and the process repeats. Periodically, the best candidates can be exchanged between devices, allowing massive clusters to be built from something as simple as mobile phones.
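
This setup is known as an island model: each device evolves its own population and periodically shares its best candidate. The sketch below simulates several "devices" in one process. Everything in it is illustrative: `TARGET` and the `fitness` function are hypothetical stand-ins for running a real network on a real task, and the population sizes and ring-shaped exchange are arbitrary choices.

```python
import random

TARGET = [1, -1, 0, 1, 0, -1, 1, 1]  # hypothetical stand-in for "good" ternary weights


def fitness(weights: list[int]) -> int:
    """Toy substitute for running the network on a task; higher is better, 0 is perfect."""
    return -sum((w - t) ** 2 for w, t in zip(weights, TARGET))


def mutate(weights: list[int], rng: random.Random, n_changes: int = 2) -> list[int]:
    """Randomly re-draw a few parameters."""
    child = weights.copy()
    for i in rng.sample(range(len(child)), n_changes):
        child[i] = rng.choice((-1, 0, 1))
    return child


def evolve_on_device(population, rng, generations):
    """Work done locally on one device: mutate, keep the better half, discard the rest."""
    for _ in range(generations):
        children = [mutate(p, rng) for p in population]
        ranked = sorted(population + children, key=fitness, reverse=True)
        population = ranked[: len(population)]
    return population


def island_model(n_devices=4, pop_size=6, rounds=10, generations=5, seed=0):
    rng = random.Random(seed)
    islands = [[[rng.choice((-1, 0, 1)) for _ in TARGET] for _ in range(pop_size)]
               for _ in range(n_devices)]
    for _ in range(rounds):
        islands = [evolve_on_device(pop, rng, generations) for pop in islands]
        # Periodic exchange: every device replaces its worst candidate
        # with a copy of its neighbour's best one (a ring topology).
        bests = [pop[0] for pop in islands]
        for d, pop in enumerate(islands):
            pop[-1] = bests[(d - 1) % n_devices].copy()
    return max((p for pop in islands for p in pop), key=fitness)


if __name__ == "__main__":
    best = island_model()
    print(best, fitness(best))
```

Only the small exchange step needs the network; the expensive part, evaluating many candidates, stays local to each device.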

It would be possible to build a system similar to Bitcoin, where participants “mine” neural networks. In Bitcoin, mining means that participants’ computers make random attempts to find a block hash that falls below a difficulty target. Specialized ASICs exist for this purpose that do nothing but compute hashes very quickly. The first participant to find a valid hash earns the reward.
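
A toy version of this proof-of-work loop is sketched below. It is a simplification: real Bitcoin mining applies SHA-256 twice to an 80-byte block header and compares the result numerically against a target, whereas here "difficulty" just means a number of leading hexadecimal zeros. The property that matters for the analogy survives: finding a solution takes many random attempts, while checking one takes a single hash.

```python
import hashlib


def block_hash(block_data: str, nonce: int) -> str:
    return hashlib.sha256(f"{block_data}:{nonce}".encode()).hexdigest()


def mine(block_data: str, difficulty: int, max_nonce: int = 10_000_000):
    """Try nonces until the hash starts with `difficulty` hex zeros (a toy difficulty target)."""
    prefix = "0" * difficulty
    for nonce in range(max_nonce):
        digest = block_hash(block_data, nonce)
        if digest.startswith(prefix):
            return nonce, digest
    return None  # no solution within max_nonce attempts


def verify(block_data: str, nonce: int, difficulty: int) -> bool:
    """Checking a claimed solution costs a single hash, however long the search took."""
    return block_hash(block_data, nonce).startswith("0" * difficulty)


if __name__ == "__main__":
    result = mine("toy block", difficulty=4)
    if result is not None:
        nonce, digest = result
        print(nonce, digest, verify("toy block", nonce, difficulty=4))
```

Each extra hex zero makes the search about 16 times longer on average, while verification stays constant.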

A decentralized system for “mining” intelligence would look very similar, but instead of computing hashes, the ASIC would run a neural network extremely quickly. GPUs could also be used, but for something like 1.58-bit networks, an ASIC might well be more efficient. Miners would make random attempts to find more effective parameters, and those who succeed could submit their results. If the submitted network is verified to perform better, the miner earns a reward, and a new round begins with the goal of finding an even better-performing network.
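
One way the acceptance rule could look is sketched below; this is a hypothetical design, not an existing protocol. The `Ledger` class, the `evaluate` benchmark (accuracy of a ternary linear classifier), and the `margin` parameter are all my own illustrative assumptions. The main difference from Bitcoin shows up in `submit`: a claimed improvement cannot be checked with one hash, so the coordinator has to re-run the network on an evaluation set itself.

```python
import random
from dataclasses import dataclass, field


def evaluate(weights: list[int], eval_set) -> float:
    """Hypothetical benchmark: accuracy of a ternary linear classifier on (inputs, label) pairs."""
    correct = 0
    for x, label in eval_set:
        score = sum(xi if w == 1 else -xi if w == -1 else 0.0 for w, xi in zip(weights, x))
        correct += (score > 0) == label
    return correct / len(eval_set)


@dataclass
class Ledger:
    """Hypothetical coordinator state: current best network and rewards per miner."""
    best_weights: list[int]
    best_score: float
    rewards: dict[str, int] = field(default_factory=dict)

    def submit(self, miner: str, weights: list[int], eval_set, margin: float = 0.0) -> bool:
        # Unlike a hash, a claimed improvement has to be checked by re-running the network.
        score = evaluate(weights, eval_set)
        if score > self.best_score + margin:
            self.best_weights, self.best_score = list(weights), score
            self.rewards[miner] = self.rewards.get(miner, 0) + 1
            return True  # accepted: the next round starts from these weights
        return False


def mining_round(ledger: Ledger, miners: list[str], eval_set, attempts: int = 100, seed: int = 0) -> None:
    """Each attempt: a random miner perturbs the current best weights and submits them."""
    rng = random.Random(seed)
    for _ in range(attempts):
        candidate = ledger.best_weights.copy()
        candidate[rng.randrange(len(candidate))] = rng.choice((-1, 0, 1))
        ledger.submit(rng.choice(miners), candidate, eval_set)
```

The `margin` parameter is one possible way to avoid paying out rewards for improvements too small to matter.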

This seems like a far more meaningful way to utilize energy compared to Bitcoin mining.

It’s hard to say how effective gradient-free methods could be. It’s also possible that the gradient-free direction is simply a dead end. However, I am certain that the answer is far from trivial. This field deserves deeper research, as the potential rewards could be significant: if we could genuinely train models more efficiently this way, it could lead to new and better LLMs.

It’s also possible that training a network from scratch with gradient-free methods turns out to be ineffective, but even then, gradient-free fine-tuning could still have potential. Moreover, the decentralization of training, and with it the democratization of AI, is a promise compelling enough to make this area worth exploring further.

I started a discussion in a Reddit post. If you’re interested in the topic and would like to join the conversation, you can do so here. I’d greatly appreciate any opinions or comments!