Table of Links

Abstract and 1 Introduction

2 Related Work

3 Methodology

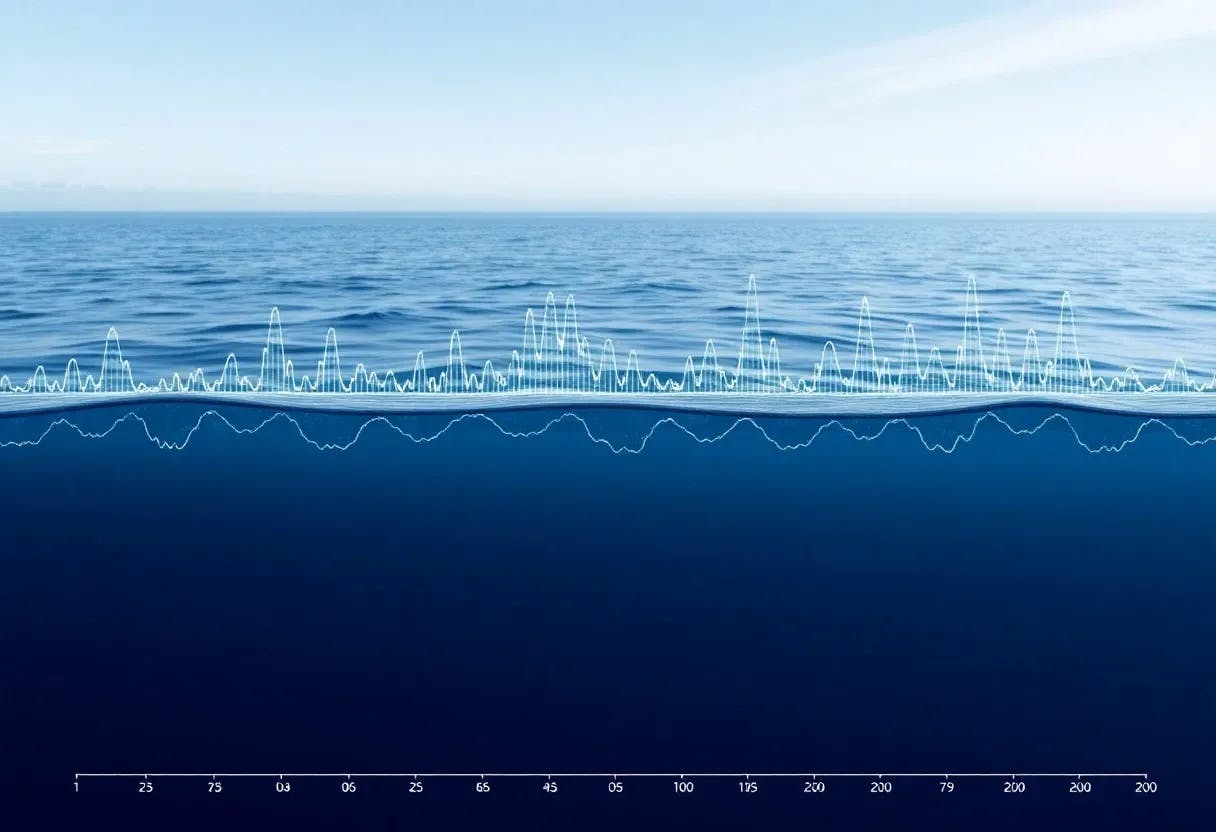

4 Studying Deep Ocean Ecosystem and 4.1 Deep Ocean Research Goals

4.2 Workflow and Data

4.3 Design Challenges and User Tasks

5 The DeepSee System

5.1 Map View

5.2 Core View

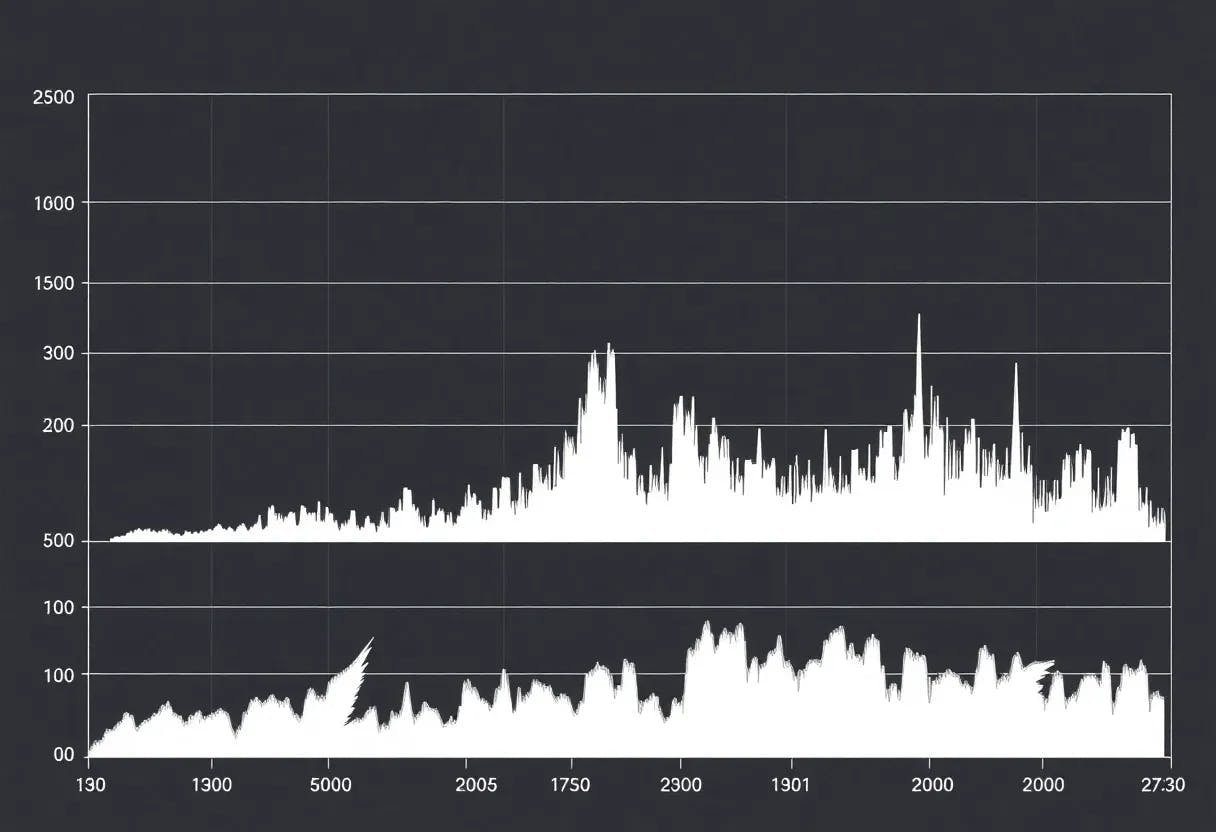

5.3 Interpolation View and 5.4 Implementation

6 Usage Scenarios and 6.1 Scenario: Pre-Cruise Planning

6.2 Scenario: On-the-Fly Decision-Making

7 Evaluation and 7.1 Cruise Deployment

7.2 Expert Interviews

7.3 Limitations

7.4 Lessons Learned

8 Conclusions and Future Work, Acknowledgments, and References

3 METHODOLOGY

In this paper, we followed Sedlmair et al.’s methodological framework for design studies [43]. Each phase of the study yielded a contribution: (1) a characterization of the problem domain through design challenges and user tasks; (2) a novel, validated visualization design; and (3) reflections on the process and lessons learned that can refine visualization and interaction design guidelines. We connect these phases throughout the paper by showing how the challenges and tasks we synthesized informed DeepSee’s design, and how our evaluation reflects DeepSee’s success in addressing them. To the best of our knowledge, the problem we describe in Sect. 4 is specific to our collaborators and has not yet been addressed by other researchers. We therefore posit that a design study with active participation from our collaborators in both the design and evaluation phases is necessary to support these domain experts in their highly specialized tasks.

We began by establishing a close collaboration between visualization researchers and domain experts so that we could properly assess the impact of our approach on a real-world problem. In line with prior design studies [15, 18, 33], our team comprised all authors and included visualization experts in human-centered computing, interaction design, scientific data visualization and art, as well as scientists and researchers with expertise in environmental microbiology, geochemistry, and geology. We developed DeepSee using several user-centered design methods, including contextual inquiry, mixed-fidelity prototyping, user testing, and design iteration. Over an intensive ten-week period, we worked closely with the science team to develop a shared understanding of the importance and aims of their research (Sect. 4.1), characterize their data and workflows (Sect. 4.2), and identify the data visualization challenges and user tasks (Sect. 4.3) that would guide our system design.

Our process started with contextual inquiry, combining observation and interviews with our domain collaborators to deeply understand their existing tools and workflows, discuss unmet needs and pain points, and identify key technical constraints. After two weeks, we began mixed-fidelity prototyping, starting with paper prototypes, wireframes, and small interactive prototypes in code before moving on to progressively higher-fidelity prototypes. Over the next seven weeks, we iteratively discussed these prototypes with the science team through semi-structured user testing. Using the prototypes as stimuli, we deepened our understanding of the problem space through ongoing contextual inquiry. During this time, we also created systems diagrams and conceptual workflows to validate our understanding of the problem space together with the science team. Through this approach, we progressively uncovered insights that guided each subsequent design iteration, culminating in the working prototype described in Sect. 5, which addresses our collaborators’ tasks.

DeepSee was then deployed in the wild [43] to assess whether our approach solves a real problem: bridging gaps in support for visual analysis of samples during field research. Deploying DeepSee with the same experts we designed it with allowed us to assess whether domain experts are indeed helped by the new solution, and to reflect on and refine principles for visualization and interaction design guidelines [43]. Specifically, we investigated the impact of introducing data visualizations into both planning before fieldwork and tactical decision-making in the field. We learned that visualizing data at multiple scales, integrating 2D and 3D data in the same interface, and modularizing visualizations had the greatest positive impact on scientists’ planning workflows, and that additional support for integrating data on the fly could better enable in situ decision-making. Methodological details of our evaluation, and of how we synthesized these lessons learned, are provided in Sect. 7.

Authors:

(1) Adam Coscia, Georgia Institute of Technology, Atlanta, Georgia, USA ([email protected]);

(2) Haley M. Sapers, Division of Geological and Planetary Sciences, California Institute of Technology, Pasadena, California, USA ([email protected]);

(3) Noah Deutsch, Harvard University, Cambridge, Massachusetts, USA ([email protected]);

(4) Malika Khurana, The New York Times Company, New York, New York, USA ([email protected]);

(5) John S. Magyar, Division of Geological and Planetary Sciences, California Institute of Technology, Pasadena, California, USA ([email protected]);

(6) Sergio A. Parra, Division of Geological and Planetary Sciences, California Institute of Technology, Pasadena, California, USA ([email protected]);

(7) Daniel R. Utter, Division of Geological and Planetary Sciences, California Institute of Technology, Pasadena, California, USA ([email protected]);

(8) John S. Magyar, Division of Geological and Planetary Sciences, California Institute of Technology, Pasadena, California, USA ([email protected]);

(9) David W. Caress, Monterey Bay Aquarium Research Institute, Moss Landing, California, USA ([email protected]);

(10) Eric J. Martin, Monterey Bay Aquarium Research Institute, Moss Landing, California, USA ([email protected]);

(11) Jennifer B. Paduan, Monterey Bay Aquarium Research Institute, Moss Landing, California, USA ([email protected]);

(12) Maggie Hendrie, ArtCenter College of Design, Pasadena, California, USA ([email protected]);

(13) Santiago Lombeyda, California Institute of Technology, Pasadena, California, USA ([email protected]);

(14) Hillary Mushkin, California Institute of Technology, Pasadena, California, USA ([email protected]);

(15) Alex Endert, Georgia Institute of Technology, Atlanta, Georgia, USA ([email protected]);

(16) Scott Davidoff, Jet Propulsion Laboratory, California Institute of Technology, Pasadena, California, USA ([email protected]);

(17) Victoria J. Orphan, Division of Geological and Planetary Sciences, California Institute of Technology, Pasadena, California, USA ([email protected]).