Table of Links

Abstract and 1 Introduction

2.1 Software Testing

2.2 Gamification of Software Testing

3 Gamifying Continuous Integration and 3.1 Challenges in Teaching Software Testing

3.2 Gamification Elements of Gamekins

3.3 Gamified Elements and the Testing Curriculum

4 Experiment Setup and 4.1 Software Testing Course

4.2 Integration of Gamekins and 4.3 Participants

4.4 Data Analysis

4.5 Threats to Validity

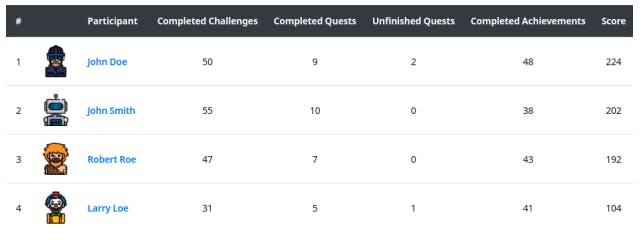

5.1 RQ1: How did the students use Gamekins during the course?

5.2 RQ2: What testing behavior did the students exhibit?

5.3 RQ3: How did the students perceive the integration of Gamekins into their projects?

6 Related Work

7 Conclusions, Acknowledgments, and References

4.4 Data Analysis

The analysis of the experiment involves comparing the results obtained from the participating students. To determine whether any differences are statistically significant, we employ the exact Wilcoxon-Mann-Whitney test [34] with a significance level of 𝛼 = 0.05.
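As an illustration of what an exact two-sided Wilcoxon-Mann-Whitney test computes, the following is a minimal sketch in plain Python. It is not the analysis script used in the study: the function names and the sample values are hypothetical, and the p-value is obtained by enumerating every possible split of the pooled data, which is only practical for small samples.

```python
from itertools import combinations


def mann_whitney_u(x, y):
    """U statistic of x versus y: count pairs with x_i > y_j, ties count 0.5."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


def exact_two_sided_p(x, y):
    """Exact two-sided p-value from the permutation distribution of U.

    Every way of assigning len(x) of the pooled values to the first group
    is enumerated, so the cost grows as C(len(x) + len(y), len(x)).
    """
    pooled = list(x) + list(y)
    n, m = len(x), len(y)
    center = n * m / 2  # expected value of U under the null hypothesis
    observed_dev = abs(mann_whitney_u(x, y) - center)
    indices = range(n + m)
    extreme = 0
    total = 0
    for chosen in combinations(indices, n):
        chosen_set = set(chosen)
        group_x = [pooled[i] for i in chosen]
        group_y = [pooled[i] for i in indices if i not in chosen_set]
        dev = abs(mann_whitney_u(group_x, group_y) - center)
        if dev >= observed_dev - 1e-12:  # at least as extreme as observed
            extreme += 1
        total += 1
    return extreme / total


if __name__ == "__main__":
    # Hypothetical per-student values for two projects (not study data).
    project_a = [12, 15, 9, 20, 17]
    project_b = [25, 30, 22, 28, 35]
    p = exact_two_sided_p(project_a, project_b)
    print("significant at alpha = 0.05:", p < 0.05)
```

Enumerating the permutation distribution keeps the test exact even when values are tied, at the price of a runtime that grows combinatorially with the sample sizes.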

4.4.1 RQ1: How did the students use Gamekins during the course? The data collected by Gamekins, including current, completed, and rejected challenges and quests, are stored in the configuration files of each user. These data can be easily extracted for further evaluation, which is the focus of our analysis in this research question. We consider the differences between the Analyzer and Fuzzer projects to determine whether the students performed differently or used Gamekins differently during these projects. We examine the various types of challenges solved by the students and investigate the reasons why they rejected certain challenges. This analysis helps us identify any difficulties that the students faced while using Gamekins.

4.4.2 RQ2: What testing behavior did the students exhibit? We compare both projects in terms of (1) number of tests, (2) number of commits, (3) line coverage, (4) mutation score, and (5) grade. The line coverage of the participants’ implementations is measured with JaCoCo [9], while we use PIT to determine the mutation score. Coverage is a common metric to determine how well the source code of a project is exercised [27], while mutation analysis also detects test gaps within covered code [38]. The grade is an individual score assigned to each participant for each project, ranging from 0 to 100. It is determined using several factors, including average line and branch coverage, mutation score, and the usage of Gamekins. The usage of Gamekins depends on the participants’ engagement in writing tests and solving challenges using the tool (see Section 4.2). For the Analyzer project, the correct output is also considered in the grading process by running the participants’ implementation against eleven different examples and comparing their output with a reference implementation. To further analyze the relationship between the participants’ performance in Gamekins and their project outcomes, we use Pearson correlation [10] to determine whether the scores achieved by the participants in Gamekins correlate with the number of tests, number of commits, code coverage, mutation score, and grade.
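The Pearson correlation coefficient mentioned above can be sketched as follows. This is a minimal illustration in plain Python, not the authors' tooling; the function name and the example values are hypothetical.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equally long sequences."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and sums of squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    # Hypothetical Gamekins scores and test counts (not study data).
    gamekins_scores = [10, 25, 40, 55, 70]
    number_of_tests = [8, 14, 21, 30, 33]
    print(round(pearson_r(gamekins_scores, number_of_tests), 3))
```

A value close to 1 or -1 indicates a strong linear relationship, while a value near 0 indicates little or no linear relationship between the two measures.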

The Analyzer project was previously used in the course in 2019, with the same task and framework, differing only in the use of Gamekins. We compare the results of 2019 and 2022 in terms of (1) number of tests, (2) number of commits, (3) line coverage, (4) mutation score, and (5) correct output. For this, we count the number of tests in both the 2019 and 2022 projects and execute them to obtain coverage using JaCoCo. Next, we calculate mutation scores for the student code using PIT. Lastly, we compare the output generated by their code with the output of our reference solution.

4.4.3 RQ3: How did the students perceive the integration of Gamekins into their projects? To address this research question, we administered a survey to the students, comprising 23 questions categorized into three sections (Table 1). The initial section focused on gathering demographic information about the participants, while the second section inquired about their experience with Gamekins. The final section allowed students to provide feedback on their likes and dislikes regarding Gamekins. We present the survey responses using Likert plots and analyze the students’ free-text answers to gain insights into their perceptions of Gamekins.
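Before survey answers can be drawn as a Likert plot, they are typically tallied into the share of respondents per answer level. The following minimal sketch shows that step under the assumption of a five-point agreement scale; the level labels, the function name, and the example responses are hypothetical and not taken from the survey.

```python
from collections import Counter

# Assumed five-point scale; the actual survey scale may differ.
LEVELS = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]


def likert_percentages(responses):
    """Return the percentage of responses per level, in scale order."""
    if not responses:
        raise ValueError("no responses given")
    counts = Counter(responses)
    unknown = set(counts) - set(LEVELS)
    if unknown:
        raise ValueError(f"unknown answer levels: {sorted(unknown)}")
    total = len(responses)
    # Levels nobody chose are reported as 0.0 so every plot bar has a value.
    return {level: 100.0 * counts[level] / total for level in LEVELS}


if __name__ == "__main__":
    # Hypothetical answers to a single survey question (not study data).
    answers = ["Agree", "Agree", "Neutral", "Strongly agree"]
    for level, share in likert_percentages(answers).items():
        print(f"{level}: {share:.1f}%")
```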

[9] https://www.jacoco.org/jacoco/

Authors:

(1) Philipp Straubinger, University of Passau, Germany;

(2) Gordon Fraser, University of Passau, Germany.