Table of Links

Abstract and 1 Introduction

2.1 Software Testing

2.2 Gamification of Software Testing

3 Gamifying Continuous Integration and 3.1 Challenges in Teaching Software Testing

3.2 Gamification Elements of Gamekins

3.3 Gamified Elements and the Testing Curriculum

4 Experiment Setup and 4.1 Software Testing Course

4.2 Integration of Gamekins and 4.3 Participants

4.4 Data Analysis

4.5 Threats to Validity

5.1 RQ1: How did the students use Gamekins during the course?

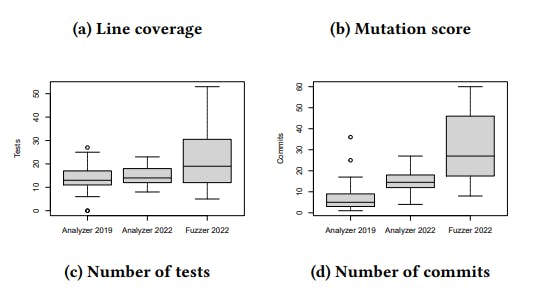

5.2 RQ2: What testing behavior did the students exhibit?

5.3 RQ3: How did the students perceive the integration of Gamekins into their projects?

6 Related Work

7 Conclusions, Acknowledgments, and References

5.3 RQ3: How did the students perceive the integration of Gamekins into their projects?

Figure 11 summarizes the survey responses: 82 % of the students enjoyed the inclusion of Gamekins in the course (G1), whereas a smaller share (65 %) reported enjoying writing tests in general (G2). Furthermore, 59 % of the participants agreed that writing tests is more enjoyable with Gamekins than without it (G3). Approximately half of the students reported learning and practicing valuable skills for their future careers (G4) and expressed interest in using Gamekins in other projects (G5).

Considering the questions on the individual gamification elements (G6–G10, Fig. 12), challenges were the most enjoyable element for the majority of students (82 %). Quests, on the other hand, received the most negative feedback, with 18 % of students expressing dissatisfaction. Solving the steps of a quest in sequence appears to have been difficult, especially since the application under test was small and had only a few classes. This frustrated some students, who found it hard to progress without solving multiple steps at once. However, students appreciated the competitive aspect of the leaderboard and enjoyed the challenges and achievements.

Approximately 24 % of the students reported feeling pressured to solve challenges (G12), all of whom expressed the desire to achieve a good grade in the course. Although this likely holds for any required aspect of coursework, it raises the question of how well practitioners would engage with Gamekins. A majority of the students (59 %) stated that they wrote more tests using Gamekins (G13), but the same share (59 %) believed they wrote unnecessary tests just to solve challenges (G14). Unfortunately, these students provided no reasons. One possible explanation is that they were required to achieve 100 % coverage for their project, and any tests that went beyond that (such as those targeting mutants) were perceived as unnecessary. Alternatively, the 100 % coverage requirement may simply have implied covering trivial code (e.g., getters or setters). Neither of these reasons would be specific to Gamekins, however; both would apply equally to the underlying test metrics.

The students encountered several problems (F2–F4). When they modified code, Gamekins sometimes failed to locate the relevant lines after the changes and therefore automatically rejected the affected challenges. Some Mutation Challenges were solvable on the students’ computers but not in Gamekins, which was caused by changes in line numbers, modified code, or PIT not recognizing the mutant as killed. Smell Challenges also caused confusion whenever it was not apparent, without further explanation, why a suggested change would improve the code in the given context. The students also faced problems with Quests, which were sometimes impossible to complete fully due to code changes. These issues will be addressed in future versions of Gamekins, for example by improving the tracking of code changes, rethinking the concept of Quests, and reducing the number of smells reported.
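To illustrate why edits to the code can invalidate a challenge that targets a specific line, the following minimal sketch contrasts a lookup by recorded line number with a lookup by line content. It is not taken from Gamekins and makes no claim about how Gamekins tracks code internally; the function names `locate_by_line_number` and `locate_by_content` and the sample Java snippet are hypothetical and serve only as an illustration.

```python
from typing import List, Optional


def locate_by_line_number(source: List[str], line_no: int, expected: str) -> Optional[int]:
    """Fragile lookup (hypothetical): succeeds only if the expected text
    is still at the recorded 1-based line number."""
    if 1 <= line_no <= len(source) and source[line_no - 1].strip() == expected.strip():
        return line_no
    return None


def locate_by_content(source: List[str], line_no: int, expected: str) -> Optional[int]:
    """More tolerant lookup (hypothetical): returns the matching line that is
    closest to the recorded position, or None if the text no longer exists."""
    matches = [i + 1 for i, line in enumerate(source) if line.strip() == expected.strip()]
    if not matches:
        return None
    return min(matches, key=lambda n: abs(n - line_no))


# Sample class body before and after a student adds a comment at the top.
before = [
    "public int max(int a, int b) {",
    "    return a > b ? a : b;",
    "}",
]
after = ["// Returns the larger of two values."] + before

target = "return a > b ? a : b;"
recorded_line = 2  # line number stored when the challenge was generated

print(locate_by_line_number(before, recorded_line, target))  # found at the recorded line
print(locate_by_line_number(after, recorded_line, target))   # lookup fails after the edit
print(locate_by_content(after, recorded_line, target))       # relocated by content
```

In this sketch, a single inserted comment is enough to make the line-number lookup fail, while the content-based lookup still finds the target. A content-based approach has its own limits: it cannot recover a line whose text was itself modified, which matches the observation that modified code also caused problems.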

Overall, however, the students expressed satisfaction with the integration of Gamekins into the course (F1). They appreciated the small tasks assigned to them instead of being overwhelmed by a large number of missed scenarios. They particularly enjoyed the competitive aspect and the game-like interface, which provided hints and ideas for testing and improving tests and code.

Authors:

(1) Philipp Straubinger, University of Passau, Germany;

(2) Gordon Fraser, University of Passau, Germany.