Robert Triggs / Android Authority

Cloud AI might be impressive, but I yearn for the added security that only offline, local processing provides, especially in light of DeepSeek reporting user data back to China. Replacing Copilot with DeepSeek on my laptop yesterday made me wonder if I could run large language models offline on my smartphone as well. After all, today’s flagship phones claim to be plenty powerful, have pools of RAM, and have dedicated AI accelerators that only the most modern PCs or expensive GPUs can best. Surely, it can be done.

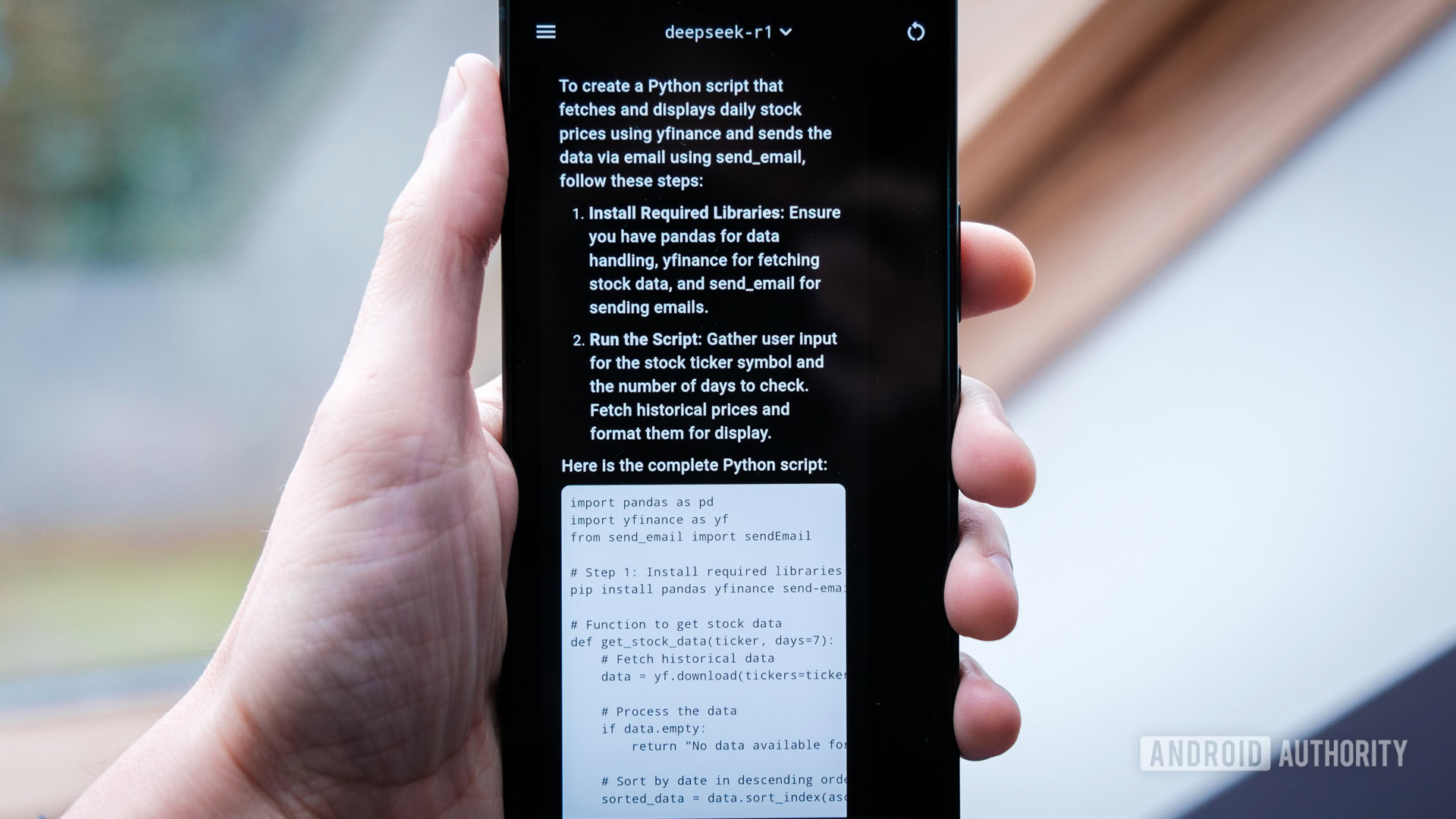

Well, it turns out you can run a condensed version of DeepSeek (and many other large language models) locally on your phone without being tethered to an internet connection. While the responses are not as fast or accurate as those of the full-sized cloud model, the phones I tested can churn out answers at a brisk reading pace, making them very usable. Importantly, the smaller models are still good at assisting with problem-solving, explaining complex topics, and even producing working code, just like their bigger siblings.

I’m very impressed with the results, given that it runs on something that fits in my pocket. I can’t recommend that you all dash out to copy me, but those who are really interested in the ever-developing AI landscape should probably try running local models for themselves.

Installing an offline LLM on your phone can be a pain, and the experience is not as seamless as using Google’s Gemini. My time spent digging and tinkering also revealed that smartphones are not the most beginner-friendly environments for experimenting with or developing new AI tools. That’ll need to change if we’re ever going to have a competitive marketplace for compelling AI apps, allowing users to break free from today’s OEM shackles.

Local models run surprisingly well on Android, but setting them up is not for the fainthearted.

Performance wasn’t really the issue here; the admittedly cutting-edge Snapdragon 8 Elite smartphones I tested run moderately sized seven- and eight-billion-parameter models surprisingly well on just their CPU, with an output speed of 11 tokens per second, a bit faster than most people can read. You can even run the 14-billion-parameter Phi-4 if you have enough RAM, though the output falls to a still passable six tokens per second. However, running LLMs is the hardest I’ve pushed modern smartphone CPUs outside of benchmarking, and it results in some pretty warm handsets.

Impressively, even the aging Pixel 7 Pro can run smaller three-billion-parameter models, like Meta’s Llama 3.2, at a passable five tokens per second, but attempting the larger DeepSeek is really pushing the limit of what older phones can do. Unfortunately, there’s currently no NPU or GPU acceleration available for any smartphone using the methods I tried, which would give older phones a real shot in the arm. As such, larger models are an absolute no-go, even on Android’s most powerful chips.
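To put those token rates next to reading speed, here is a rough back-of-the-envelope conversion. It assumes about 0.75 English words per token and a reading speed of around 240 words per minute; both are common rules of thumb rather than anything measured in these tests, and the function names are purely illustrative.

```python
# Rough conversion from token throughput to words per minute.
# ASSUMPTIONS (not measured): ~0.75 English words per token and a
# typical silent reading speed of ~240 words per minute.
WORDS_PER_TOKEN = 0.75
READING_WPM = 240


def words_per_minute(tokens_per_second: float) -> float:
    """Convert a token rate into an approximate words-per-minute figure."""
    return tokens_per_second * WORDS_PER_TOKEN * 60


def keeps_up_with_reader(tokens_per_second: float) -> bool:
    """True if the output arrives at least as fast as the assumed reading speed."""
    return words_per_minute(tokens_per_second) >= READING_WPM


# Token rates quoted in the article.
for label, rate in [
    ("7b/8b on Snapdragon 8 Elite", 11),
    ("Phi-4 14b on Snapdragon 8 Elite", 6),
    ("3b on Pixel 7 Pro", 5),
]:
    print(f"{label}: ~{words_per_minute(rate):.0f} wpm, "
          f"reading pace: {keeps_up_with_reader(rate)}")
```

Under those assumptions, 11 tokens per second works out to roughly 495 words per minute, six to about 270, and five to about 225, which is why the slower figures only count as passable.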

Without internet access or assistant functions, few will find local LLMs very useful on their own.

Even with powerful modern handsets, I think the vast majority of people will find the use cases for running an LLM on their phone very limited. Impressive models like DeepSeek, Llama, and Phi are great assistants for working on big-screen PC projects, but you’ll struggle to make use of their abilities on a tiny smartphone. Currently, in phone form, they can’t access the internet or interact with external functions like Google Assistant routines, and it’s a nightmare to pass them documents to summarize via the command line. There’s a reason phone brands are embedding AI tools into apps like the Gallery: targeting more specific use cases is the best way for most people to interact with models of various types.

So, while it’s very promising that smartphones can run some of the better compact LLMs out there, there’s a long way to go before the ecosystem is close to supporting consumer choice in assistants. As you’ll see below, smartphones aren’t in focus for those training or running the latest models, let alone optimizing for the wide range of hardware acceleration capabilities the platform has to offer. That said, I’d love to see more developer investment here, as there’s clearly promise.

If you still want to try running DeepSeek or many of the other popular large language models in the security of your own smartphone, I’ve detailed two options to get you started.

How to install DeepSeek on your phone (the easy way)

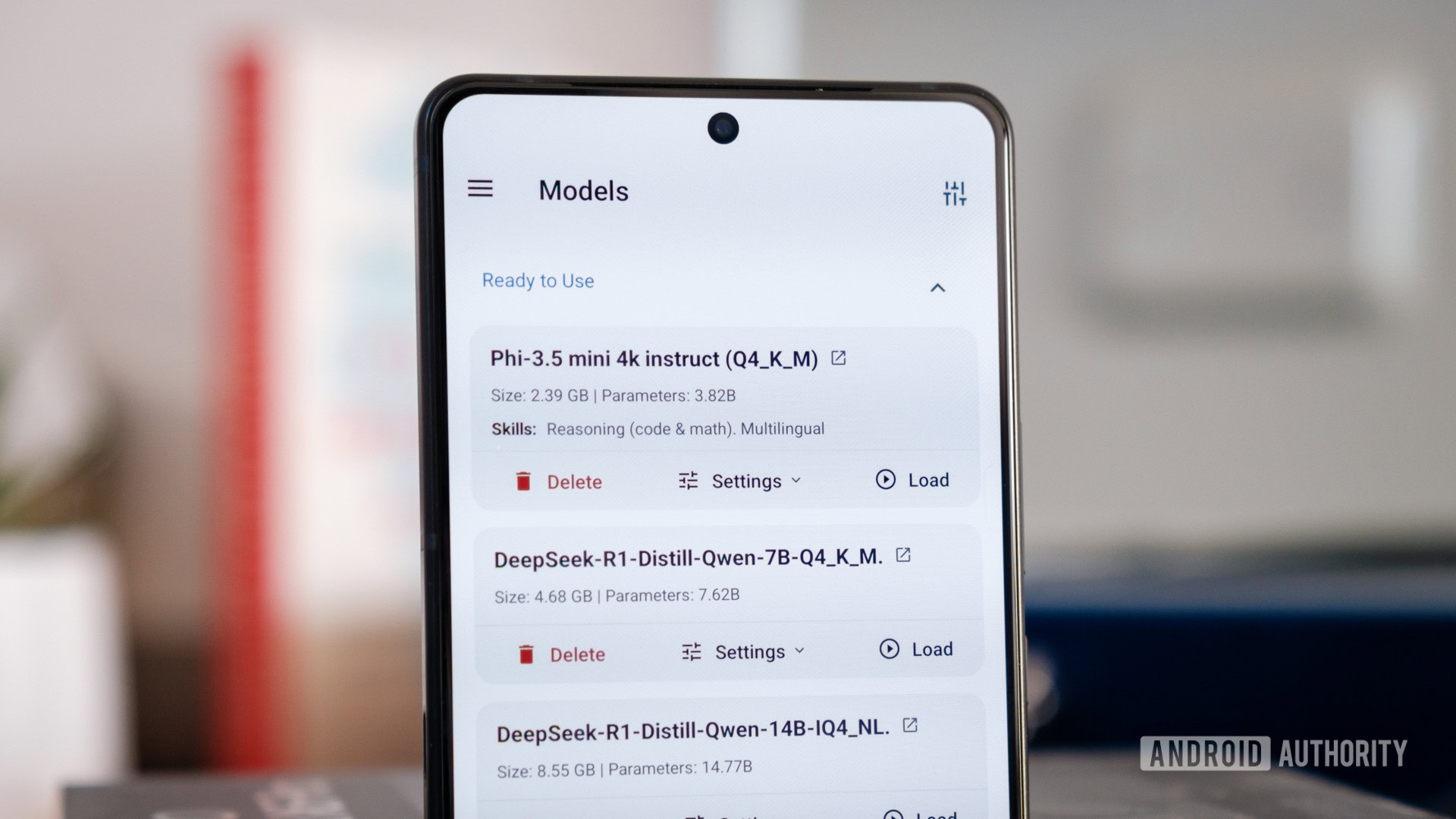

If you want a super-easy solution for chatting with local AI on your Android or iOS handset, you should try out the PocketPal AI app. iPhone owners can also try PrivateLLM. You’ll still need a phone with a decent processor and a pool of fast RAM; 12GB is acceptable in my testing to run smaller, condensed 7b/8b models, but 16GB is better and essential if you want to try and tackle 14b.

Through the app, you can access a wide range of models via the popular Hugging Face portal, which contains DeepSeek, Llama, Phi, and many, many others. In fact, Hugging Face’s humongous portfolio is a bit of an obstacle for the uninitiated, and the search functionality in PocketPal AI is limited. Models aren’t particularly well labeled in the small UI, so picking the official or optimal one for your device is difficult, outside of avoiding ones that display a memory warning. Thankfully, you can manually import models you’ve downloaded yourself, which makes the process easier.

Want to run local AI on your phone? PocketPal AI is a super easy way to do just that.

Unfortunately, I experienced a few bugs with PocketPal AI, ranging from failed downloads to unresponsive chats and full-on app crashes. Basically, don’t ever navigate away from the app. A number of responses also cut off prematurely due to the small default window size (a big problem for the verbose DeepSeek), so although it’s user-friendly to start, you’ll probably have to dig into more complex settings eventually. I couldn’t find a way to delete chats either.

Thankfully, performance is really solid, and it’ll automatically unload the model to free up RAM when not in use. This is by far the easier way to run DeepSeek and other popular models offline, but there’s another way too.

…and the hard way

This second method involves command-line wrangling via Termux to install Ollama, a popular tool for running LLMs locally (built, like PocketPal AI, on llama.cpp) that I found to be a bit more reliable than the previous app. There are a couple of ways to install Ollama on your Android phone. I’m going to use this rather smart method by Davide Fornelli, which uses Proot to provide a fresh (and easily removable) Debian environment to work with. It’s the easiest to set up and manage, especially as you’ll probably end up removing Ollama after playing around for a bit. Performance with Proot isn’t quite native, but it’s good enough. I suggest reading the full guide to understand the process, but I’ve listed the essential steps below.

Follow these steps on your Android phone to install Ollama.

- Install and open the Termux app
- `pkg update && pkg upgrade` – upgrade to the latest packages
- `pkg install proot-distro` – install Proot-Distro
- `pd install debian` – install Debian
- `pd login debian` – log in to our Debian environment
- `curl -fsSL https://ollama.com/install.sh | sh` – download and install Ollama
- `ollama serve` – start running the Ollama server

You can exit the Debian environment via CTRL+D at any time. To remove Ollama, all your downloaded models, and the Debian environment, follow the steps below. This will take your phone back to its original state.

- `CTRL+C` and/or `CTRL+D` – exit Ollama and make sure you’re logged out of the Debian environment in Termux.
- `pd remove debian` – this will delete everything you did in the environment (it won’t touch anything else).
- `pkg remove proot-distro` – this is optional, but I will remove Proot as well (it’s only a few MB in size, so you can easily keep it).

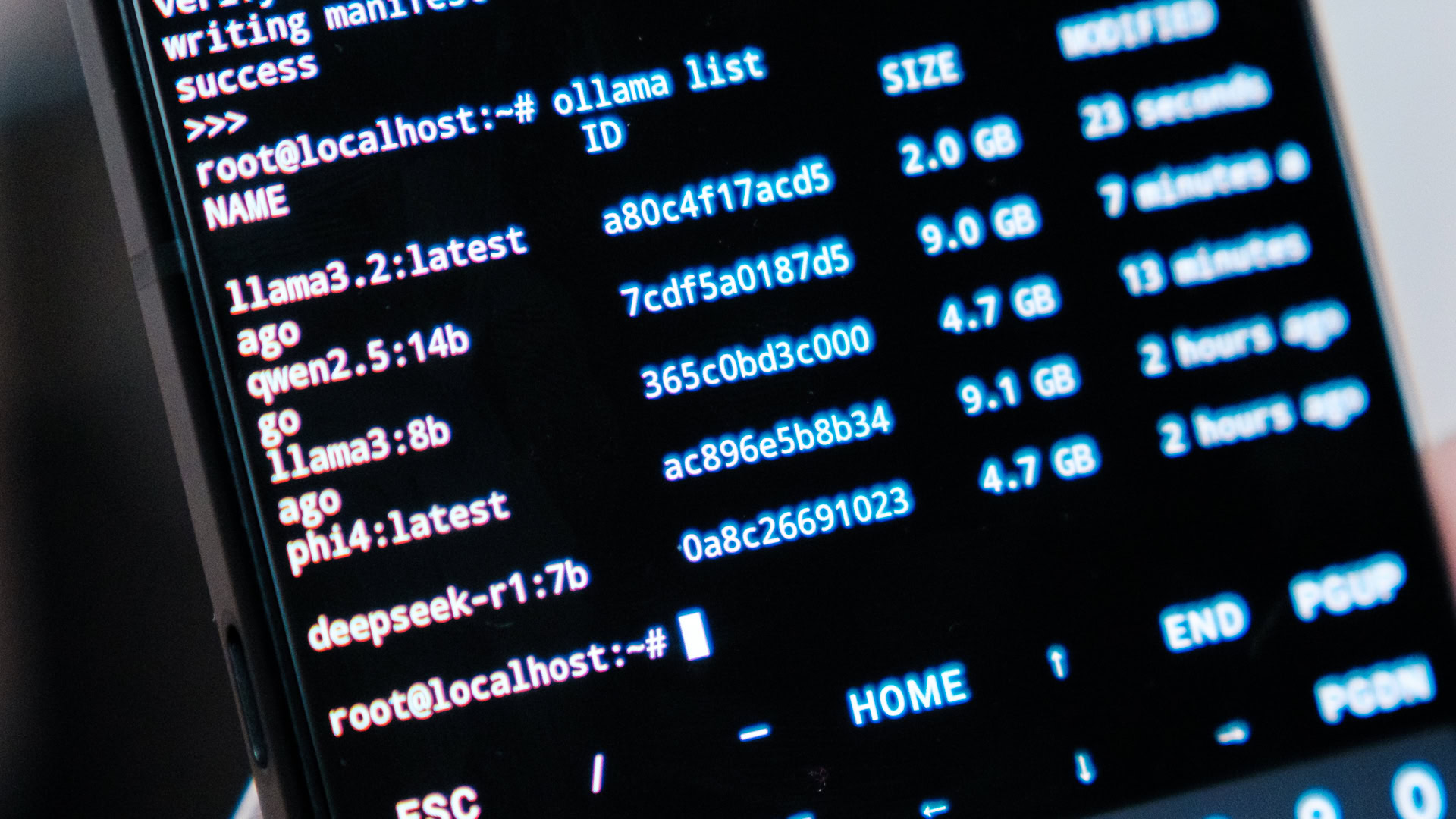

With Ollama installed, log in to our Debian environment via Termux and run `ollama serve` to start up the server. You can press CTRL+C to end the server instance and free up your phone’s resources. While this is running, we have a couple of options to interact with Ollama and start running some large language models.

You can open a second window in Termux, log in to our Debian environment, and proceed with Ollama command-line interactions. This is the fastest and most stable way to interact with Ollama, particularly on low-RAM smartphones, and is (currently) the only way to remove models to free up storage.

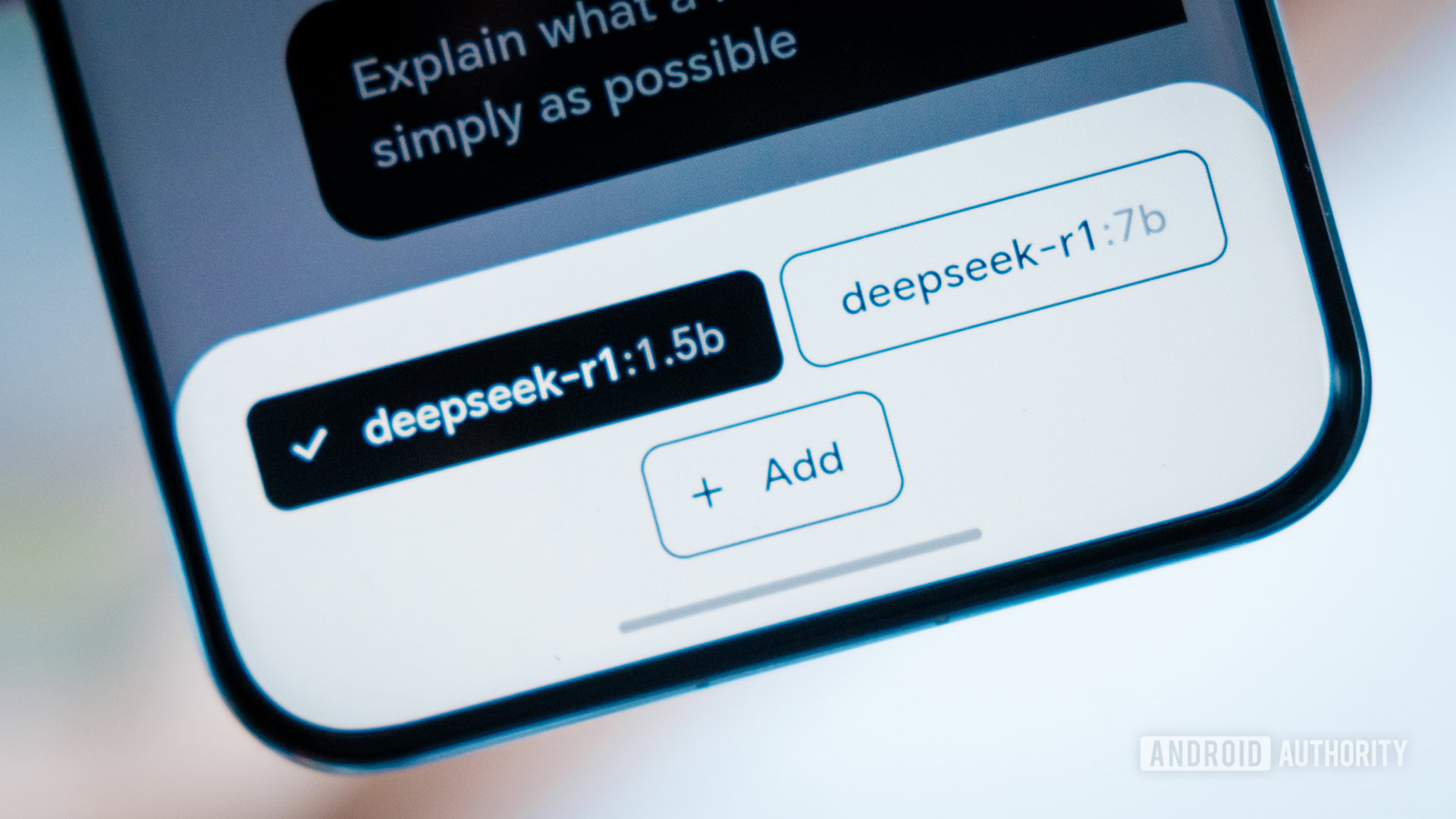

To start chatting with DeepSeek-R1, enter `ollama run deepseek-r1:7b`. Once it’s downloaded, you can type away into the command line, and the model will return answers. You can also pick any other suitably sized model from the well-organized Ollama library.

- `ollama run MODEL_NAME` – download and/or run a model
- `ollama stop MODEL_NAME` – stop a model (Ollama automatically unloads models after five minutes)
- `ollama pull MODEL_NAME` – download a model
- `ollama rm MODEL_NAME` – delete a model
- `ollama ps` – view the currently loaded model
- `ollama list` – list all the installed models

The alternative is to install JHubi1’s Ollama App for Android. It communicates with Ollama and provides a nicer user interface for chatting and installing models of your choice. The drawback is that it’s another app to run, which eats up precious RAM and can tip a less performant smartphone to a crawl. Responses also feel a bit more sluggish than running in the command line, owing to the communication overhead with Ollama. Still, it’s a solid quality-of-life upgrade if you’re planning to perpetually run a local LLM.
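Front ends like this talk to the Ollama server over its local HTTP API, and you can do the same from a script. Below is a minimal sketch rather than a finished client: it assumes `ollama serve` is running on Ollama’s default address (`http://localhost:11434`), that Python 3 is available in the Debian environment, and that the model has already been pulled. The helper names `build_generate_request` and `ask` are my own, not part of Ollama.

```python
import json
import urllib.request

# ASSUMPTION: `ollama serve` is running and listening on Ollama's
# default local address and port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 600.0) -> str:
    """Send the prompt and return the generated text (needs a running server)."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]


# Example call, only meaningful while the server is up and the model is pulled:
# print(ask("deepseek-r1:7b", "Explain swap space in one sentence."))
```

The generous timeout is deliberate, since a phone CPU can take a while to finish a long answer when streaming is switched off.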

Which LLMs can I run on a smartphone?

While watching DeepSeek’s thought process is impressive, I don’t think it’s necessarily the most useful large language model out there, especially to run on a phone. Even the seven-billion-parameter model will pause with longer conversations on a Snapdragon 8 Elite, which makes it a chore to use when paired with its long chain of reasoning. Fortunately, there are loads more models to pick from that can run faster and still provide excellent results. Meta’s Llama, Microsoft’s Phi, and Mistral can all run well on a variety of phones; you just need to pick the most appropriately sized model depending on your smartphone’s RAM and processing capabilities.
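If you capture DeepSeek-R1’s output in a script and only want the final answer, the reasoning can be trimmed afterwards. This is a small sketch that assumes the model wraps its chain of thought in `<think>...</think>` tags, as DeepSeek-R1 output commonly does; the function name is hypothetical. It only tidies the text and does nothing to shorten the time the phone spends generating it.

```python
import re

# ASSUMPTION: reasoning is wrapped in <think>...</think> tags.
# DOTALL lets the pattern span multiple lines; the non-greedy match
# stops at the first closing tag.
THINK_BLOCK = re.compile(r"<think>.*?</think>", re.DOTALL)


def strip_thinking(text: str) -> str:
    """Remove any <think> blocks and surrounding whitespace from a response."""
    return THINK_BLOCK.sub("", text).strip()


sample = "<think>\nThe user wants a sum. 2 + 2 = 4.\n</think>\n\nThe answer is 4."
print(strip_thinking(sample))  # prints: The answer is 4.
```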

Broadly speaking, three-billion-parameter models will need up to 2.5GB of free RAM, while seven- and eight-billion-parameter models need around 5-7GB to hold them. 14b models can eat up to 10GB, and so on, roughly doubling the amount of RAM every time you double the size of the model. Given that smartphones also have to share RAM with the OS, other apps, and the GPU, you need at least a 50% buffer (even more if you want to keep the model running constantly) to ensure your phone doesn’t bog down by attempting to move the model into swap space. As a rough ballpark, phones with 12GB of fast RAM will run seven- and eight-billion-parameter models, but you’ll need 16GB or greater to attempt 14b. And remember, Google’s Pixel 9 Pro partitions off some RAM for its own Gemini model.
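That rule of thumb can be turned into a quick sanity check. Treat this as a sketch under simplifying assumptions: the per-model figures are the upper ends of the ranges quoted above, the 50% buffer is applied as a flat multiplier, and the table and function names are made up for illustration.

```python
# Upper-end RAM figures (in GB) taken from the ranges quoted in the article.
MODEL_RAM_GB = {"3b": 2.5, "7b": 7.0, "8b": 7.0, "14b": 10.0}

# ASSUMPTION: the "at least 50% buffer" is modeled as a flat 1.5x multiplier.
BUFFER = 0.5


def required_ram_gb(model_size: str) -> float:
    """RAM the phone should have in total to hold the model plus the buffer."""
    return MODEL_RAM_GB[model_size] * (1 + BUFFER)


def fits(model_size: str, phone_ram_gb: float) -> bool:
    """True if the phone's total RAM covers the model plus the buffer."""
    return required_ram_gb(model_size) <= phone_ram_gb


for size in MODEL_RAM_GB:
    print(f"{size}: needs ~{required_ram_gb(size):.1f}GB, "
          f"12GB phone: {fits(size, 12)}, 16GB phone: {fits(size, 16)}")
```

With these numbers, a 7b or 8b model wants about 10.5GB and squeezes onto a 12GB phone, while 14b wants about 15GB and only fits at 16GB, which lines up with the ballpark above.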

Android phones can pick from a huge range of smaller AI models.

Of course, more parameters require vastly more processing power to crunch the model as well. Even the Snapdragon 8 Elite slows down a bit with Microsoft’s Phi-4:14b and Alibaba’s Qwen2.5:14b, though both are reasonably usable on the ASUS ROG Phone 9 Pro, with its colossal 24GB of RAM, that I used to test with. If you want something that outputs at reading pace on current or last-gen hardware and still offers a decent level of accuracy, stick with 8b at most. For older or mid-range phones, dropping down to smaller 3b models sacrifices much more accuracy but should allow for a token output that isn’t a complete snail’s pace.

Of course, this is just if you want to run AI locally. If you’re happy with the trade-offs of cloud computing, you can access much more powerful models like ChatGPT, Gemini, and the full-sized DeepSeek through your web browser or their respective dedicated apps. Still, running AI on my phone has certainly been an interesting experiment, and better developers than me could tap into much more potential with Ollama’s API hooks.