Table of Links

Abstract and 1 Introduction

2 Related Works

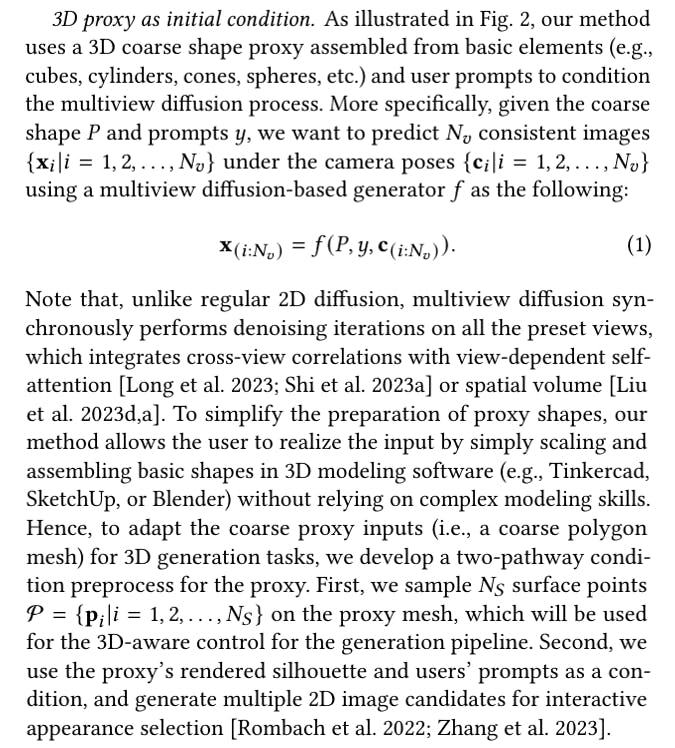

3 Method and 3.1 Proxy-Guided 3D Conditioning for Diffusion

3.2 Interactive Generation Workflow and 3.3 Volume Conditioned Reconstruction

4 Experiment and 4.1 Comparison on Proxy-based and Image-based 3D Generation

4.2 Comparison on Controllable 3D Object Generation, 4.3 Interactive Generation with Part Editing & 4.4 Ablation Studies

5 Conclusions, Acknowledgments, and References

SUPPLEMENTARY MATERIAL

A. Implementation Details

B. More Discussions

C. More Experiments

3 METHOD

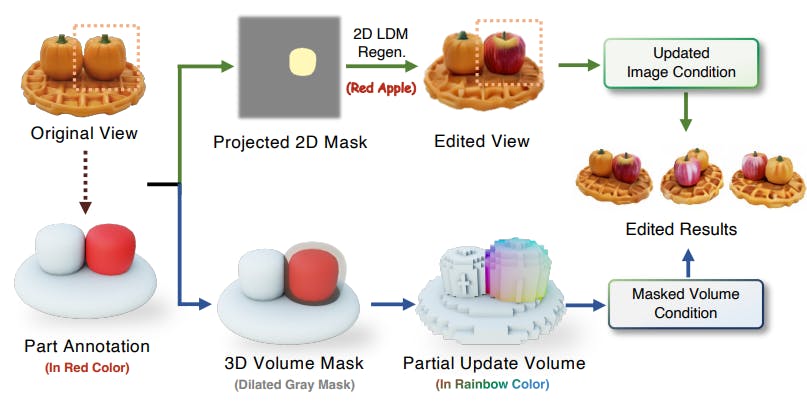

We introduce Coin3D, a novel Controllable 3D asset generation framework that adds 3D-aware control to the multiview diffusion process in object generation tasks, enabling an interactive modeling workflow for fine-grained, customizable object generation. An overview of Coin3D is shown in Fig. 2. Instead of using conventional text prompts or a perspective image as the condition, our framework employs a coarse geometry proxy assembled from basic shapes (e.g., a snowman composed of two spheres, two sticks, and one cone), complemented by user prompts that describe the object’s identity. The diffusion-based generation is then conditioned on both a voxelized 3D proxy and 2D image candidates, which are generated by controlled 2D diffusion from the proxy’s silhouette (e.g., the images with different appearances at the bottom left of Fig. 2). During the 3D-aware conditioned generation, we use a novel 3D adapter module that seamlessly integrates proxy-guided control with adjustable strength into the diffusion pipeline (Sec. 3.1). To deliver an interactive generation workflow with fine-grained 3D-aware part editing and responsive previewing, we also introduce proxy-bounded editing to precisely control the volume update, and employ an efficient volume cache mechanism to accelerate image previewing at arbitrary viewpoints (Sec. 3.2). Furthermore, we propose a volume-conditioned reconstruction strategy that effectively leverages the 3D context from the feature volume to improve reconstruction quality (Sec. 3.3).
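To make the notion of a voxelized coarse proxy concrete, the following is a minimal sketch of how a proxy built from basic shapes could be turned into an occupancy grid. It is not Coin3D's implementation: the `Sphere` class, the `voxelize` function, the `resolution` parameter, the `[-1, 1]^3` bounding cube, and the snowman dimensions are all hypothetical choices made for illustration, and only spheres are handled.

```python
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Sphere:
    """Hypothetical proxy primitive; sticks and cones are omitted for brevity."""
    center: Vec3
    radius: float

    def contains(self, p: Vec3) -> bool:
        # A point is inside if its squared distance to the center is within r^2.
        return sum((a - b) ** 2 for a, b in zip(p, self.center)) <= self.radius ** 2


def voxelize(shapes: List[Sphere], resolution: int = 16) -> List[List[List[int]]]:
    """Sample voxel centers in [-1, 1]^3 (assumed bounds) and mark a voxel
    as 1 when any primitive covers its center, 0 otherwise."""
    step = 2.0 / resolution
    coords = [-1.0 + (i + 0.5) * step for i in range(resolution)]
    return [
        [
            [int(any(s.contains((x, y, z)) for s in shapes)) for z in coords]
            for y in coords
        ]
        for x in coords
    ]


# Assumed proxy: a snowman reduced to a body sphere and a head sphere.
snowman = [Sphere((0.0, -0.4, 0.0), 0.5), Sphere((0.0, 0.35, 0.0), 0.3)]
grid = voxelize(snowman, resolution=16)
occupied = sum(v for plane in grid for row in plane for v in row)
print(f"{occupied} of {16 ** 3} voxels are occupied by the proxy")
```

A binary grid of this kind only records where the proxy is. In the framework described above, the voxelized proxy serves as a 3D condition for the diffusion process, and the details of how it is encoded are given in Sec. 3.1.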

3.1 Proxy-Guided 3D Conditioning for Diffusion

Authors:

(1) Wenqi Dong, Zhejiang University (this work was conducted during his internship at PICO, ByteDance);

(2) Bangbang Yang, ByteDance (contributed equally to this work with Wenqi Dong);

(3) Lin Ma, ByteDance;

(4) Xiao Liu, ByteDance;

(5) Liyuan Cui, Zhejiang University;

(6) Hujun Bao, Zhejiang University;

(7) Yuewen Ma, ByteDance;

(8) Zhaopeng Cui, Zhejiang University (corresponding author).