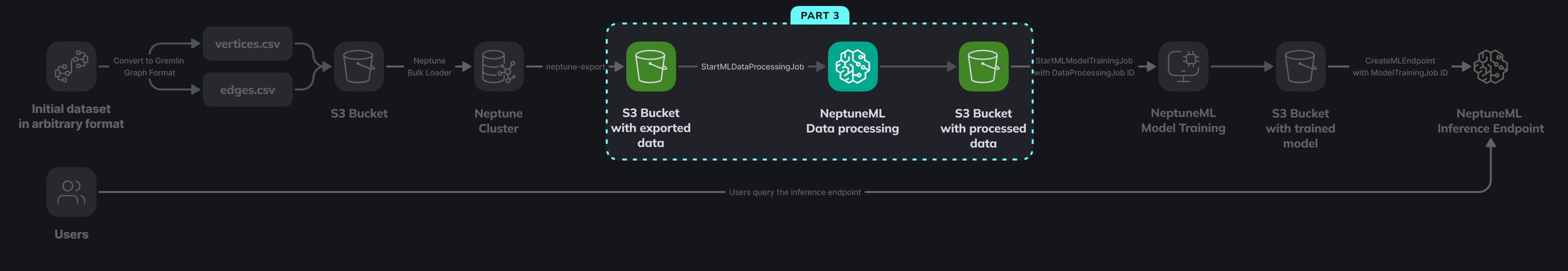

In this post we’ll continue working on link prediction with the Twitch dataset.

We have already exported the graph data from Neptune using the neptune-export utility and the ‘neptune_ml’ profile. Those steps are described in parts 1 and 2 of this guide.

Read part 1 here and part 2 here.

The data is currently stored in S3 and looks like this:

Vertices CSV (nodes/user.consolidated.csv):

~id,~label,days,mature,views,partner

"6980","user",771,true,2935,false

"547","user",2602,true,18099,false

"2173","user",1973,false,3939,false

...

Edges CSV (edges/user-follows-user.consolidated.csv):

~id,~label,~from,~to,~fromLabels,~toLabels

"3","follows","6194","2507","user","user"

"19","follows","3","3739","user","user"

"35","follows","6","2126","user","user"

...

The export utility also generated this config file for us:

training-data-configuration.json:

{

"version" : "v2.0",

"query_engine" : "gremlin",

"graph" : {

"nodes" : [ {

"file_name" : "nodes/user.consolidated.csv",

"separator" : ",",

"node" : [ "~id", "user" ],

"features" : [ {

"feature" : [ "days", "days", "numerical" ],

"norm" : "min-max",

"imputer" : "median"

}, {

"feature" : [ "mature", "mature", "auto" ]

}, {

"feature" : [ "views", "views", "numerical" ],

"norm" : "min-max",

"imputer" : "median"

}, {

"feature" : [ "partner", "partner", "auto" ]

} ]

} ],

"edges" : [ {

"file_name" : "edges/%28user%29-follows-%28user%29.consolidated.csv",

"separator" : ",",

"source" : [ "~from", "user" ],

"relation" : [ "", "follows" ],

"dest" : [ "~to", "user" ],

"features" : [ ]

} ]

},

"warnings" : [ ]

}

Our goal now is data processing: converting the exported data into a format that the Deep Graph Library (DGL) framework can use for model training. (For an overview of link prediction with just DGL, see this post.) Processing includes normalizing numerical features, encoding categorical features, creating lists of node pairs with existing and non-existing links to enable supervised learning for our link prediction task, and splitting the data into training, validation and test sets.

As you can see in the training-data-configuration.json file, the node features ‘days’ (account age) and ‘views’ were recognized as numerical, and min-max normalization was suggested. Min-max normalization scales arbitrary values to the range [0, 1]: x_normalized = (x - x_min) / (x_max - x_min). The setting imputer = median means that missing values will be filled with the median of the observed values.
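To make the two settings concrete, here is a minimal sketch of median imputation followed by min-max scaling. It is not Neptune ML's own implementation, only the arithmetic described above. The ‘days’ values come from the sample rows shown earlier, and the missing value (None) is a hypothetical addition for illustration.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def min_max_normalize(values):
    """Scale values linearly so the minimum maps to 0 and the maximum to 1."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # All values are identical, so there is no range to scale over.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# 'days' values from the sample rows, plus one hypothetical missing value.
days = [771, 2602, None, 1973]
days_filled = impute_median(days)
days_scaled = min_max_normalize(days_filled)
```

The smallest value maps to 0, the largest to 1, and the imputed entry receives the same scaled value as the median it was filled with.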

Node features ‘mature’ and ‘partner’ are labeled as ‘auto’, and as those columns contain only boolean values, we expect that they will be recognized as categorical features and encoded during the data processing stage. The train-validation-test split is not included in this automatically generated file, and the default split for the link prediction task is 0.9, 0.05, 0.05.
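The following sketch illustrates what a 0.9 / 0.05 / 0.05 split and the "non-existing link" pairs mean for link prediction. It is an illustration under our own assumptions, not the procedure Neptune ML runs internally: the function names and the toy graph are hypothetical, and the negative sampler enumerates all candidate pairs, which is only practical for small graphs.

```python
import random


def split_edges(edges, rates=(0.9, 0.05, 0.05), seed=0):
    """Shuffle edges and cut them into train/validation/test lists."""
    rng = random.Random(seed)
    shuffled = list(edges)
    rng.shuffle(shuffled)
    n_train = int(len(shuffled) * rates[0])
    n_val = int(len(shuffled) * rates[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # whatever remains goes to the test set
    return train, val, test


def sample_negative_pairs(nodes, edges, k, seed=0):
    """Draw k distinct (src, dst) pairs that are not existing edges.

    Enumerates every candidate pair, so this is quadratic in the node count.
    """
    rng = random.Random(seed)
    existing = set(edges)
    candidates = [
        (s, d) for s in nodes for d in nodes
        if s != d and (s, d) not in existing
    ]
    return rng.sample(candidates, k)


# Hypothetical toy graph: a chain of 101 nodes with 100 'follows' edges.
nodes = list(range(101))
edges = [(i, i + 1) for i in range(100)]

train, val, test = split_edges(edges)
negatives = sample_negative_pairs(nodes, edges, k=10)
```

The existing edges serve as positive examples and the sampled pairs as negative ones, which is what makes the task supervised.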

You can adjust the normalization and encoding settings, and you can choose a custom train-validation-test split. If you choose to do so, just replace the original training-data-configuration.json file in S3 with the updated version. The full list of supported fields in that JSON is available here. In this post, we’ll leave this file unchanged.

IAM ROLES NEEDED FOR DATA PROCESSING

Just like in the data loading stage (described in Part 1 of this tutorial), we need to create IAM roles that allow access to the services we’ll be using, and we need to add those roles to our Neptune cluster. The data processing stage requires two roles. The first is a Neptune role that gives Neptune access to SageMaker and S3. The second is a SageMaker execution role that SageMaker uses while running the data processing task and that allows access to S3.

These roles must have trust policies that allow Neptune and SageMaker services to assume them:

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "",

"Effect": "Allow",

"Principal": {

"Service": "sagemaker.amazonaws.com"

},

"Action": "sts:AssumeRole"

},

{

"Sid": "",

"Effect": "Allow",

"Principal": {

"Service": "rds.amazonaws.com"

},

"Action": "sts:AssumeRole"

}

]

}

After creating the roles and updating their trust policies, we’ll add them to the Neptune cluster (Neptune -> Databases -> YOUR_NEPTUNE_CLUSTER_ID -> Connectivity & Security -> IAM Roles -> Add role).

DATA PROCESSING WITH NEPTUNE ML HTTP API

Now that the training-data-configuration.json file is in place and the IAM roles have been added to the Neptune cluster, we’re ready to start the data processing job. To do that, we need to send a request to the Neptune cluster’s HTTP API from inside the VPC where the cluster is located. We’ll use an EC2 instance to do that.

We’ll use curl to start the data processing job:

curl -X POST "https://YOUR_NEPTUNE_CLUSTER_ENDPOINT:8182/ml/dataprocessing" \
  -H 'Content-Type: application/json' \
  -d '{
    "inputDataS3Location" : "s3://SOURCE_BUCKET/neptune-export/...",
    "processedDataS3Location" : "s3://OUTPUT_BUCKET/neptune-export-processed/...",
    "neptuneIamRoleArn": "arn:aws:iam::123456789012:role/NeptuneMLDataProcessingNeptuneRole",
    "sagemakerIamRoleArn": "arn:aws:iam::123456789012:role/NeptuneMLDataProcessingSagemakerRole"
  }'

Only these four parameters are required: the input data S3 location, the processed data S3 location, the Neptune role, and the SageMaker role. There are many optional parameters: for example, we can manually select the instance type that will be created for our data processing task with processingInstanceType and set its storage volume size with processingInstanceVolumeSizeInGB. The full list of parameters can be found here.
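If you would rather script this step, the same request can be built with Python's standard library. This is a sketch, not an official client: the helper name build_dataprocessing_request is our own, the placeholder values are copied from the curl command, and it assumes the cluster accepts unsigned requests (if IAM database authentication is enabled, requests will likely need SigV4 signing, which is not shown here).

```python
import json
import urllib.request


def build_dataprocessing_request(endpoint, input_s3, output_s3,
                                 neptune_role_arn, sagemaker_role_arn,
                                 port=8182):
    """Build (but do not send) the POST request that starts data processing."""
    payload = {
        "inputDataS3Location": input_s3,
        "processedDataS3Location": output_s3,
        "neptuneIamRoleArn": neptune_role_arn,
        "sagemakerIamRoleArn": sagemaker_role_arn,
    }
    return urllib.request.Request(
        url=f"https://{endpoint}:{port}/ml/dataprocessing",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholders as in the curl example; replace them with your own values.
request = build_dataprocessing_request(
    endpoint="YOUR_NEPTUNE_CLUSTER_ENDPOINT",
    input_s3="s3://SOURCE_BUCKET/neptune-export/...",
    output_s3="s3://OUTPUT_BUCKET/neptune-export-processed/...",
    neptune_role_arn="arn:aws:iam::123456789012:role/NeptuneMLDataProcessingNeptuneRole",
    sagemaker_role_arn="arn:aws:iam::123456789012:role/NeptuneMLDataProcessingSagemakerRole",
)

# To send it from a machine inside the cluster's VPC:
# with urllib.request.urlopen(request) as response:
#     job_id = json.load(response)["id"]
```

Building the request separately from sending it makes it easy to inspect the URL and body before anything reaches the cluster.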

The cluster responds with a JSON that contains the ID of the data processing job that we just created:

{"id":"d584f5bc-d90e-4957-be01-523e07a7562e"}

We can use it to get the status of the job with this command (use the same neptuneIamRoleArn as in the previous request):

curl 'https://YOUR_NEPTUNE_CLUSTER_ENDPOINT:8182/ml/dataprocessing/YOUR_JOB_ID?neptuneIamRoleArn=arn:aws:iam::123456789012:role/NeptuneMLDataProcessingNeptuneRole'
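Data processing can take a while, so it may be convenient to poll the status endpoint in a loop rather than rerun curl by hand. The sketch below does that with the standard library. The helper names are our own, and the set of failure statuses ("Failed", "Stopped") is an assumption: only "Completed" appears in this post, so check the Neptune ML documentation for the statuses your cluster actually returns.

```python
import json
import time
import urllib.parse
import urllib.request


def status_url(endpoint, job_id, neptune_role_arn, port=8182):
    """Build the status URL, URL-encoding the role ARN in the query string."""
    query = urllib.parse.urlencode({"neptuneIamRoleArn": neptune_role_arn})
    return f"https://{endpoint}:{port}/ml/dataprocessing/{job_id}?{query}"


def fetch_status(url):
    """GET the status endpoint and parse the JSON body."""
    with urllib.request.urlopen(url) as response:
        return json.load(response)


def wait_for_job(url, fetch=fetch_status, interval_s=30, max_polls=120,
                 failed_statuses=("Failed", "Stopped")):
    """Poll until the job reports 'Completed'.

    failed_statuses is an assumed set of terminal failure states.
    """
    for _ in range(max_polls):
        body = fetch(url)
        status = body.get("status")
        if status == "Completed":
            return body
        if status in failed_statuses:
            raise RuntimeError(f"Data processing job ended with status {status!r}")
        time.sleep(interval_s)
    raise TimeoutError(f"Job still not completed after {max_polls} polls")
```

Calling wait_for_job(status_url(endpoint, job_id, role_arn)) from inside the VPC returns the final response body, or raises if the job fails or the polling budget runs out.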

Once the status request responds with something like this,

{

"processingJob": {...},

"id":"d584f5bc-d90e-4957-be01-523e07a7562e",

"status":"Completed"

}

we can check the output. These files were created in the destination S3 bucket:

The graph.* files contain the processed graph data.

The features.json file contains the lists of node and edge features:

{

"nodeProperties": {

"user": [

"days",

"mature",

"views",

"partner"

]

},

"edgeProperties": {}

}

The details on how the data was processed and how the features were encoded can be found in the updated_training_config.json file:

{

"graph": {

"nodes": [

{

"file_name": "nodes/user.consolidated.csv",

"separator": ",",

"node": [

"~id",

"user"

],

"features": [

{

"feature": [

"days",

"days",

"numerical"

],

"norm": "min-max",

"imputer": "median"

},

{

"feature": [

"mature",

"mature",

"category"

]

},

{

"feature": [

"views",

"views",

"numerical"

],

"norm": "min-max",

"imputer": "median"

},

{

"feature": [

"partner",

"partner",

"category"

]

}

]

}

],

"edges": [

{

"file_name": "edges/%28user%29-follows-%28user%29.consolidated.csv",

"separator": ",",

"source": [

"~from",

"user"

],

"relation": [

"",

"follows"

],

"dest": [

"~to",

"user"

]

}

]

}

}

We can see that the columns ‘mature’ and ‘partner’ with boolean values, initially labeled as ‘auto’ in the training-data-configuration.json file, were encoded as category features.
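With more features than in this example, comparing the two files by eye gets tedious. A small script can report how each ‘auto’ feature was resolved. This is a sketch with hypothetical helper names; it assumes both files have been downloaded from S3 and parsed with json.load, and it only looks at node features. The inline dictionaries below are trimmed-down copies of the two configs shown in this post.

```python
def feature_types(config):
    """Map (file_name, column) to the declared feature type for all node files."""
    types = {}
    for node in config["graph"]["nodes"]:
        for spec in node.get("features", []):
            column, _name, kind = spec["feature"]
            types[(node["file_name"], column)] = kind
    return types


def resolved_auto_features(original, updated):
    """Return the final type of every node feature that was 'auto' originally."""
    before = feature_types(original)
    after = feature_types(updated)
    return {
        key: after[key]
        for key, kind in before.items()
        if kind == "auto" and key in after
    }


# Trimmed-down versions of the two configuration files from this post.
original = {"graph": {"nodes": [{
    "file_name": "nodes/user.consolidated.csv",
    "features": [
        {"feature": ["days", "days", "numerical"]},
        {"feature": ["mature", "mature", "auto"]},
        {"feature": ["partner", "partner", "auto"]},
    ],
}]}}
updated = {"graph": {"nodes": [{
    "file_name": "nodes/user.consolidated.csv",
    "features": [
        {"feature": ["days", "days", "numerical"]},
        {"feature": ["mature", "mature", "category"]},
        {"feature": ["partner", "partner", "category"]},
    ],
}]}}

resolved = resolved_auto_features(original, updated)
```

Here resolved maps both ‘mature’ and ‘partner’ to "category", matching what we read off the updated file.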

The ‘train_instance_recommendation.json’ file contains the SageMaker instance type and storage size recommended for model training:

{

"instance": "ml.g4dn.2xlarge",

"cpu_instance": "ml.m5.2xlarge",

"disk_size": 14126462,

"mem_size": 4349122131.111111

}

The model-hpo-configuration.json file contains the type of the model, the metrics used for its evaluation, the frequency of the evaluation, and the hyperparameters.

This concludes the data processing stage. We are now ready to start training the ML model, which will be covered in the next part of this guide.