Authors:

(1) S M Rakib Hasan, Department of Computer Science and Engineering, BRAC University, Dhaka, Bangladesh;

(2) Aakar Dhakal, Department of Computer Science and Engineering, BRAC University, Dhaka, Bangladesh.

Table of Links

Abstract and I. Introduction

II. Literature Review

III. Methodology

IV. Results and Discussion

V. Conclusion and Future Work, and References

III. METHODOLOGY

A. Dataset Description

The obfuscated malware dataset is a collection of memory dumps of benign and malicious processes, created to evaluate obfuscated malware detection methods. It contains 58,596 records, 50% benign and 50% malicious. The malicious memory dumps come from three malware categories: Spyware, Ransomware, and Trojan Horse. The dataset builds on the work of [10], who proposed a memory feature engineering approach for detecting obfuscated malware.

The dataset has 55 features and two types of labels: one indicates whether a sample is malicious, and the other indicates its malware family. Of the malicious samples, 32.5% are Trojan Horse, 33.67% are Spyware, and 33.8% are Ransomware.

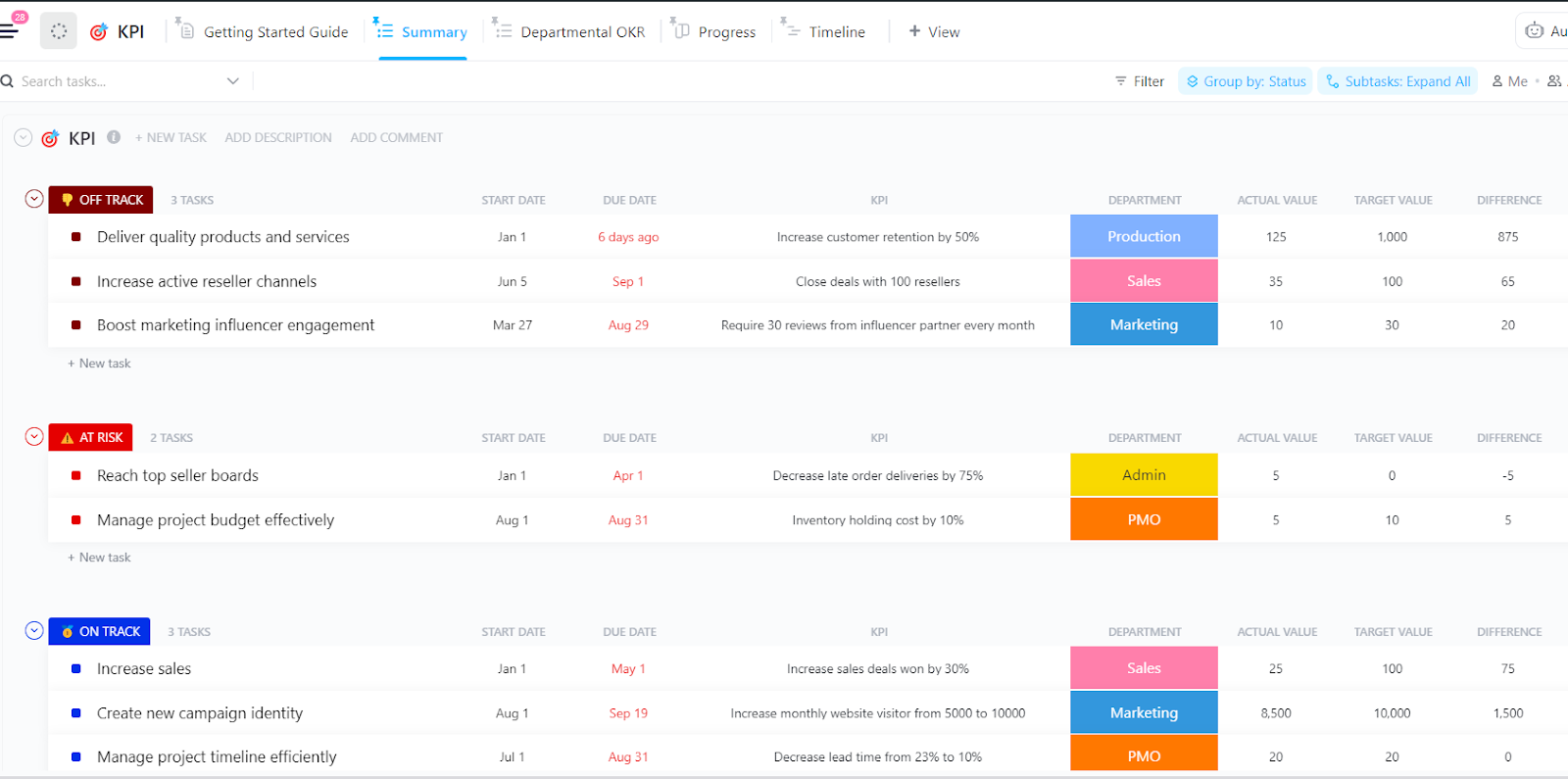

After collecting the data, we applied general preprocessing steps and conducted two sets of classification experiments: one to detect malware and the other to classify malware by family. We then compiled the results for comparison. Fig. 1 illustrates our workflow.

B. Data Preprocessing

First, we cleaned the data: some labels contained unnecessary information, which we trimmed. We also standardized the features so that all of them are treated equally and training converges better. Since the dataset was well maintained and clean, no denoising or further preprocessing was needed. For some classification tasks, we also encoded the labels or oversampled or undersampled the data points.
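The preprocessing steps above can be sketched in plain Python as follows. This is a minimal illustration, not the authors' code: the text does not say what information was trimmed from the labels, so the label format handled by the hypothetical `trim_label` helper (a family name followed by a hyphen and extra details) is an assumption, and all function names are ours.

```python
import math
from typing import Dict, List, Sequence, Tuple


def trim_label(label: str) -> str:
    # Hypothetical label format "<Family>-<extra details>": keep only the family name.
    return label.split("-", 1)[0]


def fit_standardizer(rows: Sequence[Sequence[float]]) -> Tuple[List[float], List[float]]:
    """Per-feature mean and population standard deviation."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    stds = [math.sqrt(sum((r[j] - means[j]) ** 2 for r in rows) / n) for j in range(d)]
    return means, stds


def standardize(rows, means, stds) -> List[List[float]]:
    """z = (x - mean) / std; constant features (std == 0) are mapped to 0."""
    return [[(x - m) / s if s > 0 else 0.0 for x, m, s in zip(r, means, stds)] for r in rows]


def encode_labels(labels: Sequence[str]) -> Tuple[List[int], List[str]]:
    """Map each distinct label to an integer code (classes sorted alphabetically)."""
    classes = sorted(set(labels))
    index: Dict[str, int] = {c: i for i, c in enumerate(classes)}
    return [index[c] for c in labels], classes


train_rows = [[1.0, 10.0], [3.0, 10.0]]
means, stds = fit_standardizer(train_rows)
print(standardize(train_rows, means, stds))  # [[-1.0, 0.0], [1.0, 0.0]]
print(encode_labels([trim_label(s) for s in ["Spyware-a", "Benign", "Ransomware-b"]]))
# ([2, 0, 1], ['Benign', 'Ransomware', 'Spyware'])
```

Fitting the means and standard deviations on the training split only, and then applying them unchanged to the test split, keeps test-set statistics out of training.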

C. Model Description

Here, we describe the machine learning models we used, covering their underlying principles, loss functions, activation functions, mathematical formulations, strengths, weaknesses, and limitations.

1) Random Forest: The Random Forest classifier is an ensemble learning method that improves predictive performance and reduces overfitting by aggregating multiple decision trees [11]. Each tree is trained on a bootstrapped sample of the data, and only a random subset of features is considered at each split, which makes the trees diverse. The final prediction is obtained by a majority vote or a weighted average of the individual trees' predictions.

Random Forest commonly uses the Gini impurity as its default criterion for measuring the quality of splits in the decision trees. It is defined as:

$$G = 1 - \sum_{i=1}^{c} p_i^2$$

where $c$ is the number of classes and $p_i$ is the probability that a sample at the node belongs to class $i$ (the fraction of the node's samples in class $i$).
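The Gini impurity, and the weighted impurity used to compare candidate splits, can be computed as in this plain-Python sketch (function names are ours). A lower weighted value means a purer split.

```python
from collections import Counter
from typing import Hashable, Sequence


def gini_impurity(labels: Sequence[Hashable]) -> float:
    """G = 1 - sum_i p_i^2, with p_i the fraction of samples in class i."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def weighted_split_gini(left: Sequence[Hashable], right: Sequence[Hashable]) -> float:
    """Impurity of a split: each child's Gini weighted by its share of the samples."""
    n = len(left) + len(right)
    return len(left) / n * gini_impurity(left) + len(right) / n * gini_impurity(right)


print(gini_impurity(["benign"] * 4))                                  # 0.0 (pure node)
print(gini_impurity(["benign", "benign", "malicious", "malicious"]))  # 0.5 (50/50 node)
print(weighted_split_gini(["benign", "benign"], ["malicious"] * 2))   # 0.0 (perfect split)
```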

Random Forest is relatively robust to outliers and noisy data because the ensemble averages out individual tree errors. It is also less prone to overfitting than a single decision tree. Additionally, Random Forest provides feature importance estimates, which aid feature selection and the interpretation of results.
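To make the bootstrapping and voting steps concrete, here is a deliberately tiny Random Forest sketch in plain Python. It is a simplification, not scikit-learn's implementation: each tree is a single-split stump chosen by weighted Gini impurity, so the random feature subset is drawn once per tree (which, for a stump, is the same as once per split), and the names `fit_forest` and `predict` are illustrative.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

# A depth-1 tree ("stump"): (feature index, threshold, left label, right label).
Stump = Tuple[int, float, int, int]


def gini(labels: Sequence[int]) -> float:
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values()) if n else 0.0


def majority(labels: Sequence[int]) -> int:
    return Counter(labels).most_common(1)[0][0]


def fit_stump(X: List[List[float]], y: List[int], features: List[int]) -> Stump:
    """Choose the split (among the given features) with the lowest weighted Gini."""
    n, best = len(y), None
    for j in features:
        for t in sorted({row[j] for row in X}):
            left = [lab for row, lab in zip(X, y) if row[j] <= t]
            right = [lab for row, lab in zip(X, y) if row[j] > t]
            if not left or not right:
                continue
            score = len(left) / n * gini(left) + len(right) / n * gini(right)
            if best is None or score < best[0]:
                best = (score, j, t, majority(left), majority(right))
    if best is None:  # no usable split: always predict the majority class
        m = majority(y)
        return (features[0], float("inf"), m, m)
    return best[1:]


def fit_forest(X, y, n_trees: int = 25, max_features: int = 1, seed: int = 0) -> List[Stump]:
    rng = random.Random(seed)
    n, d = len(X), len(X[0])
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap: rows sampled with replacement
        feats = rng.sample(range(d), max_features)  # random feature subset for this split
        forest.append(fit_stump([X[i] for i in idx], [y[i] for i in idx], feats))
    return forest


def predict(forest: List[Stump], row: List[float]) -> int:
    votes = [left if row[j] <= t else right for j, t, left, right in forest]
    return majority(votes)  # majority vote over the trees


X = [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0], [8.0, 8.0], [9.0, 9.0], [10.0, 10.0]]
y = [0, 0, 0, 1, 1, 1]
forest = fit_forest(X, y)
print(predict(forest, [0.0, 0.0]), predict(forest, [10.0, 10.0]))
```

Real forests grow deep trees and redraw the feature subset at every split; the stump keeps the sketch short while preserving the two sources of randomness.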

2) Multi-Layer Perceptron (MLP) Classifier: The Multi-Layer Perceptron (MLP) classifier is a type of artificial neural network consisting of multiple layers of interconnected nodes, or neurons [12], each of which transforms its inputs with an activation function and passes the result to the next layer. The architecture typically includes an input layer, one or more hidden layers, and an output layer.
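A forward pass through a small MLP can be sketched as follows. The weights are hypothetical hand-picked values rather than trained ones, and ReLU in the hidden layer with softmax at the output is an assumed, common choice; the text does not state which activation functions were used.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def relu(v: Vector) -> Vector:
    return [max(0.0, x) for x in v]


def softmax(v: Vector) -> Vector:
    m = max(v)  # subtracting the max avoids overflow in exp
    exps = [math.exp(x - m) for x in v]
    total = sum(exps)
    return [e / total for e in exps]


def dense(W: Matrix, b: Vector, x: Vector) -> Vector:
    """Fully connected layer: one row of W (plus one bias) per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]


def mlp_forward(layers: List[Tuple[Matrix, Vector]], x: Vector) -> Vector:
    """ReLU after each hidden layer, softmax after the output layer."""
    for W, b in layers[:-1]:
        x = relu(dense(W, b, x))
    W_out, b_out = layers[-1]
    return softmax(dense(W_out, b_out, x))


layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),  # hidden layer: 2 inputs -> 2 neurons
    ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),    # output layer: 2 neurons -> 2 classes
]
probs = mlp_forward(layers, [2.0, 1.0])
print([round(p, 3) for p in probs])  # [0.731, 0.269]
```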

MLPs commonly use the cross-entropy loss function for classification tasks; it measures the dissimilarity between the predicted probabilities and the true class labels. Mathematically, it is defined as:

$$H(p, q) = -\sum_{i} p(i) \log q(i)$$

where $p(i)$ is the true probability of class $i$ and $q(i)$ is the predicted probability of class $i$.
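The cross-entropy formula can be evaluated directly, as in this sketch (function name ours). The clipping constant `eps` is an added safeguard against $\log 0$, not part of the definition; with a one-hot target, the loss reduces to the negative log of the probability assigned to the true class.

```python
import math
from typing import Sequence


def cross_entropy(p: Sequence[float], q: Sequence[float], eps: float = 1e-12) -> float:
    """H(p, q) = -sum_i p(i) * log q(i); eps guards against log(0)."""
    return -sum(pi * math.log(max(qi, eps)) for pi, qi in zip(p, q))


# One-hot target for class 1 (e.g. "malicious") versus two predicted distributions.
target = [0.0, 1.0]
print(round(cross_entropy(target, [0.1, 0.9]), 4))  # confident and correct: 0.1054
print(round(cross_entropy(target, [0.9, 0.1]), 4))  # confident and wrong:   2.3026
```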

MLPs can capture complex patterns in data, and the flexibility of the architecture allows them to model non-linear relationships effectively. With sufficient data and training, MLPs can achieve high predictive accuracy.

However, MLPs require careful hyperparameter tuning to prevent overfitting, and their performance depends heavily on the choice of architecture and weight initialization.

3) k-Nearest Neighbors (KNN) Classifier: k-Nearest Neighbors (KNN) is a non-parametric algorithm used for both classification and regression tasks [13]. For classification, it predicts the majority class among the k nearest data points in the feature space.

KNN involves no explicit loss function or optimization during training; it simply stores the training data. During inference, it computes the distance between the query point and every training point using a metric such as the Euclidean or Manhattan distance, and then selects the k nearest neighbors. KNN handles multi-class problems naturally and adapts readily to new data, since adding training points requires no retraining. It also assumes no underlying data distribution, which makes it suitable for various data types. Its main limitation is its sensitivity to the choice of k and of the distance metric.
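The KNN prediction step described above fits in a few lines. This is a brute-force sketch with names of our choosing: it computes every distance at query time, as the text describes, whereas library implementations may use spatial indexes to speed up the search.

```python
import math
from collections import Counter
from typing import Callable, Hashable, List, Sequence

Point = Sequence[float]


def euclidean(a: Point, b: Point) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def manhattan(a: Point, b: Point) -> float:
    return sum(abs(x - y) for x, y in zip(a, b))


def knn_predict(
    train_X: List[Point],
    train_y: List[Hashable],
    query: Point,
    k: int = 3,
    distance: Callable[[Point, Point], float] = euclidean,
) -> Hashable:
    # No training phase: distances to every stored point are computed at query time.
    ranked = sorted(zip(train_X, train_y), key=lambda pair: distance(pair[0], query))
    # Majority vote among the labels of the k closest points.
    return Counter(label for _, label in ranked[:k]).most_common(1)[0][0]


train_X = [[0, 0], [0, 1], [1, 0], [5, 5], [5, 6], [6, 5]]
train_y = ["benign"] * 3 + ["malicious"] * 3
print(knn_predict(train_X, train_y, [0.5, 0.5]))                      # benign
print(knn_predict(train_X, train_y, [5.2, 5.1], distance=manhattan))  # malicious
```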

4) XGBoost Classifier: The XGBoost (Extreme Gradient Boosting) classifier is an ensemble learning algorithm that has gained popularity for its performance and scalability on structured (tabular) data [14]. It combines gradient boosting with regularization to build a robust and accurate model. Its objective is the sum of a data-specific loss (e.g., squared error for regression or log loss for classification) and a regularization term that penalizes model complexity. XGBoost minimizes this objective by adding decision trees one at a time: each new tree is fitted to the first and second derivatives of the loss with respect to the current predictions, and its leaf weights are chosen to reduce the regularized objective.

XGBoost produces accurate predictions on structured data with fast training times. Its ensemble of shallow decision trees captures complex relationships in the data, while L1 and L2 regularization help prevent overfitting and improve generalization. However, because it is designed primarily for structured data, it may not perform as well on text or image data.
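The boosting loop can be illustrated with a from-scratch sketch of the second-order update used by XGBoost for binary log loss. It is a simplification, not the xgboost library: every tree is a single-split stump, only the L2 penalty $\lambda$ and the split penalty $\gamma$ are included (no L1 term, subsampling, or deeper trees), and all names are ours. For a leaf with gradient sum $G$ and hessian sum $H$, the optimal weight is $-G/(H+\lambda)$, and a split is kept only if its gain is positive.

```python
import math
from typing import List, Optional, Sequence, Tuple

# (feature index, threshold, left leaf value, right leaf value); values already scaled by eta.
Stump = Tuple[int, float, float, float]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def leaf_score(G: float, H: float, lam: float) -> float:
    return G * G / (H + lam)


def fit_stump(X, g, h, lam: float, gamma: float, eta: float) -> Optional[Stump]:
    G, H = sum(g), sum(h)
    best, best_gain = None, 0.0
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X})[:-1]:  # skip the max so both sides are non-empty
            GL = sum(gi for row, gi in zip(X, g) if row[j] <= t)
            HL = sum(hi for row, hi in zip(X, h) if row[j] <= t)
            GR, HR = G - GL, H - HL
            gain = 0.5 * (leaf_score(GL, HL, lam) + leaf_score(GR, HR, lam)
                          - leaf_score(G, H, lam)) - gamma
            if gain > best_gain:
                # Optimal leaf weight -G / (H + lambda), shrunk by the learning rate eta.
                best_gain = gain
                best = (j, t, -eta * GL / (HL + lam), -eta * GR / (HR + lam))
    return best  # None if no split has positive gain


def fit_boosted(X, y, n_rounds: int = 20, eta: float = 0.3,
                lam: float = 1.0, gamma: float = 0.0) -> List[Stump]:
    margins = [0.0] * len(X)  # raw log-odds; 0.0 means p = 0.5
    trees: List[Stump] = []
    for _ in range(n_rounds):
        p = [sigmoid(m) for m in margins]
        g = [pi - yi for pi, yi in zip(p, y)]  # first derivative of log loss
        h = [pi * (1.0 - pi) for pi in p]      # second derivative of log loss
        stump = fit_stump(X, g, h, lam, gamma, eta)
        if stump is None:
            break
        trees.append(stump)
        j, t, wl, wr = stump
        margins = [m + (wl if row[j] <= t else wr) for m, row in zip(margins, X)]
    return trees


def predict_proba(trees: List[Stump], row: Sequence[float]) -> float:
    return sigmoid(sum(wl if row[j] <= t else wr for j, t, wl, wr in trees))


X = [[1.0], [2.0], [3.0], [7.0], [8.0], [9.0]]
y = [0, 0, 0, 1, 1, 1]
trees = fit_boosted(X, y)
print(round(predict_proba(trees, [1.0]), 3), round(predict_proba(trees, [9.0]), 3))
```

Each round fits only what the current ensemble still gets wrong: the gradients shrink as predictions improve, so later trees contribute smaller corrections.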

D. Binary Classification

In this step, our primary goal was to detect malicious memory dumps. For this purpose, we used simple yet robust machine learning classifiers, namely Random Forest, Multi-Layer Perceptron (MLP), and KNN, and obtained outstanding results. Our trained models detected all the malware samples accurately and separated malicious from benign memory dumps effectively.
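The text does not list which metric scores were computed. Assuming the usual ones for a detection task (accuracy, precision, recall, and F1, with malicious as the positive class), they can be computed from predictions as in this sketch; the function name and label strings are illustrative. A recall of 1.0 corresponds to every malicious dump in the test set being detected.

```python
from typing import Dict, Hashable, Sequence


def binary_metrics(y_true: Sequence[Hashable], y_pred: Sequence[Hashable],
                   positive: Hashable = "malicious") -> Dict[str, float]:
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)  # malware caught
    fp = sum(t != positive and p == positive for t, p in pairs)  # benign flagged as malware
    fn = sum(t == positive and p != positive for t, p in pairs)  # malware missed
    tn = len(pairs) - tp - fp - fn
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


y_true = ["malicious", "malicious", "malicious", "benign", "benign"]
y_pred = ["malicious", "malicious", "benign", "benign", "malicious"]
print(binary_metrics(y_true, y_pred))
```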

E. Malware Classification

Here, we aimed to distinguish the malware families from one another based on their features. Because benign samples make up half of the dataset while each malware family accounts for only about one sixth of it, the data was highly imbalanced, so we applied additional preprocessing before training the detection models. We conducted the classification under three settings:

• Classifying the original data: We trained different ML models on the data with no steps beyond our usual preprocessing, i.e., the same data used in binary classification, and stored the metric scores for comparison.

• Undersampling the majority class: As "Benign" was the majority class in the malware dataset, we undersampled it using several methods, namely the Edited Nearest Neighbor Rule [15], the Near Miss Rule [16], Random Undersampling, and All KNN Undersampling.

• Oversampling the minority classes: We used the ADASYN [17] method to generate synthetic data points for all minority classes.
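Random undersampling and ADASYN can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the authors' pipeline or the imbalanced-learn API: ADASYN is shown for one minority class against all other samples (to be repeated per minority class in the multi-class setting), distances are Euclidean, the uniform fallback when no minority point has non-minority neighbors is our own choice, and all function names are ours. The Edited Nearest Neighbor, Near Miss, and All KNN rules are not sketched.

```python
import math
import random
from typing import List, Sequence, Tuple

Row = List[float]


def random_undersample(X: List[Row], y: List[int], majority: int, keep: int,
                       seed: int = 0) -> Tuple[List[Row], List[int]]:
    """Keep `keep` randomly chosen majority samples and every other sample."""
    rng = random.Random(seed)
    chosen = set(rng.sample([i for i, lab in enumerate(y) if lab == majority], keep))
    idx = [i for i, lab in enumerate(y) if lab != majority or i in chosen]
    return [X[i] for i in idx], [y[i] for i in idx]


def adasyn(X: List[Row], y: List[int], minority: int, n_new: int,
           k: int = 5, seed: int = 0) -> List[Row]:
    """Generate about `n_new` synthetic minority points, focusing on hard-to-learn ones."""
    rng = random.Random(seed)
    min_idx = [i for i, lab in enumerate(y) if lab == minority]
    if len(min_idx) < 2:
        raise ValueError("ADASYN needs at least two minority samples")

    def nearest(i: int, pool: Sequence[int]) -> List[int]:
        return sorted((j for j in pool if j != i), key=lambda j: math.dist(X[i], X[j]))[:k]

    # 1) Difficulty r_i: share of non-minority points among each sample's k nearest neighbors.
    ratios = []
    for i in min_idx:
        nn = nearest(i, range(len(X)))
        ratios.append(sum(y[j] != minority for j in nn) / len(nn))
    total = sum(ratios)
    # 2) Normalize to a distribution (uniform fallback if every point is "easy"; our choice).
    weights = [r / total for r in ratios] if total > 0 else [1 / len(min_idx)] * len(min_idx)
    synthetic: List[Row] = []
    for i, w in zip(min_idx, weights):
        neighbors = nearest(i, min_idx)  # 3) interpolate toward minority neighbors only
        for _ in range(round(w * n_new)):
            j = rng.choice(neighbors)
            lam = rng.random()
            synthetic.append([a + lam * (b - a) for a, b in zip(X[i], X[j])])
    return synthetic


X = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [0.5, 0.5],
     [10.0, 10.0], [10.0, 11.0], [1.5, 1.5]]
y = [0, 0, 0, 0, 0, 1, 1, 1]
Xu, yu = random_undersample(X, y, majority=0, keep=3)
print(len(Xu), yu.count(0))  # 6 3
new_points = adasyn(X, y, minority=1, n_new=10, k=3)
print(len(new_points))  # 10
```

In this toy data the minority point at (1.5, 1.5), which sits among majority samples, receives most of the synthetic points; this adaptive weighting toward hard samples is what distinguishes ADASYN from uniform interpolation.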

After applying these techniques, the sizes of our training data (80% of the total data) are as shown in TABLE I. We then used different machine learning algorithms to train our detection models and recorded their performance.