In traditional blockchains, blocks propagate through a peer-to-peer network in which new blocks and transactions are broadcast to all participating nodes, usually by sending them to each peer in turn or by flooding them across the network. This approach does not scale well: as the network grows, the sheer number of messages makes it increasingly difficult to manage.

Consider a network of 35,000 validators in which the leader needs to transmit a 128 MB block (about 500,000 transactions at 250 bytes per transaction) to every validator. A traditional block propagation implementation would require the leader to hold a separate connection to each validator and transmit the full 128 MB 35,000 times, for a total of roughly 4.7 TB. That is far beyond typical bandwidth capabilities, and maintaining so many connections would be infeasible.
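
The arithmetic behind this estimate is easy to check. The sketch below assumes “MB” means mebibytes (2^20 bytes) and reports terabytes in decimal units (10^12 bytes); under those assumptions the naive approach moves about 4.7 TB. `naive_broadcast_bytes` is a hypothetical helper, not part of any Solana API.

```python
MIB = 2**20  # assumption: "MB" in the example means mebibytes


def naive_broadcast_bytes(block_bytes: int, validators: int) -> int:
    """Total bytes the leader uploads if it sends the full block to every validator directly."""
    return block_bytes * validators


block_bytes = 128 * MIB
total = naive_broadcast_bytes(block_bytes, 35_000)
print(f"raw transaction data: {500_000 * 250 / 1e6:.0f} MB")  # 125 MB, i.e. "about 128 MB"
print(f"naive broadcast: {total / 1e12:.2f} TB")              # decimal terabytes
```

The cost grows linearly with the number of validators, which is exactly the scaling problem Turbine is designed to avoid.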

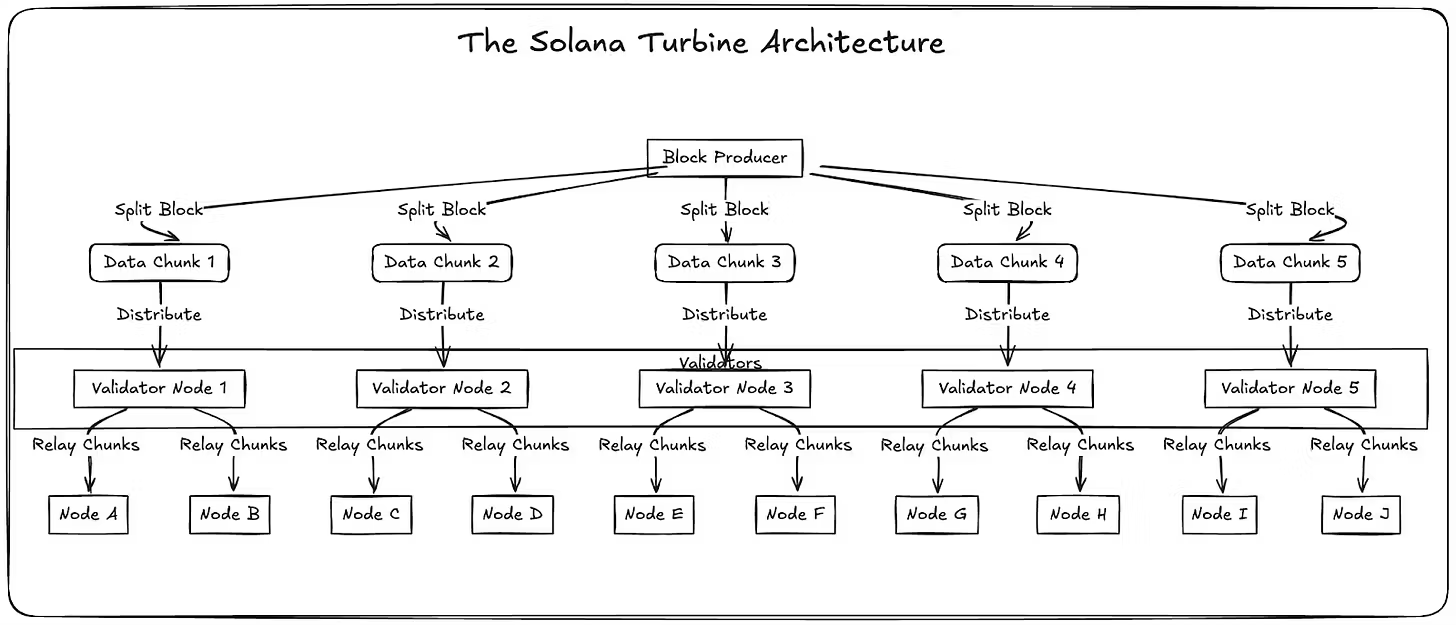

To solve the problem of slow block dissemination, Solana introduced Turbine, a multi-layer block propagation mechanism that clusters use to broadcast ledger entries to all nodes. Broadly speaking, Turbine breaks each block into smaller pieces and relays them through a structured hierarchy of nodes, so that no single node has to send the entire block to everyone.

What is Turbine?

Turbine’s architecture was heavily inspired by BitTorrent. Both rely on data chunking (breaking large data into smaller pieces) and use a peer-to-peer network to disseminate that data. Turbine, however, is optimized for streaming: it transmits data exclusively over UDP, which provides latency benefits, and each packet takes a random path through the network as leaders (block producers) stream their data. This fast block propagation allows Solana to maintain its high throughput.

Moreover, Turbine addresses the issue of data availability, ensuring that all nodes can access the data they need to validate transactions efficiently. It does so without requiring an enormous amount of bandwidth, a common bottleneck in other blockchain networks.

The block propagation process of Turbine

Before a block is propagated, the leader builds and orders it from the incoming stream of transactions. Once built, the block is sent via Turbine to the rest of the network; this step is referred to as block propagation. Validators then exchange messages (votes), which are themselves carried in block data, until the block reaches the commitment status “confirmed” or “finalized”.

While leaders build and propose entire blocks, the actual data is sent as shreds (partial blocks) to other validators in the network. Shreds are the atomic units sent between validators. This process of shredding and propagation ensures a swift and efficient distribution of block data across Solana, maintaining high throughput and network security.
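
Shredding itself amounts to cutting the serialized block into fixed-size pieces that can be put back together in order. In this sketch, the `Shred` fields, the payload size, and the helper names are hypothetical; real Solana shreds carry additional header fields (such as a slot and a signature) and are also erasure-coded before sending.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Shred:
    index: int           # position of this shred within the block
    payload: bytes       # slice of the serialized block
    last_in_block: bool  # marks the final shred so receivers know the block is complete


def shred_block(block: bytes, payload_size: int) -> list[Shred]:
    """Split a serialized block into fixed-size shreds (the last one may be shorter)."""
    if payload_size <= 0:
        raise ValueError("payload_size must be positive")
    chunks = [block[i:i + payload_size] for i in range(0, len(block), payload_size)] or [b""]
    return [Shred(i, chunk, i == len(chunks) - 1) for i, chunk in enumerate(chunks)]


def reassemble(shreds) -> bytes:
    """Rebuild the block from shreds received in any order."""
    ordered = sorted(shreds, key=lambda s: s.index)
    complete = (
        ordered
        and [s.index for s in ordered] == list(range(len(ordered)))
        and ordered[-1].last_in_block
    )
    if not complete:
        raise ValueError("missing shreds; block cannot be rebuilt yet")
    return b"".join(s.payload for s in ordered)
```

Because each shred carries its own index, validators can relay and store shreds independently and still restore the original block order.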

Note: A confirmed block has received a supermajority of ledger votes, whereas a finalized block has been confirmed and has 31+ confirmed blocks built atop the target block.
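
The note’s two rules can be expressed as a small classifier. This is a hypothetical sketch, not Solana’s RPC logic: it assumes votes are weighted by stake and that a “supermajority” means strictly more than two-thirds of total stake, and the label `"unconfirmed"` is only a placeholder for anything below that threshold.

```python
from fractions import Fraction

# Assumption: "supermajority" = strictly more than 2/3 of total stake.
SUPERMAJORITY = Fraction(2, 3)
FINALITY_DEPTH = 31  # confirmed blocks that must be built on top (per the note)


def commitment_status(voted_stake: int, total_stake: int, confirmed_blocks_on_top: int) -> str:
    """Classify a block using the confirmed/finalized rules described in the note."""
    if Fraction(voted_stake, total_stake) <= SUPERMAJORITY:
        return "unconfirmed"  # placeholder label for this sketch
    if confirmed_blocks_on_top >= FINALITY_DEPTH:
        return "finalized"
    return "confirmed"
```

Using `Fraction` keeps the two-thirds comparison exact, so a block with exactly 2/3 of the stake is not mistakenly counted as a supermajority.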

Erasure Coding

Before being sent to the Turbine Tree, shreds are encoded with Reed-Solomon erasure coding, a data protection method based on error-correcting codes. It protects against data loss caused by failures or errors by dividing data into smaller pieces and computing extra, redundant pieces from them, so that the original data can be rebuilt even if some pieces never arrive.
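
Reed-Solomon codes can be illustrated with polynomial evaluation. The sketch below is a toy systematic Reed-Solomon code over the prime field of size 257, assuming one small integer (for example, one byte) per shred: the data symbols are treated as the values of a polynomial at positions 0 to k-1, recovery symbols are that polynomial’s values at further positions, and any k surviving symbols determine the polynomial again through Lagrange interpolation. Production implementations usually work over GF(2^8) and process whole shred payloads with much faster algorithms; `encode` and `decode` are hypothetical names, not a library API.

```python
P = 257  # prime modulus: byte values 0..255 fit, recovery symbols may also equal 256


def _interpolate(points: list[tuple[int, int]], x: int) -> int:
    """Evaluate at x the unique polynomial of degree < len(points) through `points`, mod P."""
    total = 0
    for i, (xi, yi) in enumerate(points):
        num, den = 1, 1
        for j, (xj, _) in enumerate(points):
            if i != j:
                num = num * (x - xj) % P
                den = den * (xi - xj) % P
        total = (total + yi * num * pow(den, P - 2, P)) % P  # pow(..., P-2, P) = modular inverse
    return total


def encode(data: list[int], num_recovery: int) -> list[int]:
    """Return the data symbols followed by `num_recovery` recovery symbols."""
    k = len(data)
    points = list(enumerate(data))  # data[i] is the polynomial's value at x = i
    recovery = [_interpolate(points, x) for x in range(k, k + num_recovery)]
    return list(data) + recovery


def decode(received: dict[int, int], k: int) -> list[int]:
    """Rebuild the k data symbols from any k surviving {position: symbol} entries."""
    if len(received) < k:
        raise ValueError("not enough symbols to recover the data")
    points = list(received.items())[:k]
    return [_interpolate(points, x) for x in range(k)]
```

For example, `encode([10, 20, 30, 40], 4)` produces eight symbols, and `decode` restores the original four from any four of them, which is the property the 32:32 scheme relies on at a larger scale.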

Turbine relies heavily on retransmission of packets between validators, any of which could rebroadcast incorrect or incomplete data. Retransmission also compounds network-wide packet loss: every additional hop increases the probability that a packet fails to reach its destination. Erasure coding offsets this. For instance, if a leader transmits 20% of the block’s packets as erasure codes, the network can drop any 20% of the packets without losing the block. Leaders can dynamically adjust this ratio, the forward error correction (FEC) rate, based on network conditions such as recently observed network-wide packet loss and tree depth.
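
A simple model shows why loss compounds across hops and why recovery shreds help. It assumes each packet is lost independently with the same probability, which real networks do not guarantee, and the helper names are hypothetical.

```python
from math import comb


def delivery_probability(per_hop_loss: float, hops: int) -> float:
    """Chance that one packet survives every hop of its path (independent losses)."""
    return (1 - per_hop_loss) ** hops


def recovery_probability(data_shreds: int, coding_shreds: int, shred_loss: float) -> float:
    """Chance that at least `data_shreds` of the group's shreds arrive, enough to rebuild it."""
    n = data_shreds + coding_shreds
    p_ok = 1 - shred_loss
    return sum(comb(n, r) * p_ok**r * shred_loss**(n - r) for r in range(data_shreds, n + 1))


print(delivery_probability(0.05, 3))       # one packet over a 3-hop path at 5% loss per hop
print(recovery_probability(32, 0, 0.01))   # no recovery shreds, 1% loss per shred
print(recovery_probability(32, 32, 0.20))  # 32:32 group, 20% loss per shred
```

Without recovery shreds, a 1% per-shred loss already leaves only about a 72% chance that all 32 data shreds arrive, whereas a 32:32 group survives even 20% loss with near certainty.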

In Solana, data shreds are partial blocks taken from the original block built by the leader, and recovery shreds are the erasure-coded pieces generated by Reed-Solomon. Blocks on Solana typically use an FEC ratio of 32:32, meaning each group of 32 data shreds is accompanied by 32 recovery shreds, so any 32 of the 64 packets can be lost with no need to retransmit.
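
Grouping data shreds into 32:32 shred groups is straightforward bookkeeping. In this sketch, `fec_sets` and `tolerated_losses` are hypothetical helpers, and the handling of a final, partially filled group (keeping the same data-to-recovery ratio, rounded up) is an assumption rather than Solana’s actual rule.

```python
import math


def fec_sets(num_data_shreds: int, data_per_set: int = 32,
             coding_per_set: int = 32) -> list[tuple[int, int]]:
    """Split data shreds into groups and return (data, recovery) shred counts per group.

    Assumption: a final partial group keeps the same data:recovery ratio, rounded up.
    """
    sets = []
    for start in range(0, num_data_shreds, data_per_set):
        data = min(data_per_set, num_data_shreds - start)
        recovery = math.ceil(data * coding_per_set / data_per_set)
        sets.append((data, recovery))
    return sets


def tolerated_losses(fec_set: tuple[int, int]) -> int:
    """Any `recovery` shreds of the group may be lost and the data can still be rebuilt."""
    _, recovery = fec_set
    return recovery


groups = fec_sets(100)
print(groups)                          # [(32, 32), (32, 32), (32, 32), (4, 4)]
print(sum(d + r for d, r in groups))   # 200 packets on the wire for 100 data shreds
```

The 32:32 ratio doubles the number of packets sent, which is the bandwidth price paid for tolerating the loss of half of every group.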

Turbine Tree

Solana uses a Turbine Tree, a structured network topology, to propagate shreds efficiently among validators. Once shreds have been encoded into their respective shred groups, they are disseminated through the Turbine Tree to bring the other validators in the network up to date with the latest state.

Because the Turbine Tree is known to every validator, each validator knows exactly which peers it is responsible for relaying a given shred to. Given the current DATA_PLANE_FANOUT value of 200, the Turbine Tree is typically 2 or 3 hops deep, depending on the number of active validators.
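
The tree layout can be sketched as follows. This is a simplified model, not Solana’s implementation: every validator sorts the same validator list and shuffles it with a seed derived from the shred, so all nodes independently arrive at the same order; position 0 is the root the leader sends to, and the node at position i relays to positions i·F+1 through i·F+F, where F is the fanout. Solana’s real ordering is stake-weighted and its exact indexing may differ, the leader-to-root send is counted as one hop by assumption, and `turbine_order`, `children`, and `hops_needed` are hypothetical names.

```python
import random


def turbine_order(validators: list[str], shred_seed: int) -> list[str]:
    """Deterministic per-shred ordering that every node can compute on its own."""
    order = sorted(validators)                # canonical starting order
    random.Random(shred_seed).shuffle(order)  # same seed -> same order on every node
    return order


def children(index: int, fanout: int, num_nodes: int) -> list[int]:
    """Positions the node at `index` relays the shred to."""
    first = index * fanout + 1
    return list(range(first, min(first + fanout, num_nodes)))


def hops_needed(num_nodes: int, fanout: int) -> int:
    """Hops from the leader until every node has the shred (leader -> root counts as one)."""
    hops, covered, layer_size = 1, 1, 1
    while covered < num_nodes:
        layer_size *= fanout
        covered += layer_size
        hops += 1
    return hops


DATA_PLANE_FANOUT = 200
print(hops_needed(35_000, DATA_PLANE_FANOUT))  # 3
print(hops_needed(150, DATA_PLANE_FANOUT))     # 2
```

With a fanout of 200, the root plus two layers already cover 1 + 200 + 40,000 nodes, which is why even the 35,000-validator example needs only three hops.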

If nodes do not receive enough shreds to reconstruct a block, for example because the loss rate exceeds the FEC rate, they can fall back on gossip and repair. Under the current implementation, a node lacking enough shreds to reconstruct the block sends the leader a request for retransmission. Under deterministic Turbine, any node that has received the full block can send the requesting node the repair shreds it needs, pushing data transmission further down to the parts of the tree that are requesting data.
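
A receiving node can work out how many more shreds it needs before falling back on repair. The sketch below assumes a single 32:32 shred group whose positions are numbered with data shreds first (0 to 31) and recovery shreds after (32 to 63), and that any 32 distinct shreds suffice to rebuild the group, as with Reed-Solomon coding. `repair_request` and its policy of asking for missing data shreds first are hypothetical, not Solana’s repair protocol.

```python
def repair_request(received: set[int], data_per_set: int = 32) -> list[int]:
    """Return the shred positions to request, or [] if the group is already recoverable.

    Assumes any `data_per_set` distinct shreds from the group are enough to rebuild it.
    """
    shortfall = data_per_set - len(received)
    if shortfall <= 0:
        return []  # enough shreds: erasure decoding can fill the gaps locally
    # Hypothetical policy: request missing data shreds, just enough to cover the shortfall.
    missing_data = [i for i in range(data_per_set) if i not in received]
    return missing_data[:shortfall]
```

A node holding 20 shreds of a 32:32 group therefore asks for exactly 12 more, while a node holding any 32 shreds needs no repair at all.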

Conclusion

In this report, we examined Solana’s Turbine block propagation mechanism, which breaks large blocks into smaller shreds to distribute data efficiently across the network. We highlighted how erasure coding lets validators recover blocks despite packet loss and how the Turbine Tree enables fast transmission among validators.