DeepSeek has released DeepSeek-Prover-V2 a new open-source large language model specifically designed for formal theorem proving in Lean 4. The model builds on a recursive theorem proving pipeline powered by the company’s DeepSeek-V3 foundation model. Lean 4 represents the latest version of the Lean theorem prover, an interactive proof assistant developed by Microsoft Research. This functional programming language and interactive theorem proving system allows mathematicians and computer scientists to write formal proofs with machine-checked verification.

The project represents a step toward bridging formal and informal mathematical reasoning, using general-purpose LLMs’ capabilities to tackle the structured domain of formal theorem proving. According to the researchers, their approach mirrors human proof construction methodology by breaking complex theorems into more manageable components.

The DeepSeek research team has expanded their evaluation framework with a new benchmark collection designed specifically for formal theorem proving assessment.

“In addition to standard benchmarks, we introduce ProverBench, a collection of 325 formalized problems, to enrich our evaluation, including 15 selected problems from the recent American Invitational Mathematics Examination (AIME) competitions (years 24-25),” the researchers explained.

Figure 1 | Source: ProverBench – Formalization of AIME and Textbook Problems

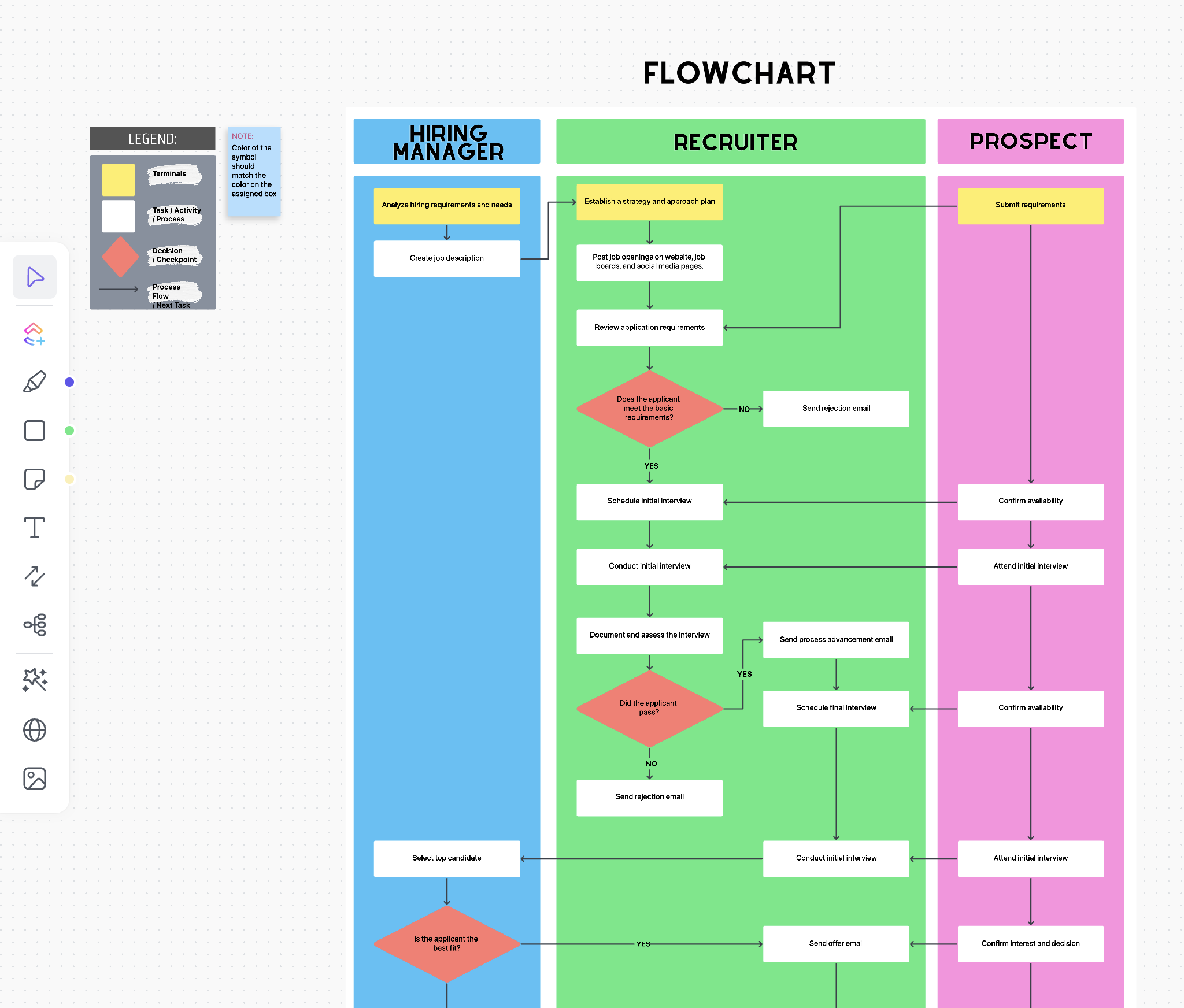

Initial results from testing on these AIME problems demonstrate promising performance from their specialized theorem proving model. The team reports that DeepSeek-Prover-V2 successfully solved 6 of the 15 AIME problems, while the general-purpose DeepSeek-V3 model solved 8 when using majority voting techniques.

Figure 2 | Source: Benchmark performance of DeepSeek-Prover-V2

Figure 3 | Source: Decomposed sub-goals into a series of lemma statements

“Since general-purpose models are known to struggle with producing complete Lean proofs, we instruct DeepSeek-V3 to generate only a high-level proof sketch with the details omitted. The resulting chain of thought culminates in a Lean theorem composed of a sequence of have statements, each concluded with a sorry placeholder indicating a subgoal to be solved. This approach mirrors the human style of proof construction, in which a complex theorem is incrementally reduced to a sequence of more manageable lemmas,” the team described.

The system then employs a methodical strategy to address each component of the proof individually, creating a structured approach to theorem proving that builds upon previously established results.

“Leveraging the subgoals generated by DeepSeek-V3, we adopt a recursive solving strategy to systematically resolve each intermediate proof step. We extract subgoal expressions from have statements to substitute them for the original goals in the given problems (see Figure 3(a)), and then incorporate the preceding subgoals as premises (see Figure 3(b)). This construction enables subsequent subgoals to be resolved using the intermediate results of earlier steps, thereby promoting a more localized dependency structure and facilitating the development of simpler lemmas,” the researchers detailed.

To optimize computational resources, the system employs a smaller specialized 7B parameter model for processing the decomposed lemmas, which helps manage the computational demands of extensive proof searches. The approach culminates in an automatically derived complete proof when all decomposed steps are successfully resolved.

“The algorithmic framework operates in two stages, leveraging two complementary models: DeepSeek-V3 for lemma decomposition and a 7B prover model to complete the corresponding formal proof details,” the researchers described.

This architecture establishes a natural pathway for synthesizing formal reasoning data, effectively merging high-level mathematical reasoning with rigorous formal verification requirements.

“We curate a subset of challenging problems that remain unsolved by the 7B prover model in an end-to-end manner, but for which all decomposed sub-goals have been successfully resolved. By composing the proofs of all sub-goals, we construct a complete-formal proof for the original problem,” the researchers explained.

Despite the technical achievements, some experts have expressed concerns about the implementation details. Elliot Glazer, Lead mathematician at Epoch AI, pointed out potential issues with the research:

Some concerns about the DeepSeek-Prover-V2 paper. Potentially misformalized examples, and discussion on the Lean zulip suggests the PutnamBench proofs are nonsense and use an implicit sorry (possibly hidden in the apply? tactic) not reported in their read-eval-print-loop.

These concerns highlight the ongoing challenges in the formal verification space, where precise implementation details can significantly impact the validity of results.

DeepSeek has released their Prover-V2 in two different model sizes: a 7B parameter version built upon their previous Prover-V1.5-Base featuring an extended context length of up to 32K tokens, and a much larger 671B parameter version trained on DeepSeek-V3-Base. Both models are now available on HuggingFace, along with the full ProverBench dataset containing 325 formalized problems for evaluation purposes. Researchers and developers interested in formal theorem proving can access these resources to explore the capabilities and limitations of this technology and potentially contribute to addressing the concerns raised by experts in the field.