Table of Links

Abstract and 1. Introduction

-

Methods

2.1 Tokenizer analysis

2.2 Indicators for detecting under-trained tokens and 2.3 Verification of candidate tokens

-

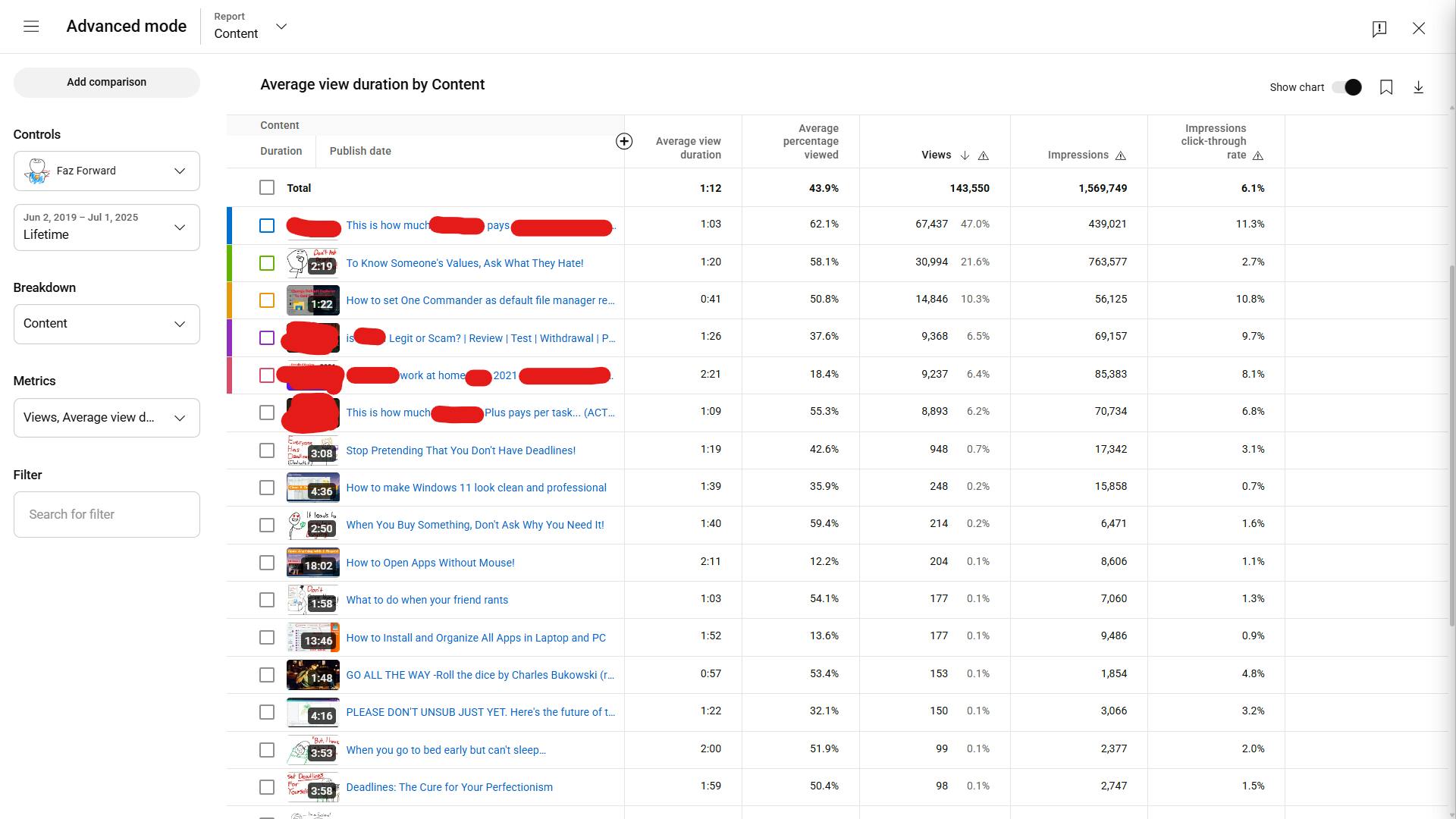

Results

3.1 Effectiveness of indicators and verification

3.2 Common observations

3.3 Model-specific observations

-

Closed-source models

-

Discussion, Acknowledgments, and References

A. Verification details

B. A short primer on UTF-8 encoding

C. Outputs for API-based verification

3.2 Common observations

Although many of our findings are dependent on model-specific details such as tokenizer training and configuration, model architecture, and training data, there are a number of commonalities that appear across many different model families.

3.2.1 Single-byte tokens

Tokens representing a single byte are a common source of untrained tokens. The most common cases are the ‘fallback’ bytes 0xF5–0xFF, which are not used in UTF-8 encoded text[2] and are a convenient source for quickly locating reference untrained tokens for indicators that require them. In addition, many tokenizers, including those of the Gemma, Llama2 and Mistral families, include every byte as a token but also assign a duplicate token to many characters in the normal ASCII range 0x00–0x7F. For example, in the Gemma models ‘A’ is both token 282, an unused byte-fallback token, and token 235280, a text-based ‘A’. These issues are not universal: we also find models that include precisely the 243 bytes used in UTF-8 as tokens, including the models by EleutherAI [14]. Untrained single-byte tokens are typically classified as ‘partial UTF-8 sequences’ or ‘unreachable’, and our indicators are effective in revealing which ones are never or rarely seen in training. Tables showing the status of each single-byte token for each analyzed model are published in our repository.
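The figure of 243 usable bytes, and the split between complete ASCII characters and partial sequences, can be checked directly. The sketch below is illustrative and is not the analysis code from our repository. It encodes every valid code point to collect the set of bytes UTF-8 can produce, assigns each byte a rough status, and looks for ASCII characters that have both a byte-fallback token and a text token. The function names, the status labels, and the toy vocabulary with its made-up IDs are all hypothetical. The `<0xNN>` piece naming is an assumption based on the SentencePiece byte-fallback convention.

```python
"""Sketch: which single-byte tokens can ever be reached from UTF-8 text?"""


def bytes_used_by_utf8() -> set[int]:
    """Collect every byte value that occurs when encoding all valid code points.

    Surrogates (U+D800-U+DFFF) are skipped because they cannot be
    encoded as UTF-8 on their own.
    """
    text = "".join(
        chr(cp) for cp in range(0x110000) if not 0xD800 <= cp <= 0xDFFF
    )
    return set(text.encode("utf-8"))


def classify_byte(b: int, used: set[int]) -> str:
    """Hypothetical rough status of a single-byte token."""
    if b not in used:
        return "never used in UTF-8"
    if b < 0x80:
        return "complete ASCII character"
    # Lead or continuation byte: cannot be decoded on its own.
    return "partial UTF-8 sequence"


def byte_fallback_duplicates(vocab: dict[str, int]) -> dict[str, tuple[int, int]]:
    """Find ASCII characters that have both a byte-fallback and a text token.

    Assumes byte-fallback pieces are named like '<0x41>' (SentencePiece style).
    Returns {char: (byte_token_id, text_token_id)}.
    """
    dups = {}
    for b in range(0x80):
        byte_piece = f"<0x{b:02X}>"
        char = chr(b)
        if byte_piece in vocab and char in vocab:
            dups[char] = (vocab[byte_piece], vocab[char])
    return dups


used = bytes_used_by_utf8()
unused = sorted(set(range(256)) - used)
print(len(used), len(unused))  # 243 13
print([f"0x{b:02X}" for b in unused])  # 0xC0, 0xC1, then 0xF5 through 0xFF
for b in (0x41, 0xE2, 0xF5):
    print(f"0x{b:02X}:", classify_byte(b, used))

# Hypothetical toy vocabulary; IDs are made up for illustration only.
toy_vocab = {"<0x41>": 0, "A": 1, "<0xF5>": 2, "B": 3}
print(byte_fallback_duplicates(toy_vocab))  # {'A': (0, 1)}
```

Besides 0xF5–0xFF, the bytes 0xC0 and 0xC1 also never occur, because they could only begin overlong two-byte encodings. That accounts for the 13 missing bytes. In a tokenizer with such duplicates, the byte-fallback token for ‘A’ would normally not be produced when encoding text, because ‘A’ is encoded as the text token instead.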

3.2.2 Fragments of merged tokens

3.2.3 Special tokens

Many models include untrained special tokens, such as <pad>, <unk>, or <|unused_123|>. We generally omit them from the following discussion unless their status as an (un)trained token is particularly surprising. Their presence in the tokenizer and in the training data is typically deliberate, for purposes such as being able to fine-tune models without changing tokenizers. One common observation is that tokens such as <mask>, which we expect to be completely untrained, often nevertheless appear to have been seen in training. A likely explanation is that code repositories or guides about language models use these tokens in normal text, combined with tokenizers that allow such special control tokens to be recognized in normal input text.
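The mechanism suggested above depends on whether a tokenizer maps literal special-token strings in ordinary text to their control-token IDs. The minimal sketch below illustrates this under stated assumptions. `split_special`, its `allow_special` switch, and the toy token IDs are hypothetical and do not reproduce any particular library's API. When matching is allowed, a sentence from a language-model tutorial that mentions `<mask>` produces the `<mask>` ID. A model trained on such text would then see that token.

```python
import re
from typing import Union


def split_special(
    text: str, special_tokens: dict[str, int], allow_special: bool
) -> list[Union[str, int]]:
    """Split text into plain-text segments and special-token IDs.

    Hypothetical helper: if allow_special is True, every literal occurrence
    of a special-token string (e.g. '<mask>') becomes that token's ID.
    Otherwise the whole text is returned as ordinary text to be tokenized
    normally (typically into several regular tokens).
    """
    if not allow_special or not special_tokens:
        return [text]
    # Try longer strings first so overlapping names match the longest one.
    names = sorted(special_tokens, key=len, reverse=True)
    pattern = "|".join(re.escape(name) for name in names)
    parts: list[Union[str, int]] = []
    pos = 0
    for m in re.finditer(pattern, text):
        if m.start() > pos:
            parts.append(text[pos:m.start()])
        parts.append(special_tokens[m.group()])
        pos = m.end()
    if pos < len(text):
        parts.append(text[pos:])
    return parts


# Toy special-token table; the IDs are made up for illustration.
specials = {"<pad>": 0, "<mask>": 4}
doc = "Replace a word with <mask> before training."
print(split_special(doc, specials, allow_special=True))
# ['Replace a word with ', 4, ' before training.']
print(split_special(doc, specials, allow_special=False))
# ['Replace a word with <mask> before training.']
```

The only difference between the two calls is the switch. Under this assumption, an ‘untrained’ control token can receive gradient updates whenever documentation or code that mentions it is included in the training data.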

[2] See Appendix B for a primer on UTF-8 encoding.

[3] When mentioning fragments of more complete tokens, the tokens in parentheses were not detected or verified as under-trained, unless explicitly mentioned otherwise.