Table of Links

Abstract and 1 Introduction

2 Related Work

3 Model and 3.1 Associative memories

3.2 Transformer blocks

4 A New Energy Function

4.1 The layered structure

5 Cross-Entropy Loss

6 Empirical Results and 6.1 Empirical evaluation of the radius

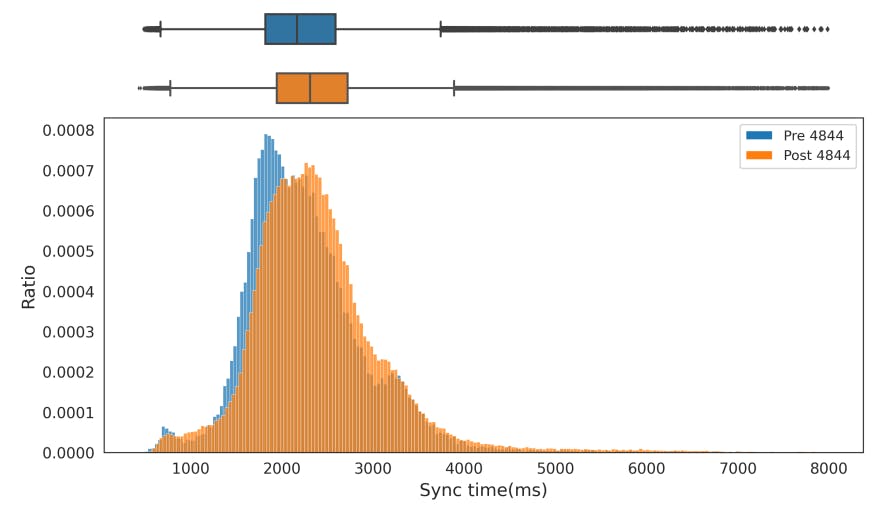

6.2 Training GPT-2

6.3 Training Vanilla Transformers

7 Conclusion and Acknowledgments

Appendix A. Deferred Tables

Appendix B. Some Properties of the Energy Functions

Appendix C. Deferred Proofs from Section 5

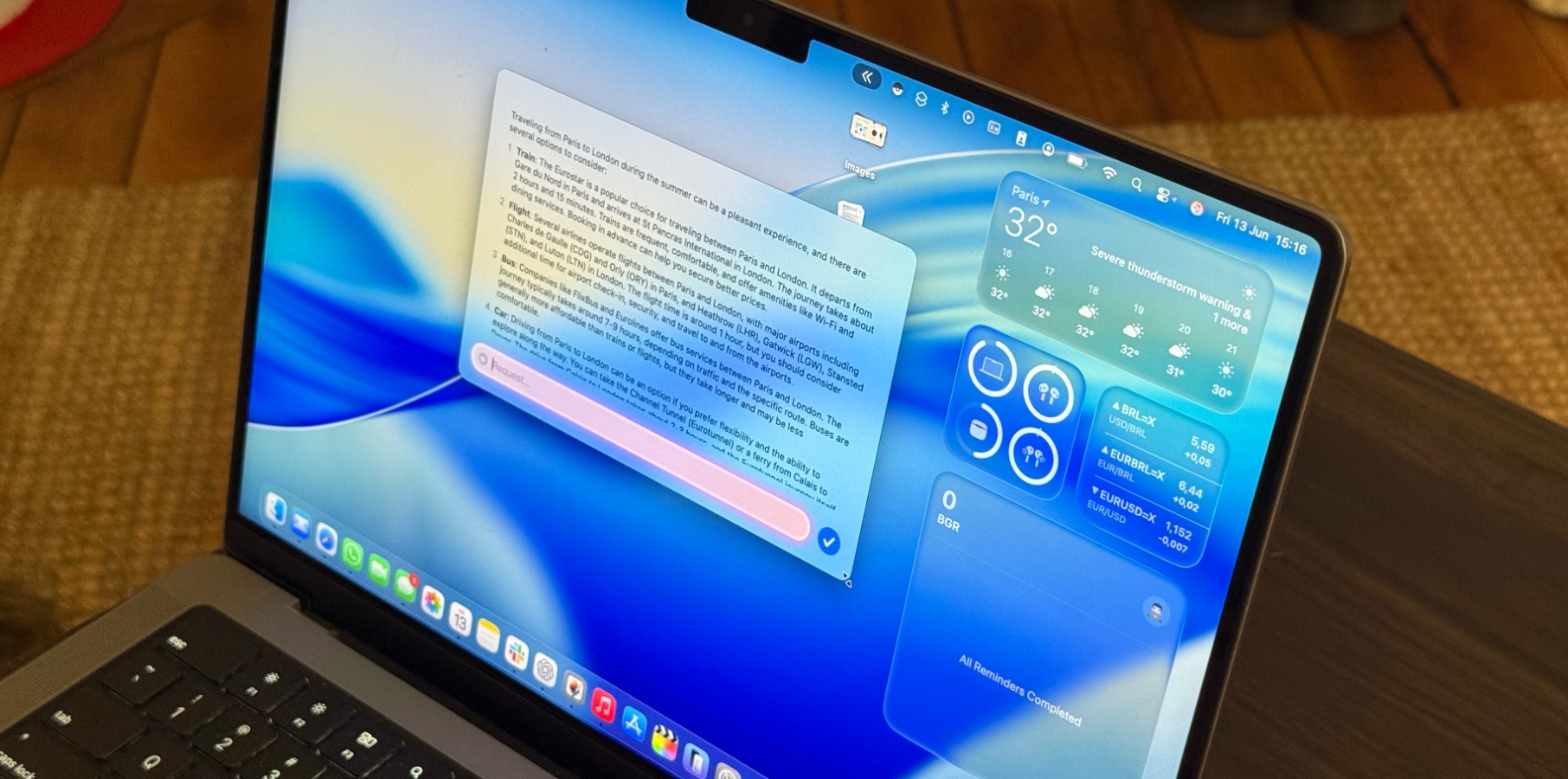

Appendix D. Transformer Details: Using GPT-2 as an Example

References

Abstract

Increasing the size of a Transformer model does not always lead to enhanced performance. This phenomenon cannot be explained by the empirical scaling laws. Furthermore, improved generalization ability occurs as the model memorizes the training samples. We present a theoretical framework that sheds light on the memorization process and performance dynamics of transformer-based language models. We model the behavior of Transformers with associative memories using Hopfield networks, such that each transformer block effectively conducts an approximate nearest-neighbor search. Based on this, we design an energy function analogous to that of the modern continuous Hopfield network, which provides an insightful explanation for the attention mechanism. Using the majorization-minimization technique, we construct a global energy function that captures the layered architecture of the Transformer. Under specific conditions, we show that the minimum achievable cross-entropy loss is bounded from below by a constant approximately equal to 1. We substantiate our theoretical results by conducting experiments with GPT-2 across various data sizes, as well as by training vanilla Transformers on a dataset of 2M tokens.

1 Introduction

Transformer-based neural networks have exhibited powerful capabilities in accomplishing a myriad of tasks such as text generation, editing, and question answering. These models are rooted in the Transformer architecture (Vaswani et al., 2017), which employs the self-attention mechanism to capture the context in which words appear, resulting in a superior ability to handle long-range dependencies and improved training efficiency. In many cases, models with more parameters achieve better performance, measured both by perplexity (Kaplan et al., 2020) and by accuracy on end tasks (Khandelwal et al., 2019; Rae et al., 2021; Chowdhery et al., 2023). As a result, industry has been developing ever larger models. Recent models (Smith et al., 2022) reach up to 530 billion parameters and are trained on hundreds of billions of tokens with more than 10K GPUs.

Nevertheless, bigger models do not always perform better. For example, the 2B-parameter model MiniCPM (Hu et al., 2024) exhibits capabilities comparable to those of larger language models such as Llama2-7B (Touvron et al., 2023), Mistral-7B (Jiang et al., 2023), Gemma-7B (Banks and Warkentin, 2024), and Llama-13B (Touvron et al., 2023). Moreover, as the computational resources for training larger models grow, the supply of high-quality data may not keep pace. It has been documented that the generalization ability of a range of models increases with the number of parameters and, in some regimes, decreases as the number of training samples increases (Belkin et al., 2019; Nakkiran et al., 2021; d’Ascoli et al., 2020), indicating that generalization in over-parameterized neural networks occurs beyond the memorization of training samples (Power et al., 2022). It is therefore crucial to understand the convergence dynamics of the training loss during memorization, in relation to both the model size and the dataset at hand.

There has been increasing interest in empirical scaling laws under constraints on the size of the training dataset (Muennighoff et al., 2024). Let N denote the number of parameters of the model and D the size of the dataset. Extensive experiments have led to the following empirical scaling laws (Kaplan et al., 2020) for model performance, measured by the test cross-entropy loss L on held-out data:

$$L(N) = \left(\frac{N_c}{N}\right)^{\alpha_N}, \qquad L(D) = \left(\frac{D_c}{D}\right)^{\alpha_D},$$

where N_c, D_c, α_N, and α_D are constants fitted to the experimental data.
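To make the shape of these power laws concrete, the Python sketch below evaluates L(N) = (N_c/N)^α_N and L(D) = (D_c/D)^α_D. The constants are the fits reported by Kaplan et al. (2020), where N counts non-embedding parameters, D counts training tokens, and the loss is in nats per token; they are quoted only for illustration and are not results of this paper. The function names `loss_from_params` and `loss_from_tokens` are hypothetical helpers. Each law decreases monotonically and approaches zero as N or D grows, so on its own neither formula accounts for performance that stops improving as models get larger.

```python
# Illustrative constants as reported by Kaplan et al. (2020).
# N: non-embedding parameters, D: training tokens. Hypothetical helper names.
N_C, ALPHA_N = 8.8e13, 0.076
D_C, ALPHA_D = 5.4e13, 0.095


def loss_from_params(n_params: float) -> float:
    """L(N) = (N_c / N)^alpha_N, assuming data is not the bottleneck."""
    return (N_C / n_params) ** ALPHA_N


def loss_from_tokens(n_tokens: float) -> float:
    """L(D) = (D_c / D)^alpha_D, assuming model size is not the bottleneck."""
    return (D_C / n_tokens) ** ALPHA_D


if __name__ == "__main__":
    # The power law keeps shrinking with N: there is no built-in floor.
    for n in (1e8, 1e9, 1e10, 1e11):
        print(f"N = {n:.0e}: L(N) = {loss_from_params(n):.3f} nats/token")
    for d in (1e9, 1e10, 1e11):
        print(f"D = {d:.0e}: L(D) = {loss_from_tokens(d):.3f} nats/token")
```

The sketch deliberately evaluates each law separately, matching the two single-variable forms above rather than a joint L(N, D) fit.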

In this paper, we focus on the theoretical aspects of how the achievable performance of transformer-based models, indicated by the pre-training loss, depends on the model and data sizes during memorization. It has been observed that a family of large language models tends to rely on knowledge memorized during training (Hsia et al., 2024), and that the larger the model, the more it tends to encode the training data and to organize its memory according to the similarity of textual context (Carlini et al., 2022; Tirumala et al., 2022). We therefore model the behavior of the Transformer layers with associative memory, which associates an input with a stored pattern; inference then amounts to retrieving the related memories. A model of associative memory known as the Hopfield network was originally developed to retrieve stored binary-valued patterns from part of their content (Amari, 1972; Hopfield, 1982). Recently, the classical Hopfield network has been generalized to continuous-valued data. The generalized model, the Modern Continuous Hopfield Network (MCHN), has been shown to be equivalent to the attention mechanism (Ramsauer et al., 2020). An MCHN can store a number of well-separated patterns that is exponential in the embedding dimension. However, the MCHN only explains an individual Transformer layer and relies heavily on regularization.
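The correspondence between MCHN retrieval and attention can be illustrated with a small pure-Python sketch. It assumes the energy and update rule of Ramsauer et al. (2020) with additive constants dropped: E(ξ) = -β⁻¹ log Σᵢ exp(β xᵢᵀξ) + ½ ξᵀξ and ξ_new = X softmax(β Xᵀξ), where the columns xᵢ of X are the stored patterns. One update is single-query attention in which the stored patterns serve as both keys and values. As β grows, the softmax concentrates on the pattern with the largest dot product, so for equal-norm patterns the step behaves like a nearest-neighbor lookup. The helper names, β value, and toy patterns are illustrative assumptions, not code from this paper.

```python
import math
from typing import List

Vector = List[float]


def dot(u: Vector, v: Vector) -> float:
    return sum(a * b for a, b in zip(u, v))


def softmax(z: Vector) -> Vector:
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(x - m) for x in z]
    s = sum(e)
    return [x / s for x in e]


def logsumexp(z: Vector) -> float:
    m = max(z)
    return m + math.log(sum(math.exp(x - m) for x in z))


def energy(patterns: List[Vector], xi: Vector, beta: float) -> float:
    """MCHN energy up to additive constants:
    E(xi) = -(1/beta) * log sum_i exp(beta * x_i^T xi) + 0.5 * xi^T xi."""
    scores = [beta * dot(x, xi) for x in patterns]
    return -logsumexp(scores) / beta + 0.5 * dot(xi, xi)


def update(patterns: List[Vector], xi: Vector, beta: float) -> Vector:
    """One retrieval step xi_new = X softmax(beta * X^T xi).

    Equivalent to attention for a single query xi whose keys and values are
    both the stored patterns; beta plays the role of the 1/sqrt(d_k) scale."""
    p = softmax([beta * dot(x, xi) for x in patterns])
    dim = len(xi)
    return [sum(p[i] * patterns[i][j] for i in range(len(patterns))) for j in range(dim)]


if __name__ == "__main__":
    stored = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    query = [0.9, 0.2, 0.1]  # a noisy version of the first stored pattern
    retrieved = update(stored, query, beta=8.0)
    print("retrieved:", [round(v, 4) for v in retrieved])
    print("energy before:", energy(stored, query, 8.0))
    print("energy after: ", energy(stored, retrieved, 8.0))
```

In this toy run, one update moves the noisy query close to the first stored pattern and lowers the energy, consistent with the convergence result of Ramsauer et al. (2020) that the update never increases E.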

Transformer-based models consist of a stack of homogeneous layers. The attention and feed-forward layers account for the majority of the parameters and are the key components of each Transformer block. It has been shown that the feed-forward layers can be interpreted as persistent memory vectors, and that the attention and feed-forward sub-layers can be merged into a single all-attention layer without compromising the model’s performance (Sukhbaatar et al., 2019). This merged view integrates the feed-forward layers into the attention layers and thereby greatly simplifies our analysis. Furthermore, the layered structure of transformer networks induces a sequential optimization reminiscent of the majorization-minimization (MM) technique (Ortega and Rheinboldt, 1970; Sun et al., 2016), which has been used extensively in domains such as signal processing and machine learning. Using the MM framework, we construct a global energy function tailored to the layered structure of the transformer network.
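For readers unfamiliar with MM, the principle is: at the current iterate x_k, build a surrogate g(x | x_k) that upper-bounds the objective f everywhere and touches it at x_k, then minimize the surrogate. This gives f(x_{k+1}) ≤ g(x_{k+1} | x_k) ≤ g(x_k | x_k) = f(x_k), so the objective never increases. The Python sketch below applies MM to a toy problem, a smoothed one-dimensional median f(x) = Σᵢ sqrt((x − aᵢ)² + ε), using the concavity of the square root to obtain a quadratic surrogate (the classic iteratively reweighted least-squares scheme). It is a generic illustration of the technique under these assumptions, not the global energy construction used in this paper; the function names and data are hypothetical.

```python
import math
from typing import List


def smoothed_l1(x: float, data: List[float], eps: float) -> float:
    """Objective f(x) = sum_i sqrt((x - a_i)^2 + eps), a smoothed sum of |x - a_i|."""
    return sum(math.sqrt((x - a) ** 2 + eps) for a in data)


def mm_minimize(data: List[float], x0: float, eps: float = 1e-8, iters: int = 50) -> List[float]:
    """Majorization-minimization for smoothed_l1.

    Since sqrt is concave, each term is majorized at x_k by
        sqrt(r_k^2 + eps) + (r^2 - r_k^2) / (2 * sqrt(r_k^2 + eps)),  r = x - a_i.
    The surrogate is a weighted least-squares problem whose minimizer is a
    weighted mean, so every MM step has a closed form and f never increases."""
    xs = [x0]
    x = x0
    for _ in range(iters):
        # Surrogate weights: inverse of the smoothed residual at the current iterate.
        weights = [1.0 / math.sqrt((x - a) ** 2 + eps) for a in data]
        # Exact minimizer of the quadratic surrogate.
        x = sum(w * a for w, a in zip(weights, data)) / sum(weights)
        xs.append(x)
    return xs


if __name__ == "__main__":
    data = [1.0, 2.0, 3.0, 10.0, 50.0]  # median is 3.0; mean is 13.2
    xs = mm_minimize(data, x0=sum(data) / len(data))
    for k in (0, 1, 5, 10, len(xs) - 1):
        print(k, round(xs[k], 6), round(smoothed_l1(xs[k], data, 1e-8), 6))
```

Starting from the mean, the iterates move toward the median while the objective decreases monotonically, which is the property that makes MM attractive for sequential, layer-by-layer constructions.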

Our model provides a theoretical framework for analyzing the performance of transformer-based language models as they memorize training samples. Large language models manifest capabilities for certain downstream tasks only once the training loss reaches a specific threshold (Du et al., 2024). In practice, the training of large language models is terminated when the loss curves plateau. On the one hand, the validation loss offers valuable insights for budgetary considerations; it has been observed that even after training on up to 2T tokens, some models have yet to exhibit signs of saturation (Touvron et al., 2023). On the other hand, early stopping can compromise the generalization capabilities of the models (Murty et al., 2023). We conduct a series of experiments with GPT-2 across a range of data sizes and also train vanilla Transformer models on a dataset of 2M tokens. The experimental outcomes substantiate our theoretical findings. We believe this work offers valuable theoretical perspectives on the optimal cross-entropy loss that can inform decision-making about model training.