I. Why Dimensionality Reduction Deserves Your Attention

In machine learning, more data isn’t always better, especially when it comes to features. Having too many variables can lead to what’s affectionately known as the curse of dimensionality: the data becomes sparse in a high-dimensional space, and the model overfits, struggles to generalise, or takes forever to train.

Little-known fact: not all data is good data. Some of it is just noise dressed up as numbers. As your dataset balloons into hundreds (or thousands) of features, your model may start gasping for air.

Enter dimensionality reduction — the thoughtful art of keeping what matters and gently escorting the rest to the exit.

Dimensionality reduction helps

- streamline your model,

- enhance performance,

- cut training time,

- and even reduce overfitting.

II. The Toolkit: Four Techniques That Actually Work

Dimensionality reduction is less about deleting columns and more about reshaping how the data is represented. Here are four solid techniques every aspiring data scientist should know:

1. Principal Component Analysis (PCA)

PCA transforms the original features into a new set of orthogonal axes (called principal components), ranked by how much variance they capture. Instead of 100 unorganised features, PCA might give you five super features that explain 95% of the variance in the data.

Why it’s cool: It compresses data with minimal information loss, like packing a suitcase efficiently, where everything fits neatly and nothing essential gets left behind.

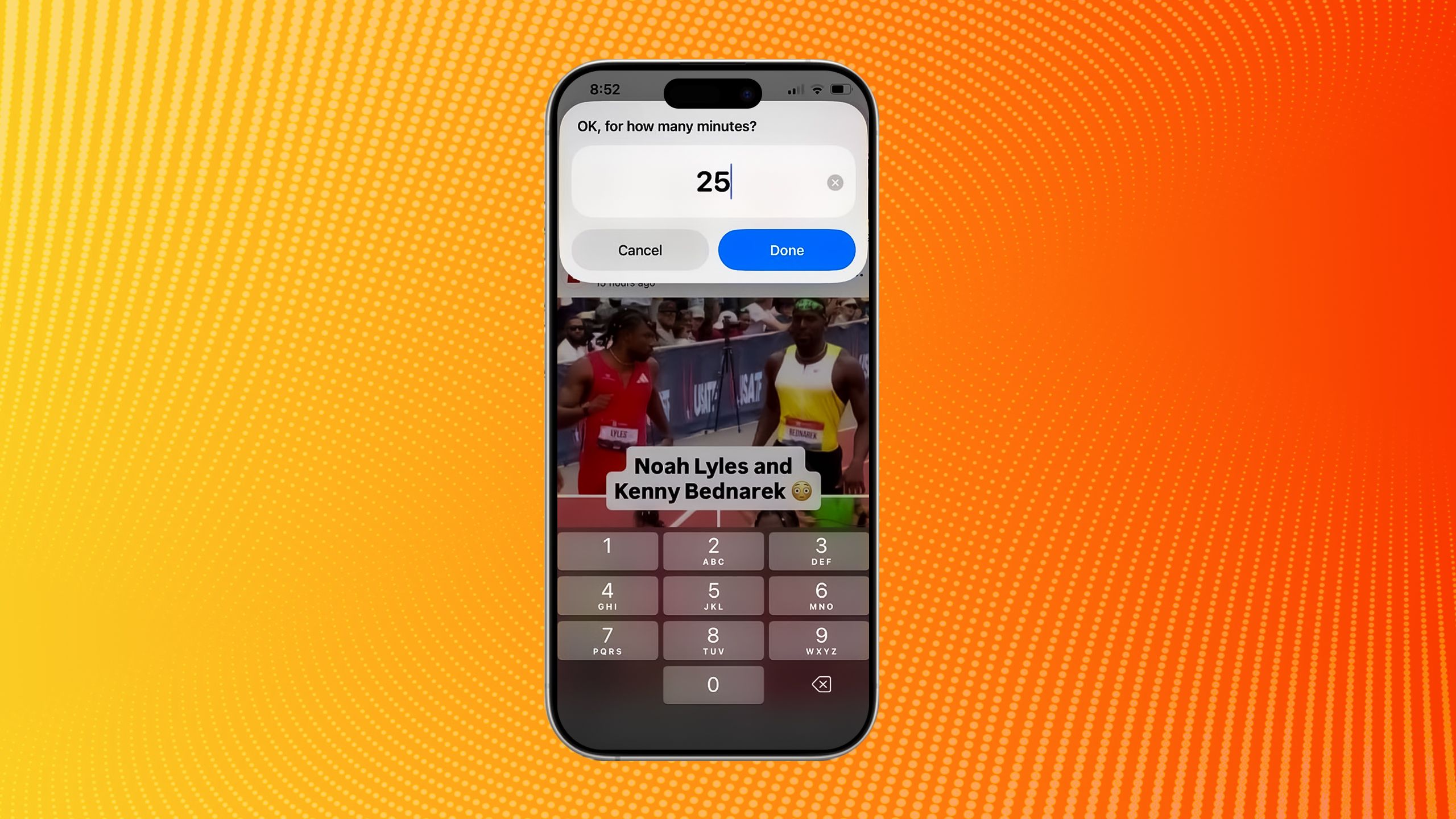
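To make the "100 features down to a handful" idea concrete, here is a minimal sketch. It assumes scikit-learn and NumPy (neither is named above) and uses made-up synthetic data in which 100 columns are noisy mixtures of 5 hidden factors, so treat it as an illustration rather than a recipe.

```python
import numpy as np
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

rng = np.random.default_rng(0)

# Synthetic data (an assumption for illustration): 500 rows, 100 features
# that are noisy mixtures of only 5 hidden factors.
hidden = rng.normal(size=(500, 5))
mixing = rng.normal(size=(5, 100))
X = hidden @ mixing + 0.1 * rng.normal(size=(500, 100))

# PCA is sensitive to feature scale, so standardise first.
X_scaled = StandardScaler().fit_transform(X)

# A float n_components asks for the fewest components whose combined
# explained variance reaches that share.
pca = PCA(n_components=0.95)
X_reduced = pca.fit_transform(X_scaled)

cumulative = np.cumsum(pca.explained_variance_ratio_)
print("shape before:", X_scaled.shape)
print("shape after: ", X_reduced.shape)
print("variance kept:", round(float(cumulative[-1]), 3))
```

Passing a fraction instead of a fixed count lets the data decide how many components are needed, which is usually a safer starting point than guessing a number.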

2. t-SNE (t-Distributed Stochastic Neighbor Embedding)

t-SNE is a non-linear technique that is ideal for visualising high-dimensional data in 2D or 3D. It’s especially good at preserving local structure: points that are similar in high dimensions stay close in the lower dimensions.

Heads up: It’s a bit temperamental (results shift with the perplexity setting and the random seed) and not great for downstream ML tasks. Think of it as a fabulous artist, not an accountant.
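A quick sketch of t-SNE in practice, assuming scikit-learn and its bundled handwritten-digits dataset (both are my choices, not something prescribed above). The perplexity and seed values are illustrative only.

```python
from sklearn.datasets import load_digits
from sklearn.manifold import TSNE

# 8x8 handwritten digits bundled with scikit-learn: 64 features per image.
digits = load_digits()
X = digits.data[:300]    # a small subset keeps the run quick
y = digits.target[:300]  # labels are only useful for colouring a plot

# perplexity and random_state are illustrative choices; different values
# can give a noticeably different picture.
tsne = TSNE(n_components=2, perplexity=30, init="pca", random_state=0)
embedding = tsne.fit_transform(X)

print(embedding.shape)
# scikit-learn's TSNE offers fit_transform but no transform for unseen rows.
print(hasattr(tsne, "transform"))
```

The missing `transform` method is one practical reason t-SNE suits plots better than pipelines: there is no fitted mapping to apply to new data.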

3. Linear Discriminant Analysis (LDA)

Unlike PCA, which is unsupervised, LDA is supervised. It tries to find a linear combination of features that best separates the classes.

Perfect when: Your goal is to improve classification performance, especially when classes are tightly packed.
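Here is a small sketch of LDA as a supervised reducer, assuming scikit-learn and its bundled iris dataset (my choice for illustration). Note that the labels `y` are passed to the fit step, which PCA never asks for.

```python
from sklearn.datasets import load_iris
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

X, y = load_iris(return_X_y=True)  # 150 flowers, 4 features, 3 classes

# With 3 classes, LDA can produce at most 3 - 1 = 2 discriminant axes.
lda = LinearDiscriminantAnalysis(n_components=2)
X_lda = lda.fit_transform(X, y)

print(X_lda.shape)
print(lda.explained_variance_ratio_)
```

The cap of (number of classes minus one) components is worth remembering: on a binary problem, LDA gives you a single axis.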

4. Autoencoders

Autoencoders are neural networks designed to compress (encode) input data into a lower-dimensional representation and then reconstruct (decode) it back to the original form. Much like the bottleneck layer in a convolutional neural network (CNN) architecture, the central layer of an autoencoder holds the compressed, reduced-dimension data, capturing the most essential features for efficient reconstruction.

Caution: Powerful but complex, best reserved for large datasets and deep learning pipelines.
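To show the encode, bottleneck, decode idea without a deep learning framework, here is a deliberately tiny sketch in plain NumPy. Everything in it is an assumption for illustration: the toy data, the 2-unit bottleneck, the tanh encoder with a linear decoder, and the learning rate. A real project would more likely use a framework such as PyTorch or Keras.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data (an assumption): 200 samples, 8 features driven by 2 hidden factors.
latent = rng.normal(size=(200, 2))
X = np.tanh(latent @ rng.normal(size=(2, 8)))

n_features, n_hidden = X.shape[1], 2
W_enc = rng.normal(scale=0.1, size=(n_features, n_hidden))
b_enc = np.zeros(n_hidden)
W_dec = rng.normal(scale=0.1, size=(n_hidden, n_features))
b_dec = np.zeros(n_features)

def encode(x):
    # Compress to the bottleneck representation.
    return np.tanh(x @ W_enc + b_enc)

def decode(code):
    # Reconstruct the original features from the bottleneck.
    return code @ W_dec + b_dec

def reconstruction_loss(x):
    return float(np.mean((decode(encode(x)) - x) ** 2))

initial_loss = reconstruction_loss(X)
learning_rate = 0.05

for _ in range(5000):
    code = encode(X)
    recon = decode(code)
    # Backpropagate the mean squared reconstruction error.
    grad_recon = 2.0 * (recon - X) / X.size
    grad_W_dec = code.T @ grad_recon
    grad_b_dec = grad_recon.sum(axis=0)
    grad_pre = (grad_recon @ W_dec.T) * (1.0 - code ** 2)  # tanh derivative
    grad_W_enc = X.T @ grad_pre
    grad_b_enc = grad_pre.sum(axis=0)
    # Plain full-batch gradient descent step.
    W_dec -= learning_rate * grad_W_dec
    b_dec -= learning_rate * grad_b_dec
    W_enc -= learning_rate * grad_W_enc
    b_enc -= learning_rate * grad_b_enc

final_loss = reconstruction_loss(X)
codes = encode(X)  # the reduced, 2-dimensional representation

print("loss before:", round(initial_loss, 4))
print("loss after: ", round(final_loss, 4))
print("reduced shape:", codes.shape)
```

The output of `encode` is the part you keep for dimensionality reduction; the decoder exists mainly to give the encoder something to be graded on.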

III. When to Use It (and When to Politely Decline It)

Use dimensionality reduction when:

- Your dataset has hundreds (or thousands) of features, and your model feels overwhelmed.

- There’s multicollinearity (multiple features saying the same thing in different languages).

- You want to visualise the structure or clusters in your data.

- You’re preparing inputs for algorithms sensitive to input dimensions (like KNN or SVM).
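As a sketch of the last case above, here is one way to put a reduction step in front of a distance-based model. It assumes scikit-learn, its bundled digits dataset, and illustrative settings (95% variance, 5 neighbours, 5-fold cross-validation), none of which come from the text above.

```python
from sklearn.datasets import load_digits
from sklearn.decomposition import PCA
from sklearn.model_selection import cross_val_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

X, y = load_digits(return_X_y=True)  # 64 pixel features per image

# Keeping the scaler and PCA inside the pipeline means they are refit on
# each training fold, so no information leaks from the validation fold.
pipeline = make_pipeline(
    StandardScaler(),
    PCA(n_components=0.95),
    KNeighborsClassifier(n_neighbors=5),
)

scores = cross_val_score(pipeline, X, y, cv=5)
print("mean accuracy:", round(float(scores.mean()), 3))
```

Running the same cross-validation without the PCA step is a cheap way to check whether the reduction is actually helping on your data.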

Avoid it when:

- Interpretability is non-negotiable. PCA components don’t tell you much about real-world meaning.

- Your features are already few and far between. Don’t trim the bonsai.

- You’re debugging. Additional transformations can add a fog of confusion to what’s already happening.
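The interpretability point is easier to see with numbers. This sketch, assuming scikit-learn and its bundled iris dataset, prints the weights (loadings) each principal component puts on the original features.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.preprocessing import StandardScaler

iris = load_iris()
X_scaled = StandardScaler().fit_transform(iris.data)

pca = PCA(n_components=2).fit(X_scaled)

# Each row of components_ is one principal component; each column is the
# weight (loading) it puts on one original feature.
for i, component in enumerate(pca.components_, start=1):
    weights = ", ".join(
        f"{name}: {w:+.2f}" for name, w in zip(iris.feature_names, component)
    )
    print(f"PC{i} -> {weights}")
```

Every component is a blend of all four measurements, so "PC1 went up" rarely translates into a sentence a domain expert would recognise.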

Pro tip: Always understand what you’re reducing from and what you’re reducing to. It’s not just about trimming the numbers; it’s about keeping the parts that actually matter. Otherwise, you’re just deleting columns and calling it strategy.

IV. In Conclusion…

Dimensionality reduction is a critical practice in machine learning. Much like effective writing, it is focused, intentional, and designed to convey meaning without unnecessary complexity. Whether you’re simplifying large, feature-rich datasets or uncovering latent structure within your data, techniques such as PCA, t-SNE, and autoencoders can be remarkably effective when applied thoughtfully.

However, these techniques are not quick fixes. They require a clear understanding of your data, your modeling goals, and the trade-offs involved. Dimensionality reduction should be viewed not as a shortcut but as a strategic refinement, a way to enhance clarity and performance, sometimes at the cost of interpretability.

When applied with care, it can lead to models that are not only faster and more efficient but also more robust. And as a welcome bonus, your visualisations may start to reveal patterns that were previously hidden in the noise.
