Table of Links

Abstract and 1. Introduction

-

Background

2.1 Ethereum Primer

2.2 Whitelisted Address Verification

2.3 Taint Analysis on Smart Contracts and 2.4 Threat Model

-

Motivating Example and Challenges

3.1 Motivating Example

3.2 Challenges

3.3 Limitations of Existing Tools

-

Design of AVVERIFIER and 4.1 Overview

4.2 Notations

4.3 Component#1: Code Grapher

4.4 Component#2: EVM Simulator

4.5 Component#3: Vulnerability Detector

-

Evaluation

5.1 Experimental Setup & Research Questions

5.2 RQ1: Effectiveness & Efficiency

5.3 RQ2: Characteristics of Real-world Vulnerable Contracts

5.4 RQ3: Real-time Detection

-

Discussion

6.1 Threats to Validity and 6.2 Limitations

6.3 Ethical Consideration

-

Related Work

-

Conclusion, Availability, and References

5.2 RQ1: Effectiveness & Efficiency

5.2.1 Evaluating Results on Benchmark

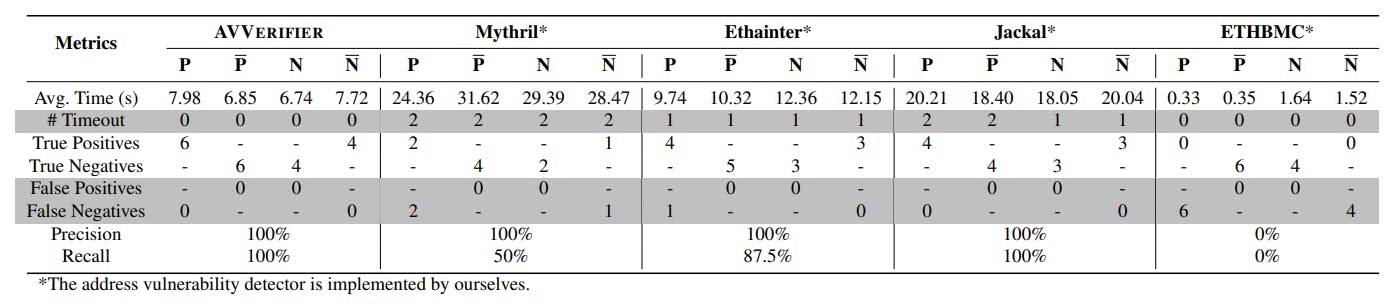

Crafting the Benchmark. After comprehensively collecting technical reports from well-known blockchain security companies [12], we have identified six confirmed vulnerable contracts, as P. As all their source code files are available, we manually patch each of them to compose P. Moreover, we manually sample four benign contracts from widely-adopted DeFi, i.e., Aave [2], Compound [25], ParaSpace Lending [69], and a yield protocol [32], to form the set N. The reason of selecting these four contracts is twofold. On the one hand, they all require the input of external contract address. Specifically, Aave and Compound primarily employ tokens as collateral to

borrow another valuable token, while ParaSpace uses NFTs as collateral. The yield protocol uses the input external contract address to generate collateral yield. Because all these four contracts perform the necessary verification on the passed address, they meet both P1 and P2. On the other hand, after executing certain on-chain operations, the valuable tokens in their contracts are transferred to the caller, thus posing potential risks as P3. Similarly, we deliberately remove their verification on addresses to make them vulnerable, denoting this set as N. Consequently, we obtained 20 ground truth cases. Table 1 illustrates the results, where the highlighted rows refer to the mis-detected results.

Average Time. It takes AVVERIFIER around 7.34s on average, while Mythril lags considerably, taking about 28.3s on average. Ethainter and Jackal sit in between, with times ranging from 10.86s to 19.19s on average. AVVERIFIER is approximately 3.86x, 1.48x, and 2.61x faster than Mythril, Ethainter, and Jackal, respectively. When considering the timeout cases, both AVVERIFIER and ETHBMC recorded zero, while Mythril, Ethainter and Jackal encountered timeout in 8, 4, and 6 instances, respectively. Additionally, we can easily observe that ETHBMC performs well in terms of executing time. However, after a comprehensive code audit and analysis on output logs, we believe it is because its bounded model checking approach prioritizes efficiency over thoroughness. In other words, paths may be overlooked, which may compromise the accuracy in complex scenarios, like the address verification vulnerability focused in this work.

Precision & Recall. Precision and recall are two critical metrics for evaluating an analyzer’s effectiveness, where AVVERIFIER outperforms other tools. Specifically, AVVERIFIER achieves 100% precision and 100% recall on the benchmark. In the case of Mythril and ETHBMC, the main issue is false negatives. For the cases that can be completed within the time limit, Mythril and ETHBMC have a 50% and 100% false negative rate, respectively. We speculate that the primary reason for ETHBMC’s non-ideal results is twofold. On the one hand, ETHBMC’s bounded model checking strategy inherently focuses on a specific range of states and paths within contracts, potentially missing the complexities involved in address verification due to its limited scope. On the other hand, ETHBMC necessitates a pre-defined initial state for analysis. However, this state is very likely not optimal for detecting the address verification vulnerability, potentially affecting its performance. Ethainter also has a worse performance compared to AVVERIFIER in terms of recall, with a false negative rate of around 12.5%. We think the most critical factor is the adoption of Gigahorse [36], a toolchain for binary analysis. According to its implementation, one of its limitations is its inability to perfectly handle dynamic memory, affecting the performance of Ethainter in identifying functions that extensively use dynamic memory. Consequently, this limitation leads to the false negatives.

Root Causes. Considering the differences in metrics when conducting analysis on the benchmark among these five tools, we speculate that there are four reasons for their distinctions on the performance on the benchmark. First, AVVERIFIER fully leverages the characteristics summarized from P1. In the Grapher, it filters suspicious functions as candidates, which significantly reduces the number of possible states, a predicament affects these tools. Second, as detailed in §4.4.4, the pathsearching strategy employed by the Simulator is specifically designed for the address verification vulnerability. This strategy prioritizes paths that may lead to vulnerabilities. Thirdly, when handling the dynamic memory, the other four tools struggle to accurately analyze vulnerable functions that extensively employ complex dynamic memory allocation. In contrast, AVVERIFIER leverages an EVM simulator, enabling it to precisely track address parameters without explicitly modeling dynamic memory behaviors, thereby enhancing its capability to identify such functions. Last, AVVERIFIER adopts a straightforward simulation approach, rather than static symbolic execution. This choice contributes to its efficiency. Previous studies, like KLEE [19], suggest that backend SMT solvers can be significant drags on performance.

5.2.2 Real-world Contracts Results

To further illustrate the effectiveness of AVVERIFIER on realworld contracts, we perform the analysis on all collected contracts, 5,158,101 ones in total. Consequently, 812 of them are marked as vulnerable by AVVERIFIER. To evaluate the effectiveness of AVVERIFIER, we again use Mythril, Ethainter, Jackal, and ETHBMC as baselines. However, because the un

readability of the bytecode, for a more effective comparison, we tried to obtain their source code from Etherscan. Finally, we collected 369 pieces of source code. The final scanning results for all these five tools on 369 open-source contracts are shown in Table 2.

Average Time. As we can see, for all 369 cases, AVVERIFIER achieves the second-best performance in terms of average analysis time. Moreover, there are no timeout cases within the 10-minute limit. Ethainter is one place behind, with 8.74s and 6 timeouts. Mythril’s efficiency lags far behind, averaging 33.69s per case and suffering 42 timeouts exceeding the 10-minute threshold, the highest among compared tools. Jackal averages around 29.96s with 60 timeouts within. Finally, though ETHBMC has a decent 5.43s average time, it suffers 164 timeout cases, which significantly impacts its effectiveness. By comparing Table 2 and Table 1, we can observe that the performance among these tools is roughly consistent, except that ETHBMC has more timeout cases on the real world cases. We speculate that this is because it needs to try different initial states when the current one cannot explore paths to exploitations, leading to a huge efficiency issue.

Precision & Recall. After manually rechecking all these contracts, the numbers of false positives and false negatives are also shown in Table 2. We can easily observe that there are 21 false positives generated by AVVERIFIER, leading to 94.3% precision. The main reason for that is there are unconventional verification methods on addresses. Except for the three mechanisms we summarized in §2.2, some of them delegate address verification to other contracts, which is not a widely adopted verification method. Moreover, some contracts perform verification via digital signatures [17] or Merkle proofs [50]. Currently, due to efficiency issues, AVVERIFIER does not integrate such patterns. Moreover, as inter-contract analysis is always a huge obstacle for smart contract analysis [58], it is a compromise must be made. In contrast, all the other four baselines suffer from severe false negative issues. Although Mythril employs a similar path filtering approach to AVVERIFIER, its recall is only 5.1%, because the symbolic execution cannot effectively find feasible paths to exploit vulnerable contracts. Ethainter and Jackal, both of which use the GigaHorse framework, achieve recall rates of only 43.0% and 59.7%, respectively. As we mentioned in §5.2.1, Gigahorse struggles to accurately construct complete CFGs when handling some contracts, which stems from its less optimized handling on the dynamic memory. The recall of ETHBMC is only 1.1%, whose reason is mainly due to its adopted initial states as we stated above. We have conducted a case study to illustrate how a case is mislabeled as false negative by these four tools, please refer to our open-source repo at link.

Authors:

(1) Tianle Sun, Huazhong University of Science and Technology;

(2) Ningyu He, Peking University;

(3) Jiang Xiao, Huazhong University of Science and Technology;

(4) Yinliang Yue, Zhongguancun Laboratory;

(5) Xiapu Luo, The Hong Kong Polytechnic University;

(6) Haoyu Wang, Huazhong University of Science and Technology.