Table of Links

Abstract and 1. Introduction

-

Related Work

-

Method

3.1 Overview of Our Method

3.2 Coarse Text-cell Retrieval

3.3 Fine Position Estimation

3.4 Training Objectives

-

Experiments

4.1 Dataset Description and 4.2 Implementation Details

4.3 Evaluation Criteria and 4.4 Results

-

Performance Analysis

5.1 Ablation Study

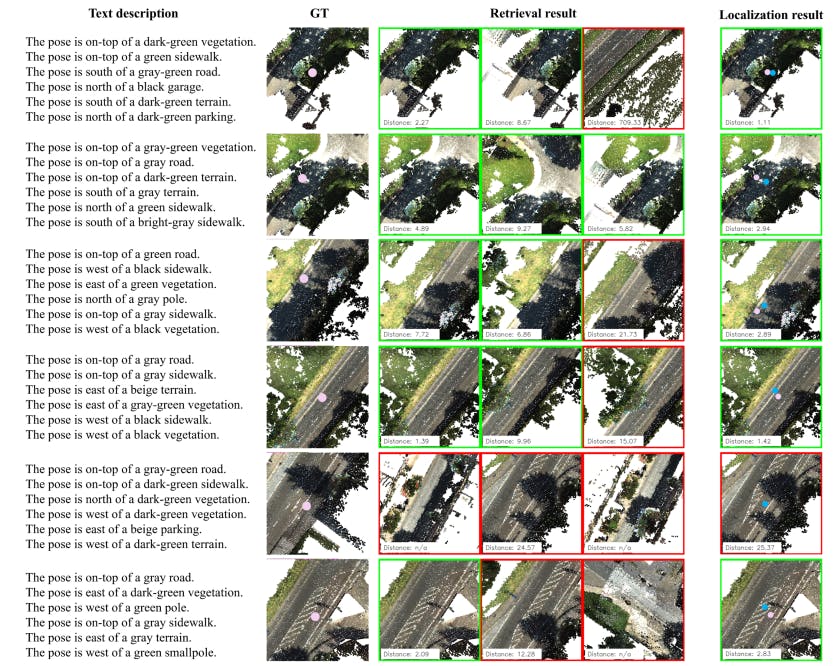

5.2 Qualitative Analysis

5.3 Text Embedding Analysis

-

Conclusion and References

Supplementary Material

- Details of KITTI360Pose Dataset

- More Experiments on the Instance Query Extractor

- Text-Cell Embedding Space Analysis

- More Visualization Results

- Point Cloud Robustness Analysis

Anonymous Authors

- Details of KITTI360Pose Dataset

- More Experiments on the Instance Query Extractor

- Text-Cell Embedding Space Analysis

- More Visualization Results

- Point Cloud Robustness Analysis

5.3 Text Embedding Analysis

Recent years have seen the emergence of large language models (encoders) like BERT [14], RoBERTa [24], T5 [33], and the CLIP [31] text encoder, each is trained with varied tasks and datasets. Text2Loc highlights that a pre-trained T5 model significantly enhances text and point cloud feature alignment. Yet, the potential of other models, such as RoBERTa and the CLIP text encoder, known for their excellence in visual grounding tasks, is not explored in their study. Thus, we conduct a comparative analysis of T5-small, RoBERTa-base, and the CLIP text encoder within our model framework. The result in Table 6 indicates that the T5-small (61M) achieves 0.24/0.46/0.57 at the top-1/3/5 recall metrics, incrementally outperforming RoBERTabase (125M) and CLIP text (123M) with fewer parameters.

6 CONCLUSION

In this paper, we propose a IFRP-T2P model for 3D point cloud localization based on a few natural language descriptions, which is the first approach to directly take raw point clouds as input, eliminating the need for ground-truth instances. Additionally, we

propose the RowColRPA in the coarse stage and the RPCA in the fine stage to fully leverage the spatial relation information. Through extensive experiments, IFRP-T2P achieves comparable performance with the state-of-the-art model, Text2Loc, which relies on groundtruth instances. Moreover, it surpasses the Text2Loc using instance segmentation model as prior. Our approach expands the usability of existing text-to-point-cloud localization models, enabling their application in scenarios where few instance information is available. Our future work will focus on applying IFRP-T2P for navigation in real-world robotic applications, bridging the gap between theoretical models and practical utility in autonomous navigation and interaction.

REFERENCES

[1] Panos Achlioptas, Ahmed Abdelreheem, Fei Xia, Mohamed Elhoseiny, and Leonidas Guibas. 2020. ReferIt3D: Neural Listeners for Fine-Grained 3D Object Identification in Real-World Scenes. 16th European Conference on Computer Vision (ECCV) (2020).

[2] Relja Arandjelovic, Petr Gronat, Akihiko Torii, Tomas Pajdla, and Josef Sivic. 2018. NetVLAD: CNN Architecture for Weakly Supervised Place Recognition. IEEE Transactions on Pattern Analysis and Machine Intelligence (Jun 2018), 1437–1451. https://doi.org/10.1109/tpami.2017.2711011

[3] Daigang Cai, Lichen Zhao, Jing Zhang, Lu Sheng, and Dong Xu. 2022. 3DJCG: A Unified Framework for Joint Dense Captioning and Visual Grounding on 3D Point Clouds. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022), 16443–16452. https://api.semanticscholar.org/CorpusID: 250980730

[4] Nicolas Carion, Francisco Massa, Gabriel Synnaeve, Nicolas Usunier, Alexander Kirillov, and Sergey Zagoruyko. 2020. End-to-End Object Detection with Transformers.

[5] Dave Zhenyu Chen, Angel X Chang, and Matthias Nießner. 2020. Scanrefer: 3d object localization in rgb-d scans using natural language. In Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XX 16. Springer, 202–221.

[6] Sijin Chen, Hongyuan Zhu, Xin Chen, Yinjie Lei, Tao Chen, and YU Gang. 2023. End-to-End 3D Dense Captioning with Vote2Cap-DETR. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2023), 11124–11133. https://api.semanticscholar.org/CorpusID:255522451

[7] Zhenyu Chen, Ali Gholami, Matthias Nießner, and Angel X Chang. 2021. Scan2Cap: Context-aware Dense Captioning in RGB-D Scans. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3193– 3203.

[8] Bowen Cheng, Ishan Misra, Alexander G Schwing, Alexander Kirillov, and Rohit Girdhar. 2022. Masked-attention Mask Transformer for Universal Image Segmentation.

[9] Bowen Cheng, Alex Schwing, and Alexander Kirillov. 2021. Per-Pixel Classification is Not All You Need for Semantic Segmentation.

[10] Christopher Choy, JunYoung Gwak, and Silvio Savarese. 2019. 4D SpatioTemporal ConvNets: Minkowski Convolutional Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 3075–3084.

[11] Angela Dai, Angel X Chang, Manolis Savva, Maciej Halber, Thomas Funkhouser, and Matthias Nießner. 2017. Scannet: Richly-annotated 3d reconstructions of indoor scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 5828–5839.

[12] Haowen Deng, Tolga Birdal, and Slobodan Ilic. 2018. PPFNet: Global Context Aware Local Features for Robust 3D Point Matching. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (2018), 195–205. https: //api.semanticscholar.org/CorpusID:3703761

[13] Ruoxi Deng, Chunhua Shen, Shengjun Liu, Huibing Wang, and Xinru Liu. 2018. Learning to Predict Crisp Boundaries.

[14] Jacob Devlin, Ming-Wei Chang, Kenton Lee, and Kristina Toutanova. 2019. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In North American Chapter of the Association for Computational Linguistics. https: //api.semanticscholar.org/CorpusID:52967399

[15] Zhaoxin Fan, Zhenbo Song, Hongyan Liu, Zhiwu Lu, Jun He, and Xiaoyong Du. 2022. SVT-Net: Super Light-Weight Sparse Voxel Transformer for Large Scale Place Recognition. Proceedings of the AAAI Conference on Artificial Intelligence (Jul 2022), 551–560. https://doi.org/10.1609/aaai.v36i1.19934

[16] Stephen Hausler, Sourav Garg, Ming Xu, Michael Milford, and Tobias Fischer. 2021. Patch-NetVLAD: Multi-Scale Fusion of Locally-Global Descriptors for Place Recognition. In 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/cvpr46437.2021.01392

[17] Yining Hong, Haoyu Zhen, Peihao Chen, Shuhong Zheng, Yilun Du, Zhenfang Chen, and Chuang Gan. 2023. 3d-llm: Injecting the 3d world into large language models. Advances in Neural Information Processing Systems 36 (2023), 20482– 20494.

[18] Bu Jin, Yupeng Zheng, Pengfei Li, Weize Li, Yuhang Zheng, Sujie Hu, Xinyu Liu, Jinwei Zhu, Zhijie Yan, Haiyang Sun, et al. 2024. TOD3Cap: Towards 3D Dense Captioning in Outdoor Scenes. arXiv preprint arXiv:2403.19589 (2024).

[19] Raghav Kapoor, Yash Parag Butala, Melisa Russak, Jing Yu Koh, Kiran Kamble, Waseem Alshikh, and Ruslan Salakhutdinov. 2024. OmniACT: A Dataset and Benchmark for Enabling Multimodal Generalist Autonomous Agents for Desktop and Web. ArXiv abs/2402.17553 (2024). https://api.semanticscholar.org/CorpusID: 268031860

[20] Jinkyu Kim, Anna Rohrbach, Trevor Darrell, John F. Canny, and Zeynep Akata. 2018. Textual Explanations for Self-Driving Vehicles. In European Conference on Computer Vision. https://api.semanticscholar.org/CorpusID:51887402

[21] Manuel Kolmet, Qunjie Zhou, Aljosa Osep, and Laura Leal-Taixé. 2022. Text2Pos: Text-to-Point-Cloud Cross-Modal Localization. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022), 6677–6686. https://api. semanticscholar.org/CorpusID:247779335

[22] Jacek Komorowski. 2020. MinkLoc3D: Point Cloud Based Large-Scale Place Recognition. 2021 IEEE Winter Conference on Applications of Computer Vision (WACV) (2020), 1789–1798. https://api.semanticscholar.org/CorpusID:226282298

[23] Harold W Kuhn. 1955. The Hungarian method for the assignment problem. Naval research logistics quarterly 2, 1-2 (1955), 83–97.

[24] Yinhan Liu, Myle Ott, Naman Goyal, Jingfei Du, Mandar Joshi, Danqi Chen, Omer Levy, Mike Lewis, Luke Zettlemoyer, and Veselin Stoyanov. 2019. RoBERTa: A Robustly Optimized BERT Pretraining Approach. ArXiv abs/1907.11692 (2019). https://api.semanticscholar.org/CorpusID:198953378

[25] Junyi Ma, Guang ming Xiong, Jingyi Xu, and Xieyuanli Chen. 2023. CVTNet: A Cross-View Transformer Network for LiDAR-Based Place Recognition in Autonomous Driving Environments. IEEE Transactions on Industrial Informatics 20 (2023), 4039–4048. https://api.semanticscholar.org/CorpusID:256598042

[26] Srikanth Malla, Chiho Choi, Isht Dwivedi, Joonhyang Choi, and Jiachen Li. 2022. DRAMA: Joint Risk Localization and Captioning in Driving. 2023 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV) (2022), 1043–1052. https://api.semanticscholar.org/CorpusID:252439240

[27] Ishan Misra, Rohit Girdhar, and Armand Joulin. 2021. An End-to-End Transformer Model for 3D Object Detection.

[28] Ming Nie, Renyuan Peng, Chunwei Wang, Xinyue Cai, Jianhua Han, Hang Xu, and Li Zhang. 2023. Reason2Drive: Towards Interpretable and Chain-based Reasoning for Autonomous Driving. ArXiv abs/2312.03661 (2023). https://api. semanticscholar.org/CorpusID:265688025

[29] Charles R. Qi, Li Yi, Hao Su, and Leonidas J. Guibas. 2017. PointNet++: deep hierarchical feature learning on point sets in a metric space. In Proceedings of the 31st International Conference on Neural Information Processing Systems (Long Beach, California, USA) (NIPS’17). Curran Associates Inc., Red Hook, NY, USA, 5105–5114.

[30] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, et al. 2021. Learning transferable visual models from natural language supervision. In International conference on machine learning. PMLR, 8748–8763.

[31] Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, and Ilya Sutskever. 2021. Learning Transferable Visual Models From Natural Language Supervision. In International Conference on Machine Learning. https://api.semanticscholar.org/CorpusID:231591445

[32] Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J. Liu. 2020. Exploring the limits of transfer learning with a unified text-to-text transformer. J. Mach. Learn. Res. 21, 1, Article 140 (jan 2020), 67 pages.

[33] Colin Raffel, Noam Shazeer, Adam Roberts, Katherine Lee, Sharan Narang, Michael Matena, Yanqi Zhou, Wei Li, and Peter J. Liu. 2020. Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer. Journal of Machine Learning Research 21, 140 (2020), 1–67. http://jmlr.org/papers/v21/20-074.html

[34] Paul-Edouard Sarlin, Cesar Cadena, Roland Siegwart, and Marcin Dymczyk. 2019. From Coarse to Fine: Robust Hierarchical Localization at Large Scale. In 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). https://doi.org/10.1109/cvpr.2019.01300

[35] Jonas Schult, Francis Engelmann, Alexander Hermans, Or Litany, Siyu Tang, and Bastian Leibe. 2023. Mask3D: Mask Transformer for 3D Semantic Instance Segmentation. (2023).

[36] Mikaela Angelina Uy and Gim Hee Lee. 2018. PointNetVLAD: Deep Point Cloud Based Retrieval for Large-Scale Place Recognition. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (2018), 4470–4479. https: //api.semanticscholar.org/CorpusID:4805033

[37] Laurens Van der Maaten and Geoffrey Hinton. 2008. Visualizing data using t-SNE. JMLR (2008).

[38] Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Łukasz Kaiser, and Illia Polosukhin. 2017. Attention is all you need. In Proceedings of the 31st International Conference on Neural Information Processing Systems (Long Beach, California, USA) (NIPS’17). Curran Associates Inc., Red Hook, NY, USA, 6000–6010.

[39] Guangzhi Wang, Hehe Fan, and Mohan Kankanhalli. 2023. Text to point cloud localization with relation-enhanced transformer. In Proceedings of the ThirtySeventh AAAI Conference on Artificial Intelligence and Thirty-Fifth Conference on Innovative Applications of Artificial Intelligence and Thirteenth Symposium on Educational Advances in Artificial Intelligence (AAAI’23/IAAI’23/EAAI’23). AAAI Press, Article 278, 9 pages. https://doi.org/10.1609/aaai.v37i2.25347

[40] Yanmin Wu, Xinhua Cheng, Renrui Zhang, Zesen Cheng, and Jian Zhang. 2023. EDA: Explicit Text-Decoupling and Dense Alignment for 3D Visual Grounding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR).

[41] Yan Xia, Mariia Gladkova, Rui Wang, Qianyun Li, Uwe Stilla, Joao F Henriques, and Daniel Cremers. 2023. CASSPR: Cross Attention Single Scan Place Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV). IEEE.

[42] Yan Xia, Letian Shi, Zifeng Ding, João F Henriques, and Daniel Cremers. 2024. Text2Loc: 3D Point Cloud Localization from Natural Language. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition.

[43] Yan Xia, Yusheng Xu, Shuang Li, Rui Wang, Juan Du, Daniel Cremers, and Uwe Stilla. 2020. SOE-Net: A Self-Attention and Orientation Encoding Network for Point Cloud based Place Recognition. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2020), 11343–11352. https: //api.semanticscholar.org/CorpusID:227162609

[44] Xu Yan, Zhihao Yuan, Yuhao Du, Yinghong Liao, Yao Guo, Shuguang Cui, and Zhen Li. 2023. Comprehensive Visual Question Answering on Point Clouds through Compositional Scene Manipulation. IEEE Transactions on Visualization and Computer Graphics (2023).

[45] Zhengyuan Yang, Songyang Zhang, Liwei Wang, and Jiebo Luo. 2021. SAT: 2D Semantics Assisted Training for 3D Visual Grounding. International Conference on Computer Vision,International Conference on Computer Vision (May 2021).

[46] Huan Yin, Xuecheng Xu, Sha Lu, Xieyuanli Chen, Rong Xiong, Shaojie Shen, Cyrill Stachniss, and Yue Wang. 2023. A Survey on Global LiDAR Localization: Challenges, Advances and Open Problems. International Journal of Computer Vision (02 2023).

[47] Zhihao Yuan, Jinke Ren, Chun-Mei Feng, Hengshuang Zhao, Shuguang Cui, and Zhen Li. 2023. Visual Programming for Zero-shot Open-Vocabulary 3D Visual Grounding. arXiv preprint arXiv:2311.15383 (2023).

[48] Zhihao Yuan, Xu Yan, Zhuo Li, Xuhao Li, Yao Guo, Shuguang Cui, and Zhen Li. 2022. Toward Explainable and Fine-Grained 3D Grounding through Referring Textual Phrases. arXiv preprint arXiv:2207.01821 (2022).

[49] Zhihao Yuan, Xu Yan, Yinghong Liao, Yao Guo, Guanbin Li, Zhen Li, and Shuguang Cui. 2022. X -Trans2Cap: Cross-Modal Knowledge Transfer using Transformer for 3D Dense Captioning. 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022), 8553–8563. https: //api.semanticscholar.org/CorpusID:247218430

[50] Zhihao Yuan, Xu Yan, Yinghong Liao, Ruimao Zhang, Zhen Li, and Shuguang Cui. 2021. Instancerefer: Cooperative holistic understanding for visual grounding on point clouds through instance multi-level contextual referring. In Proceedings of the IEEE/CVF International Conference on Computer Vision. 1791–1800.

[51] Renrui Zhang, Jiaming Han, Aojun Zhou, Xiangfei Hu, Shilin Yan, Pan Lu, Hongsheng Li, Peng Gao, and Yu Jiao Qiao. 2023. LLaMA-Adapter: Efficient Finetuning of Language Models with Zero-init Attention. ArXiv abs/2303.16199 (2023). https://api.semanticscholar.org/CorpusID:257771811

[52] Qunjie Zhou, Torsten Sattler, Marc Pollefeys, and Laura Leal-Taixe. 2020. To Learn or Not to Learn: Visual Localization from Essential Matrices. In 2020 IEEE International Conference on Robotics and Automation (ICRA). https://doi.org/10. 1109/icra40945.2020.9196607

[53] Zhicheng Zhou, Cheng Zhao, Daniel Adolfsson, Songzhi Su, Yang Gao, Tom Duckett, and Li Sun. 2021. NDT-Transformer: Large-Scale 3D Point Cloud Localisation using the Normal Distribution Transform Representation. In 2021 IEEE International Conference on Robotics and Automation (ICRA). https: //doi.org/10.1109/icra48506.2021.9560932

Authors:

(1) Lichao Wang, FNii, CUHKSZ ([email protected]);

(2) Zhihao Yuan, FNii and SSE, CUHKSZ ([email protected]);

(3) Jinke Ren, FNii and SSE, CUHKSZ ([email protected]);

(4) Shuguang Cui, SSE and FNii, CUHKSZ ([email protected]);

(5) Zhen Li, a Corresponding Author from SSE and FNii, CUHKSZ ([email protected]).

This paper is