Table of Links

-

Introduction

-

Related Work

2.1 Semantic Typographic Logo Design

2.2 Generative Model for Computational Design

2.3 Graphic Design Authoring Tool

-

Formative Study

3.1 General Workflow and Challenges

3.2 Concerns in Generative Model Involvement

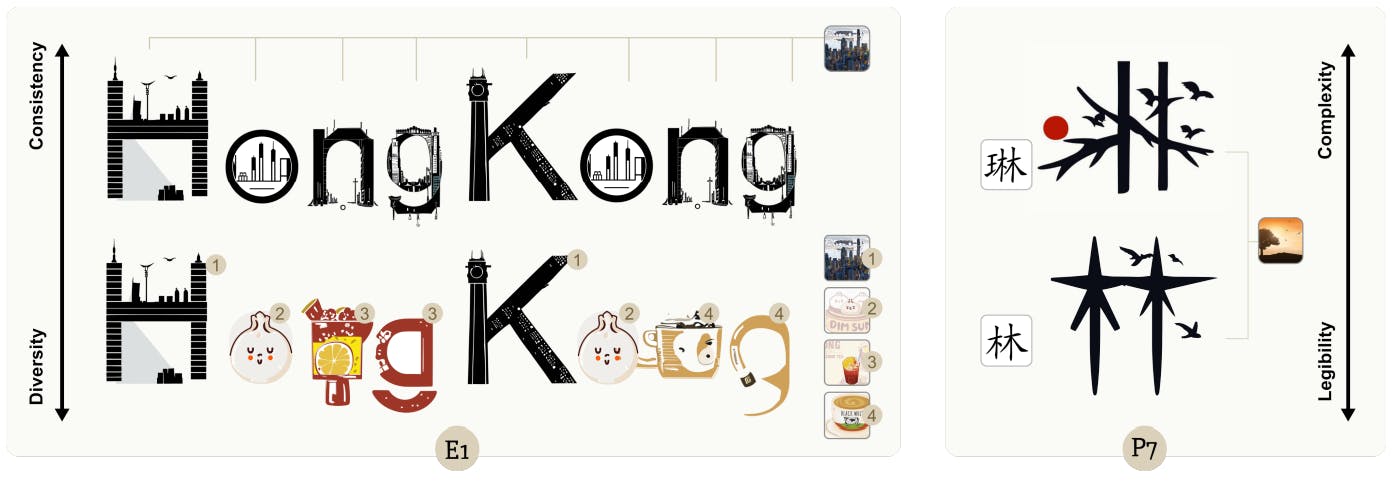

3.3 Design Space of Semantic Typography Work

-

Design Consideration

-

Typedance and 5.1 Ideation

5.2 Selection

5.3 Generation

5.4 Evaluation

5.5 Iteration

-

Interface Walkthrough and 6.1 Pre-generation stage

6.2 Generation stage

6.3 Post-generation stage

-

Evaluation and 7.1 Baseline Comparison

7.2 User Study

7.3 Results Analysis

7.4 Limitation

-

Discussion

8.1 Personalized Design: Intent-aware Collaboration with AI

8.2 Incorporating Design Knowledge into Creativity Support Tools

8.3 Mix-User Oriented Design Workflow

-

Conclusion and References

5.4 Evaluation

Once a design is generated, evaluating its compliance with recognized visual design principles is crucial for its completion [9, 37]. Semantic typographic logos must balance the typeface’s readability against the imagery’s expressiveness, which is a challenging tradeoff. Designers often rely on feedback from participants to validate their designs. TypeDance offers an instant, data-driven assessment before participants’ reactions are gathered.

To help users locate their current work on the type-semantic spectrum, TypeDance uses a pre-trained CLIP model [39] to provide an objective, data-supported judgment. Drawing on the knowledge CLIP distilled from a dataset of 400 million image-text pairs, TypeDance quantifies how similar the generated result is to the typeface and to the imagery as two scores in [0, 1] that sum to 1. To make these scores more intuitive, TypeDance shifts the neutral point from 0.5 to 0 and rescales the distance between a similarity score and the neutral point so that the maximum distance of 0.5 becomes 1. Users can then see how far their current result diverges from the neutral point. A value of 0 signifies neutrality, indicating that the result balances the typeface and the imagery equally. Higher values indicate greater divergence toward either the typeface or the imagery, depending on the direction.
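The shift-and-rescale step described above can be illustrated with a minimal sketch. It assumes the two similarity scores come from a softmax over two CLIP logits, which the text implies (the scores sum to 1) but does not state outright; the function names `two_way_softmax` and `divergence_from_neutral` are hypothetical and not taken from TypeDance.

```python
import math
from typing import Tuple


def two_way_softmax(typeface_logit: float, imagery_logit: float) -> Tuple[float, float]:
    """Turn two similarity logits into scores in [0, 1] that sum to 1."""
    m = max(typeface_logit, imagery_logit)  # subtract the max for numerical stability
    e_type = math.exp(typeface_logit - m)
    e_img = math.exp(imagery_logit - m)
    total = e_type + e_img
    return e_type / total, e_img / total


def divergence_from_neutral(typeface_score: float) -> Tuple[float, str]:
    """Map a typeface score in [0, 1] to (divergence in [0, 1], direction)."""
    if not 0.0 <= typeface_score <= 1.0:
        raise ValueError("typeface_score must lie in [0, 1]")
    offset = typeface_score - 0.5   # shift the neutral point from 0.5 to 0
    divergence = abs(offset) / 0.5  # rescale the maximum distance 0.5 to 1
    if offset > 0:
        direction = "typeface"
    elif offset < 0:
        direction = "imagery"
    else:
        direction = "neutral"
    return divergence, direction
```

Under these assumptions, a typeface score of 0.75 yields a divergence of 0.5 toward the typeface, and a score of 0.5 yields 0, the neutral point.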

5.5 Iteration

Although iteration occurs throughout the process, we identified three main iteration patterns.

5.5.1 Regeneration for New Design. Previous tools often allow creators to delete unsatisfactory results, but few aim to improve regenerated results so that they better match creators’ intent. To address this challenge, TypeDance uses both implicit and explicit human feedback to infer user preferences. Inspired by FABRIC [49], we treat users’ reactions to generated results as a clue to their preferences. By analyzing positive feedback from preserved results and negative feedback from deletions, the generative model in TypeDance dynamically adjusts the weights in the self-attention layer. This iterative incorporation of human feedback refines the generative model over time. Additionally, TypeDance gives users more explicit ways to make adjustments, including a textual prompt and a slider that controls the balance between typeface and imagery.
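As a rough illustration of the implicit-feedback bookkeeping only, the sketch below records preserved results as positive references and deleted results as negative ones. The class `FeedbackLog` and its methods are hypothetical; the sketch does not implement the self-attention reweighting itself, which depends on the generative model.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class FeedbackLog:
    """Collects implicit feedback: preserved results are positive, deleted ones negative."""
    liked: List[str] = field(default_factory=list)
    disliked: List[str] = field(default_factory=list)

    def preserve(self, result_id: str) -> None:
        # Keeping a result counts as positive feedback and overrides an earlier deletion.
        if result_id in self.disliked:
            self.disliked.remove(result_id)
        if result_id not in self.liked:
            self.liked.append(result_id)

    def delete(self, result_id: str) -> None:
        # Deleting a result counts as negative feedback and overrides an earlier preserve.
        if result_id in self.liked:
            self.liked.remove(result_id)
        if result_id not in self.disliked:
            self.disliked.append(result_id)

    def references(self) -> Dict[str, List[str]]:
        """Return the reference sets a feedback-conditioned generator could consume."""
        return {"positive": list(self.liked), "negative": list(self.disliked)}
```

A regeneration step would then pass `references()` to whatever feedback-aware generator is in use.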

5.5.2 Refinement in Type-imagery Spectrum. TypeDance lets creators iteratively refine their designs along the type-imagery spectrum. In addition to the quantitative metric introduced in the evaluation component, TypeDance allows creators to make precise adjustments using the same slider. As shown in Fig. 5 (c), we divide the distance between the neutral point and each end of the spectrum (typeface or imagery) into 20 equal intervals, each representing 0.05. These small interpolations preserve the overall structure and allow for incremental adjustments. By dragging the slider, creators can set their desired value point between typeface and imagery, achieving a balanced aesthetic.
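The slider grid can be sketched as follows. The sketch assumes a signed convention that the text does not specify: 0 is the neutral point, negative values lean toward the typeface, and positive values lean toward the imagery. The function name `snap_slider` is hypothetical.

```python
STEP = 0.05          # width of one interval, as stated in the text
STEPS_PER_SIDE = 20  # intervals between the neutral point and each end


def snap_slider(value: float) -> float:
    """Clamp a raw slider value to [-1, 1] and snap it to the nearest 0.05 step."""
    clamped = max(-1.0, min(1.0, value))
    index = round(clamped / STEP)  # nearest step index, from -20 to 20
    index = max(-STEPS_PER_SIDE, min(STEPS_PER_SIDE, index))
    return round(index * STEP, 2)  # round away floating-point noise
```

With 20 intervals on each side of the neutral point, the slider has 41 distinct positions under this convention.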

Specifically, to prioritize imagery, we begin with the current design as the initial image and inject it into the diffusion model with the strength set to the desired value point. This process pushes the generated image toward the imagery end, resulting in a more semantically rich design. Conversely, to emphasize the typeface, we utilize the saliency map of the typeface in the pixel space to filter out the relevant regions of the image. This modified image is fed into the generative model to ensure a smooth transition towards the typeface end.
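For the typeface direction, a minimal sketch of the pixel-space filtering might look like the following. It assumes that "filtering" means keeping pixels whose saliency meets a threshold and painting the rest with a background value; the threshold, the background value, and the function name `keep_salient_pixels` are all illustrative assumptions. Images are plain nested lists of grayscale values to keep the example self-contained.

```python
from typing import List


def keep_salient_pixels(
    image: List[List[int]],
    saliency: List[List[float]],
    threshold: float = 0.5,   # assumed cutoff, not given in the text
    background: int = 255,    # assumed fill value (white)
) -> List[List[int]]:
    """Keep pixels whose typeface saliency is at least `threshold`; blank the rest."""
    same_shape = len(image) == len(saliency) and all(
        len(row) == len(sal_row) for row, sal_row in zip(image, saliency)
    )
    if not same_shape:
        raise ValueError("image and saliency map must have the same shape")
    return [
        [pixel if s >= threshold else background for pixel, s in zip(row, sal_row)]
        for row, sal_row in zip(image, saliency)
    ]
```

The masked image would then serve as the input to the generative model for the typeface-leaning refinement.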

5.5.3 Editability for Elements in the Final Design. A more fine-grained adjustment is required to make nuanced changes to an almost satisfactory result, such as deleting an element in the generated result. To achieve this level of editability, we convert the image from pixel space to vector space. Creators can then manipulate each element of their design individually, removing, scaling, rotating, or recoloring it as needed.
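Once a design is in vector form, the per-element operations listed above reduce to simple geometry. The sketch below assumes each element is a closed polygon with a fill color; the `VectorElement` class and `remove_element` helper are hypothetical and say nothing about how TypeDance performs the vectorization.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float]


@dataclass
class VectorElement:
    """One editable shape in a vectorized design (assumed to be a polygon)."""
    name: str
    points: List[Point]
    fill: str = "#000000"

    def centroid(self) -> Point:
        # Average of the vertices; used as the pivot for scaling and rotation.
        n = len(self.points)
        return (sum(x for x, _ in self.points) / n, sum(y for _, y in self.points) / n)

    def scale(self, factor: float) -> None:
        cx, cy = self.centroid()
        self.points = [(cx + (x - cx) * factor, cy + (y - cy) * factor) for x, y in self.points]

    def rotate(self, degrees: float) -> None:
        cx, cy = self.centroid()
        c, s = math.cos(math.radians(degrees)), math.sin(math.radians(degrees))
        self.points = [
            (cx + (x - cx) * c - (y - cy) * s, cy + (x - cx) * s + (y - cy) * c)
            for x, y in self.points
        ]

    def recolor(self, fill: str) -> None:
        self.fill = fill


def remove_element(elements: List[VectorElement], name: str) -> List[VectorElement]:
    """Return the design without the named element."""
    return [e for e in elements if e.name != name]
```

Scaling and rotating about the centroid keeps an element in place while it changes, which is one reasonable choice among several possible pivots.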

Authors:

(1) SHISHI XIAO, The Hong Kong University of Science and Technology (Guangzhou), China;

(2) LIANGWEI WANG, The Hong Kong University of Science and Technology (Guangzhou), China;

(3) XIAOJUAN MA, The Hong Kong University of Science and Technology, China;

(4) WEI ZENG, The Hong Kong University of Science and Technology (Guangzhou), China.

This paper is