Imagine logging into your laptop, and instead of just checking your password once, the system continuously verifies it’s really you by analyzing how you type. No additional hardware, no face scans, no fingerprint readers – just the natural rhythm of your fingers dancing across the keyboard. Welcome to keystroke dynamics, one of cybersecurity’s most elegant solutions to continuous authentication.

Every time you type, you create a distinctive behavioral signature. The way you hold down the ‘a’ key, the millisecond pause between ‘t’ and ‘h’, the slight hesitation before hitting the spacebar – these micro-patterns are as distinctive as your handwriting, and far harder to replicate than a stolen password.

Seeing Keystroke Patterns as Authentication

Traditional authentication is binary: you’re either in or you’re out. You enter your credentials once, and the system trusts you for the entire session. But what if someone shoulder-surfs your password? What if you walk away from an unlocked terminal? What if an attacker gains remote access to your authenticated session?

Continuous authentication solves this by constantly asking: “Is this still the same person?” Instead of one security checkpoint, you have dozens of micro-verifications happening invisibly as you work.

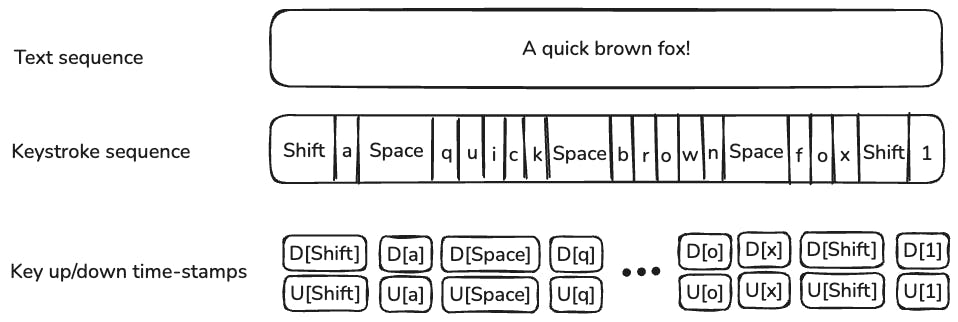

Understanding Keystroke Dynamics

Keystroke dynamics captures the unique temporal patterns in how individuals type. We’re not just recording what keys you press, but when and how you press them. Think of it as behavioral biometrics – identifying people by what they do, not just what they know or what they have.

Keystroke Event Sequence Visualization:

========================================

Raw Keystrokes: "hello"

Key: h e l l o

| | | | |

▼ ▼ ▼ ▼ ▼

Press ●----●----●----●----●

| | | | |

Release ●----●----●----●----●

| | | | |

Time: 0 50 120 180 220 280 (ms)

Dwell Times (Press to Release):

h: 45ms e: 38ms l: 42ms l: 35ms o: 48ms

Flight Times (Release to Next Press):

h→e: 25ms e→l: 28ms l→l: 18ms l→o: 12ms

The key measurements include:

Dwell Time: How long you hold each key down. Some people are key-tappers (quick press-and-release), others are key-holders (longer pressure duration).

Flight Time: The interval between releasing one key and pressing the next. This reveals your natural typing rhythm and finger coordination patterns.

Pressure Dynamics: On pressure-sensitive keyboards, how hard you press keys. Nervous typists might press harder, while relaxed typists might have a lighter touch.

Typing Velocity: Not just words per minute, but the acceleration and deceleration patterns within words and sentences.
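
To make the first two measurements concrete, here is a minimal sketch of how dwell and flight times could be computed from press and release timestamps. The `KeyEvent` class and the timestamp values are illustrative assumptions, not part of any particular key-capture API.

```python
from dataclasses import dataclass

@dataclass
class KeyEvent:
    """Hypothetical record of one keystroke; times are in milliseconds."""
    key: str
    press_time: float
    release_time: float

def dwell_times(events):
    """Dwell time: how long each key was held down."""
    return [e.release_time - e.press_time for e in events]

def flight_times(events):
    """Flight time: gap between releasing one key and pressing the next.

    A negative value means the next key was pressed before the previous
    one was released (key overlap), which is common for fast typists.
    """
    return [nxt.press_time - cur.release_time
            for cur, nxt in zip(events, events[1:])]

# Made-up timestamps for the word "hello"
events = [
    KeyEvent("h", 0, 45),
    KeyEvent("e", 70, 108),
    KeyEvent("l", 136, 178),
    KeyEvent("l", 196, 231),
    KeyEvent("o", 243, 291),
]
print(dwell_times(events))   # [45, 38, 42, 35, 48]
print(flight_times(events))  # [25, 28, 18, 12]
```

Note that a sequence of n keystrokes yields n dwell times but only n - 1 flight times, which matters when the two are later combined into one feature matrix.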

Individual Typing Pattern Comparison:

====================================

User A (Fast Typer):

Dwell: ■■ (30ms avg) Flight: ■ (15ms avg)

Pattern: ●-●-●-●-●-●-●-● (Quick, consistent rhythm)

User B (Deliberate Typer):

Dwell: ■■■■ (65ms avg) Flight: ■■■ (45ms avg)

Pattern: ●---●---●---●--- (Slower, thoughtful pace)

User C (Variable Typer):

Dwell: ■■■ (50ms avg) Flight: ■■ (varies 10-80ms)

Pattern: ●--●-●----●--●- (Irregular, context-dependent)

The Deep Learning Architecture

Here’s where it gets interesting. Raw keystroke timing data is messy, high-dimensional, and full of noise. Traditional machine learning approaches often struggle with the temporal complexities and individual variations. Enter deep learning.

Data Flow Architecture Overview:

===============================

Raw Keystrokes → Feature Extraction → Model Training → Authentication

↓ ↓ ↓ ↓

[h][e][l][l] [Dwell Times] [CNN/RNN] [User/Imposter]

Time stamps → [Flight Times] → Training → Decision

Press/Release [Velocities] [Patterns] [Confidence]

Full Pipeline Visualization:

===========================

Input Layer: [●●●●●●●●●●] (Keystroke sequence)

↓

Feature Layer: [■■■■] (Temporal features)

↓ ↘

CNN Branch: [▲▲▲] → [▼] (Pattern detection)

↓ ↘

RNN Branch: [◆◆◆] → [♦] (Sequence modeling)

↓

Fusion Layer: [⬢] (Combined features)

↓

Output: [0.87] (Authentication score)

Convolutional Neural Networks (CNNs) for Pattern Recognition

CNNs excel at finding spatial patterns, and we can leverage this for keystroke analysis by treating typing sequences as 1D signals or converting them into 2D representations.

CNN Architecture for Keystroke Analysis:

=======================================

Input: Keystroke Sequence (100 timesteps × 4 features)

┌─────────────────────────────────────────────────┐

│ Dwell │■■□■■■□□■■■□■■□□■■■□■■■□□■■■□■■□□■■■□. │

│ Flight│□■■□□■■□■■□□■■□■■□□■■□■■□□■■□■■□□■■□. │

│ Press │■□■■□■□■■□■■□■□■■□■■□■□■■□■■□■□■■□■■. │

│ Velocity│□□■■■□□■■■□□■■■□□■■■□□■■■□□■■■□□■■■. │

└─────────────────────────────────────────────────┘

↓ Conv2D (32 filters, 3×1)

┌─────────────────────────────────────────────────┐

│ Feature Maps │

│ ▲▲▲▲ ▼▼▼▼ ◆◆◆◆ ●●●● ■■■■ □□□□ ★★★★ ☆☆☆☆ │

│ Filter responses detecting local patterns │

└─────────────────────────────────────────────────┘

↓ MaxPool + Conv2D (64 filters)

┌─────────────────────────────────────────────────┐

│ Higher-level Features │

│ ████ ▓▓▓▓ ░░░░ ▒▒▒▒ ■■■■ □□□□ │

│ Complex typing pattern detectors │

└─────────────────────────────────────────────────┘

↓ Global Average Pooling

[Feature Vector]

↓ Dense Layer

[Authentication Score: 0.92]

Pattern Recognition Examples:

============================

Filter 1: ■□■□■□ (Detects alternating dwell patterns)

Filter 2: ■■■□□□ (Detects burst typing followed by pause)

Filter 3: □■■■■□ (Detects acceleration patterns)

Filter 4: ■□□■□□ (Detects hesitation patterns)

Example CNN architecture for keystroke feature extraction:

import tensorflow as tf

def build_keystroke_cnn(sequence_length, num_features):
    model = tf.keras.Sequential([
        tf.keras.layers.Input(shape=(sequence_length, num_features)),
        # Add a channel axis so Conv2D can be applied; the (3, 1) kernels
        # slide along the time axis only, one feature column at a time
        tf.keras.layers.Reshape((sequence_length, num_features, 1)),

        # First convolutional block - captures local typing patterns
        tf.keras.layers.Conv2D(32, (3, 1), activation='relu', padding='same'),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.MaxPooling2D((2, 1)),

        # Second block - captures mid-level temporal patterns
        tf.keras.layers.Conv2D(64, (3, 1), activation='relu', padding='same'),
        tf.keras.layers.BatchNormalization(),
        tf.keras.layers.MaxPooling2D((2, 1)),

        # Third block - high-level feature extraction
        tf.keras.layers.Conv2D(128, (3, 1), activation='relu', padding='same'),
        tf.keras.layers.GlobalAveragePooling2D(),

        # Dense layers for classification
        tf.keras.layers.Dense(256, activation='relu'),
        tf.keras.layers.Dropout(0.3),
        tf.keras.layers.Dense(1, activation='sigmoid')  # Binary: authentic user or not
    ])
    return model

The CNN learns to identify characteristic patterns in your typing “signal.” Just like how it might recognize edges in images, it recognizes the distinctive timing signatures in your keystroke sequences.
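
To illustrate what a single convolutional filter does to a timing signal, the sketch below slides a hand-written kernel over a sequence of flight times in plain Python. The kernel weights and the sample data are made up for illustration; in the real model the weights are learned, not chosen by hand.

```python
def conv1d_valid(signal, kernel):
    """Slide `kernel` over `signal` (no padding) and return the dot products.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]

def relu(values):
    """Keep positive responses, zero out negative ones."""
    return [max(0.0, v) for v in values]

# Flight times in ms: steady typing with one long pause at index 4
flight = [20, 22, 19, 21, 95, 20, 18]

# Hand-picked "pause detector": responds when the middle value is much
# larger than its two neighbours
kernel = [-0.5, 1.0, -0.5]

response = relu(conv1d_valid(flight, kernel))
peak = max(range(len(response)), key=response.__getitem__)
print(response)
print("strongest response at window", peak, "-> flight index", peak + 1)
```

The filter stays near zero during steady typing and fires on the window centred on the pause, which is the kind of local timing signature a trained filter might come to represent.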

Recurrent Neural Networks (RNNs) for Temporal Modeling

While CNNs are great for pattern recognition, RNNs are specifically designed for sequential data. Typing is inherently temporal – the order and timing of keystrokes matter enormously.

RNN/LSTM Architecture for Keystroke Sequences:

==============================================

Keystroke Sequence: [k1] → [k2] → [k3] → [k4] → ... → [kn]

↓ ↓ ↓ ↓ ↓

LSTM Layer 1: [h1] → [h2] → [h3] → [h4] → ... → [hn]

↓ ↓ ↓ ↓ ↓

Memory States: [c1] [c2] [c3] [c4] ... [cn]

LSTM Layer 2: [h1'] → [h2'] → [h3'] → [h4'] → ... → [hn']

↓ ↓ ↓ ↓ ↓

Final Output: [Output]

↓

[Auth Score: 0.85]

LSTM Cell Internal Process:

==========================

Previous: h(t-1), c(t-1) Current Input: x(t)

↓ ↓

┌─────────────────────────────────────┐

│ Forget Gate: f(t) = σ(Wf·[h,x]+bf) │ ← Decides what to forget

│ Input Gate: i(t) = σ(Wi·[h,x]+bi) │ ← Decides what to update

│ Candidate: C̃(t) = tanh(Wc·[h,x]+bc) │ ← New candidate values

│ Output Gate: o(t) = σ(Wo·[h,x]+bo) │ ← Controls output

└─────────────────────────────────────┘

↓

Memory Update: c(t) = f(t)*c(t-1) + i(t)*C̃(t)

Hidden State: h(t) = o(t) * tanh(c(t))

Temporal Pattern Learning:

=========================

Time: t1 t2 t3 t4 t5 t6

Input: [●] [●] [●] [●] [●] [●]

Pattern: Fast→Fast→Slow→Fast→Fast→Slow

Memory: ■ ■■ ■■■ ■■ ■■■ ■■■■

↑ ↑ ↑ ↑ ↑ ↑

Learn Build Slow Reset Repeat Confirm

rhythm context pattern state rhythm pattern

Translating this kind of stacked LSTM architecture into Python (here with three LSTM layers):

def build_keystroke_rnn(sequence_length, num_features):
    model = tf.keras.Sequential([
        tf.keras.layers.Input(shape=(sequence_length, num_features)),

        # LSTM layers to capture typing rhythm and dependencies
        tf.keras.layers.LSTM(128, return_sequences=True, dropout=0.2),
        tf.keras.layers.LSTM(64, return_sequences=True, dropout=0.2),
        tf.keras.layers.LSTM(32, dropout=0.2),

        # Dense layers for user identification
        tf.keras.layers.Dense(128, activation='relu'),
        tf.keras.layers.Dropout(0.3),
        tf.keras.layers.Dense(64, activation='relu'),
        tf.keras.layers.Dense(1, activation='sigmoid')
    ])
    return model

The RNN maintains an internal memory of your typing history, learning that your unique pattern might be quick-quick-pause-quick for certain letter combinations, or that you consistently slow down before typing numbers.
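
The gate equations in the LSTM cell diagram can be traced by hand for a scalar cell. This is a minimal sketch with made-up weights (the `weights` dictionary is purely illustrative); a real layer uses learned weight matrices and vector-valued states.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def lstm_step(x_t, h_prev, c_prev, weights):
    """One step of a scalar LSTM cell.

    `weights` maps each gate name to (w_h, w_x, b), so W·[h, x] + b
    becomes w_h * h_prev + w_x * x_t + b.
    """
    def pre(gate):
        w_h, w_x, b = weights[gate]
        return w_h * h_prev + w_x * x_t + b

    f_t = sigmoid(pre("forget"))            # how much old memory to keep
    i_t = sigmoid(pre("input"))             # how much new information to write
    c_tilde = math.tanh(pre("candidate"))   # proposed new memory content
    o_t = sigmoid(pre("output"))            # how much memory to expose

    c_t = f_t * c_prev + i_t * c_tilde      # memory update
    h_t = o_t * math.tanh(c_t)              # new hidden state
    return h_t, c_t

# Made-up weights, for illustration only
weights = {
    "forget": (0.5, 0.1, 1.0),
    "input": (0.3, 0.8, 0.0),
    "candidate": (0.2, 1.0, 0.0),
    "output": (0.4, 0.6, 0.0),
}

# Feed a short sequence of normalized flight times through the cell
h, c = 0.0, 0.0
for x in [0.2, 0.1, 0.9, 0.2]:
    h, c = lstm_step(x, h, c, weights)
    print(f"x={x:.1f}  h={h:+.3f}  c={c:+.3f}")
```

Because the cell state `c` is carried from step to step, the output after the fourth keystroke still depends on the long pause at the third, which is how the layer retains rhythm across a sequence.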

Hybrid CNN-RNN Architecture

A hybrid approach combines both: CNNs extract local typing patterns, while RNNs model the temporal dependencies.

def build_hybrid_keystroke_model(sequence_length, num_features):
    # Input layer
    inputs = tf.keras.layers.Input(shape=(sequence_length, num_features))

    # CNN branch for pattern extraction
    cnn_branch = tf.keras.layers.Reshape((sequence_length, num_features, 1))(inputs)
    cnn_branch = tf.keras.layers.Conv2D(64, (3, 1), activation='relu', padding='same')(cnn_branch)
    cnn_branch = tf.keras.layers.MaxPooling2D((2, 1))(cnn_branch)
    cnn_branch = tf.keras.layers.Conv2D(32, (3, 1), activation='relu', padding='same')(cnn_branch)
    # Flatten the (time, feature) grid into one axis of 32-channel vectors
    cnn_branch = tf.keras.layers.Reshape((-1, 32))(cnn_branch)

    # RNN branch for temporal modeling
    rnn_branch = tf.keras.layers.LSTM(64, return_sequences=True)(inputs)
    rnn_branch = tf.keras.layers.LSTM(32)(rnn_branch)

    # Combine features
    combined = tf.keras.layers.Concatenate()([
        tf.keras.layers.GlobalAveragePooling1D()(cnn_branch),
        rnn_branch
    ])

    # Final classification
    outputs = tf.keras.layers.Dense(128, activation='relu')(combined)
    outputs = tf.keras.layers.Dropout(0.3)(outputs)
    outputs = tf.keras.layers.Dense(1, activation='sigmoid')(outputs)

    model = tf.keras.Model(inputs=inputs, outputs=outputs)
    return model

Practical Training Pipeline

Data Preprocessing

import numpy as np

def preprocess_keystroke_data(raw_data):
    """
    Convert raw keystroke events into feature vectors.

    Each session is a list of events with press_time and release_time.
    normalize_sequence and create_fixed_sequence are helper functions
    that must be supplied separately.
    """
    features = []
    for session in raw_data:
        # Calculate dwell times (press to release of the same key)
        dwell_times = [event.release_time - event.press_time for event in session]

        # Calculate flight times (release of one key to press of the next)
        flight_times = []
        for i in range(len(session) - 1):
            flight_time = session[i+1].press_time - session[i].release_time
            flight_times.append(flight_time)

        # Normalize to handle different typing speeds
        dwell_times = normalize_sequence(dwell_times)
        flight_times = normalize_sequence(flight_times)

        # Create fixed-length sequences
        feature_vector = create_fixed_sequence(dwell_times, flight_times)
        features.append(feature_vector)

    return np.array(features)
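
The preprocessing function above relies on two helpers, `normalize_sequence` and `create_fixed_sequence`, that are not defined in this article. One possible way to write them is sketched below, under the assumptions that normalization means per-session z-scoring and that each timestep holds a `[dwell, flight]` pair. Both choices are illustrative, not the only reasonable ones.

```python
from statistics import mean, pstdev

def normalize_sequence(values):
    """Z-score a list of timings so fast and slow sessions become comparable.

    Assumption: normalization is done per session. A constant or empty
    sequence maps to all zeros (or an empty list) to avoid dividing by zero.
    """
    if not values:
        return []
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return [0.0 for _ in values]
    return [(v - mu) / sigma for v in values]

def create_fixed_sequence(dwell_times, flight_times, length=100):
    """Pair dwell and flight times per keystroke, then pad or truncate.

    There is one fewer flight time than dwell times, so the last keystroke
    gets a flight value of 0.0. Short sessions are padded with [0.0, 0.0].
    Returns a list of `length` rows, each [dwell, flight].
    """
    flights = list(flight_times) + [0.0] * (len(dwell_times) - len(flight_times))
    rows = [[d, f] for d, f in zip(dwell_times, flights)]
    rows = rows[:length]
    rows += [[0.0, 0.0] for _ in range(length - len(rows))]
    return rows

dwell = normalize_sequence([45, 38, 42, 35, 48])
flight = normalize_sequence([25, 28, 18, 12])
sequence = create_fixed_sequence(dwell, flight, length=8)
print(len(sequence), len(sequence[0]))  # 8 2
```

With two features per timestep, a model would be built with `num_features=2`; a four-feature input would also need pressure and velocity columns.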

Model Training Strategy

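The key insight is to frame this as verification rather than multi-user identification. You’re not trying to recognize every possible user – you’re trying to recognize when someone is NOT the authenticated user. The training code below approximates this with a binary classifier trained on the legitimate user’s samples and on impostor samples.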

# Training approach
import numpy as np
from sklearn.model_selection import train_test_split

def train_keystroke_authenticator(user_data, imposter_data):
    # Assumes both inputs are preprocessed arrays of shape (n, 100, 4);
    # label 1 = legitimate user, label 0 = impostor
    features = np.concatenate([user_data, imposter_data])
    labels = np.concatenate([np.ones(len(user_data)), np.zeros(len(imposter_data))])

    # Combined CNN and RNN model
    model = build_hybrid_keystroke_model(sequence_length=100, num_features=4)

    # Use focal loss to handle class imbalance
    model.compile(
        optimizer='adam',
        loss=tf.keras.losses.BinaryFocalCrossentropy(),  # Better for imbalanced data
        metrics=['accuracy', tf.keras.metrics.Precision(), tf.keras.metrics.Recall()]
    )

    # Hold out 20% for validation, keeping the class ratio equal in both splits
    X_train, X_val, y_train, y_val = train_test_split(
        features, labels, test_size=0.2, stratify=labels
    )

    # Use callbacks for adaptive training
    callbacks = [
        tf.keras.callbacks.EarlyStopping(patience=10),
        tf.keras.callbacks.ReduceLROnPlateau(factor=0.5, patience=5),
        tf.keras.callbacks.ModelCheckpoint('best_model.h5', save_best_only=True)
    ]

    model.fit(X_train, y_train,
              validation_data=(X_val, y_val),
              epochs=100,
              batch_size=32,
              callbacks=callbacks)

    return model

Deployment and Monitoring

Real-time Inference

In production, the system makes continuous authentication decisions:

from collections import deque
import numpy as np

class KeystrokeAuthenticator:
    def __init__(self, model_path, window_size=100):
        self.model = tf.keras.models.load_model(model_path)
        # window_size must equal the sequence_length the model was trained with
        self.window_size = window_size
        # deque(maxlen=...) drops the oldest keystroke automatically
        self.keystroke_buffer = deque(maxlen=window_size)
        self.confidence_threshold = 0.7

    def extract_features(self, events):
        # Placeholder: must return an array of shape (window_size, num_features)
        # built the same way as the training features
        raise NotImplementedError

    def process_keystroke(self, keystroke_event):
        # Add to rolling buffer
        self.keystroke_buffer.append(keystroke_event)

        # Wait until the window is full
        if len(self.keystroke_buffer) < self.window_size:
            return "INSUFFICIENT_DATA"

        features = self.extract_features(list(self.keystroke_buffer))
        # Add a batch axis: (window_size, num_features) -> (1, window_size, num_features)
        batch = np.expand_dims(features, axis=0)
        confidence = float(self.model.predict(batch, verbose=0)[0][0])

        if confidence < self.confidence_threshold:
            return "AUTHENTICATION_FAILED"
        return "AUTHENTICATED"

Adaptive Thresholds

Static thresholds don’t work well for behavioral biometrics. Users change, systems change, and context matters. Implement dynamic thresholds that adapt based on:

- Recent authentication success rates

- Time of day and day of week patterns

- Keyboard/device context

- User feedback (if available)
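
As one illustration of the first factor, a threshold can track the user's own recent scores. The sketch below keeps an exponential moving average of the scores from accepted windows and places the threshold a fixed margin below it, clamped to a safe range. The class name, the margin, and the bounds are assumptions chosen for illustration and would need tuning on real data.

```python
class AdaptiveThreshold:
    """Threshold that follows an exponential moving average of accepted scores."""

    def __init__(self, initial=0.7, margin=0.15, alpha=0.1,
                 floor=0.5, ceiling=0.9):
        self.margin = margin      # how far below the average score to sit
        self.alpha = alpha        # EMA smoothing factor (higher = adapts faster)
        self.floor = floor        # never become more permissive than this
        self.ceiling = ceiling    # never become stricter than this
        self.avg_score = initial + margin
        self.threshold = initial

    def check(self, score):
        """Return True if `score` passes; update only on accepted scores.

        Rejected scores are ignored so that an impostor cannot drag the
        threshold down simply by typing for a while.
        """
        accepted = score >= self.threshold
        if accepted:
            self.avg_score = (1 - self.alpha) * self.avg_score + self.alpha * score
            raw = self.avg_score - self.margin
            self.threshold = min(self.ceiling, max(self.floor, raw))
        return accepted

gate = AdaptiveThreshold()
for s in [0.95, 0.93, 0.96, 0.60, 0.94]:
    print(s, gate.check(s), round(gate.threshold, 3))
```

The floor and ceiling are the important design choice here: without them, a slow drift in scores could make the check either meaningless or impossible to pass.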

Running the Experiment

To try this out, collect typing sessions from the legitimate user typing normally and from an impostor (or the same person deliberately typing differently), then train and run the model on them. Both branches are active during training and during inference: the CNN branch extracts local timing patterns, while the RNN branch models the longer temporal sequence. The model outputs a score between 0 and 1, which the threshold turns into a genuine-or-impostor decision.

Note: This is a simplified prototype. In production, you’d need a larger dataset, robust preprocessing, and ethical considerations like user consent.

Real-World Implementation Challenges

Data Collection and Privacy

Keystroke dynamics requires continuous monitoring of user input, raising significant privacy concerns. You’re essentially logging everything someone types. The solution involves:

- On-device processing: Raw keystroke data never leaves the user’s machine

- Feature extraction only: Store timing patterns, not actual keystrokes

- Differential privacy: Add controlled noise to protect individual typing patterns

- User consent and transparency: Clear communication about what’s being monitored
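
As a rough illustration of the differential privacy bullet, the sketch below adds Laplace noise to a vector of timing features before it is stored. The `scale_ms` value is an arbitrary assumption; choosing a scale that yields a formal privacy guarantee requires a sensitivity analysis that is outside the scope of this example.

```python
import random

def laplace_noise(scale, rng):
    """Draw one sample from a zero-mean Laplace distribution.

    The difference of two independent exponential variables with mean
    `scale` follows Laplace(0, scale).
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def privatize_timings(timings_ms, scale_ms=5.0, seed=None):
    """Return a noisy copy of `timings_ms`; the input list is not modified."""
    rng = random.Random(seed)
    return [t + laplace_noise(scale_ms, rng) for t in timings_ms]

dwell = [45.0, 38.0, 42.0, 35.0, 48.0]
noisy = privatize_timings(dwell, scale_ms=5.0, seed=42)
print([round(v, 1) for v in noisy])
```

More noise protects the individual pattern better but also erodes the signal the model depends on, so the scale is a trade-off between privacy and authentication accuracy.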

Handling Variability

People don’t type consistently. You type differently when you’re tired, stressed, using a different keyboard, or even sitting in a different position. Successful systems must account for:

- Contextual adaptation: Different models for different scenarios (laptop vs desktop keyboard, morning vs evening typing)

- Continuous learning: Models that adapt to gradual changes in typing patterns

- Confidence scoring: Sometimes it’s better to say “I’m not sure” than to make a wrong authentication decision
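
The confidence scoring idea can be sketched as a three-way decision with an explicit "uncertain" band between two thresholds. The threshold values are assumptions for illustration.

```python
def decide(score, reject_below=0.4, accept_above=0.7):
    """Map a model score in [0, 1] to one of three outcomes.

    Scores between the two thresholds are treated as uncertain, which
    lets the system ask for a second factor instead of guessing.
    """
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    if score >= accept_above:
        return "ACCEPT"
    if score < reject_below:
        return "REJECT"
    return "UNCERTAIN"

for s in (0.92, 0.55, 0.18):
    print(s, decide(s))
```

An "UNCERTAIN" result could, for example, trigger a password re-prompt while leaving the session open, which is less disruptive than an outright lockout.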

Performance Requirements

Continuous authentication must be:

- Fast: Sub-second decision making

- Resource-efficient: Can’t drain battery or slow down the system

- Accurate: A low false rejection rate (don’t lock out legitimate users) and a low false acceptance rate (don’t let attackers through)
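
Both error rates can be computed directly from model scores. The sketch below uses the biometric terms false rejection rate (FRR, genuine users locked out) and false acceptance rate (FAR, impostors let through), and approximates the equal error rate (EER) by scanning thresholds. The score lists are made up for illustration.

```python
def far_frr(genuine_scores, impostor_scores, threshold):
    """Return (FAR, FRR) when scores >= threshold are accepted."""
    false_accepts = sum(s >= threshold for s in impostor_scores)
    false_rejects = sum(s < threshold for s in genuine_scores)
    return (false_accepts / len(impostor_scores),
            false_rejects / len(genuine_scores))

def approximate_eer(genuine_scores, impostor_scores, steps=100):
    """Scan thresholds in [0, 1] and return (threshold, EER estimate).

    The estimate is the mean of FAR and FRR at the threshold where the
    two rates are closest to each other.
    """
    best = None
    for k in range(steps + 1):
        t = k / steps
        far, frr = far_frr(genuine_scores, impostor_scores, t)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, t, (far + frr) / 2)
    return best[1], best[2]

# Made-up scores for illustration
genuine = [0.91, 0.85, 0.78, 0.88, 0.62, 0.95, 0.81, 0.73]
impostor = [0.12, 0.35, 0.48, 0.22, 0.66, 0.30, 0.41, 0.19]

print(far_frr(genuine, impostor, threshold=0.7))
print(approximate_eer(genuine, impostor))
```

Raising the threshold trades FAR for FRR, so the operating point should be chosen from the cost of each error type rather than from accuracy alone.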

Conclusion

Keystroke dynamics offers a powerful, user-friendly approach to continuous authentication. By leveraging the unique patterns in typing behavior, it provides seamless, real-time identity verification. The performance of such systems is typically evaluated with DET and ROC curves and summarized by the equal error rate (EER).

The hybrid CNN-RNN implementation shows how deep learning can support this technology: the CNN branch extracts local timing features and the RNN branch models longer-range temporal dynamics, and both are used together in training and in continuous authentication. Working through this exercise gives you hands-on insight into building such a system and a starting point for further experiments. As cyber threats evolve, techniques like this are a useful addition to the security toolkit.