Introduction

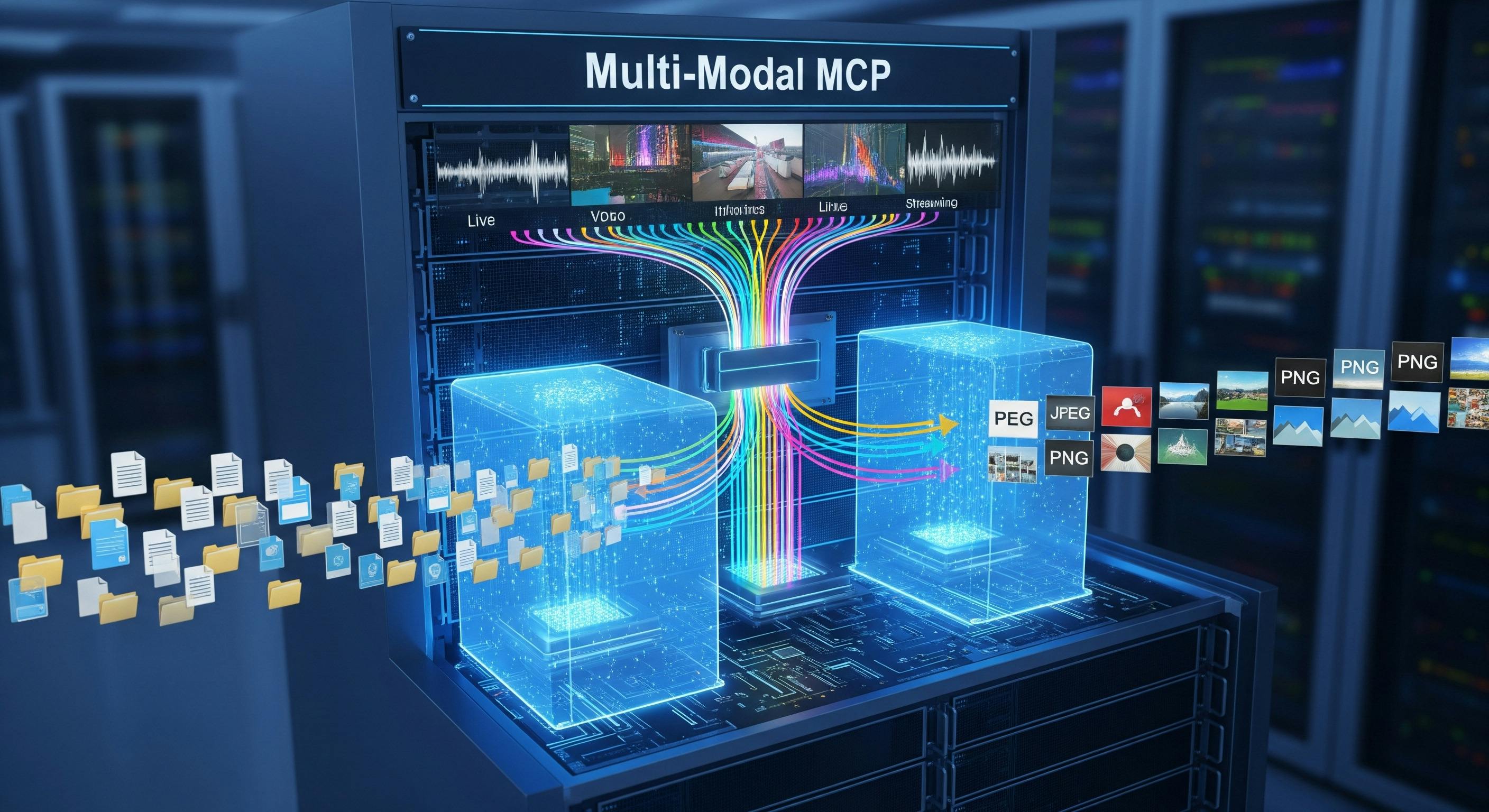

The Model Context Protocol (MCP) represents a standardized approach to enabling communication between AI language models and external data sources through structured server implementations. Current MCP implementations predominantly focus on text-based request-response interactions, limiting their applicability to scenarios requiring rich media handling and real-time data processing capabilities.

The increasing demand for AI systems capable of processing diverse data modalities—including binary files, image data, audio streams, and real-time sensor inputs—necessitates the extension of MCP server architectures beyond traditional text-only paradigms. Contemporary applications require seamless integration of multi-modal data streams within AI workflows, presenting significant technical challenges in memory management, network efficiency, and protocol adaptation.

This article presents a comprehensive analysis of multi-modal MCP server implementation patterns, addressing the technical requirements for handling file uploads, image processing, and streaming data within the constraints of the MCP specification. The investigation covers memory-efficient processing strategies, network optimization techniques, and the security considerations essential for enterprise-scale deployments.

The primary contributions of this work are architectural patterns for multi-modal data handling in MCP servers, memory-efficient streaming algorithms, and best practices for integrating cloud storage services with MCP infrastructures. Additionally, this article analyzes performance characteristics across different data handling approaches and presents code implementations intended as a starting point for production environments.

The scope of this investigation covers three primary areas: static file handling mechanisms for document and media uploads, real-time image processing capabilities with computer vision integration, and streaming data architectures for continuous data flow management. Each area addresses specific technical challenges including protocol compliance, resource optimization, and scalability considerations.

Understanding Multi-Modal Data in MCP Context

A. Types of Multi-Modal Data

Multi-modal data in MCP server implementations includes three primary categories, each presenting distinct technical challenges for protocol integration. Static files constitute the first category, including document formats (PDF, DOCX, TXT), image files (JPEG, PNG, TIFF), audio recordings (WAV, MP3, FLAC), and video content (MP4, AVI, MOV). These files typically range from kilobytes to gigabytes in size and require a complete transfer before processing can start.

Streaming data represents the second category, characterized by continuous data flow requiring real-time processing capabilities. This includes live video feeds, audio streams from microphones or network sources, sensor data from IoT devices, and real-time telemetry information. Streaming data presents unique challenges due to its temporal nature and the requirement for low-latency processing.

Hybrid content forms the third category, including rich documents with embedded media, interactive presentations, and complex data structures combining multiple modalities. These formats require specialized parsing capabilities and often involve extracting and processing individual components separately while maintaining structural relationships.

B. Challenges in Multi-Modal MCP Implementation

Memory management constitutes the primary technical challenge in multi-modal MCP implementations. Large file processing can rapidly exhaust available system memory, particularly when multiple concurrent requests involve high-resolution images or extended audio/video content. Traditional MCP message structures, designed for text-based interactions, require careful adaptation to handle binary data without compromising system stability.

Network efficiency presents significant concerns when transmitting large binary payloads through MCP channels. The protocol’s JSON-based message format introduces encoding overhead, particularly when using Base64 encoding for binary data, resulting in approximately 33% increase in transmission size. Additionally, network latency and bandwidth limitations affect user experience when dealing with large file transfers or real-time streaming scenarios.
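The overhead figure follows from Base64 mapping every 3 input bytes to 4 output characters. The following is a minimal sketch using only the Python standard library; the helper name `base64_overhead` is illustrative and not part of any MCP SDK.

```python
import base64
import os


def base64_overhead(payload: bytes) -> float:
    """Return the encoded size divided by the raw size for a payload."""
    encoded = base64.b64encode(payload)
    return len(encoded) / len(payload)


# 3 MiB of random bytes encodes to exactly 4 MiB of ASCII text.
raw = os.urandom(3 * 1024 * 1024)
ratio = base64_overhead(raw)
print(f"raw={len(raw)} bytes, ratio={ratio:.3f}")
```

JSON string escaping and message framing add a little more on top of this ratio, so the measured overhead on the wire is slightly higher than the encoding alone suggests.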

Protocol compliance challenges arise from MCP’s original design assumptions regarding message structure and size constraints. The specification defines Base64-encoded image content and blob resource contents, but it does not prescribe how to chunk, stream, or size-limit large binary payloads, so implementers must develop their own transfer strategies while maintaining compatibility with existing MCP clients and infrastructure.

Security vulnerabilities increase substantially when handling multi-modal data. File uploads introduce risks, including malware injection, directory traversal attacks, and resource exhaustion through oversized uploads. Image processing libraries may contain vulnerabilities exploitable through crafted input files, while streaming data can be leveraged for denial-of-service attacks through continuous high-volume transmissions.
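Directory traversal is the easiest of these risks to illustrate. The sketch below shows one way to confine client-supplied filenames to an upload directory; the function name `resolve_upload_path` is hypothetical, and a real deployment would combine it with size limits and content scanning.

```python
from pathlib import Path


def resolve_upload_path(upload_root: Path, client_filename: str) -> Path:
    """Map a client-supplied filename to a path inside upload_root, or raise."""
    # Keep only the final path component, discarding any directories.
    name = Path(client_filename.replace("\\", "/")).name
    if name in ("", ".", ".."):
        raise ValueError(f"Unusable filename: {client_filename!r}")
    root = upload_root.resolve()
    candidate = (root / name).resolve()
    # Defence in depth: the resolved path must still sit under the root.
    if root not in candidate.parents:
        raise ValueError("Path escapes the upload directory")
    return candidate
```

A name such as `../../etc/passwd` is reduced to `passwd` inside the upload root, while a bare `..` is rejected outright.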

C. Architecture Patterns Overview

Direct embedding approaches involve incorporating binary data directly within MCP message payloads using encoding schemes such as Base64 or hexadecimal representation. This pattern ensures atomic message handling but introduces significant overhead in terms of message size and processing requirements. Direct embedding proves suitable for small files (typically under 1MB) where simplicity outweighs efficiency concerns.

Reference-based approaches utilize external storage mechanisms, transmitting only metadata and access tokens through MCP messages. Binary data resides in cloud storage services, local file systems, or dedicated media servers, while MCP messages contain references enabling retrieval. This pattern optimizes network utilization and message processing speed but introduces complexity in reference management and potential consistency issues.
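The choice between the two patterns can be reduced to a size threshold. The following sketch assumes an in-memory dictionary standing in for external storage and a made-up `blob://` reference scheme; `Payload`, `build_payload`, and `INLINE_LIMIT` are illustrative names only.

```python
import base64
import hashlib
from dataclasses import dataclass

INLINE_LIMIT = 1 * 1024 * 1024  # the "under 1MB" guideline from the text


@dataclass
class Payload:
    mode: str  # "inline" or "reference"
    body: str  # Base64 text, or a reference to externally stored data
    size: int  # raw size in bytes


def build_payload(data: bytes, store: dict[str, bytes]) -> Payload:
    """Embed small data directly; store large data and send a reference."""
    if len(data) < INLINE_LIMIT:
        return Payload("inline", base64.b64encode(data).decode("ascii"), len(data))
    # Content-addressed key, so identical uploads share one stored object.
    key = hashlib.sha256(data).hexdigest()
    store[key] = data
    return Payload("reference", f"blob://{key}", len(data))
```

In a real system the dictionary would be replaced by object storage or a media server, and the reference would carry an access token with an expiry.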

Synchronous processing models complete all data processing operations within the request-response cycle, ensuring immediate result availability but potentially causing timeout issues with large files or complex processing tasks. This approach provides predictable behavior and simplified error handling but may not scale effectively for resource-intensive operations.

Asynchronous processing models initiate processing operations and return immediately with status information, requiring separate mechanisms for result retrieval. This pattern enables handling of large files and complex processing workflows without blocking client interactions but introduces complexity in state management and result notification systems.
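A minimal sketch of the asynchronous model, assuming a polling client: work is submitted, an identifier is returned immediately, and the status is queried later. `JobRegistry` and `slow_checksum` are hypothetical names, and the job table lives in memory only.

```python
import asyncio
import uuid


class JobRegistry:
    """Tracks background jobs so a client can poll for results."""

    def __init__(self) -> None:
        self._jobs: dict[str, asyncio.Task] = {}

    def submit(self, coro) -> str:
        # Must be called from within a running event loop.
        job_id = uuid.uuid4().hex
        self._jobs[job_id] = asyncio.create_task(coro)
        return job_id

    def status(self, job_id: str) -> dict:
        task = self._jobs.get(job_id)
        if task is None:
            return {"state": "unknown"}
        if not task.done():
            return {"state": "running"}
        if task.cancelled():
            return {"state": "cancelled"}
        if task.exception() is not None:
            return {"state": "failed", "error": str(task.exception())}
        return {"state": "done", "result": task.result()}


async def slow_checksum(data: bytes) -> int:
    await asyncio.sleep(0.01)  # stands in for expensive processing
    return sum(data) % 256


async def demo() -> tuple[dict, dict]:
    registry = JobRegistry()
    job_id = registry.submit(slow_checksum(b"\x01\x02\x03"))
    first = registry.status(job_id)  # available immediately
    await asyncio.sleep(0.05)
    second = registry.status(job_id)  # polled after the job finished
    return first, second
```

Exposed through MCP, `submit` and `status` would typically become two separate tools, with progress notifications as an optional alternative to polling.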

Streaming transfer strategies process data incrementally as it becomes available, enabling real-time processing and reduced memory requirements. Chunked transfer strategies divide large files into manageable segments, processing each independently while maintaining overall operation coherence. Both approaches require sophisticated buffering and flow control mechanisms to ensure reliable operation under varying network conditions.
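The chunked strategy can be sketched independently of any transport. The example below splits a payload into indexed segments and reassembles them regardless of arrival order; the function names are illustrative.

```python
from typing import Iterator


def split_chunks(data: bytes, chunk_size: int) -> Iterator[tuple[int, bytes]]:
    """Yield (index, segment) pairs covering the whole payload."""
    for index, offset in enumerate(range(0, len(data), chunk_size)):
        yield index, data[offset:offset + chunk_size]


def reassemble(chunks: dict[int, bytes], total_chunks: int) -> bytes:
    """Join chunks by index, failing loudly if any segment is absent."""
    missing = [i for i in range(total_chunks) if i not in chunks]
    if missing:
        raise ValueError(f"Missing chunks: {missing}")
    return b"".join(chunks[i] for i in range(total_chunks))
```

Because each segment carries its index, the receiver can accept chunks out of order and request retransmission of only the missing ones.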

File Handling in MCP Servers

File handling in MCP servers requires careful integration of binary data processing capabilities within the protocol’s JSON-based message structure. The Model Context Protocol SDK provides standardized patterns for implementing file operations through tools and resources, enabling secure and efficient handling of various file types while maintaining protocol compliance.

A. Basic File Upload Implementation

The fundamental file upload mechanism utilizes MCP tools to process Base64-encoded binary data. The implementation wraps an MCP Server instance and handles file operations through registered tools:

    import base64
    import hashlib
    import json
    from pathlib import Path

    from mcp.server import Server
    from mcp.types import Tool, TextContent


    class FileUploadMCPServer:
        def __init__(self, upload_directory: Path, max_file_size: int = 50 * 1024 * 1024):
            self.upload_directory = upload_directory
            self.max_file_size = max_file_size
            self.upload_directory.mkdir(parents=True, exist_ok=True)
            self.server = Server("file-upload-server")
            self._register_tools()

        def _register_tools(self):
            @self.server.list_tools()
            async def list_tools() -> list[Tool]:
                return [Tool(
                    name="upload_file",
                    description="Upload file with Base64-encoded binary content",
                    inputSchema={
                        "type": "object",
                        "properties": {
                            "file_data": {"type": "string", "description": "Base64-encoded file content"},
                            "filename": {"type": "string", "description": "Original filename"},
                            "mime_type": {"type": "string", "description": "File MIME type"}
                        },
                        "required": ["file_data", "filename"]
                    }
                )]

            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict):
                if name != "upload_file":
                    raise ValueError(f"Unknown tool: {name}")
                result = await self._process_upload(arguments)
                # Serialize as JSON so clients can parse the result reliably
                return [TextContent(type="text", text=json.dumps(result))]

        async def _process_upload(self, args: dict) -> dict:
            try:
                # Reject oversized payloads before decoding (Base64 is ~4/3 of raw size)
                if len(args["file_data"]) > self.max_file_size * 4 // 3 + 4:
                    return {"status": "error", "message": "File size exceeds limit"}
                binary_data = base64.b64decode(args["file_data"], validate=True)
                if len(binary_data) > self.max_file_size:
                    return {"status": "error", "message": "File size exceeds limit"}
                file_hash = hashlib.sha256(binary_data).hexdigest()[:16]
                # Keep only the final path component to block directory traversal
                base_name = Path(args["filename"]).name
                if not base_name:
                    return {"status": "error", "message": "Invalid filename"}
                safe_filename = f"{file_hash}_{base_name}"
                file_path = self.upload_directory / safe_filename
                file_path.write_bytes(binary_data)
                return {
                    "status": "success",
                    "file_uri": f"file:///{safe_filename}",
                    "size": len(binary_data),
                    "hash": file_hash
                }
            except Exception as e:
                return {"status": "error", "message": str(e)}

B. Chunked Upload Management

Large file processing necessitates chunked transfer mechanisms to prevent memory exhaustion and enable reliable transmission. The chunked upload implementation manages upload sessions through stateful MCP tools:

    import base64
    import json
    import secrets
    import tempfile
    from pathlib import Path

    from mcp.server import Server
    from mcp.types import Tool, TextContent


    class ChunkedUploadServer:
        def __init__(self, upload_dir: Path, chunk_size: int = 4 * 1024 * 1024):
            self.upload_dir = upload_dir
            self.chunk_size = chunk_size
            self.active_sessions = {}
            self.server = Server("chunked-upload-server")
            self._register_chunked_tools()

        def _register_chunked_tools(self):
            @self.server.list_tools()
            async def list_tools() -> list[Tool]:
                return [
                    Tool(name="init_chunked_upload", description="Initialize chunked upload session",
                         inputSchema={"type": "object", "properties": {
                             "filename": {"type": "string"},
                             "total_size": {"type": "integer"},
                             "total_chunks": {"type": "integer"}
                         }, "required": ["filename", "total_chunks"]}),
                    Tool(name="upload_chunk", description="Upload single file chunk",
                         inputSchema={"type": "object", "properties": {
                             "session_id": {"type": "string"},
                             "chunk_index": {"type": "integer"},
                             "chunk_data": {"type": "string"}
                         }, "required": ["session_id", "chunk_index", "chunk_data"]}),
                    Tool(name="finalize_upload", description="Complete chunked upload",
                         inputSchema={"type": "object", "properties": {
                             "session_id": {"type": "string"}
                         }, "required": ["session_id"]})
                ]

            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict):
                if name == "init_chunked_upload":
                    # Random, unguessable session identifier
                    session_id = secrets.token_hex(16)
                    temp_file = tempfile.NamedTemporaryFile(dir=self.upload_dir, delete=False)
                    self.active_sessions[session_id] = {
                        # Keep only the final path component to block directory traversal
                        "filename": Path(arguments["filename"]).name,
                        "total_chunks": arguments["total_chunks"],
                        "received_chunks": set(),
                        "temp_file": temp_file.name
                    }
                    temp_file.close()
                    result = {"session_id": session_id}
                elif name == "upload_chunk":
                    result = await self._process_chunk(arguments)
                elif name == "finalize_upload":
                    result = await self._finalize_session(arguments["session_id"])
                else:
                    raise ValueError(f"Unknown tool: {name}")
                return [TextContent(type="text", text=json.dumps(result))]

        async def _process_chunk(self, args: dict) -> dict:
            session_id = args["session_id"]
            if session_id not in self.active_sessions:
                return {"status": "error", "message": "Invalid session"}
            session = self.active_sessions[session_id]
            chunk_index = args["chunk_index"]
            if not 0 <= chunk_index < session["total_chunks"]:
                return {"status": "error", "message": "Chunk index out of range"}
            chunk_data = base64.b64decode(args["chunk_data"], validate=True)
            if len(chunk_data) > self.chunk_size:
                return {"status": "error", "message": "Chunk exceeds chunk size"}
            # Every chunk except the last must be exactly chunk_size bytes,
            # otherwise the offsets computed here leave gaps in the file
            with open(session["temp_file"], "r+b") as f:
                f.seek(chunk_index * self.chunk_size)
                f.write(chunk_data)
            session["received_chunks"].add(chunk_index)
            remaining = session["total_chunks"] - len(session["received_chunks"])
            return {"status": "chunk_received", "remaining_chunks": remaining}

        async def _finalize_session(self, session_id: str) -> dict:
            if session_id not in self.active_sessions:
                return {"status": "error", "message": "Invalid session"}
            session = self.active_sessions[session_id]
            if len(session["received_chunks"]) != session["total_chunks"]:
                return {"status": "error", "message": "Missing chunks"}
            final_path = self.upload_dir / session["filename"]
            Path(session["temp_file"]).rename(final_path)
            del self.active_sessions[session_id]
            return {"status": "completed", "file_uri": f"file:///{session['filename']}"}

C. File Validation and Storage Integration

Comprehensive file validation incorporates MIME type detection and signature verification. The validation server implements security checks through MCP tools:

    from pathlib import Path

    import magic  # provided by the python-magic package
    from mcp.server import Server
    from mcp.types import TextContent


    class FileValidationServer:
        ALLOWED_TYPES = {'image/jpeg', 'image/png', 'application/pdf', 'text/plain'}

        def __init__(self):
            self.server = Server("file-validation-server")
            self._register_validation_tools()

        def _register_validation_tools(self):
            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict):
                if name == "validate_file":
                    return [TextContent(type="text", text=str(await self._validate_file(arguments["file_uri"])))]
                raise ValueError(f"Unknown tool: {name}")

        async def _validate_file(self, file_uri: str) -> dict:
            try:
                # Strip the file:/// prefix and keep only the final path
                # component so the URI cannot point outside the uploads directory
                file_path = Path("uploads") / Path(file_uri.removeprefix("file:///")).name
                mime_type = magic.from_file(str(file_path), mime=True)
                if mime_type not in self.ALLOWED_TYPES:
                    return {"valid": False, "reason": "Unsupported file type"}
                # Signature verification
                with file_path.open('rb') as f:
                    header = f.read(512)
                if not self._verify_signature(header, mime_type):
                    return {"valid": False, "reason": "Invalid file signature"}
                return {"valid": True, "mime_type": mime_type, "size": file_path.stat().st_size}
            except Exception as e:
                return {"valid": False, "reason": str(e)}

        def _verify_signature(self, header: bytes, mime_type: str) -> bool:
            # Magic numbers that must appear at the start of each file type
            signatures = {
                'image/jpeg': b'\xff\xd8\xff',
                'image/png': b'\x89PNG\r\n\x1a\n',
                'application/pdf': b'%PDF-'
            }
            expected_sig = signatures.get(mime_type)
            # Types without a known signature (e.g. text/plain) pass this check
            return header.startswith(expected_sig) if expected_sig else True

Cloud storage integration enables scalable file persistence through S3 services:

    import asyncio
    from pathlib import Path

    import boto3
    from mcp.server import Server
    from mcp.types import TextContent


    class S3StorageServer:
        def __init__(self, bucket_name: str):
            self.bucket_name = bucket_name
            self.s3_client = boto3.client('s3')
            self.server = Server("s3-storage-server")
            self._register_storage_tools()

        def _register_storage_tools(self):
            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict):
                if name == "upload_to_s3":
                    return [TextContent(type="text", text=str(await self._upload_to_s3(arguments)))]
                raise ValueError(f"Unknown tool: {name}")

        async def _upload_to_s3(self, args: dict) -> dict:
            try:
                # Keep only the final path component to block directory traversal
                file_path = Path("uploads") / Path(args["filename"]).name
                # boto3 is blocking, so run the upload in a worker thread
                # to avoid stalling the event loop
                await asyncio.to_thread(
                    self.s3_client.upload_file,
                    str(file_path), self.bucket_name, args["s3_key"]
                )
                return {
                    "status": "success",
                    "s3_uri": f"s3://{self.bucket_name}/{args['s3_key']}",
                    "bucket": self.bucket_name
                }
            except Exception as e:
                return {"status": "error", "message": str(e)}

The presented implementations demonstrate file handling patterns for MCP servers that incorporate security validation, memory-conscious processing, and scalable storage integration. Hardened further for a given deployment, these patterns support reliable file operations while maintaining compliance with the MCP specification and enabling integration with cloud infrastructure services.

Image Processing in MCP Servers

A. Image Data Encoding Strategies

The transmission of image data through the Model Context Protocol (MCP) framework necessitates careful consideration of encoding methodologies to optimize both performance and compatibility. The MCP SDK provides multiple approaches for handling image data within the protocol’s message structure, each presenting distinct advantages and limitations.

Base64 encoding represents the most widely adopted approach for embedding binary image data within JSON message payloads. This encoding method ensures compatibility with standard JSON parsers while maintaining data integrity during transmission. However, Base64 encoding introduces a 33% overhead in data size, which can significantly impact performance when processing large images or high-frequency image streams:

    import base64

    from mcp import types
    from mcp.server import Server


    class ImageProcessingServer:
        def __init__(self):
            self.server = Server("image-processor")

        async def handle_base64_image(
            self,
            image_data: str,
            content_type: str = "image/jpeg"
        ) -> types.TextContent:
            """Process Base64-encoded image data."""
            try:
                # Decode Base64 image data, rejecting invalid characters
                binary_data = base64.b64decode(image_data, validate=True)
                # Process image using decoded binary data
                await self._process_image_binary(binary_data, content_type)
                return types.TextContent(
                    type="text",
                    text=f"Image processed successfully. Size: {len(binary_data)} bytes"
                )
            except Exception as e:
                return types.TextContent(
                    type="text",
                    text=f"Image processing failed: {str(e)}"
                )

        async def _process_image_binary(self, binary_data: bytes, content_type: str) -> bytes:
            """Placeholder for the actual processing step, which the listing omits."""
            return binary_data

Binary data handling through MCP resources provides an alternative approach that keeps large payloads out of tool arguments. Blob resource contents are still Base64-encoded within the JSON message, so this approach does not remove the encoding overhead, but it lets clients fetch binary data on demand through resource URIs while maintaining protocol compliance.

External reference patterns offer optimal network efficiency by transmitting only resource identifiers rather than complete image data. This methodology proves particularly advantageous in distributed systems where image storage and processing occur across multiple nodes:

    from typing import Optional

    import aiohttp
    from mcp.server import Server


    class ExternalImageHandler:
        def __init__(self, server: Server):
            self.server = server
            self.session: Optional[aiohttp.ClientSession] = None

        async def initialize_session(self):
            """Initialize HTTP session for external image fetching."""
            self.session = aiohttp.ClientSession()

        async def fetch_external_image(self, image_uri: str) -> bytes:
            """Fetch image data from external URI."""
            if self.session is None:
                await self.initialize_session()
            async with self.session.get(image_uri) as response:
                if response.status == 200:
                    return await response.read()
                raise ValueError(f"Failed to fetch image: HTTP {response.status}")

        async def close(self):
            """Close the HTTP session so connections are not leaked."""
            if self.session is not None:
                await self.session.close()
                self.session = None

B. Real-Time Image Processing

Real-time image processing within MCP servers requires efficient handling of continuous image streams while maintaining low latency and high throughput. The MCP SDK’s asynchronous architecture enables non-blocking image processing operations that can handle multiple concurrent image processing requests.

Image resizing and format conversion represent fundamental operations in real-time image processing pipelines. The implementation leverages Python’s PIL (Pillow) library in conjunction with the MCP SDK’s message handling capabilities:

    import asyncio
    import base64
    import io

    from PIL import Image
    from mcp.server import Server
    from mcp.types import ImageContent, TextContent, Tool


    class RealTimeImageProcessor:
        def __init__(self):
            self.server = Server("realtime-image-processor")
            self.processing_queue = asyncio.Queue(maxsize=100)
            self.register_tools()

        def register_tools(self):
            """Register image processing tools with MCP server."""
            resize_tool = Tool(
                name="resize_image",
                description="Resize image to specified dimensions",
                inputSchema={
                    "type": "object",
                    "properties": {
                        "image_data": {"type": "string", "description": "Base64 encoded image"},
                        "width": {"type": "integer", "minimum": 1},
                        "height": {"type": "integer", "minimum": 1},
                        "format": {"type": "string", "enum": ["JPEG", "PNG", "WEBP"]}
                    },
                    "required": ["image_data", "width", "height"]
                }
            )

            # The low-level Server registers handlers through decorators
            @self.server.list_tools()
            async def list_tools() -> list[Tool]:
                return [resize_tool]

            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict):
                if name != "resize_image":
                    raise ValueError(f"Unknown tool: {name}")
                return await self.handle_resize_image(arguments)

        async def handle_resize_image(self, arguments: dict) -> list[TextContent | ImageContent]:
            """Handle image resize operations."""
            try:
                # Decode image data
                image_bytes = base64.b64decode(arguments["image_data"])
                image = Image.open(io.BytesIO(image_bytes))
                # Perform resize operation
                resized_image = image.resize(
                    (arguments["width"], arguments["height"]),
                    Image.Resampling.LANCZOS
                )
                # Convert to specified format
                output_format = arguments.get("format", "JPEG")
                if output_format == "JPEG" and resized_image.mode not in ("RGB", "L"):
                    # JPEG has no alpha channel or palette support
                    resized_image = resized_image.convert("RGB")
                output_buffer = io.BytesIO()
                resized_image.save(output_buffer, format=output_format)
                # Encode result and return it alongside the status message
                result_b64 = base64.b64encode(output_buffer.getvalue()).decode('utf-8')
                return [
                    TextContent(
                        type="text",
                        text=f"Image resized successfully to {arguments['width']}x{arguments['height']}"
                    ),
                    ImageContent(
                        type="image",
                        data=result_b64,
                        mimeType=f"image/{output_format.lower()}"
                    )
                ]
            except Exception as e:
                return [TextContent(
                    type="text",
                    text=f"Image resize operation failed: {str(e)}"
                )]

Metadata extraction capabilities enhance image processing workflows by providing essential image characteristics and embedded information. The extraction of EXIF data, dimensional properties, and color space information enables intelligent processing decisions and content optimization.
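Dimensional properties can often be read from a file header without decoding any pixel data. The following standard-library sketch reads the IHDR chunk of a PNG file; it does not cover EXIF data, which would need a dedicated parser or an imaging library, and the function name `read_png_header` is illustrative.

```python
import struct

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
COLOR_TYPES = {0: "grayscale", 2: "rgb", 3: "palette", 4: "grayscale+alpha", 6: "rgba"}


def read_png_header(data: bytes) -> dict:
    """Extract width, height, bit depth, and colour type from PNG bytes."""
    # Signature (8) + chunk length (4) + chunk type (4) + IHDR data (13) + CRC (4)
    if len(data) < 33 or not data.startswith(PNG_SIGNATURE):
        raise ValueError("Not a PNG file")
    length, chunk_type = struct.unpack(">I4s", data[8:16])
    if chunk_type != b"IHDR" or length != 13:
        raise ValueError("Malformed PNG: IHDR must be the first chunk")
    width, height, bit_depth, color_type = struct.unpack(">IIBB", data[16:26])
    return {
        "width": width,
        "height": height,
        "bit_depth": bit_depth,
        "color_type": COLOR_TYPES.get(color_type, "unknown"),
    }
```

Because only the first 33 bytes are inspected, a server can reject oversized images before committing memory to a full decode.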

C. Memory-Efficient Image Handling

Memory efficiency represents a critical consideration in production image processing systems, particularly when handling large images or high-volume processing scenarios. Python’s asynchronous generators, on which MCP server handlers can build, support streaming patterns that minimize memory footprint through incremental data processing.

Streaming image processing enables the handling of large images without loading complete files into memory. This approach proves essential when processing high-resolution images or when operating under memory constraints:

    import asyncio
    import gc
    from contextlib import asynccontextmanager
    from typing import AsyncGenerator

    import psutil
    from mcp.server import Server


    class StreamingImageProcessor:
        def __init__(self, max_memory_mb: int = 100):
            self.max_memory_bytes = max_memory_mb * 1024 * 1024
            self.server = Server("streaming-image-processor")

        @asynccontextmanager
        async def memory_bounded_processing(self):
            """Context manager for memory-bounded image processing."""
            initial_memory = self._get_memory_usage()
            try:
                yield
            finally:
                # Ensure memory cleanup
                current_memory = self._get_memory_usage()
                if current_memory - initial_memory > self.max_memory_bytes:
                    await self._force_garbage_collection()

        async def process_image_stream(
            self,
            image_stream: AsyncGenerator[bytes, None]
        ) -> AsyncGenerator[bytes, None]:
            """Process image data in streaming fashion."""
            async with self.memory_bounded_processing():
                chunk_buffer = bytearray()
                async for chunk in image_stream:
                    chunk_buffer.extend(chunk)
                    # Process when buffer reaches the batch size
                    if len(chunk_buffer) >= 8192:  # 8KB chunks
                        processed_chunk = await self._process_image_chunk(
                            bytes(chunk_buffer)
                        )
                        yield processed_chunk
                        chunk_buffer.clear()
                # Process remaining data
                if chunk_buffer:
                    final_chunk = await self._process_image_chunk(
                        bytes(chunk_buffer)
                    )
                    yield final_chunk

        async def _process_image_chunk(self, chunk: bytes) -> bytes:
            """Placeholder for the per-chunk step, which the listing omits."""
            return chunk

        def _get_memory_usage(self) -> int:
            """Get current resident memory usage in bytes."""
            process = psutil.Process()
            return process.memory_info().rss

        async def _force_garbage_collection(self):
            """Force garbage collection to free memory."""
            gc.collect()
            await asyncio.sleep(0)  # Yield control to event loop

D. Practical Use Cases

Image analysis and annotation systems benefit significantly from MCP’s structured communication protocol. Computer vision integration through libraries such as OpenCV enables sophisticated image analysis capabilities within MCP servers. The protocol’s tool registration system facilitates the exposure of complex image analysis operations as accessible tools.

Batch image processing workflows leverage MCP’s asynchronous capabilities to handle multiple images concurrently while maintaining system responsiveness. The implementation of processing queues and worker pools enables scalable batch operations that can adapt to varying workload demands.
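A processing queue with a fixed worker pool can be sketched as follows. The function `run_batch` and its `worker` parameter are hypothetical; in a real server the worker would wrap an image operation such as a resize.

```python
import asyncio


async def run_batch(items, worker, concurrency: int = 4) -> list:
    """Apply an async worker to every item with bounded concurrency."""
    queue: asyncio.Queue = asyncio.Queue()
    for index, item in enumerate(items):
        queue.put_nowait((index, item))
    results = [None] * queue.qsize()

    async def consume() -> None:
        # Each worker pulls items until the queue is drained.
        while True:
            try:
                index, item = queue.get_nowait()
            except asyncio.QueueEmpty:
                return
            results[index] = await worker(item)

    await asyncio.gather(*(consume() for _ in range(concurrency)))
    return results
```

Results are written back by index, so the output order matches the input order even though items finish at different times.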

Real-time image filtering and effects processing demonstrate MCP’s capability to handle interactive image manipulation scenarios. When transport latency is low, MCP’s request-response and notification messages enable responsive user interfaces for image editing applications, providing prompt feedback for image transformation operations.

Streaming Data Implementation

A. Streaming Architecture Patterns

The implementation of streaming data capabilities within the Model Context Protocol framework requires careful architectural consideration to maintain protocol compliance while enabling real-time data transmission. The MCP SDK provides notifications, resources, and asynchronous handlers that can be extended to support various streaming patterns, including server-sent events, bidirectional communication, and backpressure management.

Server-sent events through MCP leverage the protocol’s asynchronous message handling capabilities to deliver continuous data streams to clients. This pattern proves particularly effective for scenarios requiring unidirectional data flow, such as sensor telemetry, log streaming, or real-time monitoring data. The MCP SDK’s event-driven architecture facilitates the implementation of server-sent event patterns through its notification system:

    from mcp import types
    from mcp.server import Server


    class StreamingMCPServer:
        def __init__(self):
            self.server = Server("streaming-server")
            self.setup_streaming_tools()

        def setup_streaming_tools(self):
            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict) -> list[types.TextContent]:
                if name != "start_stream":
                    raise ValueError(f"Unknown tool: {name}")
                stream_id = arguments.get("stream_id")
                # Use the MCP logging notification channel to announce the stream
                await self.server.request_context.session.send_log_message(
                    level="info",
                    data=f"Starting stream {stream_id}"
                )
                return [types.TextContent(
                    type="text",
                    text=f"Stream {stream_id} initiated"
                )]

            @self.server.list_resources()
            async def list_stream_resources() -> list[types.Resource]:
                return [
                    types.Resource(
                        uri=f"stream://data/{i}",
                        name=f"Stream {i}",
                        mimeType="application/octet-stream"
                    ) for i in range(5)
                ]

WebSocket-style bidirectional streaming extends MCP’s capabilities to support interactive streaming scenarios where both client and server participate in continuous data exchange. This pattern enables sophisticated real-time applications such as collaborative editing, live data analysis, or interactive simulations.

Backpressure handling represents a critical component of robust streaming implementations. The MCP SDK’s asynchronous architecture provides mechanisms for flow control that prevent memory exhaustion and maintain system stability under varying load conditions. Effective backpressure management ensures that downstream consumers can process data at sustainable rates without overwhelming system resources.
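A bounded queue is the simplest form of backpressure in asyncio: when the buffer is full, the producer is suspended until the consumer catches up. The sketch below is independent of MCP, and all names in it are illustrative.

```python
import asyncio


async def producer(queue: asyncio.Queue, count: int, depth_log: list) -> None:
    for i in range(count):
        await queue.put(i)  # suspends while the queue is full
        depth_log.append(queue.qsize())
    await queue.put(None)  # sentinel: no more data


async def consumer(queue: asyncio.Queue, out: list) -> None:
    while (item := await queue.get()) is not None:
        await asyncio.sleep(0.001)  # simulate slow downstream processing
        out.append(item)


async def run(count: int = 20, max_buffered: int = 4) -> tuple[list, int]:
    queue: asyncio.Queue = asyncio.Queue(maxsize=max_buffered)
    depth_log: list = []
    out: list = []
    await asyncio.gather(producer(queue, count, depth_log), consumer(queue, out))
    return out, max(depth_log)
```

The recorded queue depth never exceeds `max_buffered`, which is what keeps memory use predictable when the consumer is slower than the source.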

B. Real-Time Data Streaming

Real-time audio processing demonstrates the practical application of streaming data patterns within MCP servers. Audio streams require low-latency processing and continuous data flow to maintain an acceptable user experience. The implementation leverages the MCP SDK’s streaming capabilities combined with audio processing libraries:

    import base64

    from mcp import types
    from mcp.server import Server


    class AudioStreamProcessor:
        def __init__(self):
            self.server = Server("audio-stream-processor")
            self.setup_audio_tools()

        def setup_audio_tools(self):
            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict) -> list[types.TextContent | types.EmbeddedResource]:
                if name != "process_audio":
                    raise ValueError(f"Unknown tool: {name}")
                audio_b64 = arguments.get("audio_data")
                audio_bytes = base64.b64decode(audio_b64)
                # Process audio and return both text analysis and processed audio
                analysis = f"Processed {len(audio_bytes)} bytes of audio data"
                return [
                    types.TextContent(type="text", text=analysis),
                    # Blob contents must be wrapped in an embedded resource
                    # to be valid tool result content
                    types.EmbeddedResource(
                        type="resource",
                        resource=types.BlobResourceContents(
                            uri="processed://audio/output",
                            blob=base64.b64encode(audio_bytes).decode(),
                            mimeType="audio/wav"
                        )
                    )
                ]

            @self.server.read_resource()
            async def read_audio_buffer(uri) -> str | bytes:
                # The SDK passes a URL object, so convert it before matching
                if str(uri).startswith("buffer://audio/"):
                    # Return buffered audio data
                    return b"buffered_audio_data"
                raise ValueError(f"Unknown audio resource: {uri}")

The real-time processing pipeline must accommodate varying data arrival rates while maintaining consistent processing performance. Adaptive buffering strategies ensure smooth operation across different network conditions and processing loads.

C. Buffering and Queue Management

Effective buffer management forms the foundation of reliable streaming data systems. Circular buffers provide efficient memory utilization for continuous data streams while maintaining predictable memory footprints. The implementation of circular buffer patterns within MCP servers ensures consistent performance characteristics regardless of stream duration.
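A circular buffer keeps a fixed amount of the most recent data and overwrites the oldest bytes once it is full. The `RingBuffer` class below is a minimal illustration written for clarity rather than speed; it is not taken from any MCP SDK.

```python
class RingBuffer:
    """Fixed-capacity byte buffer that overwrites the oldest data when full."""

    def __init__(self, capacity: int) -> None:
        if capacity <= 0:
            raise ValueError("capacity must be positive")
        self._buf = bytearray(capacity)
        self._capacity = capacity
        self._start = 0  # index of the oldest byte
        self._size = 0   # number of valid bytes currently stored

    def write(self, data: bytes) -> None:
        # Only the newest `capacity` bytes of a large write can survive.
        for byte in data[-self._capacity:]:
            end = (self._start + self._size) % self._capacity
            self._buf[end] = byte
            if self._size < self._capacity:
                self._size += 1
            else:
                # Buffer full: the oldest byte was just overwritten.
                self._start = (self._start + 1) % self._capacity

    def read_all(self) -> bytes:
        """Return the stored bytes from oldest to newest without consuming them."""
        return bytes(
            self._buf[(self._start + i) % self._capacity] for i in range(self._size)
        )
```

Memory use is fixed at construction time, so the footprint stays the same whether the stream runs for a minute or a week.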

Priority queues enable sophisticated data handling scenarios where different data types or sources require differentiated processing. This capability proves essential in multi-modal streaming applications where video, audio, and sensor data streams require coordinated processing with varying priority levels:

    import asyncio
    import itertools

    from mcp import types
    from mcp.server import Server


    class PriorityStreamManager:
        def __init__(self):
            self.server = Server("priority-stream-manager")
            # Lower numbers are dequeued first; the sequence counter keeps
            # first-in, first-out order within the same priority
            self.queue: asyncio.PriorityQueue = asyncio.PriorityQueue()
            self._sequence = itertools.count()
            self.setup_priority_tools()

        def setup_priority_tools(self):
            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict) -> list[types.TextContent]:
                if name != "enqueue_priority_data":
                    raise ValueError(f"Unknown tool: {name}")
                priority = int(arguments.get("priority", 0))
                data_uri = arguments.get("data_uri")
                # Queue a reference to the data rather than the data itself
                await self.queue.put((priority, next(self._sequence), data_uri))
                # Send notification about queued data
                await self.server.request_context.session.send_log_message(
                    level="info",
                    data=f"Queued priority {priority} data: {data_uri}"
                )
                return [types.TextContent(
                    type="text",
                    text=f"Data queued with priority {priority}"
                )]

            @self.server.list_resources()
            async def list_priority_resources() -> list[types.Resource]:
                return [
                    types.Resource(
                        uri=f"priority://{p}/stream",
                        name=f"Priority {p} Stream",
                        mimeType="application/octet-stream"
                    ) for p in [1, 2, 3]
                ]

Memory bounds enforcement prevents unbounded memory growth in long-running streaming applications. Overflow handling mechanisms ensure graceful degradation when processing capacity is exceeded, maintaining system stability while preserving critical data integrity.

D. Performance Optimization

Asynchronous processing patterns maximize throughput in streaming data applications by enabling concurrent processing of multiple data streams. The MCP SDK’s async/await support facilitates non-blocking I/O operations that scale efficiently with increasing stream counts.

Connection pooling strategies optimize resource utilization when handling multiple simultaneous streams. Efficient connection management reduces overhead and improves system responsiveness, particularly in scenarios involving numerous short-lived streaming connections.

Resource cleanup mechanisms ensure proper disposal of system resources when streams terminate. Garbage collection optimization prevents memory leaks and maintains consistent performance over extended operation periods:

    from mcp import types
    from mcp.server import Server


    class OptimizedStreamProcessor:
        def __init__(self):
            self.server = Server("optimized-stream-processor")
            self.setup_optimized_streaming()

        def setup_optimized_streaming(self):
            @self.server.call_tool()
            async def call_tool(name: str, arguments: dict) -> list[types.TextContent]:
                if name != "manage_stream_resources":
                    raise ValueError(f"Unknown tool: {name}")
                operation = arguments.get("operation")
                stream_id = arguments.get("stream_id")
                session = self.server.request_context.session
                if operation == "start":
                    # Create MCP resource for stream
                    resource_uri = f"stream://managed/{stream_id}"
                    await session.send_progress_notification(
                        progress_token=stream_id,
                        progress=0,
                        total=100
                    )
                    return [types.TextContent(
                        type="text",
                        text=f"Managed stream {stream_id} started at {resource_uri}"
                    )]
                elif operation == "cleanup":
                    # Cleanup stream resources
                    await session.send_log_message(
                        level="info",
                        data=f"Cleaning up stream {stream_id}"
                    )
                    return [types.TextContent(
                        type="text",
                        text=f"Stream {stream_id} cleaned up"
                    )]
                raise ValueError(f"Unknown operation: {operation}")

            @self.server.read_resource()
            async def read_stream_resource(uri) -> str | bytes:
                # The SDK passes a URL object, so convert it before matching
                uri_text = str(uri)
                if uri_text.startswith("stream://managed/"):
                    stream_id = uri_text.split("/")[-1]
                    return f"Stream data for {stream_id}".encode()
                raise ValueError(f"Unknown stream resource: {uri}")

E. Advanced Streaming Scenarios

Multi-client broadcasting capabilities enable efficient one-to-many data distribution patterns. The MCP SDK’s architecture supports fan-out streaming scenarios where data sources serve multiple consuming clients simultaneously. This pattern proves essential for real-time dashboard applications, live data monitoring, and collaborative environments.
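The fan-out pattern can be sketched independently of any transport with one queue per subscriber. This is a minimal illustration under stated assumptions: `Broadcaster` is a hypothetical helper, and dropping messages for slow consumers is only one of several possible backpressure policies.

```python
import asyncio


class Broadcaster:
    """Fan one producer's messages out to every subscribed client queue."""

    def __init__(self, per_client_buffer: int = 100):
        self._buffer = per_client_buffer
        self._subscribers = set()

    def subscribe(self) -> asyncio.Queue:
        """Register a client and return the queue it should read from."""
        queue = asyncio.Queue(maxsize=self._buffer)
        self._subscribers.add(queue)
        return queue

    def unsubscribe(self, queue: asyncio.Queue) -> None:
        self._subscribers.discard(queue)

    def publish(self, message) -> int:
        """Deliver to all subscribers; return how many received the message."""
        delivered = 0
        for queue in list(self._subscribers):
            try:
                queue.put_nowait(message)
                delivered += 1
            except asyncio.QueueFull:
                # Slow consumer: skip this message rather than stall the others.
                pass
        return delivered
```

Bounding each client's queue keeps one stalled dashboard from growing the server's memory without limit.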

Stream aggregation from multiple sources presents complex coordination challenges that require sophisticated buffering and synchronization strategies. Time-based alignment ensures coherent data presentation when combining streams with different characteristics and arrival patterns.
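Time-based alignment can be approximated with the standard library when each source is already ordered by timestamp. The sketch below assumes events are `(timestamp, source, payload)` tuples; the function names and the fixed-width window policy are illustrative choices, not something prescribed by MCP.

```python
import heapq
from itertools import groupby
from typing import Iterable, Iterator, Tuple

Event = Tuple[float, str, object]  # (timestamp, source name, payload)


def merge_by_timestamp(*streams: Iterable[Event]) -> Iterator[Event]:
    """Lazily merge streams that are each already sorted by timestamp."""
    return heapq.merge(*streams, key=lambda event: event[0])


def align_to_windows(events: Iterable[Event], width: float):
    """Group a time-ordered event stream into fixed-width windows."""
    for window, group in groupby(events, key=lambda e: int(e[0] // width)):
        yield window * width, list(group)   # (window start time, events in window)
```

`heapq.merge` holds only one pending event per source, so the aggregation itself adds very little buffering on top of what each source already requires.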

Real-time analytics can run alongside a continuous data flow: statistical analysis, trend detection, and anomaly identification are performed on streaming data without interrupting the primary flow. Sliding-window techniques and incremental computation keep latency low while still providing meaningful analytical insights.
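A sliding window with incrementally maintained sums is one way to realize this. The following sketch is illustrative only: `SlidingWindowStats` is a hypothetical class, the three-sigma threshold is an arbitrary default, and the running sum-of-squares formula trades some numerical robustness for simplicity.

```python
from collections import deque
import math


class SlidingWindowStats:
    """Rolling mean and standard deviation over the last `size` samples."""

    def __init__(self, size: int):
        self.size = size
        self._window = deque()
        self._sum = 0.0
        self._sum_sq = 0.0

    def add(self, value: float) -> None:
        """Add a sample in O(1), evicting the oldest once the window is full."""
        self._window.append(value)
        self._sum += value
        self._sum_sq += value * value
        if len(self._window) > self.size:
            old = self._window.popleft()
            self._sum -= old
            self._sum_sq -= old * old

    @property
    def mean(self) -> float:
        return self._sum / len(self._window)

    @property
    def stdev(self) -> float:
        n = len(self._window)
        # Population variance; clamp tiny negatives caused by rounding
        variance = max(self._sum_sq / n - self.mean ** 2, 0.0)
        return math.sqrt(variance)

    def is_anomaly(self, value: float, threshold: float = 3.0) -> bool:
        """Flag values more than `threshold` standard deviations from the mean."""
        if len(self._window) < 2 or self.stdev == 0:
            return False
        return abs(value - self.mean) > threshold * self.stdev
```

Each update touches only the newest and oldest samples, so the cost per event does not grow with the window size.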

Edge case handling for stream interruption, reconnection, and data recovery ensures robust operation in production environments. Fault tolerance mechanisms maintain data integrity and service availability despite network interruptions or processing failures.
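Reconnection after a stream interruption is commonly handled with exponential backoff and jitter. The sketch below assumes a caller-supplied coroutine function `connect` that raises `ConnectionError` on failure; the function name and default delays are hypothetical.

```python
import asyncio
import random


async def connect_with_backoff(connect, max_attempts: int = 5,
                               base_delay: float = 0.5, max_delay: float = 30.0):
    """Call `connect()` until it succeeds, backing off exponentially between tries."""
    for attempt in range(max_attempts):
        try:
            return await connect()
        except ConnectionError:
            if attempt == max_attempts - 1:
                raise                     # out of retries: surface the failure
            delay = min(base_delay * 2 ** attempt, max_delay)
            # Full jitter spreads out reconnect storms from many clients
            await asyncio.sleep(random.uniform(0, delay))
```

Data recovery after a successful reconnect (for example, resuming from the last acknowledged offset) depends on the source and is left out of this sketch.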

The streaming data implementation within MCP servers requires a careful balance between performance, reliability, and resource efficiency. Proper architecture design enables scalable streaming solutions that can adapt to varying workload demands while maintaining consistent service quality.

Memory Management and Performance

A. Memory-Efficient Patterns

Memory efficiency in multi-modal MCP servers requires strategic implementation of data handling patterns that minimize resource consumption while maintaining protocol compliance.

Lazy loading strategies within MCP servers enable deferred resource initialization until actual data access occurs. This approach proves particularly effective when handling large files or datasets where only portions of the data may be required for processing. The MCP resource system facilitates lazy loading through URI-based resource references that can be resolved on-demand:

from mcp import Server, types
from mcp.server.models import InitializationOptions


class LazyLoadingMCPServer:
    def __init__(self):
        self.server = Server("lazy-loading-server")
        # Plain dict: bytes objects cannot be weakly referenced, so storing them
        # in a weakref.WeakValueDictionary would raise TypeError. The cache is
        # unbounded here; a production server would add an eviction policy.
        self.resource_cache = {}
        self.setup_lazy_resources()

    def setup_lazy_resources(self):
        @self.server.list_resources()
        async def list_lazy_resources() -> list[types.Resource]:
            # Only lightweight descriptors are created; no data is loaded yet
            return [
                types.Resource(
                    uri=f"lazy://dataset/{i}",
                    name=f"Dataset {i}",
                    mimeType="application/octet-stream"
                ) for i in range(1000)  # Large dataset count
            ]

        @self.server.read_resource()
        async def read_lazy_resource(uri: str) -> str | bytes:
            # Check cache first
            if uri in self.resource_cache:
                return self.resource_cache[uri]

            # Load only when requested
            if uri.startswith("lazy://dataset/"):
                dataset_id = uri.split("/")[-1]
                data = await self._load_dataset_chunk(dataset_id)
                self.resource_cache[uri] = data
                return data

            raise ValueError(f"Unknown lazy resource: {uri}")

    async def _load_dataset_chunk(self, dataset_id: str) -> bytes:
        # Simulate loading large dataset chunk
        return f"Dataset chunk {dataset_id} data".encode()

Generator patterns enable efficient processing of large datasets by yielding data incrementally rather than loading complete datasets into memory. The MCP protocol’s streaming capabilities support generator-based data processing through resource-based access patterns that maintain low memory footprints.
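A generator that yields fixed-size chunks shows the idea in its simplest form. This is a standard-library sketch; `iter_chunks` and `sha256_of_stream` are hypothetical helper names, and the 64 KiB default chunk size is an arbitrary choice.

```python
import hashlib
from typing import BinaryIO, Iterator


def iter_chunks(stream: BinaryIO, chunk_size: int = 64 * 1024) -> Iterator[bytes]:
    """Yield successive chunks so only one chunk is resident at a time."""
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            return
        yield chunk


def sha256_of_stream(stream: BinaryIO, chunk_size: int = 64 * 1024) -> str:
    """Hash arbitrarily large input using a constant amount of memory."""
    digest = hashlib.sha256()
    for chunk in iter_chunks(stream, chunk_size):
        digest.update(chunk)
    return digest.hexdigest()
```

Any consumer that can work incrementally (hashing, scanning, re-encoding, uploading) can be layered on `iter_chunks` in the same way.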

Memory-mapping techniques for very large files provide operating system-level efficiency improvements that reduce application memory requirements. When integrated with MCP resource handling, memory-mapped files enable efficient access to large datasets without explicit memory management overhead.
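Python's `mmap` module exposes this operating-system facility directly. The sketch below reads a slice of a file without loading the rest; `read_slice` is a hypothetical helper, and it assumes a non-empty file, since an empty file cannot be mapped.

```python
import mmap


def read_slice(path: str, offset: int, length: int) -> bytes:
    """Return up to `length` bytes starting at `offset` without reading the whole file."""
    with open(path, "rb") as handle:
        # Length 0 maps the entire file; pages are loaded only when touched
        with mmap.mmap(handle.fileno(), 0, access=mmap.ACCESS_READ) as view:
            return view[offset:offset + length]
```

A resource handler could use such a helper to serve byte ranges of a large dataset while leaving page caching to the operating system.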

B. Resource Monitoring

Effective resource monitoring forms the foundation of performance optimization in production MCP servers. The implementation of monitoring capabilities requires integration with system-level metrics collection while maintaining MCP protocol compliance. Memory usage tracking, performance profiling, and resource utilization analysis provide essential insights for optimization efforts.

Memory monitoring integration within MCP servers enables real-time tracking of resource consumption patterns. The monitoring system can leverage MCP’s notification system to provide continuous updates on memory utilization and performance characteristics:

from mcp import Server, types
import psutil
import asyncio


class MonitoredMCPServer:
    def __init__(self):
        self.server = Server("monitored-server")
        self.monitoring_enabled = True
        self._monitor_task = None  # strong reference keeps the background task alive
        self.setup_monitoring_tools()

    def setup_monitoring_tools(self):
        @self.server.call_tool()
        async def get_memory_stats(arguments: dict) -> list[types.TextContent]:
            process = psutil.Process()
            memory_info = process.memory_info()
            stats = {
                "rss_mb": memory_info.rss / 1024 / 1024,
                "vms_mb": memory_info.vms / 1024 / 1024,
                "percent": process.memory_percent()
            }
            return [types.TextContent(
                type="text",
                text=f"Memory usage: RSS={stats['rss_mb']:.1f}MB, "
                     f"VMS={stats['vms_mb']:.1f}MB, "
                     f"Percent={stats['percent']:.1f}%"
            )]

        @self.server.call_tool()
        async def start_memory_monitoring(arguments: dict) -> list[types.TextContent]:
            interval = arguments.get("interval", 5)
            # Keep a reference: the event loop holds only weak references to tasks,
            # so an unreferenced task may be garbage-collected before it finishes
            self._monitor_task = asyncio.create_task(self._memory_monitoring_loop(interval))
            return [types.TextContent(
                type="text",
                text=f"Memory monitoring started with {interval}s interval"
            )]

    async def _memory_monitoring_loop(self, interval: int):
        process = psutil.Process()
        while self.monitoring_enabled:
            memory_mb = process.memory_info().rss / 1024 / 1024
            if memory_mb > 500:  # Alert threshold
                await self.server.request_context.session.send_notification(
                    types.LoggingNotification(
                        level="warning",
                        data=f"High memory usage detected: {memory_mb:.1f}MB"
                    )
                )
            await asyncio.sleep(interval)

Performance profiling capabilities enable the identification of bottlenecks and optimization opportunities within MCP server implementations. Profiling data collection can be integrated with MCP’s tool system to provide on-demand performance analysis and reporting.
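On-demand profiling of a synchronous code path can be built from the standard library's `cProfile` and `pstats` modules. The sketch below is a minimal illustration; `profile_call` is a hypothetical helper, and `cProfile` attributes time poorly across `await` points, so it suits CPU-bound helpers better than whole coroutines.

```python
import cProfile
import io
import pstats


def profile_call(func, *args, top: int = 5, **kwargs):
    """Run `func` under cProfile; return (result, text report of the hottest calls)."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func, *args, **kwargs)
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(top)   # most expensive call paths first
    return result, buffer.getvalue()
```

A tool handler could wrap an expensive processing step with such a helper and return the report text to the client when diagnostics are requested.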

C. Performance Benchmarking

Performance benchmarking in MCP servers requires comprehensive measurement of throughput, latency, and resource utilization across different operational scenarios. The benchmarking system must account for MCP protocol overhead while providing meaningful performance metrics for optimization guidance.

Metrics collection systems gather performance data across multiple dimensions including request processing time, memory allocation patterns, and network utilization. The integration of metrics collection with MCP’s notification system enables real-time performance monitoring and alerting capabilities.

Load testing strategies validate MCP server performance under realistic operational conditions. The testing framework must simulate various client interaction patterns while measuring system response characteristics and resource consumption patterns:

from mcp import Server, types
import asyncio
import time
import statistics
from collections import defaultdict


class BenchmarkingMCPServer:
    def __init__(self):
        self.server = Server("benchmarking-server")
        self.metrics = defaultdict(list)
        self.setup_benchmarking_tools()

    def setup_benchmarking_tools(self):
        @self.server.call_tool()
        async def run_benchmark(arguments: dict) -> list[types.TextContent]:
            test_type = arguments.get("test_type", "latency")
            iterations = arguments.get("iterations", 100)

            if test_type == "latency":
                results = await self._benchmark_latency(iterations)
            elif test_type == "throughput":
                results = await self._benchmark_throughput(iterations)
            else:
                raise ValueError(f"Unknown benchmark type: {test_type}")

            return [types.TextContent(
                type="text",
                text=f"Benchmark results: {results}"
            )]

        @self.server.call_tool()
        async def get_performance_report(arguments: dict) -> list[types.TextContent]:
            report = await self._generate_performance_report()
            return [types.TextContent(
                type="text",
                text=report
            )]

    async def _benchmark_latency(self, iterations: int) -> dict:
        latencies = []
        for _ in range(iterations):
            start_time = time.perf_counter()
            # Simulate processing operation
            await asyncio.sleep(0.001)  # 1ms simulated work
            end_time = time.perf_counter()
            latencies.append((end_time - start_time) * 1000)  # Convert to ms

        return {
            "mean_ms": statistics.mean(latencies),
            "median_ms": statistics.median(latencies),
            # quantiles(n=20) returns 19 cut points; index 18 is the 95th percentile
            "p95_ms": statistics.quantiles(latencies, n=20)[18],
            "min_ms": min(latencies),
            "max_ms": max(latencies)
        }

    async def _benchmark_throughput(self, iterations: int) -> dict:
        start_time = time.perf_counter()

        # Process multiple operations concurrently
        tasks = [self._simulate_processing() for _ in range(iterations)]
        await asyncio.gather(*tasks)

        end_time = time.perf_counter()
        total_time = end_time - start_time

        return {
            "operations_per_second": iterations / total_time,
            "total_time_seconds": total_time,
            "operations_completed": iterations
        }

    async def _simulate_processing(self):
        # Simulate typical MCP server processing
        await asyncio.sleep(0.005)  # 5ms simulated work

Bottleneck identification tools analyze performance data to highlight optimization opportunities. These tools integrate with MCP’s resource system to provide detailed analysis of system performance characteristics and resource utilization patterns.

D. Scaling Considerations

Horizontal scaling patterns enable MCP servers to distribute processing load across multiple server instances while maintaining protocol consistency. The implementation of load balancing strategies requires careful consideration of stateful operations and resource sharing patterns inherent in multi-modal data processing scenarios.

Load balancing strategies must account for the stateful nature of many multi-modal processing operations. Session affinity, consistent resource routing, and distributed caching keep requests for the same session or resource on the same instance, so that scaling out preserves data consistency and processing continuity.
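Consistent hashing is one well-known way to implement session affinity and consistent resource routing. The sketch below is illustrative and not tied to any MCP component: `ConsistentHashRing`, the instance names, and the choice of 100 virtual nodes per instance are assumptions.

```python
import bisect
import hashlib


class ConsistentHashRing:
    """Map keys to server instances so most keys stay put when instances change."""

    def __init__(self, nodes=(), replicas: int = 100):
        self.replicas = replicas          # virtual nodes per instance smooth the load
        self._ring = []                   # sorted list of (hash, node)
        for node in nodes:
            self.add_node(node)

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def add_node(self, node: str) -> None:
        for i in range(self.replicas):
            bisect.insort(self._ring, (self._hash(f"{node}#{i}"), node))

    def remove_node(self, node: str) -> None:
        self._ring = [entry for entry in self._ring if entry[1] != node]

    def get_node(self, key: str) -> str:
        """Return the instance responsible for `key`."""
        if not self._ring:
            raise LookupError("no nodes available")
        index = bisect.bisect(self._ring, (self._hash(key), ""))
        return self._ring[index % len(self._ring)][1]   # wrap around the ring
```

When an instance is removed, only the keys it owned are reassigned; sessions routed to the remaining instances keep their affinity.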

Resource allocation policies define how computational resources are distributed among concurrent processing operations. Dynamic resource allocation based on workload characteristics and priority levels optimizes system utilization while maintaining quality of service guarantees.

The scaling architecture must accommodate varying workload patterns typical of multi-modal applications, including burst processing demands, sustained high-throughput scenarios, and resource-intensive operations. Adaptive scaling policies respond to changing demand patterns while maintaining cost efficiency and performance targets.

Distributed MCP server architectures require coordination mechanisms that maintain protocol compliance across multiple server instances. Service discovery, configuration management, and inter-server communication patterns enable seamless scaling while preserving the simplicity and reliability characteristics of the MCP protocol.

Performance optimization in multi-modal MCP servers demands comprehensive understanding of memory management patterns, resource utilization characteristics, and scaling requirements. The integration of monitoring, benchmarking, and optimization techniques within the MCP framework enables the development of high-performance systems that can efficiently handle complex multi-modal data processing workloads while maintaining the protocol’s design principles of simplicity and reliability.

Security and Data Validation

A. File Validation

File validation represents a critical security component in multi-modal MCP servers, requiring comprehensive verification of uploaded content before processing operations commence. The MCP SDK provides mechanisms for implementing robust validation pipelines that ensure data integrity while preventing malicious content from compromising system security.

File type verification constitutes the primary defense mechanism against content-based attacks. Implementation of file signature validation, MIME type verification, and content analysis ensures that uploaded files conform to expected formats. The MCP protocol’s resource management system facilitates secure file validation through controlled resource access patterns:

from mcp import Server, types
import magic
import hashlib
from pathlib import Path


class SecureFileValidator:
    def __init__(self):
        self.server = Server("secure-file-validator")
        self.allowed_mime_types = {
            'image/jpeg', 'image/png', 'image/gif', 'image/webp',
            # 'audio/mpeg' is the registered MIME type for MP3 files
            'audio/wav', 'audio/mpeg', 'video/mp4', 'application/pdf'
        }
        self.max_file_size = 100 * 1024 * 1024  # 100MB limit
        self.setup_validation_tools()

    def setup_validation_tools(self):
        @self.server.call_tool()
        async def validate_file(arguments: dict) -> list[types.TextContent]:
            file_uri = arguments.get("file_uri")
            expected_type = arguments.get("expected_type")

            validation_result = await self._comprehensive_file_validation(
                file_uri, expected_type
            )

            if validation_result["valid"]:
                return [types.TextContent(
                    type="text",
                    text=f"File validation passed: {validation_result['details']}"
                )]
            else:
                await self.server.request_context.session.send_notification(
                    types.LoggingNotification(
                        level="error",
                        data=f"File validation failed: {validation_result['error']}"
                    )
                )
                return [types.TextContent(
                    type="text",
                    text=f"File validation failed: {validation_result['error']}"
                )]

        @self.server.read_resource()
        async def read_validated_file(uri: str) -> str | bytes:
            prefix = "validated://"
            if not uri.startswith(prefix):
                raise ValueError("Access denied: file not validated")

            # Strip only the leading scheme; str.replace would also remove any
            # later occurrence of the prefix inside the original URI
            original_uri = uri[len(prefix):]

            # Verify validation status before serving the file
            if await self._is_file_validated(original_uri):
                return await self._read_secure_file(original_uri)
            else:
                raise ValueError("Access denied: file validation required")

    async def _comprehensive_file_validation(self, file_uri: str, expected_type: str) -> dict:
        try:
            # File size validation
            file_size = await self._get_file_size(file_uri)
            if file_size > self.max_file_size:
                return {
                    "valid": False,
                    "error": f"File size {file_size} exceeds limit {self.max_file_size}"
                }

            # MIME type validation using magic bytes
            file_content = await self._read_file_header(file_uri, 2048)
            detected_mime = magic.from_buffer(file_content, mime=True)

            if detected_mime not in self.allowed_mime_types:
                return {
                    "valid": False,
                    "error": f"MIME type {detected_mime} not allowed"
                }

            if expected_type and detected_mime != expected_type:
                return {
                    "valid": False,
                    "error": f"MIME type mismatch: expected {expected_type}, got {detected_mime}"
                }

            # Content hash validation
            file_hash = await self._calculate_file_hash(file_uri)

            return {
                "valid": True,
                "details": f"Type: {detected_mime}, Size: {file_size}, Hash: {file_hash[:16]}"
            }

        except Exception as e:
            return {
                "valid": False,
                "error": f"Validation error: {str(e)}"
            }

Size limits and quotas prevent resource exhaustion attacks while maintaining system stability under varying load conditions. The implementation of progressive size checking during file upload processes enables early termination of oversized transfers, minimizing resource consumption and improving system responsiveness.
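Progressive size checking amounts to tracking a running total as chunks arrive and aborting as soon as the limit is crossed. The sketch below is a minimal illustration; `receive_with_limit` and `UploadTooLarge` are hypothetical names, and `chunks` stands in for whatever iterable the transport provides.

```python
class UploadTooLarge(Exception):
    """Raised when an upload exceeds the configured size limit."""


def receive_with_limit(chunks, max_bytes: int) -> bytes:
    """Accumulate chunks, aborting as soon as the running total exceeds the limit."""
    received = bytearray()
    for chunk in chunks:
        if len(received) + len(chunk) > max_bytes:
            # Stop before buffering the oversized chunk or reading any further
            raise UploadTooLarge(f"upload exceeds {max_bytes} bytes")
        received.extend(chunk)
    return bytes(received)
```

Because the check runs per chunk, an oversized transfer is rejected after roughly `max_bytes` of input rather than after the entire upload has been buffered.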

Malware scanning adds advanced threat detection by delegating to external security services. The MCP server architecture allows scanning engines to be integrated as modular components while maintaining protocol compliance and operational efficiency.

Content sanitization processes remove potentially harmful elements from uploaded files while preserving legitimate data integrity. Sanitization strategies vary by file type but consistently focus on eliminating executable content, script injection vectors, and metadata that could compromise system security.

B. Access Control

Access control mechanisms within MCP servers require authentication and authorization frameworks that integrate seamlessly with the protocol’s tool and resource management systems. The implementation of role-based access control enables fine-grained permission management while maintaining operational simplicity.

Authentication patterns for file operations leverage MCP’s session management capabilities to establish and maintain secure client identities. Token-based authentication, certificate validation, and session lifecycle management ensure that only authorized clients can access sensitive file processing capabilities:

from mcp import Server, types
import jwt
import time
from typing import Optional


class SecureAccessMCPServer:
    def __init__(self, secret_key: str):
        self.server = Server("secure-access-server")
        self.secret_key = secret_key
        self.active_sessions = {}
        self.setup_security_tools()

    def setup_security_tools(self):
        @self.server.call_tool()
        async def authenticate_client(arguments: dict) -> list[types.TextContent]:
            username = arguments.get("username")
            credentials = arguments.get("credentials")

            if await self._validate_credentials(username, credentials):
                session_token = await self._create_session_token(username)
                return [types.TextContent(
                    type="text",
                    text=f"Authentication successful. Session token: {session_token}"
                )]
            else:
                await self.server.request_context.session.send_notification(
                    types.LoggingNotification(
                        level="warning",
                        data=f"Authentication failed for user: {username}"
                    )
                )
                return [types.TextContent(
                    type="text",
                    text="Authentication failed"
                )]

        @self.server.call_tool()
        async def access_protected_resource(arguments: dict) -> list[types.TextContent]:
            session_token = arguments.get("session_token")
            resource_path = arguments.get("resource_path")
            operation = arguments.get("operation", "read")

            if not await self._verify_session_token(session_token):
                return [types.TextContent(
                    type="text",
                    text="Access denied: invalid session token"
                )]

            username = await self._get_username_from_token(session_token)

            if not await self._check_resource_permission(username, resource_path, operation):
                await self._log_access_attempt(username, resource_path, operation, False)
                return [types.TextContent(
                    type="text",
                    text=f"Access denied: insufficient permissions for {operation} on {resource_path}"
                )]

            await self._log_access_attempt(username, resource_path, operation, True)
            return [types.TextContent(
                type="text",
                text=f"Access granted for {operation} on {resource_path}"
            )]

    async def _create_session_token(self, username: str) -> str:
        now = int(time.time())  # single timestamp so iat and exp are consistent
        payload = {
            'username': username,
            'iat': now,
            'exp': now + 3600  # 1 hour expiration
        }
        token = jwt.encode(payload, self.secret_key, algorithm='HS256')
        self.active_sessions[token] = username
        return token

    async def _verify_session_token(self, token: str) -> bool:
        try:
            # jwt.decode verifies the signature and rejects expired tokens
            jwt.decode(token, self.secret_key, algorithms=['HS256'])
            return token in self.active_sessions
        except jwt.InvalidTokenError:
            return False

    async def _log_access_attempt(self, username: str, resource: str, operation: str, success: bool):
        status = "SUCCESS" if success else "DENIED"
        await self.server.request_context.session.send_notification(
            types.LoggingNotification(
                level="info",
                data=f"Access {status}: {username} attempted {operation} on {resource}"
            )
        )

Authorization patterns implement role-based access control that maps user identities to specific operational permissions. The authorization system integrates with MCP’s tool registration mechanism to enforce access restrictions at the protocol level, ensuring that unauthorized clients cannot invoke restricted operations.
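At its core, role-based access control is a lookup from roles to permitted operations with a default of denial. The sketch below shows that mapping in isolation; the role names, the operations, and `is_allowed` are hypothetical and would be replaced by a deployment's own policy.

```python
# Hypothetical policy: each role maps to the set of operations it permits
ROLE_PERMISSIONS = {
    "viewer": {"read"},
    "editor": {"read", "write"},
    "admin": {"read", "write", "delete"},
}


def is_allowed(user_roles, operation: str, role_permissions=ROLE_PERMISSIONS) -> bool:
    """Grant the operation if any of the user's roles includes it (deny by default)."""
    return any(operation in role_permissions.get(role, set()) for role in user_roles)
```

A tool handler would call such a check before performing the requested operation, so that unknown roles and unlisted operations are rejected without special-casing.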

Audit logging captures comprehensive access patterns and security events for compliance and forensic analysis. The logging system leverages MCP’s notification framework to provide real-time security event reporting while maintaining detailed historical records for security analysis.

C. Data Privacy

Data privacy considerations in multi-modal MCP servers include encryption requirements, handling of personally identifiable information, and regulatory compliance frameworks. Privacy-preserving mechanisms keep sensitive data protected throughout the processing pipeline while maintaining operational efficiency.

Encryption strategies protect data both at rest and in transit, implementing industry-standard cryptographic protocols that safeguard sensitive information. The MCP protocol’s binary data handling capabilities support encrypted data transmission without compromising protocol efficiency or compatibility.

Personally Identifiable Information (PII) detection systems automatically identify and classify sensitive data elements within uploaded content. Automated PII detection enables appropriate handling procedures and compliance with data protection regulations:

from mcp import Server, types
import re
from cryptography.fernet import Fernet


class PrivacyProtectedMCPServer:
    def __init__(self):
        self.server = Server("privacy-protected-server")
        # Generated per instance: data encrypted with this key cannot be
        # decrypted after a restart unless the key is persisted securely
        self.encryption_key = Fernet.generate_key()
        self.fernet = Fernet(self.encryption_key)
        self.pii_patterns = {
            'ssn': re.compile(r'\b\d{3}-\d{2}-\d{4}\b'),
            'email': re.compile(r'\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b'),
            'phone': re.compile(r'\b\d{3}-\d{3}-\d{4}\b'),
            'credit_card': re.compile(r'\b\d{4}[-\s]?\d{4}[-\s]?\d{4}[-\s]?\d{4}\b')
        }
        self.setup_privacy_tools()

    def setup_privacy_tools(self):
        @self.server.call_tool()
        async def scan_for_pii(arguments: dict) -> list[types.TextContent]:
            text_content = arguments.get("text_content", "")
            pii_findings = await self._detect_pii(text_content)

            if pii_findings:
                # Log PII detection event
                await self.server.request_context.session.send_notification(
                    types.LoggingNotification(
                        level="warning",
                        data=f"PII detected: {list(pii_findings.keys())}"
                    )
                )

                sanitized_content = await self._sanitize_pii(text_content, pii_findings)

                return [
                    types.TextContent(
                        type="text",
                        text=f"PII detected and sanitized. Found: {list(pii_findings.keys())}"
                    ),
                    # Return the redacted text so the caller can actually use it
                    types.TextContent(type="text", text=sanitized_content)
                ]
            else:
                return [types.TextContent(
                    type="text",
                    text="No PII detected in content"
                )]

        @self.server.call_tool()
        async def encrypt_sensitive_data(arguments: dict) -> list[types.TextContent]:
            data_content = arguments.get("data_content")

            # Encrypt sensitive data; Fernet tokens are URL-safe base64,
            # so they decode cleanly as ASCII
            encrypted_data = self.fernet.encrypt(data_content.encode())
            encrypted_token = encrypted_data.decode('ascii')

            return [types.TextContent(
                type="text",
                text=f"Data encrypted successfully. Length: {len(encrypted_data)} bytes. "
                     f"Token: {encrypted_token}"
            )]

    async def _detect_pii(self, text: str) -> dict:
        # Map each PII type to the number of matches found
        findings = {}
        for pii_type, pattern in self.pii_patterns.items():
            matches = pattern.findall(text)
            if matches:
                findings[pii_type] = len(matches)
        return findings

    async def _sanitize_pii(self, text: str, findings: dict) -> str:
        sanitized_text = text
        for pii_type, pattern in self.pii_patterns.items():
            if pii_type in findings:
                sanitized_text = pattern.sub(f'[REDACTED_{pii_type.upper()}]', sanitized_text)
        return sanitized_text

General Data Protection Regulation (GDPR) compliance requirements necessitate comprehensive data handling procedures that respect individual privacy rights while enabling legitimate data processing operations. The implementation of data subject rights, consent management, and data portability features ensures regulatory compliance while maintaining system functionality.

Privacy-preserving techniques such as differential privacy, homomorphic encryption, and secure multi-party computation can be integrated with MCP servers to enable advanced analytics while protecting individual privacy. These techniques enable valuable insights while maintaining strong privacy guarantees for sensitive data processing scenarios.
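Of the techniques named above, differential privacy is the simplest to illustrate: the Laplace mechanism adds calibrated noise to a numeric query result. The sketch below is for illustration only; `private_count` is a hypothetical helper, it assumes a counting query with sensitivity 1, and naive floating-point sampling like this is not hardened against known attacks on differential privacy implementations.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng=None) -> float:
    """Release a count with epsilon-differential privacy (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    # Noise scale = sensitivity / epsilon; smaller epsilon means more noise
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Individual releases are noisy, but the noise has zero mean, so aggregate statistics remain useful while any single record's influence is masked.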

The security and data validation framework within multi-modal MCP servers requires comprehensive integration of validation, access control, and privacy protection mechanisms. The implementation of these security measures within the MCP protocol framework ensures that multi-modal data processing operations maintain high security standards while preserving the protocol’s operational simplicity and efficiency characteristics.

Integration with Cloud Services

A. AWS Integration

Amazon Web Services integration with multi-modal MCP servers enables scalable cloud storage, processing capabilities, and managed service utilization while maintaining protocol compliance. MCP servers can wrap AWS services behind tools and resources, particularly Amazon S3 for object storage, AWS Lambda for serverless processing, and Amazon Rekognition for image analysis.

S3 integration patterns leverage the MCP resource system to abstract cloud storage operations behind standard MCP interfaces. This approach enables transparent cloud storage access while maintaining protocol consistency and simplifying client interactions. The implementation utilizes AWS SDK integration within MCP server architecture to provide reliable cloud storage capabilities:

from mcp import Server, types
import boto3
import base64
from botocore.exceptions import ClientError


class AWSS3MCPServer:
    def __init__(self, aws_access_key: str, aws_secret_key: str, region: str):
        self.server = Server("aws-s3-server")
        self.s3_client = boto3.client(
            's3',
            aws_access_key_id=aws_access_key,
            aws_secret_access_key=aws_secret_key,
            region_name=region
        )
        self.default_bucket = "mcp-multimodal-storage"
        self.setup_s3_tools()

    def setup_s3_tools(self):
        @self.server.call_tool()
        async def upload_to_s3(arguments: dict) -> list[types.TextContent]:
            file_content = arguments.get("file_content")  # Base64 encoded
            object_key = arguments.get("object_key")
            bucket_name = arguments.get("bucket", self.default_bucket)
            content_type = arguments.get("content_type", "application/octet-stream")

            try:
                # Decode file content
                file_data = base64.b64decode(file_content)

                # Upload to S3
                self.s3_client.put_object(
                    Bucket=bucket_name,
                    Key=object_key,
                    Body=file_data,
                    ContentType=content_type,
                    Metadata={
                        'uploaded_via': 'mcp_server',
                        'content_length': str(len(file_data))
                    }
                )

                # Generate S3 URI for MCP resource system
                s3_uri = f"s3://{bucket_name}/{object_key}"

                await self.server.request_context.session.send_notification(
                    types.LoggingNotification(
                        level="info",
                        data=f"File uploaded to S3: {s3_uri}"
                    )
                )

                return [types.TextContent(
                    type="text",
                    text=f"Upload successful: {s3_uri}"
                )]

            except ClientError as e:
                error_msg = f"S3 upload failed: {str(e)}"
                await self.server.request_context.session.send_notification(
                    types.LoggingNotification(
                        level="error",
                        data=error_msg
                    )
                )
                return [types.TextContent(
                    type="text",
                    text=error_msg
                )]

        @self.server.list_resources()
        async def list_s3_resources() -> list[types.Resource]:
            try:
                response = self.s3_client.list_objects_v2(
                    Bucket=self.default_bucket,
                    MaxKeys=100
                )

                resources = []
                for obj in response.get('Contents', []):
                    resources.append(types.Resource(
                        uri=f"s3://{self.default_bucket}/{obj['Key']}",
                        name=obj['Key'],
                        mimeType=self._infer_mime_type(obj['Key'])
                    ))
                return resources

            except ClientError:
                return []

        @self.server.read_resource()
        async def read_s3_resource(uri: str) -> str | bytes:
            if not uri.startswith("s3://"):
                raise ValueError("Invalid S3 URI format")

            # Parse S3 URI into bucket and key, rejecting URIs that lack either part
            bucket_name, _, object_key = uri[5:].partition('/')
            if not bucket_name or not object_key:
                raise ValueError("S3 URI must include a bucket and an object key")

            try:
                response = self.s3_client.get_object(
                    Bucket=bucket_name,
                    Key=object_key
                )
                return response['Body'].read()

            except ClientError as e:
                raise ValueError(f"Failed to read S3 object: {str(e)}")

    def _infer_mime_type(self, filename: str) -> str:
        extension = filename.lower().split('.')[-1]
        mime_types = {
            'jpg': 'image/jpeg', 'jpeg': 'image/jpeg', 'png': 'image/png',
            'mp3': 'audio/mpeg', 'wav': 'audio/wav', 'mp4': 'video/mp4',
            'pdf': 'application/pdf', 'txt': 'text/plain'
        }
        return mime_types.get(extension, 'application/octet-stream')

AWS Lambda integration enables serverless processing of multi-modal data within MCP server workflows. Lambda functions can be invoked through MCP tools to perform intensive processing operations while maintaining efficient resource utilization and cost optimization.

Amazon Rekognition integration provides computer vision capabilities that enhance image and video processing workflows. The integration leverages AWS managed AI services while exposing functionality through standard MCP tool interfaces.

B. Azure and GCP Patterns

Microsoft Azure integration patterns focus on Azure Blob Storage for scalable object storage and Azure Cognitive Services for AI-powered content analysis. The MCP server architecture accommodates Azure’s service patterns through analogous resource management and tool registration approaches.

Azure Blob Storage integration follows similar patterns to S3 integration, utilizing the Azure Storage SDK within MCP server implementations. Container-based storage organization maps effectively to MCP resource hierarchies, enabling intuitive resource discovery and access patterns.

Google Cloud Platform integration centers on Google Cloud Storage and the Cloud AI services. GCP’s unified API approach reduces integration complexity while providing comprehensive multi-modal processing capabilities through managed services:

from mcp import Server, types
from google.cloud import storage
from google.cloud import vision
import base64


class GCPIntegratedMCPServer:
    def __init__(self, project_id: str, credentials_path: str):
        self.server = Server("gcp-integrated-server")
        self.project_id = project_id
        self.storage_client = storage.Client.from_service_account_json(credentials_path)
        self.vision_client = vision.ImageAnnotatorClient.from_service_account_json(credentials_path)
        self.setup_gcp_tools()

    def setup_gcp_tools(self):
        @self.server.call_tool()
        async def analyze_image_with_vision(arguments: dict) -> list[types.TextContent]:
            image_b64 = arguments.get("image_data")
            analysis_type = arguments.get("analysis_type", "labels")

            try:
                # Decode image
                image_data = base64.b64decode(image_b64)
                image = vision.Image(content=image_data)

                # Perform analysis based on type
                if analysis_type == "labels":
                    response = self.vision_client.label_detection(image=image)
                    labels = response.label_annotations
                    results = [f"{label.description}: {label.score:.2f}" for label in labels[:5]]

                elif analysis_type == "text":
                    response = self.vision_client.text_detection(image=image)
                    texts = response.text_annotations
                    results = [text.description for text in texts[:3]]

                elif analysis_type == "faces":
                    response = self.vision_client.face_detection(image=image)
                    faces = response.face_annotations
                    results = [f"Face {i+1}: confidence {face.detection_confidence:.2f}"
                               for i, face in enumerate(faces)]

                else:
                    results = ["Unknown analysis type"]

                return [types.TextContent(
                    type="text",
                    text=f"Vision API analysis ({analysis_type}): {', '.join(results)}"
                )]

            except Exception as e:
                return [types.TextContent(
                    type="text",
                    text=f"Vision API analysis failed: {str(e)}"
                )]

        @self.server.call_tool()
        async def upload_to_gcs(arguments: dict) -> list[types.TextContent]:
            bucket_name = arguments.get("bucket_name")
            blob_name = arguments.get("blob_name")
            file_content = arguments.get("file_content")  # Base64 encoded

            try:
                bucket = self.storage_client.bucket(bucket_name)
                blob = bucket.blob(blob_name)

                # Decode and upload content
                file_data = base64.b64decode(file_content)
                blob.upload_from_string(file_data)

                gcs_uri = f"gs://{bucket_name}/{blob_name}"

                return [types.TextContent(
                    type="text",
                    text=f"Upload to GCS successful: {gcs_uri}"
                )]

            except Exception as e:
                return [types.TextContent(
                    type="text",
                    text=f"GCS upload failed: {str(e)}"
                )]

Cloud Functions integration enables event-driven processing patterns that respond to multi-modal data events automatically. Trigger-based architectures facilitate real-time processing pipelines that scale dynamically based on workload demands.
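A trigger-based pipeline of this kind usually comes down to routing each storage event to a handler chosen by content type. The sketch below assumes an event dictionary carrying `bucket`, `name`, and `contentType` keys, as Cloud Storage object metadata does. The `on_content` decorator, the handler names, and the returned strings are hypothetical placeholders for real processing calls.

```python
from typing import Callable

Handler = Callable[[str], str]

# Registry mapping a content-type prefix to the handler responsible for it.
_HANDLERS: dict[str, Handler] = {}


def on_content(prefix: str) -> Callable[[Handler], Handler]:
    """Register a handler for every content type starting with `prefix`."""
    def register(fn: Handler) -> Handler:
        _HANDLERS[prefix] = fn
        return fn
    return register


@on_content("image/")
def handle_image(gcs_uri: str) -> str:
    # Placeholder for a Vision API call on the uploaded object.
    return f"vision-analysis:{gcs_uri}"


@on_content("application/pdf")
def handle_pdf(gcs_uri: str) -> str:
    # Placeholder for a text-extraction job.
    return f"text-extraction:{gcs_uri}"


def route_storage_event(event: dict) -> str:
    """Dispatch one storage event to the most specific matching handler."""
    uri = f"gs://{event['bucket']}/{event['name']}"
    content_type = event.get("contentType", "application/octet-stream")
    # Try longer prefixes first so the most specific registration wins.
    for prefix in sorted(_HANDLERS, key=len, reverse=True):
        if content_type.startswith(prefix):
            return _HANDLERS[prefix](uri)
    return f"skipped:{uri}"
```

Keeping the routing table separate from the function entry point means the same handlers can be exercised locally or called from an MCP tool, while the deployed function only needs to unpack the trigger payload and call `route_storage_event`.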

Serverless deployment patterns optimize cost and scalability characteristics by eliminating infrastructure management overhead while maintaining high availability and performance standards.

C. Hybrid Cloud Strategies

Multi-cloud file distribution strategies enable geographic data distribution, redundancy implementation, and vendor lock-in avoidance while maintaining unified access through MCP interfaces. The implementation of multi-cloud architectures requires careful coordination of storage operations, consistency management, and failover procedures.

Failover mechanisms ensure continuous service availability despite individual cloud provider outages or regional service disruptions. Automated failover procedures maintain service continuity while minimizing data loss and performance degradation during transition periods.
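One simple way to realize such a failover procedure is to try providers in priority order and move on whenever one fails or times out. The following is a minimal sketch under that assumption. The `upload_with_failover` function and the `Uploader` type alias are illustrative names, and the uploader coroutines stand in for real SDK calls.

```python
import asyncio
from typing import Awaitable, Callable

# An uploader takes the payload and returns a provider-specific result string.
Uploader = Callable[[bytes], Awaitable[str]]


async def upload_with_failover(
    data: bytes,
    uploaders: list[tuple[str, Uploader]],
    timeout_s: float = 5.0,
) -> tuple[str, str, list[str]]:
    """Try each (provider_name, uploader) in order until one succeeds.

    Returns (provider_name, result, errors_from_earlier_attempts).
    Raises RuntimeError if every provider fails.
    """
    errors: list[str] = []
    for name, upload in uploaders:
        try:
            # Bound each attempt so a hung provider cannot stall the failover.
            result = await asyncio.wait_for(upload(data), timeout=timeout_s)
            return name, result, errors
        except Exception as exc:  # includes timeouts raised by wait_for
            errors.append(f"{name}: {exc!r}")
    raise RuntimeError("all providers failed: " + "; ".join(errors))
```

A usage example with one failing and one healthy provider:

```python
async def aws_down(data: bytes) -> str:
    raise ConnectionError("simulated regional outage")

async def azure_ok(data: bytes) -> str:
    return f"stored {len(data)} bytes"

provider, result, errors = asyncio.run(
    upload_with_failover(b"abc", [("aws", aws_down), ("azure", azure_ok)])
)
```

The collected `errors` list keeps a record of which providers were skipped, which is useful for updating availability flags after the request completes.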

Cost optimization strategies balance performance requirements with operational expenses across multiple cloud providers. Dynamic provider selection based on workload characteristics, geographic proximity, and pricing models optimizes total cost of ownership while maintaining service quality standards:

import asyncio
from typing import List

from mcp import types
from mcp.server import Server  # Server is exported by mcp.server in the Python SDK


class HybridCloudMCPServer:
    def __init__(self):
        self.server = Server("hybrid-cloud-server")
        self.cloud_providers = {
            'aws': {'priority': 1, 'available': True, 'cost_factor': 1.0},
            'azure': {'priority': 2, 'available': True, 'cost_factor': 0.9},
            'gcp': {'priority': 3, 'available': True, 'cost_factor': 1.1}
        }
        self.setup_hybrid_tools()

    def setup_hybrid_tools(self):
        @self.server.call_tool()
        async def distribute_file_multicloud(arguments: dict) -> list[types.TextContent]:
            file_content = arguments.get("file_content")
            replication_factor = arguments.get("replication_factor", 2)

            # Select optimal cloud providers
            selected_providers = await self._select_optimal_providers(replication_factor)

            # Upload to each provider independently so one failure
            # does not abort the remaining replicas
            upload_results = []
            for provider in selected_providers:
                try:
                    result = await self._upload_to_provider(provider, file_content)
                    upload_results.append(f"{provider}: {result}")
                except Exception as e:
                    upload_results.append(f"{provider}: Failed - {str(e)}")

            return [types.TextContent(
                type="text",
                text=f"Multi-cloud distribution: {'; '.join(upload_results)}"
            )]

        @self.server.call_tool()
        async def check_provider_status(arguments: dict) -> list[types.TextContent]:
            status_report = []
            for provider, config in self.cloud_providers.items():
                availability = "Available" if config['available'] else "Unavailable"
                status_report.append(
                    f"{provider.upper()}: {availability} "
                    f"(Priority: {config['priority']}, Cost: {config['cost_factor']}x)"
                )

            return [types.TextContent(
                type="text",
                text="Provider Status: " + "; ".join(status_report)
            )]

    async def _select_optimal_providers(self, count: int) -> List[str]:
        # Keep only providers currently marked as available
        available_providers = [
            (provider, config) for provider, config in self.cloud_providers.items()
            if config['available']
        ]

        # Sort by priority, then by cost factor
        available_providers.sort(key=lambda x: (x[1]['priority'], x[1]['cost_factor']))
        return [provider for provider, _ in available_providers[:count]]

    async def _upload_to_provider(self, provider: str, file_content: str) -> str:
        # Simulate cloud provider upload
        await asyncio.sleep(0.1)  # Simulate network delay
        return f"Upload successful to {provider}"

Geographic distribution considerations optimize data locality and compliance with regional data protection regulations. Strategic placement of data replicas based on user geography, regulatory requirements, and performance characteristics enhances user experience while maintaining compliance standards.
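Replica placement under these constraints can be framed as a filter followed by a ranking: discard regions outside the permitted jurisdictions, then prefer low latency while spreading replicas across providers. The sketch below assumes hypothetical `Region` records with a pre-measured `latency_ms` value. The jurisdiction labels and the `place_replicas` function are illustrative and do not come from any provider API.

```python
from dataclasses import dataclass


@dataclass
class Region:
    provider: str       # e.g. "aws", "azure", "gcp"
    name: str           # provider-specific region identifier
    jurisdiction: str   # regulatory zone the region belongs to (assumed label)
    latency_ms: float   # measured latency from the user population (assumed input)


def place_replicas(
    regions: list[Region],
    allowed_jurisdictions: set[str],
    replicas: int,
) -> list[Region]:
    """Pick `replicas` compliant regions, favoring low latency and provider diversity."""
    eligible = sorted(
        (r for r in regions if r.jurisdiction in allowed_jurisdictions),
        key=lambda r: r.latency_ms,
    )
    if len(eligible) < replicas:
        raise ValueError("not enough compliant regions for the requested replica count")

    chosen: list[Region] = []
    providers_used: set[str] = set()
    # Pass 1: take the fastest region of each provider for redundancy.
    for region in eligible:
        if len(chosen) < replicas and region.provider not in providers_used:
            chosen.append(region)
            providers_used.add(region.provider)
    # Pass 2: fill any remaining slots with the next-fastest regions.
    for region in eligible:
        if len(chosen) < replicas and region not in chosen:
            chosen.append(region)
    return chosen
```

Filtering on jurisdiction before ranking means a compliance rule can never be traded away for lower latency, and raising an error when too few regions qualify surfaces the conflict instead of silently under-replicating.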

The integration of cloud services with multi-modal MCP servers enables scalable, cost-effective, and highly available systems that leverage managed cloud capabilities while maintaining protocol consistency. Hybrid cloud strategies add flexibility and redundancy, improving system resilience and operational efficiency while preserving the simplicity and reliability inherent in MCP's design.

Real-World Use Cases and Examples

A. Document Processing Pipeline

Document processing pipelines represent comprehensive multi-modal workflows that demonstrate the full capabilities of MCP servers in handling diverse content types within unified processing architectures. These pipelines integrate PDF parsing, optical character recognition, format conversion, and metadata extraction operations while maintaining efficient resource utilization and scalable processing characteristics.
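Before looking at the server itself, it may help to see the pipeline idea in isolation: each operation is a stage that takes a document and returns it enriched, and the pipeline is an ordered list of stages. The sketch below is a simplified illustration only. The `Document` type, the two stage functions, and `run_pipeline` are hypothetical, and real stages would wrap PDF parsing, OCR, or format conversion.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Document:
    data: bytes
    doc_type: str
    text: str = ""
    metadata: dict = field(default_factory=dict)


Stage = Callable[[Document], Document]


def decode_text(doc: Document) -> Document:
    """Stage: turn plain-text payloads into a string; other types pass through."""
    if doc.doc_type == "txt":
        doc.text = doc.data.decode("utf-8", errors="ignore")
    return doc


def extract_metadata(doc: Document) -> Document:
    """Stage: record basic size and word-count metadata."""
    doc.metadata.update(size_bytes=len(doc.data), word_count=len(doc.text.split()))
    return doc


def run_pipeline(doc: Document, stages: list[Stage]) -> Document:
    """Run stages in order, recording which ones were applied."""
    for stage in stages:
        doc = stage(doc)
        doc.metadata.setdefault("stages", []).append(stage.__name__)
    return doc
```

Because every stage shares one signature, a format-specific step (for example an OCR stage for scanned pages) can be added or reordered without touching the others.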

PDF parsing and text extraction are the fundamental operations in document processing workflows. The implementation uses specialized libraries (PyPDF2 for PDF parsing and pytesseract for OCR in the example below) within the MCP server to provide document analysis capabilities. MCP's resource management system supports handling of large document collections while enabling granular access control and processing optimization:

import base64
import hashlib
import io

import PyPDF2
import pytesseract
from PIL import Image
from mcp import types
from mcp.server import Server  # Server is exported by mcp.server in the Python SDK


class DocumentProcessingMCPServer:
    def __init__(self):
        self.server = Server("document-processing-server")
        self.supported_formats = ['pdf', 'docx', 'txt', 'png', 'jpg', 'jpeg']
        self.setup_document_tools()

    def setup_document_tools(self):
        @self.server.call_tool()
        async def extract_text_from_document(arguments: dict) -> list[types.TextContent]:
            document_b64 = arguments.get("document_data")
            document_type = arguments.get("document_type")
            ocr_enabled = arguments.get("ocr_enabled", False)

            try:
                document_bytes = base64.b64decode(document_b64)

                if document_type == "pdf":
                    text_content = await self._extract_pdf_text(document_bytes, ocr_enabled)
                elif document_type in ["png", "jpg", "jpeg"]:
                    text_content = await self._extract_image_text(document_bytes)
                else:
                    text_content = document_bytes.decode('utf-8', errors='ignore')

                # Create processed document resource. A content digest is used
                # because the built-in hash() of a str changes between processes.
                digest = hashlib.sha256(text_content.encode("utf-8")).hexdigest()[:16]
                processed_uri = f"processed://document/{digest}"

                # Emit a log message to the client for this request
                await self.server.request_context.session.send_log_message(
                    level="info",
                    data=f"Document processed: extracted {len(text_content)} characters"
                )

                return [types.TextContent(
                    type="text",
                    text=f"Text extraction completed. Length: {len(text_content)} characters. "
                         f"Resource URI: {processed_uri}"
                )]
            except Exception as e:
                return [types.TextContent(
                    type="text",
                    text=f"Document processing failed: {str(e)}"
                )]

        @self.server.call_tool()
        async def convert_document_format(arguments: dict) -> list[types.TextContent | types.EmbeddedResource]:
            source_document = arguments.get("source_document")
            target_format = arguments.get("target_format")
            source_format = arguments.get("source_format")

            try:
                converted_content = await self._convert_document(
                    source_document, source_format, target_format
                )

                return [
                    types.TextContent(
                        type="text",
                        text=f"Document converted from {source_format} to {target_format}"
                    ),
                    # Binary tool output is returned as an embedded resource
                    # wrapping the base64-encoded blob contents
                    types.EmbeddedResource(
                        type="resource",
                        resource=types.BlobResourceContents(
                            uri=f"converted://document/{target_format}",
                            blob=base64.b64encode(converted_content).decode(),
                            mimeType=self._get_mime_type(target_format)
                        )
                    )
                ]
            except Exception as e:
                return [types.TextContent(
                    type="text",
                    text=f"Document conversion failed: {str(e)}"
                )]

        @self.server.list_resources()
        async def list_processed_documents() -> list[types.Resource]:
            # Placeholder listing of ten processed documents
            return [
                types.Resource(
                    uri=f"processed://document/{i}",
                    name=f"Processed Document {i}",
                    mimeType="text/plain"
                ) for i in range(10)
            ]

    async def _extract_pdf_text(self, pdf_bytes: bytes, ocr_enabled: bool) -> str:
        pdf_file = io.BytesIO(pdf_bytes)
        pdf_reader = PyPDF2.PdfReader(pdf_file)
        text_content = ""

        for page in pdf_reader.pages:
            # extract_text() can yield nothing for image-only pages
            page_text = page.extract_text() or ""
            if page_text.strip():
                text_content += page_text + "\n"
            elif ocr_enabled:
                # OCR fallback for scanned pages