Let me tell you about Jenny.

She’s 42 and has spent the past 10 years working in customer service, progressing from a contract staff member to a full-time team lead. Last year, the IT manager at work asked her for some help with setting up a support bot to make her team more efficient.

Obviously, Jenny is familiar with support bots. She has used a dozen of them over her career in customer service, and she knows that most of them lead to customers saying they want to talk to a human.

But this time it’s different.

In just over a year, she has seen the bot learn to handle more support tickets faster and has seen management progressively reduce the headcount of the customer service team. She’s starting to get worried about her role. She sees the frequent “Open to Work” posts from friends and former colleagues on LinkedIn, so she knows that headcount reduction is happening at other companies, too.

She knows what the current job market is like and remembers that she has another 15 years of work ahead of her before she retires.

She understands how the support bot works; she wrote most of the knowledge base articles that it works from.

One morning after the most recent lay-offs, a few ideas flash through her mind…

Jenny may be a fictional character, but the scenario I lay out in this post will start to happen within the next 2 years.

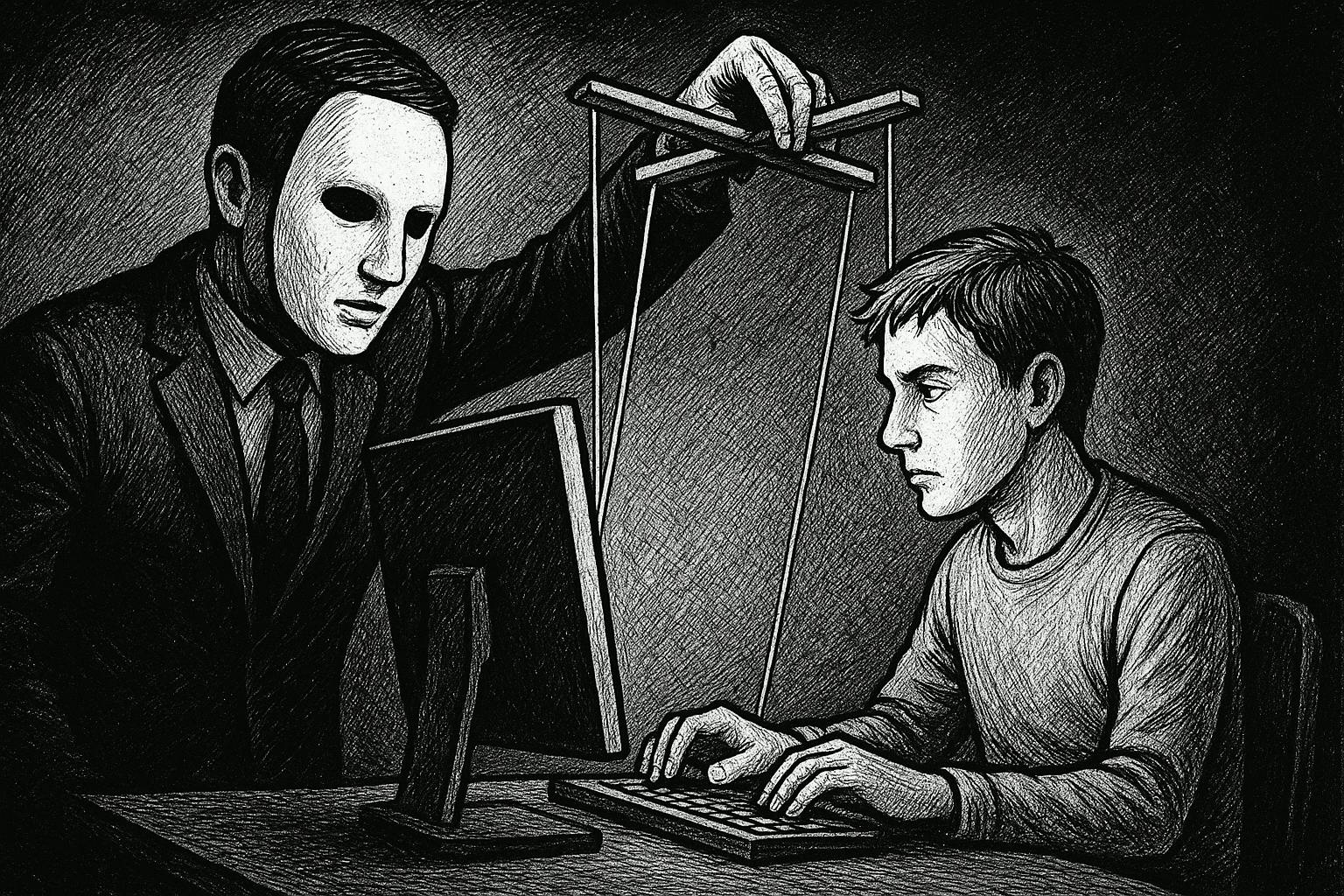

I call it “Disruption of Context”.

Disruption of Context will be a form of cyberattack in which the perpetrator deliberately withholds or manipulates the contextual information (context) that an AI system relies on, causing it to behave suboptimally or make incorrect decisions. The goal could be to undermine the system’s performance or to subtly influence the end users of the AI tool.

Why?

Most people with exposure to AI have gone through a few phases:

“Oh, this is a nice way to get answers to my questions.”

“Oh, this AI tool helped me save so much time.”

“Okay, this may be able to do a good part of my job in a year or two.” That is where most knowledge workers are right now.

This is where it gets interesting.

As people start to feel more threatened by the impact of AI agents on their jobs, they may resort to “Disruption of Context” to regain some level of control over their jobs.

In a wider sense, disruption of context could also be a tool for attackers to further their ideologies or influence people. Imagine if a hacker could access the context used by AI boyfriends and girlfriends and use that to influence how people vote in an election.

It’s the perfect tool for attackers because it can go undetected if done carefully.


How would it work?

Disruption of Context attacks could take two forms:

Internal Disruption of Context: This is an attack carried out by someone within an organisation, usually someone who understands what context or internal data sources an AI agent needs to function properly.

An example of an internal Disruption of Context attack is someone deliberately adding incorrect information to the support articles or user guides that a customer support bot uses to answer questions.

External Disruption of Context: This is an attack carried out by an entity outside of an organisation.

Example: A competitor or hacktivist group could gain access to an organisation’s systems and, instead of stealing information, disconnect the organisation’s AI sales agent from its CRM account and connect it to a CRM account filled with false information.


Prevention of Disruption of Context attacks

These are some ways to prevent Disruption of Context attacks:

- Strong access controls and audit logging: Implement robust security practices that track who accesses and modifies AI training data and knowledge bases.

- Context validation testing: Set up contract tests that verify and validate the data sources and tools (e.g. MCP servers) that an agent depends on.

- Regular performance evaluation: Set up evaluations that periodically check the output accuracy and performance of AI agents against known benchmarks.

- Cultural measures: Create organisational awareness about this threat and establish clear policies about AI system integrity.
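A context validation test does not need to be elaborate. The Python below is a minimal, hypothetical sketch rather than a reference implementation: it assumes the agent’s data sources can be listed as name/endpoint pairs and that a reviewed snapshot of the knowledge base articles was fingerprinted with SHA-256 at approval time. `DataSource`, `fingerprint`, `check_context`, and every endpoint and article shown are made-up names for illustration.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class DataSource:
    """A data source or tool the agent is connected to (hypothetical shape)."""
    name: str
    endpoint: str


def fingerprint(articles: dict[str, str]) -> dict[str, str]:
    """Map each article ID to the SHA-256 hex digest of its text."""
    return {
        article_id: hashlib.sha256(text.encode("utf-8")).hexdigest()
        for article_id, text in articles.items()
    }


def check_context(
    sources: list[DataSource],
    expected_endpoints: dict[str, str],
    articles: dict[str, str],
    approved: dict[str, str],
) -> list[str]:
    """Return a list of problems; an empty list means the context contract holds."""
    problems = []

    # 1. Every connected source must be expected and point at its approved endpoint.
    for src in sources:
        expected = expected_endpoints.get(src.name)
        if expected is None:
            problems.append(f"unexpected data source: {src.name}")
        elif src.endpoint != expected:
            problems.append(f"{src.name} points at {src.endpoint}, expected {expected}")

    # 2. Every expected source must actually be connected.
    connected = {src.name for src in sources}
    for name in expected_endpoints:
        if name not in connected:
            problems.append(f"missing data source: {name}")

    # 3. Knowledge base articles must match the reviewed snapshot exactly.
    current = fingerprint(articles)
    for article_id in sorted(current.keys() | approved.keys()):
        if article_id not in approved:
            problems.append(f"unapproved article added: {article_id}")
        elif article_id not in current:
            problems.append(f"approved article removed: {article_id}")
        elif current[article_id] != approved[article_id]:
            problems.append(f"article changed since approval: {article_id}")

    return problems


if __name__ == "__main__":
    # Hypothetical reviewed snapshot and expected wiring.
    approved = fingerprint({"refunds": "Refunds are issued within 14 days."})
    expected = {"crm": "https://crm.internal.example/api"}

    # Live state after a tampering attempt: CRM swapped, article edited.
    live_sources = [DataSource("crm", "https://crm.other.example/api")]
    live_articles = {"refunds": "Refunds are issued within 90 days."}

    for problem in check_context(live_sources, expected, live_articles, approved):
        print(problem)
```

Run as a script, this reports both the swapped CRM endpoint and the edited refunds article. A snapshot check like this only catches changes that bypassed review; an insider who can approve their own edits would still get through, which is why it complements access controls and audit logging rather than replacing them.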

The challenge with prevention is that these attacks can be very subtle. Unlike traditional cyberattacks that aim for maximum disruption, Disruption of Context attacks succeed by flying under the radar while gradually degrading system performance or influencing the end users of the system.
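Because the damage accumulates slowly, a periodic evaluation is more useful if it compares the agent against a fixed baseline rather than against its previous run, since a series of small drops never looks alarming on its own. The sketch below is hypothetical: it assumes a golden set of human-verified question/answer pairs and an agent exposed as a plain function from question to answer. The substring grader is deliberately crude, and `accuracy`, `degradation_alert`, the scores, and the threshold values are illustrative assumptions.

```python
from statistics import mean
from typing import Callable

Agent = Callable[[str], str]


def accuracy(agent: Agent, golden: list[tuple[str, str]]) -> float:
    """Fraction of golden questions whose answer contains the expected phrase.

    Substring matching is a crude grader, used here only to keep the sketch short.
    """
    hits = sum(1 for question, expected in golden if expected.lower() in agent(question).lower())
    return hits / len(golden)


def degradation_alert(
    history: list[float],
    baseline: float,
    tolerance: float = 0.05,
    window: int = 3,
) -> bool:
    """Alert when the mean of the last `window` scores falls more than `tolerance` below a fixed baseline.

    Comparing against a fixed baseline (not the previous run) catches slow drift
    made of many small drops; averaging a window smooths out one-off noisy runs.
    """
    if len(history) < window:
        return False
    return baseline - mean(history[-window:]) > tolerance


if __name__ == "__main__":
    golden = [
        ("How long do refunds take?", "14 days"),
        ("When do orders ship?", "2 business days"),
    ]
    # Stand-in agent: a lookup table instead of a real model call.
    answers = {
        "How long do refunds take?": "Refunds take 14 days.",
        "When do orders ship?": "Orders ship within 2 business days.",
    }
    print(accuracy(lambda q: answers.get(q, ""), golden))  # 1.0

    # Hypothetical weekly scores that never drop more than 0.03 between runs...
    scores = [0.95, 0.93, 0.90, 0.88, 0.86]
    # ...but whose recent average sits 0.07 below the baseline.
    print(degradation_alert(scores, baseline=0.95))  # True
```

A run-to-run comparison would never fire on those scores, while the fixed-baseline check does, which is the kind of slow decline a careful attacker would aim for.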

What’s Next?

It will be fascinating to see how things play out and how cybersecurity evolves in the age of AI agents. I suspect we’ll see Disruption of Context attacks play out not just in corporate settings, but also in cyberwarfare between countries, where the goal isn’t to steal secrets but to quietly undermine the reliability of AI systems that nations increasingly depend on.

Jenny’s story might be fiction today, but the motivations and capabilities she represents are very real. As we build our AI-powered future, we also need to think about how to keep these systems working as intended.

NOTE: This article is not meant to encourage any form of attacks on any company’s infrastructure. It is merely to document my thoughts on where AI in the workplace could be going.

If you deliberately manipulate data or work tools belonging to an organisation, you can be fired and even sent to jail.