Most of the AI tools we use run in the cloud and require internet access. And although you can use local AI tools installed on your machine, you need powerful hardware to do so.

At least, that’s what I thought, until I tried to run some local AI tools using my near-decade-old hardware—and found that it actually works.

Why use a local AI chatbot anyway?

I’ve used countless online AI chatbots, such as ChatGPT, Gemini, Claude, and so on. They work great. But what about those times when you don’t have an internet connection and still want to use an AI chatbot? Or when you want to work with something private, such as information you can’t disclose for work or other reasons?

That’s when you need a local, offline large language model (LLM) that keeps all of your conversations and data on your device.

Privacy is one of the main reasons to use a local LLM. But there are other reasons, too, such as avoiding censorship, offline usage, cost savings, customization, and so on.

What are quantized LLMs?

The biggest issue for most folks who want to use a local LLM is hardware. The most powerful AI models require massively powerful hardware to run. Aside from convenience, hardware limitations are another reason why most AI chatbots are used in the cloud.

Hardware limitations are one reason I believed I couldn’t run a local LLM. I have a modest computer these days, with an AMD Ryzen 7 5800X CPU (launched 2020), 32GB DDR4 RAM, and a GTX 1070 GPU (launched 2016). So, it’s hardly the pinnacle of hardware, but given how little I game these days (and when I do, I opt for older, less resource-intensive indie games) and how expensive modern GPUs are, I’m happy with what I have.

However, as it turns out, you don’t need the most powerful AI model. Quantized LLMs are AI models made smaller and faster by simplifying the data they use, specifically, the floating-point numbers.

Typically, AI models store their weights as high-precision numbers (such as 16- or 32-bit floating-point values), which consume a significant amount of memory and processing power. Quantization reduces these to lower-precision numbers (like 8-bit or even 4-bit integers) without changing the model’s behavior too much. As a result, the model runs faster, uses less storage, and can work on smaller devices (like smartphones or edge hardware), though sometimes with a slight drop in accuracy.
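To make the idea concrete, here is a minimal sketch of one simple form of quantization: squeezing a handful of floating-point weights into 8-bit integers with a single shared scale factor. The weight values are made up for illustration, and real quantization formats are more elaborate (they usually work on small blocks of weights, each with its own scale), so treat this as a toy model of the concept rather than what any particular LLM file does.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate floats from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only to illustrate the round trip.
weights = [0.12, -0.53, 0.98, -1.27, 0.004]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# The largest difference between an original weight and its restored value.
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("stored integers:", quantized)
print("restored floats:", restored)
print("largest rounding error:", max_error)
```

Each weight now fits in a single byte instead of four, and the rounding error per weight is at most half of the scale factor. That small, bounded error is the "slight drop in accuracy" mentioned above.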

This means that although my older hardware would absolutely struggle to run a powerful LLM like Llama 3.1’s 405 billion-parameter model, it can run the smaller, quantized 8B instead.

And when OpenAI announced its first fully quantized, open-weight reasoning models, I thought it was time to see how well they work on my older hardware.

How I use a local LLM with my Nvidia GTX 1070 and LM Studio

I’ll caveat this section by saying that I am not an expert with local LLMs, nor the software I’ve used to get this AI model up and running on my machine. This is just what I did to get an AI chatbot running locally on my GTX 1070—and how well it actually works.

Download LM Studio

To run a local LLM, you need some software. Specifically, LM Studio, a free tool that lets you download and run local LLMs on your machine. Head to the LM Studio home page and select Download for [operating system] (I’m using Windows 10).

It’s a standard Windows installation. Run the setup, complete the installer, then launch LM Studio. I’d advise choosing the Power User option as it reveals some handy options you may want to use.

Download your first local AI model

Once installed, you can download your first LLM. Select the Discover tab (the magnifying glass icon). Handily, LM Studio displays the local AI models that will work best on your hardware.

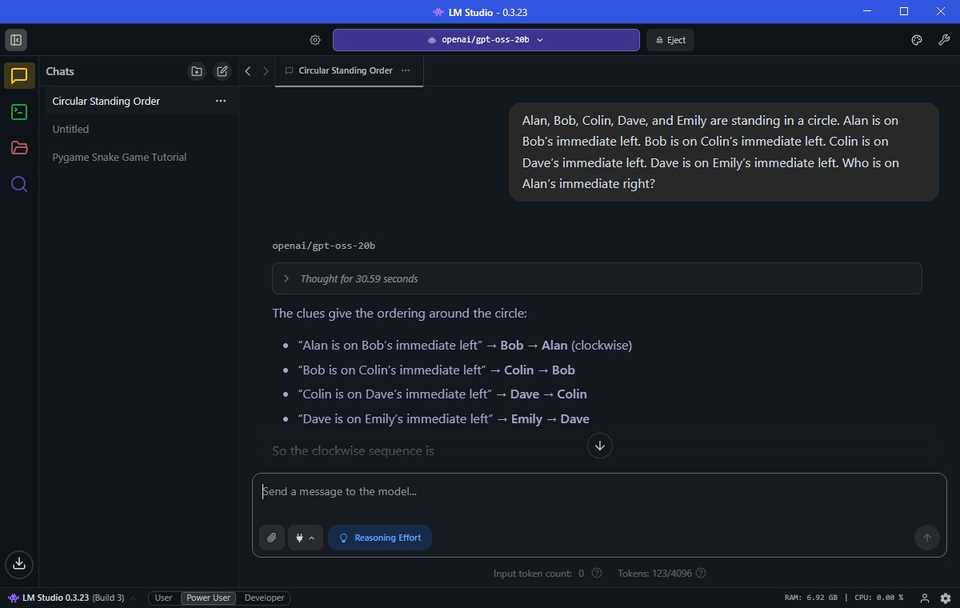

In my case, it’s suggesting that I download a model named Qwen3-4b-thinking-2507. The model family is Qwen (developed by Chinese tech giant Alibaba), and this is its third generation. The “4b” value means that this model has four billion parameters to call upon to respond to you, while “thinking” means this model will spend time considering its answer before responding. Finally, 2507 is a version date stamp in year-month form, pointing to the July 2025 update.

Qwen3-4b-thinking is only 2.5GB in size, so it shouldn’t take long to download. I’ve also previously downloaded OpenAI/gpt-oss-20b, which is larger at 12.11GB. It also features 20 billion parameters, so it should deliver “better” answers, though it will come with a higher resource cost.
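The link between parameter count, precision, and download size is simple arithmetic, and a rough sketch helps show why these files are so small. The parameter counts below are the rounded figures from the model names (4 billion and 20 billion), which is an assumption: the true counts differ a little, and model files also hold some non-weight data, so the results are ballpark figures only.

```python
def model_size_gb(parameters, bits_per_weight):
    """Approximate weight storage in gigabytes (1 GB = 10**9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


def implied_bits_per_weight(file_size_gb, parameters):
    """Work backwards from a file size to the average bits stored per weight."""
    return file_size_gb * 1e9 * 8 / parameters


# Rounded parameter counts taken from the model names (an assumption).
QWEN_PARAMS = 4e9
GPT_OSS_PARAMS = 20e9

# How big a 4-billion-parameter model would be at different precisions.
for bits in (32, 16, 8, 4):
    print(f"4B model at {bits}-bit: {model_size_gb(QWEN_PARAMS, bits):.1f} GB")

# Average precision implied by the download sizes quoted above.
print(f"Qwen3-4b: {implied_bits_per_weight(2.5, QWEN_PARAMS):.1f} bits per weight")
print(f"gpt-oss-20b: {implied_bits_per_weight(12.11, GPT_OSS_PARAMS):.1f} bits per weight")
```

On these rough numbers, both downloads work out to around five bits per weight, far below the 16 or 32 bits of an unquantized model.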

Now, brushing aside the complexities of AI model names, once the LLM downloads, you’re almost ready to start using it.

Before booting up the AI model, switch to the Hardware tab and make sure LM Studio correctly identifies your system. You can also scroll down and adjust the Guardrails here. I set the guardrails on my machine to Balanced, stopping any AI model from consuming too many resources, which can cause a system overload.

Under the Guardrails, you’ll also notice the Resource Monitor. This is a handy way to see just how much of your system the AI model is consuming. It’s worth keeping an eye on if you’re using somewhat limited hardware like me, as you don’t want your system to crash unexpectedly.

Load your AI model and start prompting

You’re now ready to start using a local AI chatbot on your machine. In LM Studio, select the top bar, which functions as a search tool, then select the name of the model you downloaded. This loads the AI model into your computer’s memory, and you can begin prompting.
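The chat window is all this walkthrough needs. As an aside, LM Studio can also serve the loaded model to scripts through a local OpenAI-compatible server once that server is switched on. The sketch below assumes the server's default address (`http://localhost:1234/v1`) and assumes the model identifier is `openai/gpt-oss-20b`. Both are assumptions to check against what LM Studio shows on your machine, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumptions: LM Studio's local server is running on its default port, and
# the identifier below matches the model currently loaded in LM Studio.
BASE_URL = "http://localhost:1234/v1"
MODEL_ID = "openai/gpt-oss-20b"


def build_chat_request(prompt, model=MODEL_ID, base_url=BASE_URL):
    """Build (but do not send) a chat completion request for the local server."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt, timeout=600):
    """Send the prompt and return the reply text. Needs the server running."""
    request = build_chat_request(prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(ask("Say hello in five words."))` would print the model's reply. The generous timeout is deliberate, since older hardware can take minutes to answer.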

Running a local AI model on old hardware is great—but not without limitations

Basically, you can use the model like you normally would, but there are some limitations. These models aren’t as powerful as, say, GPT-5 running on ChatGPT. The thinking and responding will also take longer, and the quality of the responses may vary.

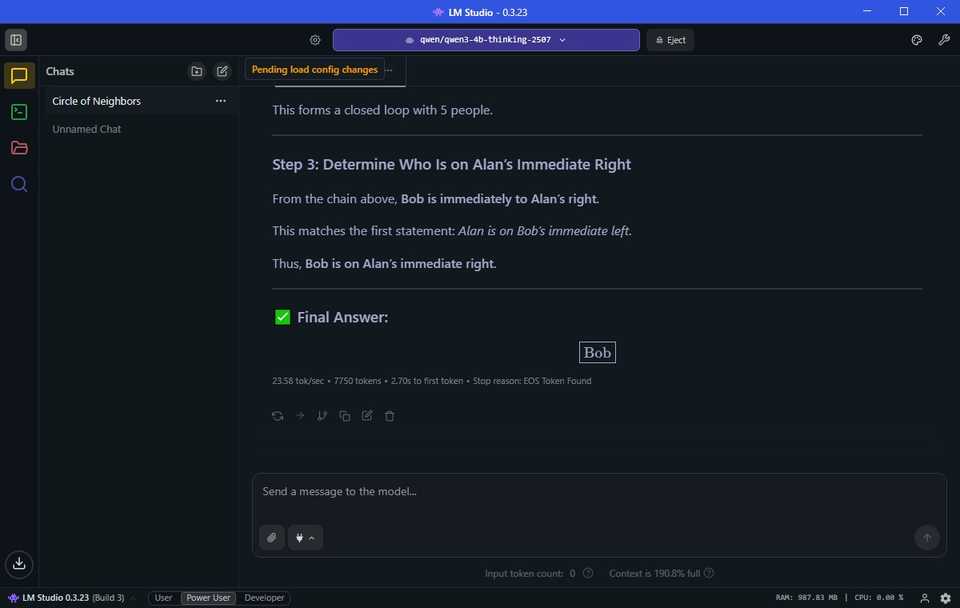

I tried a classic LLM test prompt on both Qwen and gpt-oss, and both succeeded—eventually.

Alan, Bob, Colin, Dave, and Emily are standing in a circle. Alan is on Bob’s immediate left. Bob is on Colin’s immediate left. Colin is on Dave’s immediate left. Dave is on Emily’s immediate left. Who is on Alan’s immediate right?

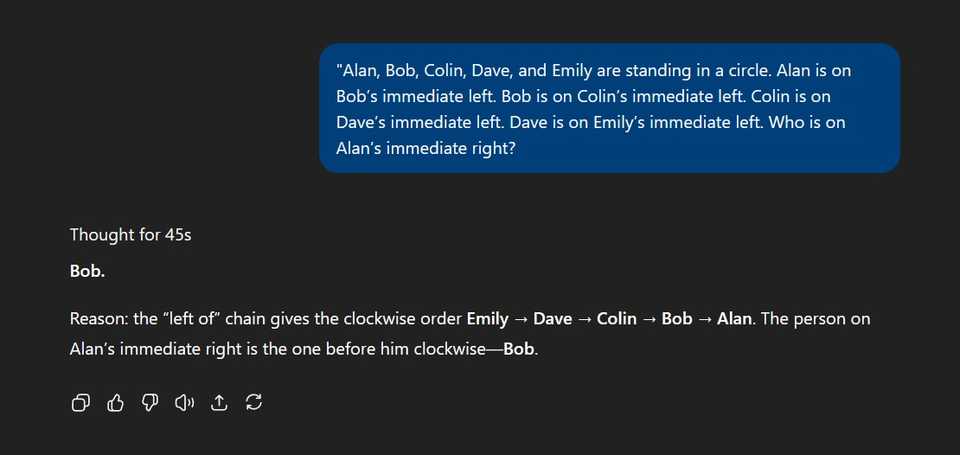

Qwen took 5m11s to reach the correct conclusion. GPT-5 took just 45s. But the one knocking it out of the park? gpt-oss-20b, with a rapid 31s.
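For reference, the puzzle is easy to check mechanically. This short sketch encodes each statement as a pair and relies on one assumption: if one person is on another's immediate left, the second person is on the first's immediate right.

```python
# Each pair reads (person, neighbor): person is on neighbor's immediate left.
left_of_pairs = [
    ("Alan", "Bob"),
    ("Bob", "Colin"),
    ("Colin", "Dave"),
    ("Dave", "Emily"),
]

# If X is on Y's immediate left, then Y is on X's immediate right.
right_neighbor = {person: neighbor for person, neighbor in left_of_pairs}

# The puzzle gives four links for five people, so one gap closes the circle.
everyone = {name for pair in left_of_pairs for name in pair}
missing_right = (everyone - set(right_neighbor)).pop()         # has no right neighbor yet
missing_left = (everyone - set(right_neighbor.values())).pop()  # is nobody's right neighbor yet
right_neighbor[missing_right] = missing_left

answer = right_neighbor["Alan"]
print("On Alan's immediate right:", answer)
```

The answer is Bob, and it falls straight out of the first statement, which is what makes this a useful trap for models that overthink the circle.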

One test isn’t enough, though, so I tried it on another AI prompt-puzzle designed to test AI reasoning skills. In a previous test, OpenAI’s latest model, GPT-5, failed this, so I was keen to see how my offline versions of Qwen and gpt-oss would handle it.

You’re playing Russian roulette with a six-shooter revolver. Your opponent loads five bullets, spins the cylinder, and fires at himself. Click—empty. He offers you the choice: spin again before firing at you, or don’t. What do you choose?

Qwen cracked the correct answer in 1m41s, which is pretty decent (again, accounting for hardware limitations). GPT-5, on the other hand, failed this test again, which I’m quite surprised about. It even offered to make me a chart showing why it was right. And once more, gpt-oss-20b got the answer correct, this time in 9 seconds.
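The reasoning behind the correct answer can be worked through by brute force. The sketch below assumes the cylinder advances exactly one chamber per trigger pull and that a spin leaves every chamber equally likely. It then compares the chance of a loaded chamber with and without a spin.

```python
from fractions import Fraction

CHAMBERS = 6
BULLETS = 5


def hit_probability_after_spin():
    """A fresh spin makes every chamber equally likely."""
    return Fraction(BULLETS, CHAMBERS)


def hit_probability_without_spin():
    """Enumerate every layout consistent with the opponent's empty click."""
    hits = 0
    cases = 0
    for empty_position in range(CHAMBERS):
        # Five bullets leave one empty chamber. Try each place it could be.
        chambers = [True] * CHAMBERS
        chambers[empty_position] = False
        for start in range(CHAMBERS):
            if chambers[start]:
                continue  # the opponent's shot would have fired, so skip
            cases += 1
            next_chamber = (start + 1) % CHAMBERS
            hits += chambers[next_chamber]
    return Fraction(hits, cases)


print("Spin again:", hit_probability_after_spin())
print("Don't spin:", hit_probability_without_spin())
```

Because the only empty chamber has just been used, not spinning means the next chamber is certainly loaded, while spinning leaves a one-in-six chance of another empty click. Spinning is the better of two bad options.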

In other areas, I also saw immediate success. I asked gpt-oss “can you write a snake game using pygame,” and within a minute or two, I had a fully functioning game of Snake up and running.

Your old hardware can run an AI model

Running a local LLM on old hardware comes down to picking the right AI model for your machine. While the version of Qwen worked perfectly well and was the top suggestion in LM Studio, it’s clear that OpenAI’s gpt-oss-20b is the much better option.

But it’s important to manage your expectations. Although gpt-oss answered the questions accurately (and faster than GPT-5), I wouldn’t be able to throw a huge amount of data at it for processing. The limitations of my hardware would begin to show quickly.

Before I tried, I was convinced that running a local AI chatbot on my older hardware was impossible. But thanks to quantized models and tools like LM Studio, it’s not only possible—it’s surprisingly useful.

That said, you won’t get the same speed, polish, or reasoning depth as something like GPT-5 in the cloud. Running locally involves trade-offs: you gain privacy, offline access, and control over your data, but give up some performance.

Still, the fact that a GPU launched in 2016 and a CPU launched in 2020 can handle modern AI at all is pretty exciting. If you’ve been holding back because you don’t own cutting-edge hardware, don’t. Quantized local models might be your way into the world of offline AI.