Arm has just unveiled its next-gen processor technologies for upcoming smartphones, which could potentially land in consumer hands as soon as the end of the year. As usual, we have new CPU and GPU parts to cover, but there are also a lot of subtle changes to the familiar formula to get our heads around this year.

That’s hardly surprising; the landscape has changed quite rapidly in the last twelve months. Qualcomm has gone down the custom Arm-based CPU route with the Snapdragon 8 Elite, resulting in fewer high-profile flagships using Arm IP this year. At the same time, Google has moved to Imagination Technologies for graphics, while the rapid advancement of AI has thrown a spanner into traditional performance metrics. Arm’s latest announcement moves to address at least some of these challenges.

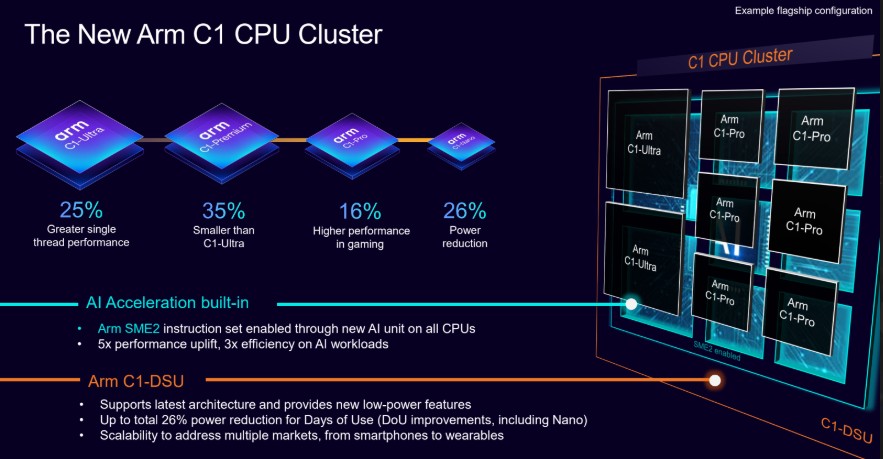

First up is another rebrand. Last year’s Cortex-X and Cortex-A monikers give way to new C1 CPUs, which are divided into Ultra, Premium, Pro, and Nano cores. We’ll get into all this in a minute. Graphics has undergone a slightly less drastic rename: Mali remains, but the short-lived, high-end Immortalis brand gives way to far simpler G1-Ultra, G1-Premium, and G1-Pro branding.

Another notable change is that Arm is taking a greater role in platform design and turnkey solutions. In other words, designs that are ready to plonk right into a chip. I’m still a little unsure about what this means for the traditional individual part licensing structure. Arm indicates that flexible platform customization remains, and I assume customers can still cherry-pick specific CPU and GPU parts if they want. Still, Arm aims to speed up time to market with its more tightly integrated platform and close relationships with foundries like TSMC. By the way, platform options that contain C1-Ultra and C1-Premium cores fall under the Arm Lumex branding umbrella.

With all that jostling around in the back of our brains, it’s time to examine what’s new on the hardware side.

Meet Arm C1, from Ultra to Nano

With the new Arm C1 names comes a subtle shift in the architecture. All of the new cores are bumped up to Armv9.3, which means we say goodbye to mixing and matching with previous Cortex-X and Cortex-A models. That’s right, there won’t be any more multi-tier Cortex-X parts in this year’s chipset announcements. Still, the C1-Ultra and C1-Premium can be considered successors to the Cortex-X925, the C1-Pro is the new middle core that replaces the Cortex-A725, and the C1-Nano is the revamped Cortex-A520. So we’re still looking at three different microarchitectures. The difference between the C1-Ultra and C1-Premium is that the latter is optimized for a 35% smaller area footprint, making it cheaper for upper-mid-tier chipsets but with a slight performance penalty.

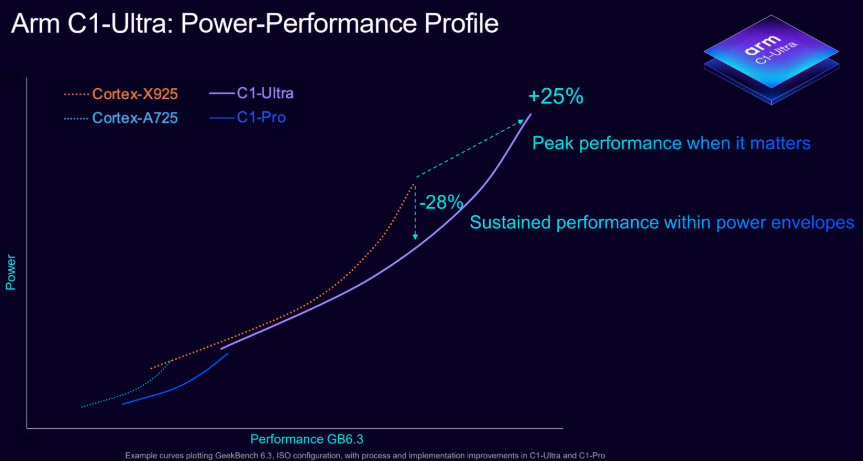

Speaking of performance, IPC gains (measured at the same clock speed, cache configuration, etc., as last year) are reasonable but perhaps not as groundbreaking as you might expect from the rename. The Arm C1-Ultra appears to be in the region of 12% faster than the Cortex-X925, a ballpark I’ve pulled from a rather poorly labeled graph we saw. However, this increases to 25% once you factor in the move to 3nm and the higher clock speed potential of a 4.1GHz C1-Ultra versus a 3.6GHz Cortex-X925. Perhaps the bigger deal is that the C1-Ultra can offer the same performance as last year’s core while consuming 28% less power.
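As a rough sanity check on those figures, if the IPC and clock gains compounded perfectly they would simply multiply. The sketch below does that arithmetic with the numbers quoted above (12% IPC, 4.1GHz versus 3.6GHz). Treating performance as IPC times frequency is an assumption; memory-bound code rarely scales linearly with clock speed, and the function name is hypothetical.

```python
def combined_uplift(ipc_gain: float, new_clock_ghz: float, old_clock_ghz: float) -> float:
    """Idealized speedup when an IPC gain and a clock-speed gain multiply.

    Assumes performance = IPC x frequency, i.e. perfect scaling with clock.
    """
    clock_ratio = new_clock_ghz / old_clock_ghz
    return (1.0 + ipc_gain) * clock_ratio


if __name__ == "__main__":
    ideal = combined_uplift(0.12, 4.1, 3.6)
    print(f"Idealized uplift: {(ideal - 1) * 100:.1f}%")
```

The idealized result lands at about 27.6%, a touch above the roughly 25% Arm quotes, which is what you would expect if not every workload scales fully with frequency.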

To achieve this, the Arm C1-Ultra is once again a higher-throughput architecture than its predecessor. The core’s out-of-order window is 25% larger and now handles roughly 2,000 instructions in flight at once, up from around 1,500 on the X925. There’s a 33% increase in L1 instruction-cache bandwidth, too, helping to pull in those instructions faster. It’s unclear whether Arm has beefed up the execution units to make use of these extra instructions; given the limited IPC gain, not much may have changed there, and the focus seems to be on front-end optimizations. In any case, Arm states that its premium cores are built to scale all the way up to tablet- and laptop-class performance. I’ll keep watching this space.
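Why does a bigger out-of-order window matter? Little’s law gives a back-of-the-envelope answer: the number of instructions that must be in flight equals the sustained throughput multiplied by the latency you are trying to hide. The sketch below applies it. The 6-instructions-per-cycle throughput and 300-cycle memory miss are hypothetical round numbers for illustration, not Arm figures, and the model ignores every limit other than window size.

```python
def window_needed(ipc: float, latency_cycles: float) -> float:
    """Little's law: entries in flight = throughput (IPC) x latency (cycles)."""
    return ipc * latency_cycles


def ipc_while_hiding(window_entries: int, latency_cycles: float) -> float:
    """Upper bound on IPC sustained across a stall of the given length,
    assuming the window is the only limit (hypothetical, simplified model)."""
    return window_entries / latency_cycles


if __name__ == "__main__":
    # Hypothetical: a core sustaining 6 IPC while a 300-cycle miss is outstanding.
    print(window_needed(6, 300))
    # The article's approximate window sizes against the same hypothetical miss.
    for name, window in [("Cortex-X925", 1500), ("C1-Ultra", 2000)]:
        print(name, round(ipc_while_hiding(window, 300), 2))
```

Under these toy assumptions, a 1,500-entry window tops out at 5 IPC across the miss, while a roughly 2,000-entry window leaves headroom above 6.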

The C1-Pro has seen a similar focus on the front end, with a larger, smarter branch predictor and a bigger branch target buffer (BTB) to reduce mispredicts. Caches have been bolstered, too, with higher L1 data bandwidth and lower L2 TLB latency to reduce stall cycles. Both of these contribute to power savings, and the C1-Pro is impressive: Arm claims it’ll offer the same performance as the Cortex-A725 with a 26% reduction in power, or 11% more performance for the same power, once you factor in SME2, which we’ll look at more closely in a minute.
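For readers unfamiliar with the terms, the sketch below shows the textbook building blocks: a table of 2-bit saturating counters that predicts taken or not-taken, and a branch target buffer that remembers where a taken branch went so fetch can redirect early. This is a generic teaching model; Arm hasn’t disclosed the C1-Pro’s predictor design, and the table size and class names are hypothetical.

```python
from typing import Optional


class TwoBitPredictor:
    """Direction predictor: one 2-bit saturating counter per table entry.

    Counter values 0-1 predict not-taken, 2-3 predict taken.
    """

    def __init__(self, entries: int = 1024) -> None:
        self.entries = entries
        self.counters = [1] * entries  # start every entry weakly not-taken

    def _index(self, pc: int) -> int:
        return (pc >> 2) % self.entries  # drop the 4-byte instruction alignment bits

    def predict(self, pc: int) -> bool:
        return self.counters[self._index(pc)] >= 2

    def update(self, pc: int, taken: bool) -> None:
        i = self._index(pc)
        if taken:
            self.counters[i] = min(3, self.counters[i] + 1)
        else:
            self.counters[i] = max(0, self.counters[i] - 1)


class BranchTargetBuffer:
    """Maps a branch's address to its last-seen target so fetch can redirect early."""

    def __init__(self) -> None:
        self.targets: dict[int, int] = {}

    def lookup(self, pc: int) -> Optional[int]:
        return self.targets.get(pc)

    def record(self, pc: int, target: int) -> None:
        self.targets[pc] = target


def count_mispredicts(trace: list[tuple[int, bool]], predictor: TwoBitPredictor) -> int:
    """Run a (pc, taken) trace through the predictor and count wrong guesses."""
    misses = 0
    for pc, taken in trace:
        if predictor.predict(pc) != taken:
            misses += 1
        predictor.update(pc, taken)
    return misses


if __name__ == "__main__":
    # A loop branch taken 9 times then falling through, with the loop run 3 times.
    loop = [(0x4000, True)] * 9 + [(0x4000, False)]
    print(count_mispredicts(loop * 3, TwoBitPredictor()))
```

The counter’s hysteresis is why, once warmed up, the loop costs only one mispredict per exit: a single not-taken outcome moves the counter from 3 to 2, so it still predicts taken when the loop restarts.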

The new C1-Premium is a 35% smaller version of the C1-Ultra.

The little C1-Nano boasts a 26% boost to power efficiency over the Cortex-A520, and again, the focus has been on branch predictor secret sauce and cache improvements. In addition, the core’s vector performance has been enhanced, there’s better clock gating during stalls to improve power efficiency, and L3/DRAM traffic has been significantly reduced, which also helps cut system power. The performance gains are more modest, in the region of 5-8%, but the Nano is primarily for background tasks these days, where efficiency is far more critical.
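Clock gating saves power because pipeline logic that isn’t clocked while the core waits stops burning switching energy. The toy model below makes that concrete. The per-cycle energy values (in arbitrary units) and the stall fraction are hypothetical, not C1-Nano measurements.

```python
def energy_per_task(active_cycles: int, stall_cycles: int,
                    active_energy: float, stall_energy: float) -> float:
    """Total dynamic energy: busy cycles plus stalled cycles, each at its own cost."""
    return active_cycles * active_energy + stall_cycles * stall_energy


if __name__ == "__main__":
    active, stalled = 700, 300  # hypothetical: 30% of cycles spent waiting on memory
    ungated = energy_per_task(active, stalled, active_energy=1.0, stall_energy=0.6)
    gated = energy_per_task(active, stalled, active_energy=1.0, stall_energy=0.2)
    print(f"Energy saved by gating stalls: {(1 - gated / ungated) * 100:.1f}%")
```

With these made-up numbers, gating trims total energy by roughly 14%. Cutting L3/DRAM traffic helps from the other direction, by reducing how many stall cycles there are in the first place.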

Despite the name change, Arm’s CPUs continue on a steady trajectory of double-digit IPC gains, which is nothing to turn your nose up at. However, the bigger change this year is how Arm approaches AI workloads.

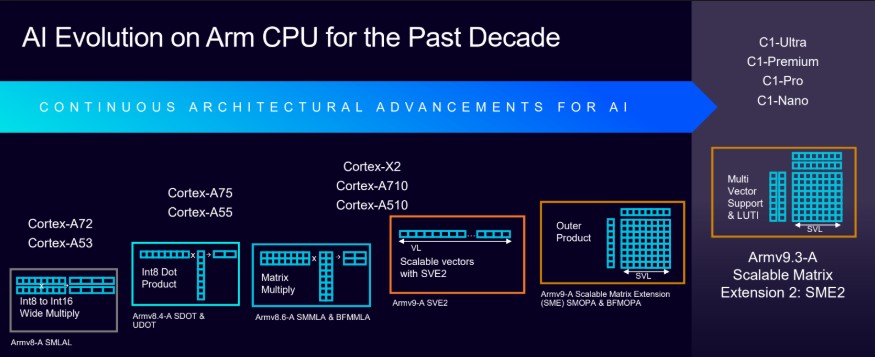

Betting the house on SME2 for AI

A significant change with the new CPUs is the introduction of SME2, Arm’s latest extension to accelerate common machine learning workloads. SME2 builds on the original SME (which Android chips have essentially skipped), adding multi-vector instructions and predicates, 2-bit/4-bit weight compression, and support for 1-bit binary networks. In other words, it crunches more AI workload types faster.
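The core trick behind SME and SME2 is outer-product-and-accumulate: rather than computing one dot product at a time, an instruction multiplies a column vector by a row vector and adds the whole result into a 2D tile of accumulators (the ZA array). The plain-Python sketch below shows how a matrix multiply decomposes into those rank-1 updates, plus how two 4-bit weights can share a single byte, the kind of packing low-bit weight formats rely on. It is a conceptual model only; real SME2 code uses compiler intrinsics or assembly, and these function names are hypothetical.

```python
def matmul_by_outer_products(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """C = A @ B computed as a sum of outer products (column k of A x row k of B).

    Each k-step is the kind of rank-1 update an SME outer-product instruction
    applies to a tile of accumulators.
    """
    if len(a[0]) != len(b):
        raise ValueError("inner dimensions must match")
    rows, inner, cols = len(a), len(b), len(b[0])
    tile = [[0.0] * cols for _ in range(rows)]  # stands in for a ZA tile
    for k in range(inner):
        col = [a[i][k] for i in range(rows)]
        row = b[k]
        for i in range(rows):
            for j in range(cols):
                tile[i][j] += col[i] * row[j]
    return tile


def pack_int4(low: int, high: int) -> int:
    """Pack two signed 4-bit values (-8..7) into one byte."""
    if not (-8 <= low <= 7 and -8 <= high <= 7):
        raise ValueError("int4 values must be in [-8, 7]")
    return ((high & 0xF) << 4) | (low & 0xF)


def unpack_int4(byte: int) -> tuple[int, int]:
    """Inverse of pack_int4: sign-extend each nibble back to a Python int."""
    def sign_extend(nibble: int) -> int:
        return nibble - 16 if nibble >= 8 else nibble
    return sign_extend(byte & 0xF), sign_extend((byte >> 4) & 0xF)


if __name__ == "__main__":
    print(matmul_by_outer_products([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]]))
    print(unpack_int4(pack_int4(-3, 5)))
```

Storing weights this way takes a quarter of the memory of 16-bit values, which matters as much as raw compute on a bandwidth-limited phone.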

What’s quite interesting about the implementation of SME2 is that, unlike Arm’s NEON and SVE extensions that are built into the CPU, SME2 sits outside the core, almost like a separate accelerator. However, each of the CPU cores in the C1 series can decode SME2 instructions, making it essentially a shared execution unit. There are two immediate benefits: the unit can shut down entirely when not in use, and you don’t end up with oversized CPUs carrying an internal SME2 unit they might rarely use anyway. Another perk of this approach is that both high-end and budget CPU cluster configurations can offer similar SME2 capabilities more easily. One of Arm’s high-end Lumex CSS Platform examples pairs eight CPU cores with two C1-SME2 units, and there’s no reason a smaller CPU setup couldn’t also offer very similar SME2 capabilities, albeit with slightly weaker instruction dispatch capabilities.
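To picture the arrangement, the toy model below treats the SME2 unit as a shared resource: any core can hand it work, the unit processes one job per time step, and it sits power-gated whenever its queue is empty. The class, method, and core labels are hypothetical, and the simple first-come, first-served queue ignores whatever arbitration policy Arm actually uses.

```python
from collections import deque


class SharedSme2Unit:
    """Toy model of one matrix unit shared by several CPU cores."""

    def __init__(self) -> None:
        self.queue: deque = deque()
        self.powered_steps = 0
        self.gated_steps = 0
        self.completed: list[tuple[str, str]] = []

    def submit(self, core: str, job: str) -> None:
        """A core decodes an SME2 instruction and forwards the work to the shared unit."""
        self.queue.append((core, job))

    def step(self) -> None:
        """Advance one time step: run one queued job, or stay power-gated if idle."""
        if self.queue:
            self.powered_steps += 1
            self.completed.append(self.queue.popleft())
        else:
            self.gated_steps += 1


if __name__ == "__main__":
    unit = SharedSme2Unit()
    unit.submit("C1-Ultra#0", "matmul tile")
    unit.submit("C1-Pro#3", "int8 dot products")
    for _ in range(5):
        unit.step()
    print(unit.completed, unit.powered_steps, unit.gated_steps)
```

Two busy steps and three gated ones: the unit only draws power while there is matrix work to do, which is the efficiency argument for keeping SME2 outside the cores.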

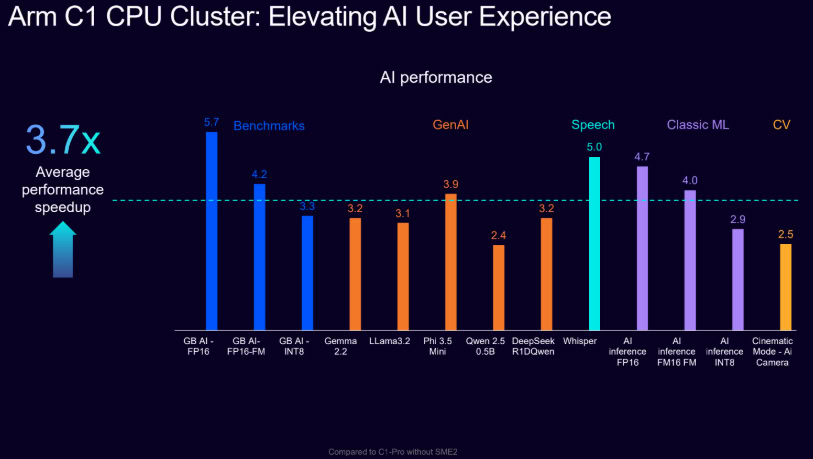

SME2 isn’t going to let your phone run a huge 20-billion-parameter chat model, but it will speed up running smaller models and AI tools directly on future phone CPUs. Arm claims a 4.7x latency reduction in speech recognition use cases, 4.7x faster token encoding for Gemma 3, a 2.8x speedup for Stable Audio generation, and an average 3.7x performance jump across a selection of other workloads compared to the same C1-Pro CPU core without SME2. Context is important here, and many AI use cases will still run an order of magnitude slower even on an SME2 CPU compared to a dedicated NPU or GPU setup.

Still, SME2 is enabled in Google’s XNNPACK library for Android and is supported across multiple frameworks like llama.cpp, Alibaba’s MNN, and Microsoft’s ONNX Runtime, where swathes of machine learning development are taking place. Likewise, developers already using Arm’s KleidiAI software library (which integrates with these frameworks) will automatically take advantage of SME2 hardware once it becomes available in Android smartphones. So, future phones will get a “free” boost in AI use cases that can’t tap into their NPU or GPU, provided partners implement SME2, of course, which is not guaranteed.
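That “free” boost depends on software detecting SME2 at runtime and switching kernels accordingly. On arm64 Linux (and therefore Android), the kernel lists CPU features on the `Features` line of `/proc/cpuinfo`, where SME2 shows up as `sme2` on kernels that support it. The sketch below parses that text and picks a kernel. It is not how KleidiAI or XNNPACK are implemented (native code typically checks the hardware capability bits via `getauxval`), and the dispatcher and kernel labels are hypothetical.

```python
from pathlib import Path


def cpu_features(cpuinfo_text: str) -> set[str]:
    """Collect the feature flags listed on 'Features' lines of /proc/cpuinfo."""
    features: set[str] = set()
    for line in cpuinfo_text.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Features":
            features.update(value.split())
    return features


def pick_matmul_kernel(features: set[str]) -> str:
    """Hypothetical dispatcher: prefer SME2, then SVE2, then plain NEON (asimd)."""
    if "sme2" in features:
        return "sme2"
    if "sve2" in features:
        return "sve2"
    return "neon"


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    text = path.read_text() if path.exists() else ""
    print(pick_matmul_kernel(cpu_features(text)))
```

Note the fallback path: on a chipset that omits SME2, the same code keeps running its SVE2 or NEON kernels, which is why the boost hinges on partners actually shipping the unit.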

Ray tracing and machine learning on your GPU

Robert Triggs / Android Authority

Arm’s new Mali G1-Ultra graphics processor sports some similarly solid yearly upgrades. In a 14-core comparison against last year’s Immortalis-G925, the G1-Ultra boasts 20% better performance for games and machine learning inference, 9% less energy per frame, and up to 2x faster ray tracing. A good chunk of Arm’s performance improvements this year comes from Image Region Dependencies, which allow the GPU to avoid redundant work, skip waiting on unrelated tiles, and improve memory usage. The new GPU also sports improved on-chip interconnects to double the bandwidth and cache, reducing congestion and improving throughput. Keeping the cores busy, in other words.
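Arm hasn’t published how Image Region Dependencies works internally, but the general idea of region-level dependency tracking can be sketched: if a render pass only reads part of a previous pass’s output, only the tiles overlapping that region need to wait. The tile size, screen size, and function names below are hypothetical.

```python
Region = tuple[int, int, int, int]  # (x0, y0, x1, y1) in pixels, x1/y1 exclusive
Tile = tuple[int, int]              # (tile_x, tile_y)


def tiles_touching(region: Region, tile_size: int) -> set[Tile]:
    """Return the indices of every tile that a pixel rectangle overlaps."""
    x0, y0, x1, y1 = region
    if x1 <= x0 or y1 <= y0:
        return set()
    return {
        (tx, ty)
        for tx in range(x0 // tile_size, (x1 - 1) // tile_size + 1)
        for ty in range(y0 // tile_size, (y1 - 1) // tile_size + 1)
    }


def must_wait(consumer_read: Region, producer_pending: set[Tile], tile_size: int) -> set[Tile]:
    """Tiles the next pass must wait on: only unfinished producer tiles it actually reads."""
    return tiles_touching(consumer_read, tile_size) & producer_pending


if __name__ == "__main__":
    pending = {(0, 0), (1, 1)}  # producer pass still writing two of four 32x32 tiles
    print(must_wait((0, 0, 32, 32), pending, 32))   # reads a pending tile, so it waits
    print(must_wait((32, 0, 64, 32), pending, 32))  # reads only finished tiles, so it proceeds
```

Without region tracking, the second read would have to wait for the entire previous pass; with it, that work can start immediately.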

That 2x faster ray tracing potential is clearly the big winner here. Arm has achieved this by supporting BVH traversal in hardware for the first time and by processing one ray at a time rather than using a packed-ray approach. Parallel processing of packed rays matters less when the algorithm runs on a dedicated unit, and a single-ray algorithm is simpler for low-memory systems, even though it doesn’t benefit from the cache efficiency of processing nearby rays together. As Arm has combined ray casting and intersection testing into the same structure, the RTU can be power-gated when not in use, improving power efficiency. However, I suspect the RTU now takes up slightly more space as the trade-off.
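To show what single-ray BVH traversal means, here is a minimal software version: one ray walks a bounding volume hierarchy with its own explicit stack, using the standard slab test to skip any box it cannot hit. This is the textbook algorithm that dedicated ray tracing hardware accelerates, not Arm’s RTU design; the node layout and names are assumptions for illustration.

```python
import math
from dataclasses import dataclass, field
from typing import Optional

Vec3 = tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3                                   # box minimum corner
    hi: Vec3                                   # box maximum corner
    children: list["Node"] = field(default_factory=list)
    prim_id: Optional[int] = None              # set on leaf nodes only


def hits_box(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3) -> bool:
    """Slab test: does origin + t * direction (t >= 0) pass through the box?"""
    t_near, t_far = 0.0, math.inf
    for axis in range(3):
        o, d = origin[axis], direction[axis]
        if d == 0.0:
            # Ray runs parallel to this pair of slabs: a hit needs the origin between them.
            if o < lo[axis] or o > hi[axis]:
                return False
            continue
        t1 = (lo[axis] - o) / d
        t2 = (hi[axis] - o) / d
        t_near = max(t_near, min(t1, t2))
        t_far = min(t_far, max(t1, t2))
    return t_near <= t_far


def traverse(root: Node, origin: Vec3, direction: Vec3) -> list[int]:
    """Walk the BVH for a single ray; return ids of the leaf boxes it passes through."""
    hits: list[int] = []
    stack = [root]  # each ray carries its own traversal stack
    while stack:
        node = stack.pop()
        if not hits_box(origin, direction, node.lo, node.hi):
            continue  # miss: skip this node's entire subtree
        if node.prim_id is not None:
            hits.append(node.prim_id)  # a real tracer would now test this leaf's triangles
        else:
            stack.extend(node.children)
    return hits


if __name__ == "__main__":
    leaf_a = Node((2.0, -1.0, -1.0), (3.0, 1.0, 1.0), prim_id=0)
    leaf_b = Node((2.0, 5.0, -1.0), (3.0, 6.0, 1.0), prim_id=1)
    root = Node((0.0, -2.0, -2.0), (4.0, 7.0, 2.0), children=[leaf_a, leaf_b])
    print(traverse(root, (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)))
```

Because each ray keeps its own stack, neighbouring rays can follow completely different paths through the tree. That flexibility is what the single-ray approach buys, at the cost of the shared memory accesses a packet of coherent rays would enjoy.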

Obviously, the performance benefit depends entirely on how much ray tracing workload is in the scene. With only a handful of ray tracing titles, and even fewer featuring heavy ray tracing elements, real-world performance gains might be in the region of 40% rather than 2x. This is the uplift figure Arm quotes for its in-house Lumilings RT benchmark built on Unreal Engine 5, but even here, the gain over last-gen software ray tracing is lower than 2x. The benchmark clocks in at a 37.5 fps average, but we saw dips below 24 fps, so mileage clearly varies. I’d definitely take the 2x claim with a huge pinch of salt when it comes to real workloads.
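The gap between “2x faster ray tracing” and a much smaller whole-frame gain is Amdahl’s law at work: only the ray tracing share of the frame time speeds up. The sketch below computes the overall speedup for a given ray tracing fraction and inverts the formula to find the fraction needed for a target gain. The fractions plugged in are hypothetical.

```python
def frame_speedup(rt_fraction: float, rt_speedup: float) -> float:
    """Amdahl's law: overall speedup when only rt_fraction of frame time gets faster."""
    if not 0.0 <= rt_fraction <= 1.0:
        raise ValueError("rt_fraction must be between 0 and 1")
    return 1.0 / ((1.0 - rt_fraction) + rt_fraction / rt_speedup)


def rt_fraction_for(target_speedup: float, rt_speedup: float) -> float:
    """Inverse of Amdahl's law: RT share of frame time needed to reach target_speedup."""
    return (1.0 - 1.0 / target_speedup) / (1.0 - 1.0 / rt_speedup)


if __name__ == "__main__":
    print(f"{frame_speedup(0.25, 2.0):.3f}x overall when RT is a quarter of the frame")
    print(f"{rt_fraction_for(1.4, 2.0):.0%} of the frame must be RT for a 1.4x gain")
```

In other words, a 2x faster ray tracing unit only delivers a 40% frame-rate gain if ray tracing already eats roughly 57% of the frame, and a scene where it takes a quarter of the frame would gain only about 14%.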

As before, the G1 GPU comes in a few different branding flavors, depending on the number of cores. A Mali G1 GPU with 10 or more cores and ray-tracing support is a G1-Ultra, 6 to 9 cores make a G1-Premium, and a G1-Pro with 1 to 5 cores is a small configuration you’ll likely find in budget chipsets.
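The naming rule boils down to a simple lookup on core count. The sketch below encodes only the core-count ranges given in this article; it ignores any other criteria Arm may apply, and the function name is hypothetical.

```python
def mali_g1_tier(cores: int) -> str:
    """Map a Mali G1 core count to its branding tier (ranges as described above)."""
    if cores >= 10:
        return "Mali G1-Ultra"
    if cores >= 6:
        return "Mali G1-Premium"
    if cores >= 1:
        return "Mali G1-Pro"
    raise ValueError("a Mali G1 configuration needs at least one core")


if __name__ == "__main__":
    for count in (14, 8, 4):
        print(count, mali_g1_tier(count))
```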

What will next-gen mobile SoCs look like?

As in previous years, the exact chipsets that Arm’s latest components end up in depend heavily on what partners prioritize. We could see more top-heavy designs like MediaTek’s recent Dimensity models, while others stick to a more familiar scaled cluster approach. We’ll just have to wait for next-gen announcements.

That said, Arm’s internal Lumex Reference FPGA platform hints at what it considers a top-end mobile configuration. Two 4.1GHz C1-Ultra cores paired with six 3.5GHz C1-Pro cores, with two SME2 units and a 16MB L3 cache, make for a powerhouse setup that doesn’t use any little cores. Combined with a 14-core Mali G1-Ultra with 4MB of L2 cache and 16MB of system-level cache, all built on 3nm, this would be a pretty large and memory-heavy design. Partners have historically been more conservative on cache size for cost reasons, but heaps of memory help maximize the potential of Arm’s latest CPU and GPU cores.

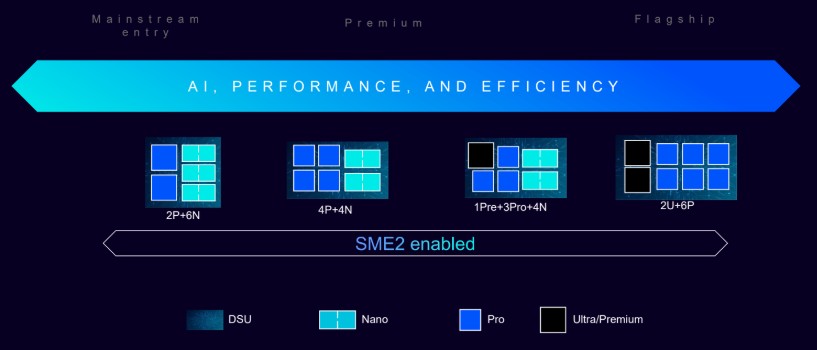

For near-flagship chipsets, Arm suggests that partners could swap the C1-Ultra for the C1-Premium for a more cost-effective, area-conscious design at the expense of some single-threaded performance. This would likely be paired with a slightly smaller GPU configuration, but could still support ray tracing and SME2 for AI. For mid-tier chipsets, Arm typically envisions a single Ultra or Premium core paired with three Pro cores and four Nano cores, with two Pro and six Nano cores catering to a mainstream price point. Any of these configurations can be paired with SME2 for a machine learning uplift, but I imagine that very silicon-budget-conscious low-end chipsets will opt out and pick much smaller Mali G1 configurations as well.
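The example layouts described above can be written down as plain data, which makes the trade-offs easier to compare side by side. The tier counts come from the text; the near-flagship entry assumes the Premium swap keeps the reference platform’s 2+6 layout, the mid-tier entry picks the Ultra option (a Premium core would be equally valid), and the class itself is hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuCluster:
    """Core counts per C1 tier for one example configuration."""
    name: str
    ultra: int = 0
    premium: int = 0
    pro: int = 0
    nano: int = 0

    @property
    def total_cores(self) -> int:
        return self.ultra + self.premium + self.pro + self.nano

    def describe(self) -> str:
        tiers = (("Ultra", self.ultra), ("Premium", self.premium),
                 ("Pro", self.pro), ("Nano", self.nano))
        parts = [f"{count}x C1-{tier}" for tier, count in tiers if count]
        return f"{self.name}: {' + '.join(parts)} ({self.total_cores} cores)"


EXAMPLES = [
    CpuCluster("Lumex reference (FPGA)", ultra=2, pro=6),
    CpuCluster("Near-flagship", premium=2, pro=6),  # assumption: same 2+6 layout
    CpuCluster("Mid-tier", ultra=1, pro=3, nano=4),  # or premium=1 instead of ultra=1
    CpuCluster("Mainstream", pro=2, nano=6),
]

if __name__ == "__main__":
    for cluster in EXAMPLES:
        print(cluster.describe())
```

Every example lands on eight cores; what changes between price points is how much of that budget goes to big, area-hungry cores versus small, efficient ones.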

We can expect next-gen smartphone chips based on Arm’s C1 and G1 technologies to offer robust performance uplifts for general tasks and very welcome power efficiency gains. The bigger wins, however, lie in the niches of the optional machine learning and ray-tracing components, and consumers remain less sold on those areas than industry chiefs are. As for chipsets, I anticipate that the MediaTek Dimensity 9500 will be the first flagship SoC to sport Arm’s new C1 CPU cores and the new G1-Ultra GPU. There’s a chance that next year’s Google Tensor G6 will also jump straight to the C1, albeit in a more moderate 1+6 configuration and with a different vendor’s GPU, but that announcement is essentially a year away.
