Google DeepMind has introduced EmbeddingGemma, a 308M-parameter open embedding model designed to run efficiently on-device. The model aims to make applications such as retrieval-augmented generation (RAG), semantic search, and text classification available without a server or internet connection.

The model is built with Matryoshka representation learning, allowing embeddings to be truncated to smaller vectors, and uses Quantization-Aware Training for efficiency. According to Google, inference can be done in under 15ms for short inputs on EdgeTPU hardware.
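To illustrate the truncation idea, here is a minimal Python sketch rather than Google's implementation: it keeps the leading components of a vector and rescales the result to unit length, a common way to shorten Matryoshka-style embeddings. The function name `truncate_embedding` and the toy four-value vector are assumptions for illustration; a real embedding would come from the model itself.

```python
import math


def truncate_embedding(vec, dim):
    """Keep the first `dim` components and rescale them to unit length."""
    if not 0 < dim <= len(vec):
        raise ValueError("dim must be between 1 and len(vec)")
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0.0:
        # An all-zero prefix cannot be normalized; return it unchanged.
        return list(head)
    return [x / norm for x in head]


# Toy stand-in for a full-size embedding (hypothetical values).
full = [0.5, 0.5, 0.5, 0.5]
small = truncate_embedding(full, 2)
print(len(small), small)
```

Re-normalizing after truncation keeps cosine and dot-product comparisons on the same scale across vector sizes.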

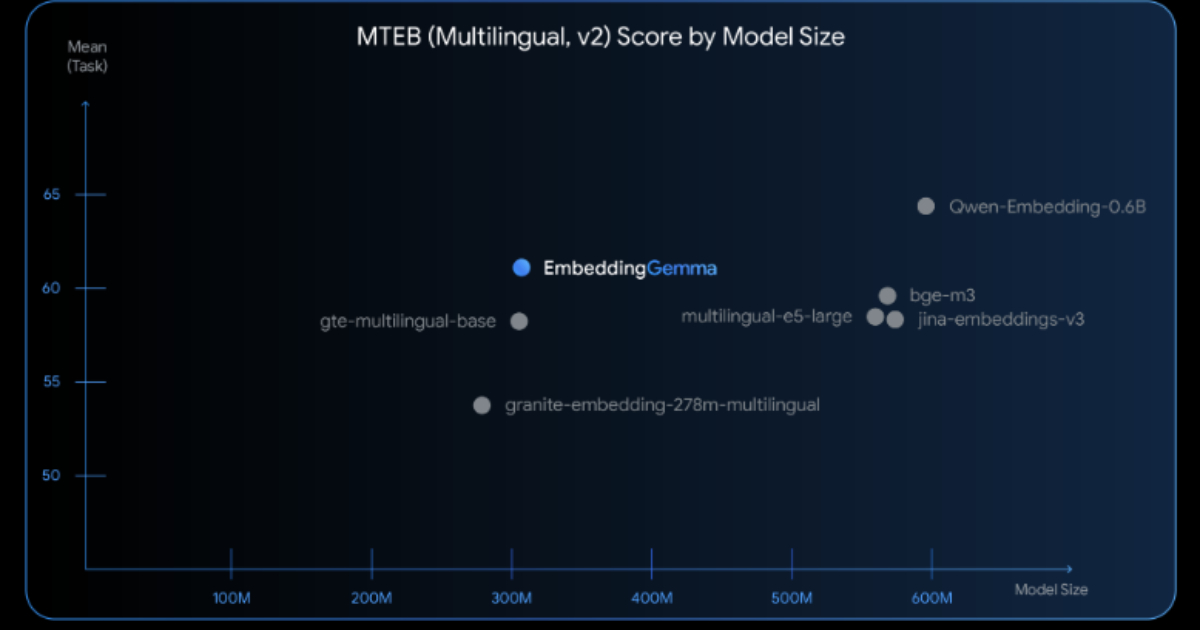

Despite its compact size, EmbeddingGemma ranks as the highest-performing open multilingual embedding model under 500M parameters on the Massive Text Embedding Benchmark (MTEB). It supports over 100 languages and can operate in less than 200MB of RAM when quantized. Developers can also adjust output dimensions (from 768 to 128) for different speed and storage trade-offs, while maintaining quality.
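The storage side of that trade-off can be estimated with simple arithmetic. The sketch below assumes vectors are stored as 32-bit floats (4 bytes per value) and uses a hypothetical corpus of 100,000 documents; the 768 and 128 endpoints come from the article, while the intermediate sizes 512 and 256 are included only for illustration.

```python
BYTES_PER_FLOAT32 = 4  # assumption: embeddings stored as float32


def index_size_mb(num_vectors, dim, bytes_per_value=BYTES_PER_FLOAT32):
    """Raw size in megabytes of `num_vectors` embeddings with `dim` values each."""
    return num_vectors * dim * bytes_per_value / 1_000_000


NUM_DOCS = 100_000  # hypothetical corpus size

for dim in (768, 512, 256, 128):
    print(f"{dim:>4} dims: {index_size_mb(NUM_DOCS, dim):.1f} MB")
```

Under these assumptions the raw index shrinks from roughly 307 MB at 768 dimensions to about 51 MB at 128, a sixfold reduction before any index overhead or compression.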

Source: Google Blog

EmbeddingGemma is intended for offline and privacy-sensitive scenarios, such as searching across personal files locally, running mobile RAG pipelines with Gemma 3n, or building domain-specific chatbots. Developers can fine-tune the model for specialized tasks, and it is already integrated with tools such as Transformers.js, llama.cpp, MLX, Ollama, LiteRT, and LM Studio.

On Reddit, users discussed the role of embeddings in practice:

> What do people actually use embedding models for? I knew the applications, but how does it specifically help with them?

User igorwarzocha replied:

> Apart from obvious search engines, you can place it between a larger model and your database as a helper model. A few coding apps have this functionality. unsure if this actually helps or confuses the LLM even more.
>
> I tried using it as a “matcher” for description vs keywords (or the other way round, can’t remember) to match an image from the generic assets library to the entry, without having to do it manually. It kind of worked, but I went with bespoke generated imagery instead.
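A "matcher" of this kind usually comes down to comparing embedding vectors by cosine similarity and picking the closest candidate. The following Python sketch shows the idea with hand-written three-value vectors standing in for real embeddings; the file names, the vector values, and the helper names `cosine` and `best_match` are all hypothetical, and this is not the commenter's code.

```python
import math


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def best_match(query_vec, candidates):
    """Return (name, score) for the candidate most similar to query_vec."""
    scored = ((name, cosine(query_vec, vec)) for name, vec in candidates.items())
    return max(scored, key=lambda pair: pair[1])


# Toy vectors standing in for embeddings of asset keywords (hypothetical).
assets = {
    "beach.png": [0.9, 0.1, 0.0],
    "office.png": [0.1, 0.9, 0.1],
    "forest.png": [0.0, 0.2, 0.9],
}
entry = [0.8, 0.2, 0.1]  # stand-in for the embedding of an entry description

name, score = best_match(entry, assets)
print(name, round(score, 3))
```

In a real pipeline the vectors would be produced by the embedding model for each description and keyword set, and the same ranking step would apply unchanged.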

Google also suggests EmbeddingGemma for offline assistants and industry-specific chatbots where privacy is a concern. Because the model processes data locally, sensitive information such as emails or business documents does not need to leave the device. Fine-tuning can further adapt the model to specific domains or languages.

With this release, Google positions EmbeddingGemma as a complement to its larger server-side Gemini Embedding model, offering developers a choice between offline, efficient embeddings for local applications and higher-capacity embeddings served through the Gemini API for large-scale deployments.