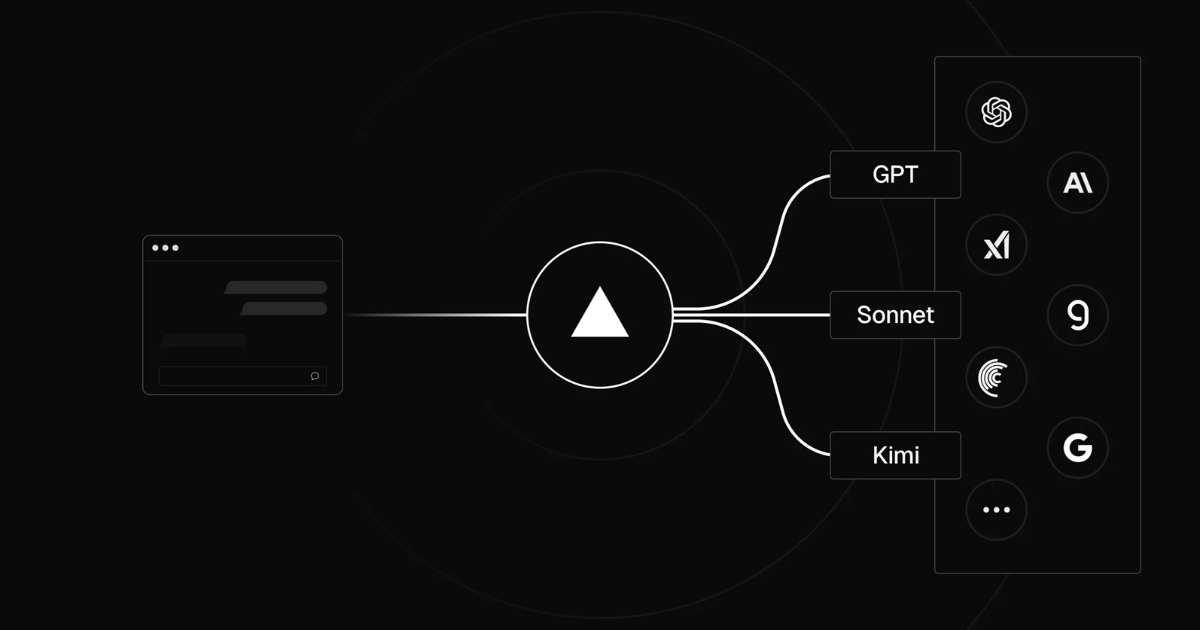

Vercel has made its AI Gateway generally available for production workloads. The service provides a single API endpoint for accessing a wide range of large language and generative models, with the aim of simplifying integration and management for developers.

The AI Gateway lets applications send inference requests to multiple model providers through one endpoint. It supports bring-your-own-key (BYOK) authentication, so developers can use their own API keys from providers such as OpenAI, Anthropic, or Google without an additional markup on tokens. The gateway also routes requests with under 20 milliseconds of routing latency, which is intended to keep inference times consistent regardless of the underlying provider.
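To make the single-endpoint and BYOK ideas concrete, here is a minimal sketch of how a gateway might pick a provider and credential for each request. It assumes model identifiers of the form `provider/model` (for example `openai/gpt-4o`); the function `resolveRoute` and the `Credential` and `Route` types are hypothetical illustrations, not Vercel's API or implementation.

```typescript
// Hypothetical sketch: dispatch a request by its "provider/model" identifier
// and choose between the developer's own key (BYOK) and a gateway-managed one.
// None of these names come from Vercel's API.

type Credential = { kind: "byok"; apiKey: string } | { kind: "gateway" };

interface Route {
  provider: string;
  model: string;
  credential: Credential;
}

function resolveRoute(modelId: string, byokKeys: Record<string, string>): Route {
  const slash = modelId.indexOf("/");
  // Reject identifiers with no provider prefix or no model name.
  if (slash <= 0 || slash === modelId.length - 1) {
    throw new Error(`Expected "provider/model", got "${modelId}"`);
  }
  const provider = modelId.slice(0, slash);
  const model = modelId.slice(slash + 1);
  // Prefer the developer's own key for this provider when one is configured.
  const hasKey = Object.prototype.hasOwnProperty.call(byokKeys, provider);
  const credential: Credential = hasKey
    ? { kind: "byok", apiKey: byokKeys[provider] }
    : { kind: "gateway" };
  return { provider, model, credential };
}

// Example usage: only an Anthropic key is configured.
const keys: Record<string, string> = { anthropic: "sk-ant-example" };
console.log(resolveRoute("anthropic/claude-sonnet-4", keys).credential.kind); // prints: byok
console.log(resolveRoute("openai/gpt-4o", keys).credential.kind); // prints: gateway
```

The model names and key value above are placeholders; the point is only that one endpoint can fan out to many providers by reading the identifier, while the caller's key, when present, is passed through instead of a gateway credential.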

One of the system's core features is its failover mechanism. If a model provider experiences downtime, the gateway automatically redirects requests to an available alternative, reducing service interruptions. It also supports high request throughput, with rate limits sized for production traffic.
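The failover behavior described above can be illustrated with a small sketch: try each candidate provider in order and return the first successful response. This shows the general pattern only, under the assumption that each provider is exposed as an async function; `completeWithFailover` and the `Provider` type are hypothetical and not how Vercel implements its gateway.

```typescript
// Hypothetical sketch of ordered provider failover. Not Vercel's implementation.

type Provider = {
  name: string;
  complete: (prompt: string) => Promise<string>;
};

interface FailoverResult {
  provider: string;
  text: string;
  failures: { provider: string; error: string }[];
}

async function completeWithFailover(
  providers: Provider[],
  prompt: string,
): Promise<FailoverResult> {
  const failures: { provider: string; error: string }[] = [];
  for (const p of providers) {
    try {
      const text = await p.complete(prompt);
      // First success wins; earlier failures are reported for observability.
      return { provider: p.name, text, failures };
    } catch (err) {
      // Record the failure and fall through to the next provider.
      failures.push({ provider: p.name, error: err instanceof Error ? err.message : String(err) });
    }
  }
  throw new Error(
    `All providers failed: ${failures.map((f) => `${f.provider} (${f.error})`).join(", ")}`,
  );
}

// Example usage with two fake providers: the first one is "down".
const down: Provider = {
  name: "primary",
  complete: async () => {
    throw new Error("503 Service Unavailable");
  },
};
const up: Provider = { name: "backup", complete: async (prompt) => `echo: ${prompt}` };

completeWithFailover([down, up], "hello").then((r) => {
  console.log(r.provider, r.text); // prints: backup echo: hello
});
```

A production gateway would also have to decide which errors are worth retrying elsewhere (a malformed request will fail on every provider) and whether a fallback may switch to a different model or only to another provider serving the same one, which is the distinction one developer asks about below.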

Observability is built into the platform. Developers have access to detailed logs, performance metrics, and cost tracking for each request. This data can be used to analyze usage patterns, monitor response times, and understand how costs are distributed across model providers. Developers can integrate the gateway through the AI SDK, where a request selects a model by specifying its identifier in the configuration.
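As an illustration of the kind of analysis per-request data enables, the sketch below groups request records by provider and computes request counts, total cost, and average latency. The `RequestLog` shape is an assumption made for this example, not Vercel's log schema, and it assumes model identifiers of the form `provider/model`.

```typescript
// Hypothetical sketch: summarize per-request logs by provider.
// RequestLog is an assumed shape, not Vercel's actual log format.

interface RequestLog {
  model: string; // assumed "provider/model", e.g. "openai/gpt-4o"
  latencyMs: number;
  costUsd: number;
}

interface ProviderSummary {
  requests: number;
  totalCostUsd: number;
  avgLatencyMs: number;
}

function summarizeByProvider(logs: RequestLog[]): Record<string, ProviderSummary> {
  const summary: Record<string, ProviderSummary> = {};
  for (const log of logs) {
    // The provider is the part of the identifier before the first "/".
    const provider = log.model.split("/")[0];
    if (!Object.prototype.hasOwnProperty.call(summary, provider)) {
      summary[provider] = { requests: 0, totalCostUsd: 0, avgLatencyMs: 0 };
    }
    const entry = summary[provider];
    entry.requests += 1;
    entry.totalCostUsd += log.costUsd;
    // Update the running mean so no second pass over the logs is needed.
    entry.avgLatencyMs += (log.latencyMs - entry.avgLatencyMs) / entry.requests;
  }
  return summary;
}

// Example usage with made-up records.
const logs: RequestLog[] = [
  { model: "openai/gpt-4o", latencyMs: 400, costUsd: 0.5 },
  { model: "openai/gpt-4o-mini", latencyMs: 200, costUsd: 0.25 },
  { model: "anthropic/claude-sonnet-4", latencyMs: 600, costUsd: 1 },
];
const summary = summarizeByProvider(logs);
console.log(summary["openai"]); // prints: { requests: 2, totalCostUsd: 0.75, avgLatencyMs: 300 }
```

The figures here are invented for the example; in practice the gateway's own dashboards and logs would be the source of such numbers.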

Vercel noted that the AI Gateway has been used internally to run v0.app, a service with millions of users. With this release, the same infrastructure is available to external developers, optimized for production workloads.

A comparable service is OpenRouter, which also provides a unified interface to different AI model providers. While OpenRouter emphasizes model discovery and pricing transparency across vendors, Vercel’s AI Gateway focuses on low-latency routing, built-in failover, and integration with the company’s existing developer tools and hosting environment. Both services share the goal of simplifying access to multiple models, but they approach developer experience and infrastructure reliability from different angles.

Developer comments on X and Reddit mix praise for the gateway's simplicity and flexibility with some frustration over free-tier limits and specific model integrations.

Filipe Sommer, Tech Lead at eToro, posted:

Awesome! Could you please elaborate on the failover? Cannot find much about it in this blogpost nor in the docs. Does that mean that when, say, a Gemini model is unavailable, it can retry automatically with another model?

Meanwhile, AI specialist Himanshu Kumar commented:

Democratizing AI access while prioritizing speed and reliability — a significant leap forward. This could empower a new wave of AI-driven applications.

Developer Melvin Arias raised a practical concern, asking:

How does the pricing compare to openrouter?

With the general availability release, Vercel positions AI Gateway as part of the broader ecosystem of tools for building AI-powered applications, focusing on routing, reliability, and monitoring rather than on providing proprietary models of its own.