During AI Week 2025, Cloudflare announced Application Confidence Scores, an automated assessment system designed to help organizations evaluate the safety and security of third-party AI applications at scale.

The new scoring system is intended to address the growing challenges of “Shadow IT” and “Shadow AI,” in which employees use unapproved generative AI tools that may expose sensitive corporate data to security risks or retain user data for extended periods under lax security practices.

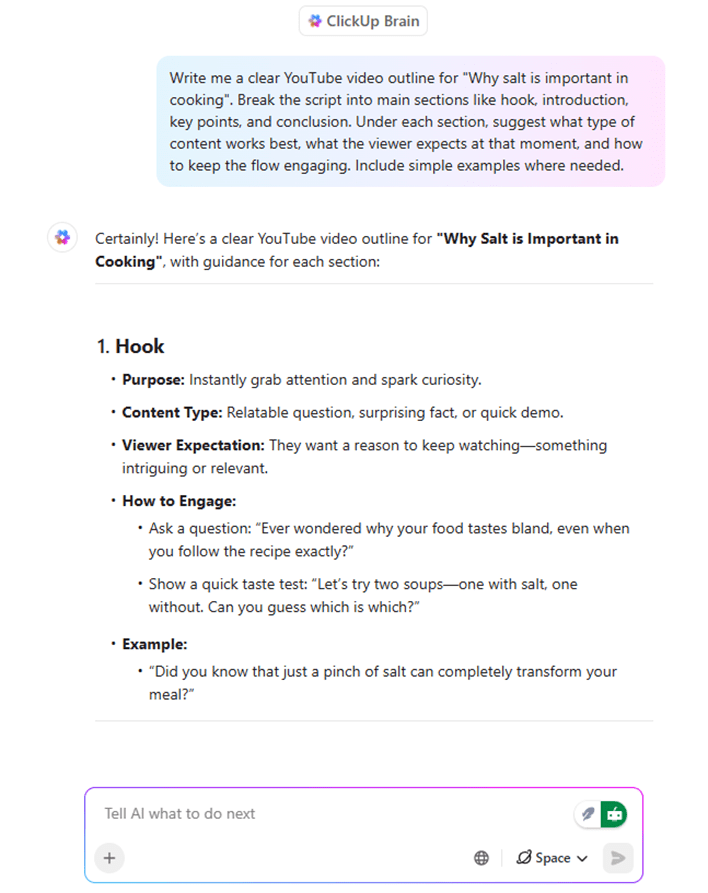

The system provides two separate 1-to-5 ratings for each AI application: the Application Confidence Score, which measures general SaaS maturity, and the Gen-AI Confidence Score, which focuses on risks specific to generative AI. Ayush Kumar, senior product manager at Cloudflare, and Sharon Goldberg, product director at Cloudflare and formerly founder of BastionZero, explain how the scoring system could help security teams define access policies for AI at scale. They write:

Scores are not based on vibes or black-box “learning algorithms” or “artificial intelligence engines”. We avoid subjective judgments or large-scale red-teaming as those can be tough to execute reliably and consistently over time. Instead, scores will be computed against an objective rubric that we describe in detail in this blog. Our rubric will be publicly maintained and kept up to date in the Cloudflare developer docs.
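As an illustration of how a security team might turn the two ratings into an access policy, the following is a minimal sketch. The thresholds, the `Action` values, and the `access_action` function are hypothetical and are not part of any Cloudflare API; the only detail taken from the announcement is that each application receives two scores on a 1-to-5 scale.

```python
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REVIEW = "review"
    BLOCK = "block"


def access_action(
    app_score: float,
    genai_score: float,
    allow_at: float = 4.0,     # assumed threshold, not from Cloudflare
    block_below: float = 2.0,  # assumed threshold, not from Cloudflare
) -> Action:
    """Pick an access decision from the two 1-to-5 confidence scores.

    The decision is driven by the weaker of the two scores, so that a
    mature SaaS product with poor Gen-AI practices is not waved through.
    """
    for score in (app_score, genai_score):
        if not 1.0 <= score <= 5.0:
            raise ValueError(f"score out of range 1-5: {score}")

    weakest = min(app_score, genai_score)
    if weakest < block_below:
        return Action.BLOCK
    if weakest >= allow_at:
        return Action.ALLOW
    return Action.REVIEW
```

Using the lower of the two scores is one possible design choice; a team could just as well weight the scores differently or apply separate thresholds to each.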

Among the criteria used to calculate the Application Confidence Score, the article highlights regulatory compliance (SOC 2, GDPR, ISO 27001), data management practices, security controls, and financial stability (to evaluate the long-term viability of the company behind the application). The Gen-AI Confidence Score focuses on the deployment security model, the availability of model cards, and whether the application trains on user prompts.

Source: Cloudflare blog

Walter Haydock, founder at StackAware, comments:

Cloudflare’s new “Application Confidence Score for AI” takes into account ISO 42001. I’d be interested as to how they determine this, because the blog post mentions both “certification” and “compliance,” which aren’t necessarily the same.

Each component of the rubric for security and compliance practices is based on publicly available data, including privacy policies, security documentation, compliance certifications, model cards, and incident reports. No points are assigned when no data is available. According to Cloudflare, crawlers collect the public information, and AI is used only for extraction and for computing the scores, within an automated system that incorporates human oversight to improve accuracy.
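The rubric-based approach can be sketched in a few lines of code. The example below is only an illustration under stated assumptions: the criterion names loosely follow the categories mentioned in the article, but the point values and the linear mapping onto the 1-to-5 scale are invented here and do not reproduce Cloudflare's published rubric. The one rule taken directly from the description is that a criterion with no public data earns no points.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Criterion:
    name: str
    max_points: int


# Hypothetical criteria and weights, NOT Cloudflare's actual rubric.
RUBRIC = [
    Criterion("regulatory_compliance", 3),
    Criterion("data_management", 3),
    Criterion("security_controls", 3),
    Criterion("financial_stability", 1),
]


def confidence_score(evidence: dict[str, Optional[int]]) -> float:
    """Map earned rubric points onto a 1-to-5 scale (assumed linear).

    `evidence` holds the points extracted from public sources for each
    criterion; a missing key or None means no public data was found.
    """
    total = sum(c.max_points for c in RUBRIC)
    earned = 0
    for criterion in RUBRIC:
        points = evidence.get(criterion.name)
        if points is None:
            continue  # no public data available: no points assigned
        # Clamp to the criterion's range so bad input cannot inflate the score.
        earned += max(0, min(points, criterion.max_points))
    return round(1 + 4 * earned / total, 1)
```

For example, an application with full marks on compliance, two of three points on security controls, and no public data on the other criteria would earn 5 of 10 points and land in the middle of the scale.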

So far, the team has only described how the scoring logic works; the scores are not yet used by any managed service. They will be made available as part of the new suite of AI Security Posture Management (AI-SPM) features in the Cloudflare One SASE platform, but no date has been announced, as Kumar and Goldberg confirm:

Today, we’re just releasing our scoring rubric in order to solicit feedback from the community. But soon, you’ll start seeing these Cloudflare Application Confidence Scores integrated into the Application Library in our SASE platform. Customers can simply click or hover over any score to reveal a detailed breakdown of the rubric and underlying components of the score.

During AI Week 2025, Cloudflare also announced other features intended to secure access to AI: shadow AI dashboards, which provide a data-driven view of the AI tools employees are using; API CASB controls for AI, which detect risky configurations, data loss, and security issues; and MCP Server Portals, which let teams observe every MCP connection in an organization. Furthermore, AI prompt protection is now in beta, supporting ChatGPT, Claude, Gemini, and Perplexity.