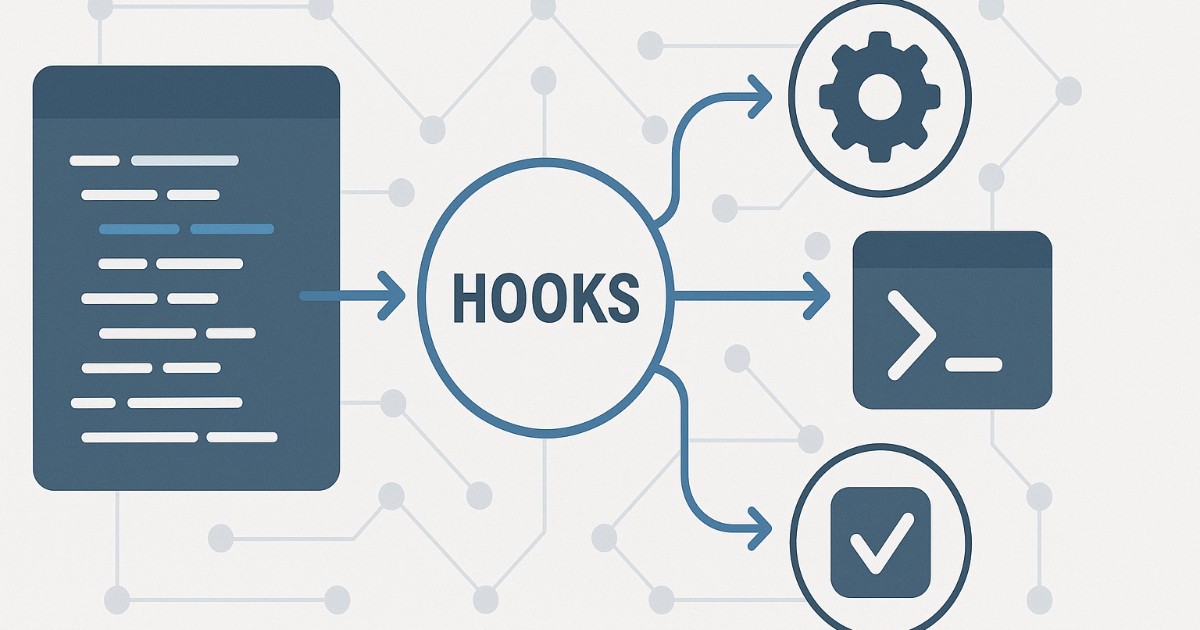

Cursor has introduced a Hooks system in version 1.7 that allows developers to intercept and modify agent behavior at defined lifecycle events. Hooks can be used to block shell commands, run formatters after edits, or observe agent actions in real time.

Early feedback has been cautiously positive: developers welcomed the added control and extensibility and expressed interest in using hooks for guardrails, auditing, and workflow automation.

However, adoption has been limited so far, and users have reported documentation gaps, occasional instability, and a need for clearer guidance on safe usage.

Cursor Hooks allow external scripts to run at defined stages of the agent loop. Each hook is configured in a JSON file and runs as a standalone process: it receives structured JSON input over stdin and writes its response back to Cursor on stdout.

Supported lifecycle events include beforeShellExecution, beforeMCPExecution, beforeReadFile, afterFileEdit, and stop, among others.
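The process model above can be illustrated with a small Python script that reads one JSON payload from stdin, routes it by event name, and writes a JSON reply to stdout. This is a sketch under stated assumptions, not Cursor's reference code: the `hook_event_name` field and the `{"permission": "allow"}` reply shape reflect our reading of the beta documentation and may change, and the handler names are hypothetical.

```python
#!/usr/bin/env python3
"""Minimal Cursor hook skeleton (a sketch, not Cursor's reference code).

Assumptions: Cursor pipes one JSON object to stdin whose
"hook_event_name" field names the lifecycle event, and reads one JSON
object back from stdout. Field names may change while Hooks are in beta.
"""
import json
import sys
from typing import Any, Callable, Dict

Payload = Dict[str, Any]


def on_before_shell(payload: Payload) -> Payload:
    # Permit everything; a real guardrail would inspect payload["command"].
    return {"permission": "allow"}


def on_observe(payload: Payload) -> Payload:
    # afterFileEdit and stop are treated as observation-only here.
    return {}


# Route each (assumed) event name to a handler; unknown events get {}.
HANDLERS: Dict[str, Callable[[Payload], Payload]] = {
    "beforeShellExecution": on_before_shell,
    "afterFileEdit": on_observe,
    "stop": on_observe,
}


def handle(payload: Payload) -> Payload:
    handler = HANDLERS.get(payload.get("hook_event_name", ""))
    return handler(payload) if handler else {}


def main() -> None:
    raw = sys.stdin.read()
    payload = json.loads(raw) if raw.strip() else {}  # tolerate empty input
    json.dump(handle(payload), sys.stdout)


if __name__ == "__main__":
    main()
```

Piping a sample payload through it, for example `echo '{"hook_event_name": "beforeShellExecution", "command": "ls"}' | python3 hook.py`, should print `{"permission": "allow"}` (the file name hook.py is arbitrary). Keeping the logic in a pure `handle` function makes each hook testable without running Cursor.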

For example, a developer can block an unsafe command, redact sensitive content before it reaches the model, or run a formatter after a code edit.
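As one illustration of the first case, a beforeShellExecution hook could deny commands that match a small pattern list. This is a hedged sketch: it assumes the proposed command arrives in a `command` field and that Cursor honours a reply with `permission`, `userMessage`, and `agentMessage` fields; the deny patterns themselves are hypothetical examples rather than a recommended policy.

```python
#!/usr/bin/env python3
"""Sketch of a beforeShellExecution guardrail for Cursor Hooks.

Assumed protocol: the stdin payload carries the proposed command in
"command", and Cursor honours a reply shaped like
{"permission": "allow" | "deny", "userMessage": ..., "agentMessage": ...}.
The deny patterns are hypothetical examples, not a complete policy.
"""
import json
import re
import sys
from typing import Any, Dict

DENY_PATTERNS = [
    # rm with a recursive flag aimed at / or ~ itself
    re.compile(r"\brm\s+-\w*[rR]\w*\s+(/|~)(\s|$)"),
    # force pushes (this also catches --force-with-lease)
    re.compile(r"\bgit\s+push\b.*(--force\b|\s-f\b)"),
    # piping a downloaded script straight into a shell
    re.compile(r"\b(curl|wget)\b[^|]*\|\s*(sh|bash)\b"),
]


def decide(command: str) -> Dict[str, Any]:
    """Return a deny reply for the first matching pattern, else allow."""
    for pattern in DENY_PATTERNS:
        if pattern.search(command):
            return {
                "permission": "deny",
                "userMessage": f"Blocked by local hook: {command}",
                "agentMessage": "This command is blocked by a local policy hook; "
                                "try a safer alternative.",
            }
    return {"permission": "allow"}


def main() -> None:
    raw = sys.stdin.read()
    payload = json.loads(raw) if raw.strip() else {}
    json.dump(decide(payload.get("command", "")), sys.stdout)


if __name__ == "__main__":
    main()
```

A deny list like this is easy to evade (aliases, variables, scripts), so a stricter setup would likely use an allow list or ask the user to confirm instead of denying outright.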

Real-world usage of Cursor Hooks is still limited, but early adopters are beginning to explore practical integrations.

One of the first public implementations comes from GitButler, which uses afterFileEdit and stop hooks to automate version control of work performed by agents.

Each AI session starts on a new branch and ends with a commit whose message is AI-generated from the user’s prompt. The team describes this as a way to track and checkpoint everything Cursor does.
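GitButler's own integration is not reproduced here; the sketch below only approximates the branch-per-session idea with plain git in a stop hook. It assumes the stop payload includes `conversation_id`, `workspace_roots`, and `status`, uses a hypothetical `ai/<id>` branch naming scheme, and substitutes a fixed placeholder commit message for the AI-generated one described above.

```python
#!/usr/bin/env python3
"""Sketch: checkpoint an agent session on its own git branch (stop hook).

This is NOT GitButler's implementation. It assumes the stop payload
includes "conversation_id" and "workspace_roots", and it uses a fixed
placeholder commit message instead of an AI-generated one.
"""
import json
import re
import subprocess
import sys
from typing import Any, Dict, List


def branch_name(conversation_id: str) -> str:
    # Hypothetical scheme: ai/<first 8 safe characters of the conversation id>.
    safe = re.sub(r"[^A-Za-z0-9-]", "", conversation_id)[:8]
    return f"ai/{safe or 'session'}"


def checkpoint_commands(branch: str, current: str, exists: bool,
                        message: str) -> List[List[str]]:
    """Plan the git commands as argv lists so they can be inspected or tested."""
    cmds: List[List[str]] = []
    if current != branch:
        # Move onto the session branch, creating it from HEAD the first time.
        cmds.append(["git", "switch", branch] if exists
                    else ["git", "switch", "-c", branch])
    cmds.append(["git", "add", "--all"])
    cmds.append(["git", "commit", "-m", message])
    return cmds


def git(root: str, *args: str) -> subprocess.CompletedProcess:
    return subprocess.run(["git", *args], cwd=root,
                          capture_output=True, text=True)


def main() -> None:
    raw = sys.stdin.read()
    payload: Dict[str, Any] = json.loads(raw) if raw.strip() else {}
    roots = payload.get("workspace_roots") or []
    if not roots:
        return  # no workspace to checkpoint
    root = roots[0]
    branch = branch_name(payload.get("conversation_id", ""))
    current = git(root, "rev-parse", "--abbrev-ref", "HEAD").stdout.strip()
    exists = git(root, "rev-parse", "--verify", "--quiet",
                 f"refs/heads/{branch}").returncode == 0
    message = f"Agent checkpoint ({payload.get('status', 'unknown')})"
    for cmd in checkpoint_commands(branch, current, exists, message):
        # Output is captured so it does not mix with the hook's stdout;
        # stop at the first failure (e.g. nothing to commit).
        if subprocess.run(cmd, cwd=root, capture_output=True).returncode != 0:
            break


if __name__ == "__main__":
    main()
```

Planning the commands in a pure function keeps the git side effects in one place; switching branches with uncommitted changes can still fail on conflicts, which this sketch simply treats as a reason to stop.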

Cursor’s documentation also provides a simple example: a stop hook that displays a local macOS notification when an agent completes its task. It illustrates how hooks can be used to integrate lightweight automation without needing external services.
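A Python take on the same idea might look like the sketch below. It is not the documentation's example: it assumes the stop payload may carry a `status` field and relies on the standard macOS `osascript` tool with AppleScript's `display notification` command, so on other platforms it does nothing.

```python
#!/usr/bin/env python3
"""Sketch of a stop hook that shows a macOS notification.

Assumptions: the stop payload may carry a "status" field; notifications
use the stock osascript tool, so this only does anything on macOS.
"""
import json
import shutil
import subprocess
import sys
from typing import List


def applescript_string(text: str) -> str:
    # Escape backslashes and double quotes for an AppleScript string literal.
    return '"' + text.replace("\\", "\\\\").replace('"', '\\"') + '"'


def notify_command(status: str, title: str = "Cursor") -> List[str]:
    message = applescript_string(f"Agent finished: {status}")
    script = f"display notification {message} with title {applescript_string(title)}"
    return ["osascript", "-e", script]


def main() -> None:
    raw = sys.stdin.read()
    payload = json.loads(raw) if raw.strip() else {}
    if not payload:
        return  # nothing to report
    if sys.platform == "darwin" and shutil.which("osascript"):
        subprocess.run(notify_command(payload.get("status", "completed")),
                       capture_output=True, check=False)


if __name__ == "__main__":
    main()
```

Passing the script as a single argv element avoids shell quoting entirely; only AppleScript's own string escaping is needed.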

Prior to the 1.7 release, developers had been actively requesting this kind of lifecycle control. In one forum thread, a user wrote:

> …This would unlock a lot of flexibility for more advanced workflows.

Others proposed use cases such as running tests after edits or tagging AI-authored lines for compliance. These are now technically possible with Hooks but not yet widely implemented.
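Per-line tagging would need more machinery, but a coarser form of provenance can be sketched with an afterFileEdit hook that appends one record per agent edit to a JSON Lines log. This is an assumption-laden sketch: it presumes the payload carries `file_path`, `edits` (with `old_string`/`new_string`), `conversation_id`, and `workspace_roots`, and the `.cursor/ai-edits.jsonl` location is a hypothetical choice.

```python
#!/usr/bin/env python3
"""Sketch: record agent edits in an append-only JSON Lines log (afterFileEdit).

Assumptions: the payload carries "file_path", "edits" (objects with
"old_string"/"new_string"), "conversation_id" and "workspace_roots".
The log location .cursor/ai-edits.jsonl is a hypothetical choice.
"""
import json
import sys
from datetime import datetime, timezone
from pathlib import Path
from typing import Any, Dict

LOG_RELATIVE_PATH = Path(".cursor") / "ai-edits.jsonl"  # hypothetical location


def audit_record(payload: Dict[str, Any], timestamp: str) -> Dict[str, Any]:
    """Summarise one afterFileEdit event as a provenance record."""
    edits = payload.get("edits") or []
    return {
        "timestamp": timestamp,
        "conversation_id": payload.get("conversation_id"),
        "file": payload.get("file_path"),
        "edit_count": len(edits),
        # Lines in the agent-written replacement text, summed over all edits.
        "new_lines": sum(len(e.get("new_string", "").splitlines()) for e in edits),
    }


def main() -> None:
    raw = sys.stdin.read()
    payload: Dict[str, Any] = json.loads(raw) if raw.strip() else {}
    if not payload.get("file_path"):
        return  # not an edit event we can log
    roots = payload.get("workspace_roots") or ["."]
    log_path = Path(roots[0]) / LOG_RELATIVE_PATH
    log_path.parent.mkdir(parents=True, exist_ok=True)
    record = audit_record(payload, datetime.now(timezone.utc).isoformat())
    with log_path.open("a", encoding="utf-8") as fh:
        fh.write(json.dumps(record) + "\n")


if __name__ == "__main__":
    main()
```

An append-only log records which files an agent touched and when, but not which lines survive later human edits; true line-level attribution would need to be reconciled against the repository history.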

The release of Cursor Hooks has been met with quiet interest but little visible adoption so far. On release day, Cursor’s own forum discussions around version 1.7 focused largely on documentation gaps and stability concerns.

Claude Code, which rolled out its own hooks system in mid-2025, offers a likely indicator of how adoption may unfold. The months since that release have produced a mix of hands-on experiments and feedback: hooks have been used to enforce coding standards, guard against hallucinations, ask for additional instructions, or play a sound when edits are complete.

However, Claude Code’s hooks rollout hasn’t been universally smooth. Alongside frustrations with the API, a guide from Eesel AI notes that hooks require deep technical expertise, are confined to local development tasks, and add maintenance and security overhead because they rely on arbitrary shell commands.

For now, Hooks remain a beta feature. Their long-term utility will likely depend on improved documentation, better examples, and emerging community patterns.