Content Overview

- Automatic Differentiation and Gradients

- Setup

- Computing gradients

- Gradient tapes

- Gradients with respect to a model

- Controlling what the tape watches

- Intermediate results

- Notes on performance

- Gradients of non-scalar targets

- Control flow

Automatic Differentiation and Gradients

Automatic differentiation is useful for implementing machine learning algorithms such as backpropagation for training neural networks.

In this guide, you will explore ways to compute gradients with TensorFlow, especially in eager execution.

Setup

import numpy as np

import matplotlib.pyplot as plt

import tensorflow as tf

Computing gradients

To differentiate automatically, TensorFlow needs to remember what operations happen in what order during the forward pass. Then, during the backward pass, TensorFlow traverses this list of operations in reverse order to compute gradients.
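The record-then-replay idea can be sketched in plain Python. This is only a toy illustration, not TensorFlow's implementation: the `ToyTape` class and its `constant`, `mul`, `add`, and `gradient` methods are hypothetical names invented for this sketch. Each operation appends its local derivatives to a list during the forward pass, and `gradient` walks that list in reverse while accumulating chain-rule products.

```python
# Toy reverse-mode tape in plain Python (illustrative only; not TensorFlow's code).

class ToyTape:
    """Records each operation's inputs and local derivatives in execution order."""

    def __init__(self):
        self.records = []   # list of (output_name, [(input_name, local_derivative), ...])
        self.values = {}    # name -> forward value

    def constant(self, name, value):
        self.values[name] = value
        return name

    def mul(self, out, a, b):
        va, vb = self.values[a], self.values[b]
        self.values[out] = va * vb
        # d(out)/da = b and d(out)/db = a
        self.records.append((out, [(a, vb), (b, va)]))
        return out

    def add(self, out, a, b):
        self.values[out] = self.values[a] + self.values[b]
        self.records.append((out, [(a, 1.0), (b, 1.0)]))
        return out

    def gradient(self, target, source):
        grads = {target: 1.0}
        # Backward pass: traverse the recorded operations in reverse order.
        for out, inputs in reversed(self.records):
            upstream = grads.get(out, 0.0)
            for name, local in inputs:
                grads[name] = grads.get(name, 0.0) + upstream * local
        return grads.get(source, 0.0)


tape = ToyTape()
x = tape.constant("x", 3.0)
y = tape.mul("y", x, x)   # y = x * x
z = tape.add("z", y, x)   # z = x**2 + x

dy_dx = tape.gradient("y", "x")
dz_dx = tape.gradient("z", "x")
print(dy_dx)  # 6.0
print(dz_dx)  # 7.0
```

Because operations are recorded in the order they run, every output appears later in the list than its inputs, so a single reverse sweep is enough to propagate gradients back to the sources.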

Gradient tapes

TensorFlow provides the tf.GradientTape API for automatic differentiation; that is, computing the gradient of a computation with respect to some inputs, usually tf.Variables. TensorFlow “records” relevant operations executed inside the context of a tf.GradientTape onto a “tape”. TensorFlow then uses that tape to compute the gradients of a “recorded” computation using reverse mode differentiation.

Here is a simple example:

x = tf.Variable(3.0)

with tf.GradientTape() as tape:

    y = x**2

Once you’ve recorded some operations, use GradientTape.gradient(target, sources) to calculate the gradient of some target (often a loss) relative to some source (often the model’s variables):

# dy = 2x * dx

dy_dx = tape.gradient(y, x)

dy_dx.numpy()

6.0

The above example uses scalars, but tf.GradientTape works as easily on any tensor:

w = tf.Variable(tf.random.normal((3, 2)), name="w")

b = tf.Variable(tf.zeros(2, dtype=tf.float32), name="b")

x = [[1., 2., 3.]]

with tf.GradientTape(persistent=True) as tape:

    y = x @ w + b

    loss = tf.reduce_mean(y**2)

To get the gradient of loss with respect to both variables, you can pass both as sources to the gradient method. The tape is flexible about how sources are passed and will accept any nested combination of lists or dictionaries and return the gradient structured the same way (see tf.nest).

[dl_dw, dl_db] = tape.gradient(loss, [w, b])

The gradient with respect to each source has the shape of the source:

print(w.shape)

print(dl_dw.shape)

(3, 2)

(3, 2)

Here is the gradient calculation again, this time passing a dictionary of variables:

my_vars = {

    'w': w,

    'b': b

}

grad = tape.gradient(loss, my_vars)

grad['b']

<tf.Tensor: shape=(2,), dtype=float32, numpy=array([7.5046124, 2.0025406], dtype=float32)>
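As a sanity check on the shapes, the gradients of this loss can be worked out by hand. With loss = mean(y**2) over the two entries of y = x @ w + b, the chain rule gives dl/db = y and dl/dw[i][j] = x[i] * y[j], so dl/dw has the same (3, 2) shape as w. The sketch below verifies this with finite differences in plain Python. The weight values are arbitrary placeholders chosen for the illustration, not the random values from the run above.

```python
# Pure-Python check of the gradient formulas for loss = mean((x @ w + b)**2).
# The weights below are arbitrary placeholders, not the values from the run above.

x = [1.0, 2.0, 3.0]                         # one input row
w = [[0.5, -1.0], [0.25, 0.0], [1.0, 2.0]]  # shape (3, 2)
b = [0.0, 0.0]                              # shape (2,)


def forward(w, b):
    """Return y = x @ w + b as a list of two values."""
    return [sum(x[i] * w[i][j] for i in range(3)) + b[j] for j in range(2)]


def loss(w, b):
    y = forward(w, b)
    return sum(v * v for v in y) / len(y)


y = forward(w, b)

# Analytic gradients from the chain rule.
dl_db = [y[j] for j in range(2)]
dl_dw = [[x[i] * y[j] for j in range(2)] for i in range(3)]


def numeric_dl_dw(i, j, eps=1e-6):
    """Central finite difference of the loss with respect to w[i][j]."""
    w_plus = [row[:] for row in w]
    w_minus = [row[:] for row in w]
    w_plus[i][j] += eps
    w_minus[i][j] -= eps
    return (loss(w_plus, b) - loss(w_minus, b)) / (2 * eps)


max_error = max(abs(numeric_dl_dw(i, j) - dl_dw[i][j])
                for i in range(3) for j in range(2))

print(dl_db)                        # [4.0, 5.0]
print(len(dl_dw), len(dl_dw[0]))    # 3 2
print(max_error < 1e-6)
```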

Gradients with respect to a model

It’s common to collect tf.Variables into a tf.Module or one of its subclasses (layers.Layer, keras.Model) for checkpointing and exporting.

In most cases, you will want to calculate gradients with respect to a model’s trainable variables. Since all subclasses of tf.Module aggregate their variables in the Module.trainable_variables property, you can calculate these gradients in a few lines of code:

layer = tf.keras.layers.Dense(2, activation='relu')

x = tf.constant([[1., 2., 3.]])

with tf.GradientTape() as tape:

    # Forward pass

    y = layer(x)

    loss = tf.reduce_mean(y**2)

# Calculate gradients with respect to every trainable variable

grad = tape.gradient(loss, layer.trainable_variables)

for var, g in zip(layer.trainable_variables, grad):

    print(f'{var.name}, shape: {g.shape}')

kernel, shape: (3, 2)

bias, shape: (2,)

Controlling what the tape watches

The default behavior is to record all operations after accessing a trainable tf.Variable. The reasons for this are:

- The tape needs to know which operations to record in the forward pass to calculate the gradients in the backwards pass.

- The tape holds references to intermediate outputs, so you don’t want to record unnecessary operations.

- The most common use case involves calculating the gradient of a loss with respect to all of a model’s trainable variables.

For example, the following calculates a gradient only for x0. The gradient is None for x1 because that tf.Variable is not trainable, and None for x2 and x3 because a tf.Tensor is not “watched” by default:

# A trainable variable

x0 = tf.Variable(3.0, name="x0")

# Not trainable

x1 = tf.Variable(3.0, name="x1", trainable=False)

# Not a Variable: A variable + tensor returns a tensor.

x2 = tf.Variable(2.0, name="x2") + 1.0

# Not a variable

x3 = tf.constant(3.0, name="x3")

with tf.GradientTape() as tape:

    y = (x0**2) + (x1**2) + (x2**2)

grad = tape.gradient(y, [x0, x1, x2, x3])

for g in grad:

    print(g)

tf.Tensor(6.0, shape=(), dtype=float32)

None

None

None

You can list the variables being watched by the tape using the GradientTape.watched_variables method:

[var.name for var in tape.watched_variables()]

['x0:0']

tf.GradientTape provides hooks that give the user control over what is or is not watched.

To record gradients with respect to a tf.Tensor, you need to call GradientTape.watch(x):

x = tf.constant(3.0)

with tf.GradientTape() as tape:

    tape.watch(x)

    y = x**2

# dy = 2x * dx

dy_dx = tape.gradient(y, x)

print(dy_dx.numpy())

6.0

Conversely, to disable the default behavior of watching all tf.Variables, set watch_accessed_variables=False when creating the gradient tape. This calculation uses two variables, but only connects the gradient for one of the variables:

x0 = tf.Variable(0.0)

x1 = tf.Variable(10.0)

with tf.GradientTape(watch_accessed_variables=False) as tape:

    tape.watch(x1)

    y0 = tf.math.sin(x0)

    y1 = tf.nn.softplus(x1)

    y = y0 + y1

    ys = tf.reduce_sum(y)

Since GradientTape.watch was not called on x0, no gradient is computed with respect to it:

# dys/dx1 = exp(x1) / (1 + exp(x1)) = sigmoid(x1)

grad = tape.gradient(ys, {'x0': x0, 'x1': x1})

print('dy/dx0:', grad['x0'])

print('dy/dx1:', grad['x1'].numpy())

dy/dx0: None

dy/dx1: 0.9999546
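The printed value can be cross-checked without TensorFlow. The sketch below, using only the standard library, assumes the usual definitions softplus(x) = log(1 + exp(x)) and sigmoid(x) = 1 / (1 + exp(-x)), and compares a finite-difference derivative of softplus at x1 = 10 with sigmoid(10).

```python
import math


def softplus(x):
    """softplus(x) = log(1 + exp(x))"""
    return math.log1p(math.exp(x))


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


x1 = 10.0
eps = 1e-5

# Central finite difference of softplus at x1.
numeric = (softplus(x1 + eps) - softplus(x1 - eps)) / (2 * eps)
analytic = sigmoid(x1)

print(round(analytic, 7))  # 0.9999546
print(abs(numeric - analytic) < 1e-7)
```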

Intermediate results

You can also request gradients of the output with respect to intermediate values computed inside the tf.GradientTape context.

x = tf.constant(3.0)

with tf.GradientTape() as tape:

    tape.watch(x)

    y = x * x

    z = y * y

# Use the tape to compute the gradient of z with respect to the

# intermediate value y.

# dz_dy = 2 * y and y = x ** 2 = 9

print(tape.gradient(z, y).numpy())

18.0
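The intermediate gradient is one factor in the chain rule. A short plain-Python sketch of the arithmetic, using the same x = 3.0, shows how dz/dy combines with dy/dx to give the gradient with respect to the input:

```python
x = 3.0
y = x * x   # 9.0
z = y * y   # 81.0

dz_dy = 2 * y           # gradient of z with respect to the intermediate y
dy_dx = 2 * x           # gradient of y with respect to the input x
dz_dx = dz_dy * dy_dx   # chain rule: dz/dx = dz/dy * dy/dx

print(dz_dy, dy_dx, dz_dx)  # 18.0 6.0 108.0
print(dz_dx == 4 * x**3)    # True, since z = x**4
```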

By default, the resources held by a GradientTape are released as soon as the GradientTape.gradient method is called. To compute multiple gradients over the same computation, create a gradient tape with persistent=True. This allows multiple calls to the gradient method as resources are released when the tape object is garbage collected. For example:

x = tf.constant([1, 3.0])

with tf.GradientTape(persistent=True) as tape:

    tape.watch(x)

    y = x * x

    z = y * y

print(tape.gradient(z, x).numpy()) # [4.0, 108.0] (4 * x**3 at x = [1.0, 3.0])

print(tape.gradient(y, x).numpy()) # [2.0, 6.0] (2 * x at x = [1.0, 3.0])

[ 4. 108.]

[2. 6.]

del tape # Drop the reference to the tape

Notes on performance

- There is a tiny overhead associated with doing operations inside a gradient tape context. For most eager execution this will not be a noticeable cost, but you should still use a tape context only around the areas where it is required.

- Gradient tapes use memory to store intermediate results, including inputs and outputs, for use during the backwards pass. For efficiency, some ops (like ReLU) don’t need to keep their intermediate results, so they are pruned during the forward pass. However, if you use persistent=True on your tape, nothing is discarded and your peak memory usage will be higher.

Gradients of non-scalar targets

A gradient is fundamentally an operation on a scalar.

x = tf.Variable(2.0)

with tf.GradientTape(persistent=True) as tape:

    y0 = x**2

    y1 = 1 / x

print(tape.gradient(y0, x).numpy())

print(tape.gradient(y1, x).numpy())

4.0

-0.25

Thus, if you ask for the gradient of multiple targets, the result for each source is:

- The gradient of the sum of the targets, or equivalently

- The sum of the gradients of each target.

x = tf.Variable(2.0)

with tf.GradientTape() as tape:

    y0 = x**2

    y1 = 1 / x

print(tape.gradient({'y0': y0, 'y1': y1}, x).numpy())

3.75
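The value 3.75 is the sum of the two separate gradients, 4.0 and -0.25. The equivalence between "gradient of the sum" and "sum of the gradients" can be checked numerically with a small standard-library sketch. The `derivative` helper is a hypothetical name for a central finite difference, not a TensorFlow function.

```python
def y0(x):
    return x ** 2


def y1(x):
    return 1 / x


def derivative(f, x, eps=1e-6):
    """Central finite-difference estimate of df/dx."""
    return (f(x + eps) - f(x - eps)) / (2 * eps)


x = 2.0
sum_of_gradients = derivative(y0, x) + derivative(y1, x)
gradient_of_sum = derivative(lambda v: y0(v) + y1(v), x)

print(round(sum_of_gradients, 4))  # 3.75
print(round(gradient_of_sum, 4))   # 3.75
```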

Similarly, if the target(s) are not scalar, the gradient of the sum is calculated:

x = tf.Variable(2.)

with tf.GradientTape() as tape:

    y = x * [3., 4.]

print(tape.gradient(y, x).numpy())

7.0

This makes it simple to take the gradient of the sum of a collection of losses, or the gradient of the sum of an element-wise loss calculation.

If you need a separate gradient for each item, refer to Jacobians.

In some cases you can skip the Jacobian. For an element-wise calculation, the gradient of the sum gives the derivative of each element with respect to its input-element, since each element is independent:

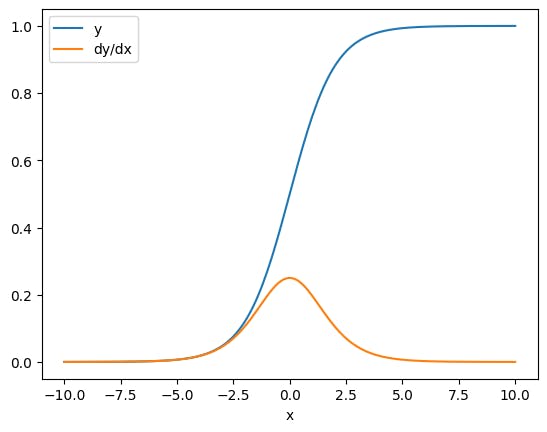

x = tf.linspace(-10.0, 10.0, 200+1)

with tf.GradientTape() as tape:

    tape.watch(x)

    y = tf.nn.sigmoid(x)

dy_dx = tape.gradient(y, x)

plt.plot(x, y, label="y")

plt.plot(x, dy_dx, label="dy/dx")

plt.legend()

_ = plt.xlabel('x')
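The reason this shortcut works is that the Jacobian of an element-wise function is diagonal, so summing over the outputs leaves only the diagonal entries. The plain-Python sketch below illustrates this on three arbitrary sample points with finite differences. The `jacobian_entry` helper is a hypothetical name for this illustration.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


xs = [-2.0, 0.0, 1.5]   # arbitrary sample inputs
eps = 1e-6


def jacobian_entry(i, j):
    """Finite-difference estimate of d sigmoid(xs)[i] / d xs[j]."""
    plus = xs[:]
    minus = xs[:]
    plus[j] += eps
    minus[j] -= eps
    return (sigmoid(plus[i]) - sigmoid(minus[i])) / (2 * eps)


n = len(xs)
jacobian = [[jacobian_entry(i, j) for j in range(n)] for i in range(n)]

# The gradient of sum(y) with respect to xs[j] is the j-th column sum of the Jacobian.
grad_of_sum = [sum(jacobian[i][j] for i in range(n)) for j in range(n)]
diagonal = [jacobian[j][j] for j in range(n)]

# Known closed form: sigmoid'(v) = sigmoid(v) * (1 - sigmoid(v)).
analytic = [sigmoid(v) * (1 - sigmoid(v)) for v in xs]

print(grad_of_sum == diagonal)  # True: the off-diagonal entries are exactly zero
print(all(abs(g - a) < 1e-7 for g, a in zip(grad_of_sum, analytic)))
```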

Control flow

Because a gradient tape records operations as they are executed, Python control flow is naturally handled (for example, if and while statements).

Here a different variable is used on each branch of an if. The gradient only connects to the variable that was used:

x = tf.constant(1.0)

v0 = tf.Variable(2.0)

v1 = tf.Variable(2.0)

with tf.GradientTape(persistent=True) as tape:

    tape.watch(x)

    if x > 0.0:

        result = v0

    else:

        result = v1**2

dv0, dv1 = tape.gradient(result, [v0, v1])

print(dv0)

print(dv1)

tf.Tensor(1.0, shape=(), dtype=float32)

None

Just remember that the control statements themselves are not differentiable, so they are invisible to gradient-based optimizers.

Depending on the value of x in the above example, the tape either records result = v0 or result = v1**2. The gradient with respect to x is always None.

dx = tape.gradient(result, x)

print(dx)

None
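A finite-difference view of the same branching function, in plain Python, shows why. Near x = 1.0 the result does not change when x is nudged, so its sensitivity to x is zero. TensorFlow reports None rather than 0 because x is not connected to the result on the tape at all. The `partial` helper below is a hypothetical name for this sketch.

```python
def result(x, v0, v1):
    # Same branching as the example above.
    if x > 0.0:
        return v0
    return v1 ** 2


def partial(f, args, index, eps=1e-6):
    """Central finite difference of f with respect to args[index]."""
    plus = list(args)
    minus = list(args)
    plus[index] += eps
    minus[index] -= eps
    return (f(*plus) - f(*minus)) / (2 * eps)


args = (1.0, 2.0, 2.0)  # x, v0, v1
d_x = partial(result, args, 0)
d_v0 = partial(result, args, 1)
d_v1 = partial(result, args, 2)

print(d_x)              # 0.0: nudging x does not change which branch runs
print(round(d_v0, 6))   # 1.0: the taken branch returns v0
print(d_v1)             # 0.0: v1 is unused on the taken branch
```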

:::info

Originally published on the TensorFlow website, this article appears here under a new headline and is licensed under CC BY 4.0. Code samples shared under the Apache 2.0 License.

:::