Information Technology (IT) Incident:

An IT incident is any unplanned interruption or reduction in the quality of an IT service. Incidents range from minor issues, such as a slow application, to critical disruptions, such as server outages. Incident Management (IM) aims to handle these incidents effectively so that IT services are restored promptly.

Cyber Incident:

An occurrence that may jeopardize the confidentiality, integrity, or availability of information or an information system, or that constitutes a threat to security policies or security procedures.

Cyber Incident Response and Severity Levels:

Incident Response (IR) is an organized process an organization follows to recover from a security incident. The primary goal of a cybersecurity Incident Response program is to limit the damage and reduce the cost and recovery time of a security breach, ensuring business continuity and preserving the integrity of systems and data.

Incidents should have different severity levels because not all events have the same impact on the business. Assigning a severity level is a critical step that dictates the speed and scope of resources dedicated to the response.

GenAI Incident and Severity Matrix:

Advances in Generative Pre-trained Transformer (GPT) systems have made Artificial Intelligence (AI) methods, including Generative AI (GenAI) and large language models (LLMs), widely available for personal and professional use. GenAI applications have also introduced new security risks, which require information security teams to extend their protection responsibilities to these systems.

Possible incidents include chatbots that mislead customers, AI agents that leak data, and many more. Security Operations Center (SOC) and Incident Response (IR) teams therefore need a practical severity matrix for AI incidents: a standardized way to determine the actual impact and severity level of AI-related events.

The tool described in this article determines incident severity levels so that IR resources can be allocated appropriately. It combines numerical scores with human judgment using a matrix-based evaluation method.

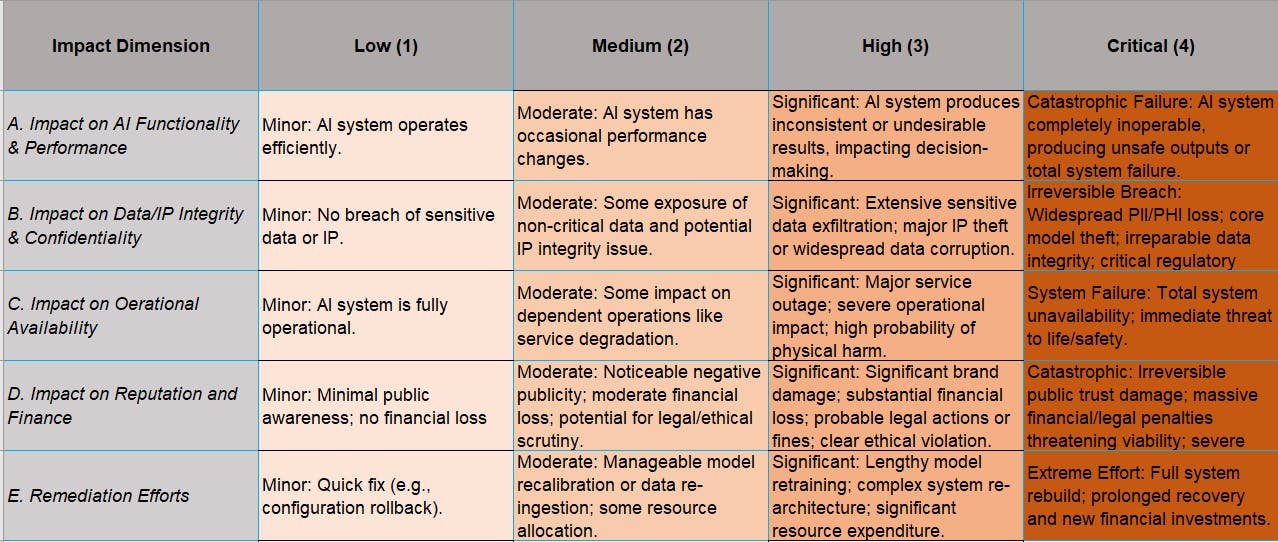

The matrix contains five ‘Impact Dimensions’, which assess the effects on AI Functionality, Data Integrity, Operation Availability, Reputation, and Remediation effort.

Each dimension is scored on a four-level scale: Low (1), Medium (2), High (3), or Critical (4).

Factors to Consider Before Incident Declaration:

A preliminary assessment should take place before an AI incident is declared, because it establishes whether substantial resources and immediate triage are needed. This step matters because AI systems have characteristics that distinguish them from conventional IT systems.

The assessment should identify which AI systems were involved in the incident (for example, chatbots, computer vision models, recommender systems, or custom agents) and determine how critical they are to operational safety and business operations. Evaluating functional impact requires analysts to examine how the system's behavior deviates from its intended design when it is subjected to adversarial attacks.

Malfunctions vary in degree, from minor performance problems, such as reduced accuracy or increased response latency from the model, to major problems that cause system breakdowns or dangerous or unfair outcomes.

Using the MITRE ATLAS framework, the assessment should establish two things: the nature of the detected anomaly, and whether it stems from an adversarial attack or a system malfunction. MITRE ATLAS (MITRE, 2025) catalogues specific attack techniques (e.g., LLM Prompt Injection, Poison Training Data, Denial of AI Service) and maps each technique to the tactic, or adversary goal, that it serves.

The assessment also verifies data integrity and confidentiality by checking whether training or validation data, or sensitive information (PII, PHI, proprietary model IP), has been compromised or exfiltrated. It must determine how strongly the AI system's output affects the downstream operational processes and business services that rely on its results. Finally, it should estimate the extent of any physical danger and of financial loss to the business.
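The checks above can be captured as a simple record so that every pre-declaration assessment covers the same factors. The sketch below is a hypothetical illustration, not part of the framework: the `PreliminaryAssessment` class, its field names, and the `flagged_factors` helper are all assumptions. It only lists which factors point toward declaring an incident; the final decision remains a human judgment.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical malfunction categories, following the minor/major distinction above.
MALFUNCTION_LEVELS = ("none", "minor", "major")


@dataclass
class PreliminaryAssessment:
    """Hypothetical record of the pre-declaration checks described above."""
    affected_systems: List[str]                # e.g. ["support chatbot"]
    business_critical: bool                    # critical to safety or business operations?
    malfunction: str = "none"                  # "none", "minor" (accuracy/latency) or "major"
    atlas_technique: Optional[str] = None      # e.g. "LLM Prompt Injection" if adversarial
    data_compromised: bool = False             # training/validation data, PII, PHI, model IP
    data_exfiltrated: bool = False
    downstream_services: List[str] = field(default_factory=list)
    physical_danger: bool = False
    estimated_financial_loss: float = 0.0      # in the organization's currency

    def __post_init__(self) -> None:
        if self.malfunction not in MALFUNCTION_LEVELS:
            raise ValueError(f"malfunction must be one of {MALFUNCTION_LEVELS}")


def flagged_factors(a: PreliminaryAssessment) -> List[str]:
    """Return the assessment factors that point toward declaring an incident."""
    flags = []
    if a.business_critical:
        flags.append("business-critical system involved")
    if a.malfunction == "major":
        flags.append("major malfunction")
    if a.atlas_technique is not None:
        flags.append(f"suspected adversarial technique: {a.atlas_technique}")
    if a.data_compromised or a.data_exfiltrated:
        flags.append("data integrity or confidentiality affected")
    if a.downstream_services:
        flags.append("downstream services depend on the output")
    if a.physical_danger:
        flags.append("possible physical danger")
    if a.estimated_financial_loss > 0:
        flags.append("financial loss expected")
    return flags


if __name__ == "__main__":
    example = PreliminaryAssessment(
        affected_systems=["support chatbot"],
        business_critical=False,
        malfunction="minor",
        atlas_technique="LLM Prompt Injection",
        downstream_services=["ticketing"],
    )
    print(flagged_factors(example))
```

Keeping the output as a list of named factors, rather than a single yes/no answer, leaves the declaration decision with the analyst while making the reasoning behind it explicit.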

Calculating the Severity of the Declared Incident:

Declared incidents need a standardized evaluation method so that a suitable response can be chosen. A matrix-based assessment achieves this by combining numerical scores with human judgment. The overall severity level is determined by the highest-scoring relevant impact dimension, so a single severe element is enough to raise the whole incident to a higher priority.

Each relevant dimension (A-E) is scored from 1 to 4, and the incident's overall severity rating is the maximum of these scores. For example, an incident whose Functional Impact score is ‘2’ and whose other dimensions range from ‘1’ to ‘2’ is still rated ‘Critical’ if its Data Impact score is ‘4’. This structured process gives organizations standardized results and speeds up the evaluation.
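The maximum-score rule can be sketched in a few lines. In this minimal example the `Score` enum mirrors the Low/Medium/High/Critical scale, while the dimension keys (`functionality`, `data`, `availability`, `reputation`, `remediation`) and the `incident_severity` function name are hypothetical labels chosen for illustration. It reproduces the worked example above, where a single Data Impact score of 4 makes the whole incident Critical.

```python
from enum import IntEnum
from typing import Dict


class Score(IntEnum):
    """Four-level scale used for every impact dimension."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3
    CRITICAL = 4


# Hypothetical keys for the five impact dimensions of the matrix.
DIMENSIONS = {"functionality", "data", "availability", "reputation", "remediation"}


def incident_severity(scores: Dict[str, int]) -> Score:
    """Overall severity is the maximum score over the evaluated dimensions."""
    if not scores:
        raise ValueError("at least one dimension must be scored")
    unknown = set(scores) - DIMENSIONS
    if unknown:
        raise ValueError(f"unknown dimensions: {sorted(unknown)}")
    # Score(v) raises ValueError for any value outside 1..4.
    return max(Score(v) for v in scores.values())


if __name__ == "__main__":
    # Worked example from the text: Functional Impact 2, Data Impact 4, others 1-2.
    example = {"functionality": 2, "data": 4, "availability": 1,
               "reputation": 2, "remediation": 1}
    print(incident_severity(example).name)  # CRITICAL
```

Only the dimensions judged relevant need to be passed in, which matches the text's "each relevant dimension"; an analyst can still override the computed level when the situation calls for it.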

Organizations can adopt this framework as is or adapt it to match their existing severity calculation methods when establishing AI incident severity levels.

Prioritize Risks Based on the Severity of the AI Incident:

The calculated severity level helps incident response teams decide which risks need immediate attention when planning the response and allocating resources. Severe incidents demand the immediate deployment of a specialized team of cybersecurity experts, AI/ML engineers, data scientists, legal professionals, and PR representatives, whereas lower-severity incidents can be managed by fewer personnel.

Critical incidents require immediate notification of executives and board members, the legal team, regulatory bodies, and public stakeholders, whereas information about low-severity incidents should be shared only on a limited internal basis. For high-severity incidents, system shutdowns and API disconnections serve as first lines of defense that stop the incident from spreading. For lower-severity incidents, containment should focus on preventing major operational disruption.
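A severity-to-response lookup makes these rules easy to apply consistently. The sketch below is a hypothetical playbook, not a prescribed one: the `ResponsePlan` class, the team and audience labels, the treatment of High (3) and Critical (4) as "high-severity", and the notification list for High incidents are all assumptions, since the text only spells out the Critical and low-severity cases.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class ResponsePlan:
    team: Tuple[str, ...]     # who is deployed
    notify: Tuple[str, ...]   # who is informed
    containment: str          # first containment action


# Full specialized team named in the text.
FULL_TEAM = ("cybersecurity", "AI/ML engineering", "data science", "legal", "PR")
# Hypothetical smaller team for lower-severity incidents.
CORE_TEAM = ("cybersecurity", "AI/ML engineering")


def response_plan(severity: int) -> ResponsePlan:
    """Map an overall severity score (1-4) to a hypothetical response plan."""
    if severity not in (1, 2, 3, 4):
        raise ValueError("severity must be 1, 2, 3 or 4")
    if severity == 4:    # Critical: notify executives, board, legal, regulators, public
        notify = ("executives", "board", "legal", "regulators", "public stakeholders")
    elif severity == 3:  # High: assumed internal escalation only
        notify = ("executives", "legal")
    else:                # Low/Medium: limited internal sharing
        notify = ("internal incident channel",)
    if severity >= 3:    # assumption: High and Critical count as high-severity
        return ResponsePlan(FULL_TEAM, notify,
                            "shut down affected system and disconnect its APIs")
    return ResponsePlan(CORE_TEAM, notify,
                        "targeted containment to prevent major operational disruption")


if __name__ == "__main__":
    for level in (1, 2, 3, 4):
        plan = response_plan(level)
        print(level, len(plan.team), plan.notify, plan.containment)
```

Encoding the playbook as data rather than ad hoc decisions lets an organization adjust the thresholds and audiences to its own policies without changing how severity is calculated.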