Table Of Links

Abstract

1 Introduction

2 Data Collection

3 RQ1: What types of software engineering inquiries do developers present to ChatGPT in the initial prompt?

4 RQ2: How do developers present their inquiries to ChatGPT in multi-turn conversations?

5 RQ3: What are the characteristics of the sharing behavior?

6 Discussions

7 Threats to Validity

8 Related Work

9 Conclusion and Future Work

References

4 RQ2: How do developers present their inquiries to ChatGPT in multi-turn conversations?

==Motivation:== Results presented in Figure 3 and Figure 4 reveal that a substantial portion of the conversations in DevGPT-PRs (33.2%) and DevGPT-Issues (26.9%) are multi-turn conversations. In single-turn conversations, developers pose an SE-related inquiry in the initial prompt and receive one response from ChatGPT, providing a clear and direct exchange. The dynamics of multi-turn conversations, however, introduce complexity. These interactions extend beyond a simple query and response, involving a series of exchanges that potentially refine, expand, or clarify the initial inquiry.

This layered communication raises a question about developers’ strategies to articulate their inquiries across multiple turns. Thus, we introduce RQ2, which studies the nature of developers’ prompts in multi-turn conversations. To facilitate a comprehensive analysis, we further introduce two sub-RQs:

– RQ2.1: What are the roles of developer prompts in multi-turn conversations? This question aims to categorize the structural role of each prompt in the corresponding multi-turn conversation.

– RQ2.2: What are the flow patterns in multi-turn conversations? Based on the taxonomy proposed as the answer to RQ2.1, this question explores the frequent transition pattern of those identified roles of prompts in multi-turn conversations. The answers to the above sub-RQs will provide insights for researchers about the dynamics and practices of developers in utilizing ChatGPT via multiple rounds of interactions.

4.1 Approach

In RQ2.1, we consider prompts in all 189 multi-turn conversations, i.e., 64 conversations from DevGPT-PRs and 125 from DevGPT-Issues. Following a method similar to RQ1, we used open coding to manually label 645 prompts (236 prompts from DevGPT-PRs and 409 prompts from DevGPT-Issues) in multi-turn conversations over three rounds:

– In the first round, five co-authors independently labeled 20 randomly selected conversations from each of the multi-turn DevGPT-PRs and DevGPT-Issues datasets, encompassing 40 conversations and 123 prompts. After discussion, we developed a coding book consisting of seven distinct labels.

– In the second round, based on the existing coding book, two annotators independently labeled another set of 20 conversations from each of the multi-turn DevGPT-PRs and DevGPT-Issues datasets, a total of 144 prompts. The two annotators achieved an inter-rater agreement score of 0.89, as measured by Cohen’s kappa coefficient, representing almost perfect agreement (Landis and Koch, 1977). The annotators then discussed and refined the coding book.

– Finally, each of the two annotators from round two independently labeled the remaining data.

In RQ2.2, we use a Markov model (Gagniuc, 2017) to analyze the conversation flow patterns by plotting a Markov Transition Graph. A Markov Transition Graph is a directed graph that demonstrates the probabilistic transitions between various states or nodes. In our case, each node in the graph represents a specific category developed in RQ2.1, and the directed edges between nodes denote the likelihood of transitioning from one category to another based on the multi-turn conversations we collected. To extract meaningful insights from the Markov Transition Graph, we apply the following post-processing steps:

– We pruned the graph by removing transitions with probabilities lower than 0.05, ensuring a focus on statistically significant relationships.

– We refined the graph structure by removing nodes without incoming and outgoing edges, except the start and end nodes. This step ensures simplification as we only keep essential components.

– We systematically reorganized the Markov Transition Graph into a flow chart to enhance its interpretability, offering an easier-to-understand representation of the flow patterns.
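The graph construction and the first two post-processing steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes each conversation is available as a list of role labels in prompt order and that probabilities are normalized per source node. The names `transition_probabilities`, `prune`, `START`, and `END` are hypothetical, and the toy data is invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical names for the artificial start and end nodes.
START, END = "start", "end"


def transition_probabilities(conversations):
    """Estimate first-order transition probabilities between prompt roles.

    `conversations` is a list of label sequences, e.g. ["M2", "M1"].
    Probabilities are normalized per source node (an assumption).
    """
    counts = defaultdict(Counter)
    for labels in conversations:
        path = [START, *labels, END]
        for src, dst in zip(path, path[1:]):
            counts[src][dst] += 1
    return {
        src: {dst: n / sum(dsts.values()) for dst, n in dsts.items()}
        for src, dsts in counts.items()
    }


def prune(probs, threshold=0.05):
    """Drop edges below `threshold`, then nodes left without any edge.

    The start and end nodes are always kept.
    """
    edges = {
        (src, dst): p
        for src, dsts in probs.items()
        for dst, p in dsts.items()
        if p >= threshold
    }
    nodes = {node for edge in edges for node in edge} | {START, END}
    return edges, nodes


# Toy labeled conversations (illustrative only, not taken from DevGPT).
toy = [
    ["M2", "M1"],
    ["M2", "M1", "M1"],
    ["M2", "M3", "M1"],
    ["M4", "M2", "M1"],
]
probs = transition_probabilities(toy)
edges, nodes = prune(probs, threshold=0.05)
for (src, dst), p in sorted(edges.items()):
    print(f"{src} -> {dst}: {p:.2f}")
```

The third step, rearranging the pruned graph into a flow chart, is a presentation step and is not covered by this sketch.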

4.2 Results

4.2.1 RQ2.1: What are the roles of developer prompts in multi-turn conversations?

Table 4 presents our proposed taxonomy to classify prompts within multi-turn conversations. Our analysis reveals that, in both pull requests and issues, multi-turn conversations contain three major types of prompts: those that pose follow-up questions (M1), those that introduce the initial task (M2), and those that are refined from a previous prompt (M3). One prompt from DevGPT-PRs and six prompts from DevGPT-Issues were categorized under “Others” due to their nature of being either casual conversation or lacking sufficient detail to determine their roles. Below, we describe each category in more detail.

==(M1) Iterative follow-up:== In 33% and 40% of the prompts in multi-turn DevGPT-PRs and DevGPT-Issues, respectively, developers post queries that directly build upon ChatGPT’s prior responses or the ongoing context, such as debugging and repairing a solution after code generation by ChatGPT. Such iterative follow-ups typically emerge when the initial task presents a complex challenge that ChatGPT might not fully resolve in a single interaction. Consequently, developers write a prompt specifying a follow-up request, enabling ChatGPT to incorporate human feedback and iteratively enhance the proposed solution.

==(M2) Reveal the initial task:== We find that a similar fraction of prompts, i.e., 26% in multi-turn DevGPT-PRs and 29% in multi-turn DevGPT-Issues, serve to introduce the initial task to ChatGPT. This distribution highlights that, unlike in single-turn conversations, where the sole prompt is dedicated to outlining the primary task, a significant number of prompts in multi-turn conversations serve other purposes.

==(M3) Refine prompt:== Besides iterative follow-up (M1), developers also tend to improve the solution proposed by ChatGPT by providing a refined request prompt with additional context or constraints. The objective is to enhance the response quality for the same query posted in the previous prompt. Refined Prompts account for 17% of the prompts in multi-turn DevGPT-PRs and 14% in DevGPT-Issues.

==(M4) Information giving:== In 8% and 6% of the prompts in multi-turn DevGPT-PRs and DevGPT-Issues, respectively, developers do not post any request for ChatGPT but instead share knowledge or context with ChatGPT.

==(M5) Reveal a new task:== We observe that 7% and 4% of the prompts in multi-turn DevGPT-PRs and DevGPT-Issues, respectively, post a new task to ChatGPT, which is distinct from the task(s) concerned in the prior prompts. This category represents a clear difference from iterative follow-ups (M1), as the new task does not relate to or build upon ChatGPT’s prior responses and aims for a different goal. For example, a developer initially requested ChatGPT to generate the SQL corresponding to a Django query set and, in a subsequent prompt, asked for the SQL for a different query set, thereby shifting the focus of the conversation to an entirely new task without prior relevance.

==(M6) Negative feedback:== Within multi-turn conversations, a few prompts (6% in DevGPT-PRs and 2% in DevGPT-Issues) contain only negative feedback directed at ChatGPT’s previous responses, without providing any information that ChatGPT could use to improve or further resolve the issue. Examples include “Your code is incorrect”, “The same error persists”, and “…does not work”. This category underscores instances where developers seek to inform ChatGPT of its shortcomings, without seeking further assistance or clarification.

==(M7) Asking for clarification:== 4% and 5% of prompts in multi-turn DevGPT-PRs and DevGPT-Issues ask ChatGPT to elaborate on its response. These requests for elaboration aim to ensure the comprehensiveness of a solution, e.g., “Do I need to do anything else?”. They also include verification of ChatGPT’s capacity to handle specific tasks, or inquiries to verify whether certain conditions have been considered in the response. Additionally, developers might ask why some alternatives were overlooked by ChatGPT, indicating a deeper engagement with the proposed solutions and a desire to understand the rationale behind ChatGPT’s proposed solution.

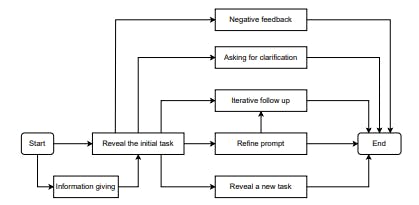

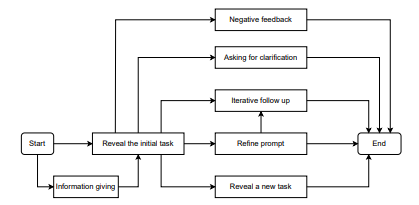

4.2.2 RQ2.2: What are the flow patterns in multi-turn conversations?

Figure 5 presents the resulting flow chart after applying the post-processing steps on a Markov Transition Graph based on the conversations annotated in RQ2.1. The flow chart applies to multi-turn conversations in both DevGPT-PRs and DevGPT-Issues. As illustrated in Figure 5, multi-turn conversations typically start with the presentation of the initial task (M2) or contextual information (M4).

Our detailed follow-up analysis reveals that 81% of multi-turn conversations in DevGPT-PRs and 90% in DevGPT-Issues begin by outlining the initial task. In contrast, around 13% of multi-turn conversations in DevGPT-PRs and 3% in DevGPT-Issues introduce the initial task in the second prompt. In extreme instances, the initial task is disclosed as late as the seventh turn or is never explicitly presented; such conversations only present information to ChatGPT without directly stating the task.
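One way to obtain such figures from labeled data is to record the turn at which the initial task (M2) first appears in each conversation. The sketch below is illustrative only: it assumes each conversation is a list of role labels in prompt order, the function names `initial_task_turn` and `turn_distribution` are hypothetical, and the toy conversations are invented rather than taken from DevGPT.

```python
from collections import Counter


def initial_task_turn(labels, task_label="M2"):
    """Return the 1-based turn at which the initial task first appears.

    Returns None when the task is never explicitly revealed.
    """
    for turn, label in enumerate(labels, start=1):
        if label == task_label:
            return turn
    return None


def turn_distribution(conversations):
    """Share of conversations per first-appearance turn of the initial task."""
    turns = Counter(initial_task_turn(labels) for labels in conversations)
    total = len(conversations)
    return {turn: count / total for turn, count in turns.items()}


# Toy labeled conversations (illustrative only).
toy = [
    ["M2", "M1"],        # task revealed in the first prompt
    ["M4", "M2", "M1"],  # context first, task in the second prompt
    ["M4", "M4"],        # task never stated explicitly
    ["M2", "M3"],
]
distribution = turn_distribution(toy)
print(distribution)
```

Conversations in which the task is never stated map to the key `None`, which keeps them visible in the distribution instead of silently dropping them.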

As for the complete flow, we identified the following patterns based on Figure 5:

(1) Start → reveal the initial task → iterative follow-up → end

(2) Start → reveal the initial task → refine prompt → (iterative follow-up) → end

(3) Start → reveal the initial task → reveal a new task → end

(4) Start → information giving → reveal the initial task → … → end

(5) Start → reveal the initial task → asking for clarification → end

(6) Start → reveal the initial task → negative feedback → end

Flow patterns (1) to (3) show the most common developer-ChatGPT interaction flows in multi-turn conversations. The initial task is disclosed in the initial prompt, followed by prompts aiming to improve ChatGPT’s responses via iterative follow-up, prompt refinements, or to reveal a new task.

Pattern (4) demonstrates interaction flows started by developers providing information to ChatGPT as the first step. Then, the initial task was revealed, followed by patterns akin to (1) to (3). Pattern (5) refers to developers asking for clarification from ChatGPT after revealing the initial task and receiving a response from ChatGPT.

Pattern (6) represents an interaction flow in which developers provide negative feedback after revealing the initial task and receiving a response from ChatGPT. Although self-loops are excluded from Figure 5, it’s important to note that certain types of prompts, i.e., those revealing a task, refining a previous prompt, posing iterative follow-up questions, or providing information, can occur repetitively. An example of this pattern is observed when a developer successively refines their prompt across two consecutive prompts.
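Because self-loops are left out of the flow chart, a labeled conversation can be compared against patterns (1) to (6) by first collapsing consecutive repetitions of the same role. The following is a minimal sketch, assuming each conversation is a list of role labels in prompt order; the helper `collapse_repeats` is a hypothetical name and the example sequence is invented.

```python
from itertools import groupby


def collapse_repeats(labels):
    """Merge consecutive identical role labels into a single occurrence."""
    return [label for label, _ in groupby(labels)]


# A developer refines the prompt twice in a row before following up.
flow = collapse_repeats(["M2", "M3", "M3", "M1"])
print(" -> ".join(["start", *flow, "end"]))
# prints: start -> M2 -> M3 -> M1 -> end
```

The collapsed sequence in this example corresponds to pattern (2).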

:::info

Authors

- Huizi Hao

- Kazi Amit Hasan

- Hong Qin

- Marcos Macedo

- Yuan Tian

- Steven H. H. Ding

- Ahmed E. Hassan

:::

:::info

This paper is available on arXiv under a CC BY-NC-SA 4.0 license.

:::