Table of Links

Abstract and 1. Introduction

-

Related work

-

Method

3.1. Uniform quantizer

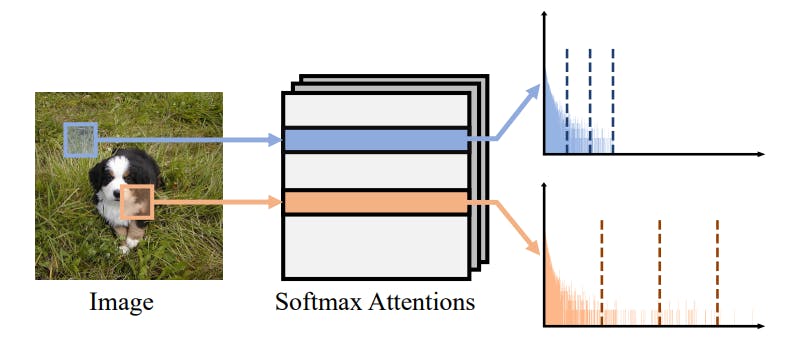

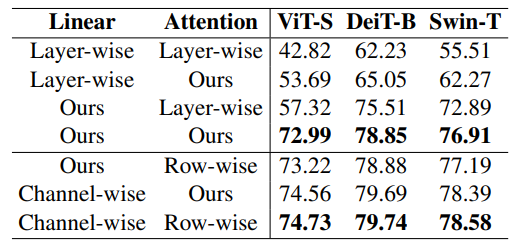

3.2. IGQ-ViT

3.3. Group size allocation

-

Experiments

4.1. Implementation details and 4.2. Results

4.3. Discussion

-

Conclusion, Acknowledgements, and References

Supplementary Material

A. More implementation details

B. Compatibility with existing hardwares

C. Latency on practical devices

D. Application to DETR

4. Experiments

In this section, we describe our experimental settings (Sec. 4.1) and evaluate IGQ-ViT on image classification, object detection, and instance segmentation (Sec. 4.2). We then present a detailed analysis of our approach (Sec. 4.3).

4.1. Implementation details

We evaluate our IGQ-ViT framework on image classification, object detection, and instance segmentation. For image classification, we use the ImageNet [8] dataset, which contains approximately 1.2M training images and 50K validation images. For object detection and instance segmentation, we use COCO [22], which includes 118K training, 5K validation, and 20K test images. We adopt various transformer architectures for image classification, including ViT [10], DeiT [34], and Swin transformer [25]. For object detection and instance segmentation, we use Mask R-CNN [14] and Cascade Mask R-CNN [5] with Swin transformers as the backbone. Following [9, 21], we calibrate the quantization parameters on 32 images randomly sampled from ImageNet [8] for image classification, and on a single image from COCO [22] for object detection and instance segmentation. We apply our instance-aware grouping technique to all input activations of FC layers and to softmax attentions. More detailed settings are available in the supplementary material.
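To make the calibration step concrete, the following is a minimal sketch of how the parameters of a uniform quantizer could be estimated from a small calibration set. It is not the authors' implementation: the min/max rule, the function names (`calibrate_minmax`, `quantize`, `dequantize`), and the synthetic Gaussian values standing in for activations collected from 32 calibration images are all assumptions made for illustration.

```python
import random

def calibrate_minmax(samples, bits):
    """Estimate (scale, zero_point) of an asymmetric uniform quantizer
    from calibration values using a simple min/max rule (an assumption)."""
    lo, hi = min(samples), max(samples)
    levels = 2 ** bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    zero_point = round(-lo / scale)
    return scale, zero_point

def quantize(x, scale, zero_point, bits):
    """Map a real value to an integer code in [0, 2**bits - 1]."""
    q = round(x / scale) + zero_point
    return max(0, min(2 ** bits - 1, q))

def dequantize(q, scale, zero_point):
    """Map an integer code back to an approximate real value."""
    return (q - zero_point) * scale

random.seed(0)
# Hypothetical stand-in for activations gathered from 32 calibration images,
# with 64 activation values recorded per image.
calibration_set = [[random.gauss(0.0, 1.0) for _ in range(64)] for _ in range(32)]
flat = [v for image in calibration_set for v in image]

bits = 4
scale, zero_point = calibrate_minmax(flat, bits)
x = flat[0]
x_hat = dequantize(quantize(x, scale, zero_point, bits), scale, zero_point)
print(f"scale={scale:.4f} zero_point={zero_point} x={x:.4f} x_hat={x_hat:.4f}")
```

With only a handful of calibration samples, the estimated range is driven by whatever values those samples happen to contain, so the quality of the quantizer depends on how well a single set of parameters covers the activations it is later applied to.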

4.2. Results

Results on ImageNet. We show in Table 2 the top-1 accuracy (%) on the validation split of ImageNet [8] with various ViT architectures. We report the accuracy with an average group size of 8 and 12. We summarize our findings as follows: (1) Our IGQ-ViT framework with 8 groups already outperforms the state of the art, except for ViT-B [10] and Swin-S [25] under the 6/6-bit setting, and using more groups further boosts the performance. (2) Under the 4/4-bit setting, our approach consistently outperforms RepQ-ViT [21] by a large margin. Like our method, RepQ-ViT addresses the scale variations between channels, but it can be applied only to activations that are preceded by LayerNorm. In contrast, our method handles the scale variations on all input activations of FC layers and softmax attentions, providing better results. (3) Our group size allocation technique boosts the quantization performance for all models, indicating that using the same number of groups for all layers is suboptimal. (4) Using 12 groups, our approach incurs an accuracy drop of less than 0.9% compared to the upper bound under the 6/6-bit setting. Note that the upper-bound results are obtained by using a separate quantizer for each channel of activations and each row of softmax attentions.
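The effect of quantizer granularity discussed above can be illustrated with a small, self-contained sketch that compares a single layer-wise quantizer, 8 group-wise quantizers, and one quantizer per channel (the upper-bound configuration) on synthetic activations whose scale varies across channels. Everything here is an assumption for illustration: the data are random, and `split_by_range` groups channels by sorted dynamic range as a simple stand-in, not the instance-aware assignment used by IGQ-ViT.

```python
import random

def sum_sq_error(values, bits):
    """Sum of squared errors after min/max uniform quantization of
    `values` with one shared quantizer."""
    lo, hi = min(values), max(values)
    levels = 2 ** bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0
    err = 0.0
    for v in values:
        q = min(levels, max(0, round((v - lo) / scale)))
        err += (q * scale + lo - v) ** 2
    return err

def grouped_mse(channels, groups, bits):
    """Mean squared error when each group of channels shares one quantizer.
    `groups` is a list of lists of channel indices."""
    total, count = 0.0, 0
    for group in groups:
        values = [v for c in group for v in channels[c]]
        total += sum_sq_error(values, bits)
        count += len(values)
    return total / count

def split_by_range(channels, num_groups):
    """Hypothetical grouping rule: sort channels by dynamic range and cut
    the sorted list into contiguous, equally sized chunks."""
    order = sorted(range(len(channels)),
                   key=lambda c: max(channels[c]) - min(channels[c]))
    size = -(-len(order) // num_groups)  # ceiling division
    return [order[i:i + size] for i in range(0, len(order), size)]

random.seed(0)
num_channels, num_tokens, bits = 48, 16, 4
# Synthetic activations: per-channel standard deviations span two orders
# of magnitude to mimic scale variation across channels.
channels = []
for _ in range(num_channels):
    std = 10 ** random.uniform(-1.0, 1.0)
    channels.append([random.gauss(0.0, std) for _ in range(num_tokens)])

layerwise = grouped_mse(channels, split_by_range(channels, 1), bits)
group8 = grouped_mse(channels, split_by_range(channels, 8), bits)
per_channel = grouped_mse(channels, split_by_range(channels, num_channels), bits)
print(f"layer-wise MSE:  {layerwise:.4f}")
print(f"8 groups MSE:    {group8:.4f}")
print(f"per-channel MSE: {per_channel:.4f}")
```

In this toy setting, sharing one quantizer across all channels forces small-scale channels onto a grid sized for the largest ones, while a modest number of groups recovers much of the gap to the per-channel case. The numbers say nothing about accuracy on ImageNet; they only illustrate why granularity matters when channel scales differ.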

Results on COCO. We show in Table 3 the quantization results for object detection and instance segmentation on COCO [22]. We quantize the backbones of Swin transformers [25] and the convolutional layers in the neck and head of Mask R-CNN [14] and Cascade Mask R-CNN [5]. We observe that PTQ4ViT [40] and APQ-ViT [9], which use layer-wise quantizers for activations, do not perform well. In contrast, IGQ-ViT outperforms the state of the art with only 8 groups, and the quantization performance improves further with more groups. In particular, it provides results nearly identical to the full-precision ones under the 6/6-bit setting. This suggests that scale variations across different channels or tokens are much more critical for object detection and instance segmentation.

:::info

Authors:

(1) Jaehyeon Moon, Yonsei University and Articron;

(2) Dohyung Kim, Yonsei University;

(3) Junyong Cheon, Yonsei University;

(4) Bumsub Ham, a Corresponding Author from Yonsei University.

:::

:::info

This paper is available on arXiv under the CC BY-NC-ND 4.0 Deed (Attribution-Noncommercial-Noderivs 4.0 International) license.

:::