Introduction

Google announced Gemini File Search, and pundits claim it is the death knell for homebrew RAG (Retrieval-Augmented Generation). The reasoning is that app developers no longer need to worry about chunking, embedding, file storage, vector databases, metadata, retrieval optimization, context management, and more. The entire document Q&A stack, which used to be middleware plus application-layer logic, is now absorbed by the Gemini model and its peripheral cloud offerings.

In this article, we will try out Gemini File Search and compare it with a homebrew RAG system in terms of capabilities, performance, cost, flexibility, and transparency, so that you can make an educated decision for your use case. To speed up your development, I have included my example app on GitHub.

Here is the original Google announcement:

Build Your Own Agentic RAG

Traditional RAG – A Refresher

A traditional RAG system consists of a few sequential steps:

- The documents are first chunked, embedded, and inserted into a vector database. Often, related metadata are included in the database entries.

- The user query is embedded and converted into a vector DB search to retrieve the relevant chunks.

- Finally, the original user query and the retrieved chunks (as context) are fed into the AI model to generate the answer for the user.

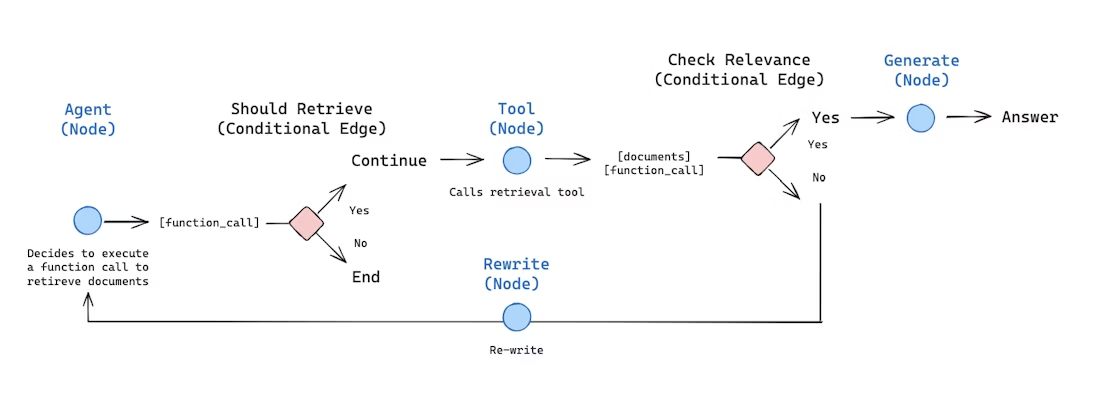
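To make the three steps concrete, here is a toy sketch in plain Python. It is not our production code: the word-count "embedding" is a stand-in for a real embedding model, the in-memory list stands in for a vector database, and all function names are made up for illustration.

```python
import math
import re
from collections import Counter


def chunk_words(text, max_words=40, overlap=10):
    """Split text into windows of max_words words that share `overlap` words."""
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be >= 0 and smaller than max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text):
    """Toy embedding: lowercase word counts (a real system calls an embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def build_index(documents):
    """Step 1: chunk and embed each document, keeping metadata with every entry."""
    index = []
    for source, text in documents.items():
        for chunk_id, chunk in enumerate(chunk_words(text)):
            index.append({"source": source, "chunk_id": chunk_id,
                          "text": chunk, "vector": embed(chunk)})
    return index


def retrieve(index, query, top_k=2):
    """Step 2: embed the query and return the top_k most similar chunks."""
    query_vector = embed(query)
    ranked = sorted(index, key=lambda entry: cosine(query_vector, entry["vector"]),
                    reverse=True)
    return ranked[:top_k]


def build_prompt(query, hits):
    """Step 3: combine the user query and the retrieved chunks into one prompt."""
    context = "\n\n".join(f"[{h['source']} #{h['chunk_id']}] {h['text']}" for h in hits)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

The prompt returned by `build_prompt` is what would be sent to the LLM; a real system swaps `embed` for an embedding API and the list for a vector database.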

Agentic RAG

An agentic RAG system adds a reflect-and-react loop: the agent checks whether the retrieved results are relevant and complete, and rewrites the query when they are not. So, the AI model is used in several places: to rewrite the user query into a vector DB query, to assess whether the retrieval is satisfactory, and finally to generate the answer for the user.
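The control flow of such a loop can be sketched in a few lines. This is a simplified illustration, not the exact flow of any particular framework: the four callables (`rewrite`, `search`, `is_sufficient`, `generate`) are hypothetical placeholders for the LLM and vector DB calls, and `max_rounds` is an assumed safety limit.

```python
from typing import Callable, List


def agentic_answer(
    question: str,
    rewrite: Callable[[str, List[str]], str],
    search: Callable[[str], List[str]],
    is_sufficient: Callable[[str, List[str]], bool],
    generate: Callable[[str, List[str]], str],
    max_rounds: int = 3,
) -> str:
    """Retrieve, reflect, and retry until the context looks good enough."""
    chunks: List[str] = []
    for _ in range(max_rounds):
        # The model rewrites the question into a search query,
        # taking into account what has been retrieved so far.
        query = rewrite(question, chunks)
        for chunk in search(query):
            if chunk not in chunks:
                chunks.append(chunk)
        # Reflection step: stop searching once the context is judged sufficient.
        if is_sufficient(question, chunks):
            break
    # Final generation uses the original question plus everything retrieved.
    return generate(question, chunks)
```

Capping the number of rounds keeps latency and token cost bounded when the documents simply do not contain the answer.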

An Example Use Case – Camera Manual Q&A

Many new photographers are interested in using old film cameras. One of the main challenges for them is that many old cameras have unique and sometimes quirky ways of operating, even for basic things such as loading film and resetting the film frame counter. Worse, you can even damage the camera if you do certain things in the “wrong order.” Therefore, accurate and exact instructions from a camera manual are essential.

A camera manual archive hosts 9,000 old camera manuals, mostly scanned PDFs. In an ideal world, you would just download the ones for your camera, study them, get familiar, and be done with it. But we are all modern humans who are neither patient nor good at planning ahead. So, we need Q&A against camera manual PDFs on the go, e.g., in a phone app.

This fits the agentic RAG scope very well. I assume the same approach applies to many other hobbies (musical instruments, Hi-Fi equipment, vintage cars) that require finding information in ancient user manuals.

Homebrew RAG for PDF Q&A

Our RAG system was implemented earlier this year based on the LlamaIndex RAG workflow with substantial customization:

- Use the Qdrant vector database: good price-performance ratio, supports metadata.

- Use Mistral OCR API to ingest the PDF: good performance in understanding complex PDF files with illustrations and tables.

- Keep images of each PDF page so users can directly access a graphic illustration of complex camera operations, in addition to text instructions.

- Add an agentic reflect-and-react loop based on the Google/LangChain example for agentic search.

How About Multi-Modal LLMs?

Since 2024, multi-modal LLMs have become really good. An obvious alternative approach is to feed the user query and the entire PDF to the LLM and get an answer. This is a much simpler solution that does not require maintaining any vector DB or middleware.

Our main concern was cost, so we did a cost calculation and comparison. The short answer is that RAG is faster, more efficient, and much less costly once the number of user queries per day is greater than 10. So, directly feeding the user query and the entire matching PDF to a multi-modal LLM only really works for prototyping or very low-volume use (a few queries a day).
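The shape of that comparison is a simple break-even calculation: RAG carries a fixed daily cost (vector DB, hosting) but a small per-query cost, while the full-PDF approach has no fixed cost but a large per-query cost. Here is a sketch of the formula; the function names are made up, and any numbers you plug in are your own, not the figures from our calculation.

```python
def break_even_queries_per_day(rag_fixed_cost_per_day,
                               rag_cost_per_query,
                               full_pdf_cost_per_query):
    """Daily query volume above which RAG is cheaper, or None if it never is."""
    saving_per_query = full_pdf_cost_per_query - rag_cost_per_query
    if saving_per_query <= 0:
        return None  # the full-PDF approach is never more expensive per query
    return rag_fixed_cost_per_day / saving_per_query


def daily_cost(queries_per_day, fixed_cost_per_day, cost_per_query):
    """Total cost per day for a given query volume."""
    return fixed_cost_per_day + queries_per_day * cost_per_query
```

For example, with a placeholder fixed cost of $0.60 per day and a placeholder saving of $0.05 per query, the break-even point is about 12 queries per day.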

At the time, that confirmed our belief that homebrew RAG was still critically important. Then Google released Gemini File Search, and I think the decision is not that simple anymore.

The Gemini File Search – An Example

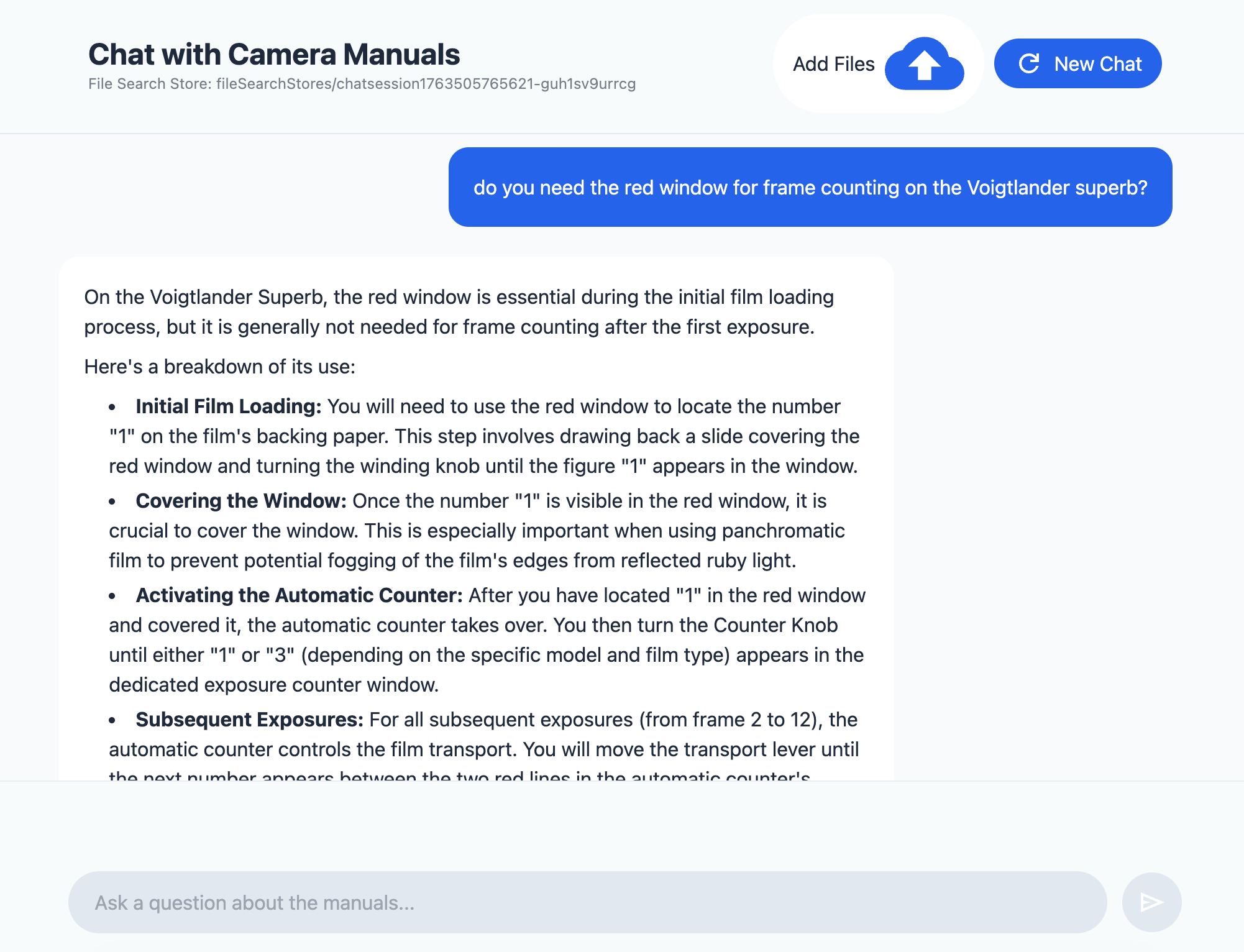

I built an example app for the camera manual Q&A use case, based on the Google AI Studio example. It is open source on GitHub, so you can try it very quickly. Here is a screenshot of the user interface and the chat thread.

Example Q&A with PDFs using Gemini File Search:

https://github.com/zbruceli/pdf_qa

The main steps involved in the source code:

- Create a File Search Store, and persist it across different sessions.

- Upload Multiple Files Concurrently, and the Google backend will handle all the chunking and embedding. It even creates sample questions for the users. In addition, you can modify the chunking strategy and upload custom metadata.

- Run a Standard Generation Query (RAG): behind the scenes, it is agentic and can actually assess the quality of results before generating the final answer.
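For readers who prefer Python, the same three steps might look roughly like the sketch below. This is not the code from the example app. The method names follow my understanding of the `google-genai` Python SDK as described in the File Search API doc and should be checked against the current version; the store display name, the model name, and the `index_and_ask` helper itself are placeholders. For brevity, it uploads the files one after another instead of concurrently.

```python
import time


def index_and_ask(client, pdf_paths, question,
                  store_display_name="camera-manuals",
                  model="gemini-2.5-flash",
                  poll_seconds=5):
    """Create a store, index the PDFs, and ask one question against them.

    `client` is expected to behave like google.genai.Client (assumed SDK surface).
    """
    # Step 1: create the File Search store. Persist store.name (e.g., in your
    # app settings) to reuse the same store across sessions.
    store = client.file_search_stores.create(
        config={"display_name": store_display_name}
    )

    # Step 2: upload the files; the backend handles chunking and embedding.
    operations = [
        client.file_search_stores.upload_to_file_search_store(
            file=path,
            file_search_store_name=store.name,
            config={"display_name": path},
        )
        for path in pdf_paths
    ]
    # Indexing is asynchronous, so poll each operation until it is done.
    for operation in operations:
        while not operation.done:
            time.sleep(poll_seconds)
            operation = client.operations.get(operation)

    # Step 3: a standard generation call with the File Search tool attached.
    response = client.models.generate_content(
        model=model,
        contents=question,
        config={"tools": [{"file_search": {
            "file_search_store_names": [store.name]}}]},
    )
    return store.name, response.text
```

With the real SDK, `client` would be created with `from google import genai` and `genai.Client()`, with the API key configured in the environment.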

More Developer Information

Gemini File Search API doc

https://ai.google.dev/gemini-api/docs/file-search

Tutorial by Phil Schmid

https://www.philschmid.de/gemini-file-search-javascript

Pricing of Gemini File Search

- Developers are charged for embeddings at indexing time based on existing embeddings pricing ($0.15 per 1M tokens).

- Storage is free of charge.

- Query time embeddings are free of charge.

- Retrieved document tokens are charged as regular context tokens.
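The one-time indexing cost is easy to estimate from the embedding price above. In this small sketch, the $0.15 rate comes from the pricing list; the helper names and the tokens-per-document figure in the example are assumptions for illustration only.

```python
EMBEDDING_PRICE_PER_MILLION_TOKENS = 0.15  # USD, from the pricing list above


def estimate_corpus_tokens(num_documents, tokens_per_document):
    """Rough corpus size, assuming every document has about the same length."""
    return num_documents * tokens_per_document


def indexing_cost_usd(total_tokens,
                      price_per_million=EMBEDDING_PRICE_PER_MILLION_TOKENS):
    """One-time embedding cost for indexing total_tokens tokens."""
    return total_tokens / 1_000_000 * price_per_million
```

For example, if one were to index all 9,000 manuals at a hypothetical 20,000 tokens each, that is 180 million tokens, or about $27 in one-time embedding cost.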

So, Which Is Better?

Since Gemini File Search is still fairly new, my assessment is based purely on about a week of initial testing.

Capability Comparison

Gemini File Search has all the basic features of a homebrew RAG system:

- Chunking (can configure size and overlap)

- Embedding

- Vector DB supporting custom metadata input

- Retrieval

- Generative output

And more advanced features under the hood:

- Agentic capability to assess retrieval quality

If I have to nitpick, image output is currently missing. So far, the output of Gemini File Search is limited to text only, while a custom-built RAG can return images from the scanned PDF. I imagine it won’t be too difficult for Gemini File Search to offer multi-modal output in the future.

Performance Comparison

- Accuracy: on par. There is no tangible improvement in retrieval or generation quality.

- Speed: mostly on par. Gemini File Search might be slightly faster, since the vector DB and LLM are both “sitting” inside the Google Cloud infrastructure.

Cost Comparison

Finally, Gemini File Search is a fully hosted system that might cost less than a homebrew system.

The embedding of documents runs only once, and it costs $0.15 per million tokens. This is a fixed cost that is common to all RAG systems, and it can be amortized over the lifespan of the document Q&A application. In my use case of camera manuals, this fixed cost is a very small portion of the total cost.

Since Gemini File Search offers “free” file storage and database, this is a saving over the homebrew RAG system.

Inference cost is about the same, since the numbers of input tokens (question plus vector search results as context) and output tokens are comparable between Gemini File Search and the homebrew system.

Flexibility & Transparency for Tuning and Debugging

Naturally, Gemini File Search marries you to Gemini AI models for embedding and inference. You are essentially trading flexibility and choice for convenience.

In terms of tuning your RAG system, Gemini File Search provides some level of customization. For example, you can define a chunkingConfig during upload to specify parameters such as maxTokensPerChunk and maxOverlapTokens, and use customMetadata to attach key-value pairs to the document.
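As an illustration, an upload configuration with these options could be assembled as shown below. Treat the exact shape as an assumption to verify against the File Search API doc: the snake_case keys are what I expect the Python SDK to use for the camelCase names above, the `white_space_config` wrapper is taken from my reading of that doc, and `build_upload_config` is a hypothetical helper.

```python
def build_upload_config(display_name,
                        max_tokens_per_chunk=200,
                        max_overlap_tokens=20,
                        metadata=None):
    """Build an upload config dict with chunking settings and custom metadata.

    Key names are assumed Python SDK equivalents of chunkingConfig,
    maxTokensPerChunk, maxOverlapTokens, and customMetadata.
    """
    if not 0 <= max_overlap_tokens < max_tokens_per_chunk:
        raise ValueError("overlap must be >= 0 and smaller than the chunk size")

    custom_metadata = []
    for key, value in (metadata or {}).items():
        # Numbers go into a numeric field; everything else is stored as a string.
        if isinstance(value, (int, float)) and not isinstance(value, bool):
            custom_metadata.append({"key": key, "numeric_value": value})
        else:
            custom_metadata.append({"key": key, "string_value": str(value)})

    return {
        "display_name": display_name,
        "chunking_config": {
            "white_space_config": {
                "max_tokens_per_chunk": max_tokens_per_chunk,
                "max_overlap_tokens": max_overlap_tokens,
            }
        },
        "custom_metadata": custom_metadata,
    }
```

The resulting dict would be passed as the `config` argument of the upload call. Metadata such as a camera brand or model year is what makes it possible to restrict retrieval to the manuals of one camera later on.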

However, there seems to be no way to get an internal trace of the Gemini File Search system for debugging and performance tuning. So, you are using it more or less as a black box.

Conclusions

Google’s Gemini File Search is good enough for most applications and most people at a very attractive price. It is super easy to use and has minimal operational overhead. It is not only good for quick prototyping and mock-ups, but also good enough for a production system with thousands of users.

However, there are a few scenarios in which you might still consider a homebrew RAG system:

- You don’t trust Google to host your proprietary documents.

- You need to return images to the user from the original documents.

- You want full flexibility and transparency in terms of which LLM to use for embedding and inference, how to do chunking, how to control the agentic flow of the RAG, and how to debug potential retrieval quality issues.

So, give the Gemini File Search a try and decide for yourself. You can either use the Google AI Studio as a playground, or you can use my example code on GitHub. Please comment below on your findings for your use cases.