Table Of Links

Abstract

Executive summary

Acknowledgments

1. Introduction and Motivation

- Relevant literature

- Limitations

2. Understanding the AI Supply Chain

- Background history

- Inputs necessary for development of frontier AI models

- Steps of the supply chain

3. Overview of the integration landscape

- Working definitions

- Integration in the AI supply chain

4. Antitrust in the AI supply chain

- Lithography and semiconductors

- Cloud and AI

- Policy: sanctions, tensions, and subsidies

5. Potential drivers

- Synergies

- Strategically harden competition

- Governmental action or industry reaction

- Other reasons

6. Closing remarks and open questions

- Selected Research Questions

References

2.1 Background history

This section provides an overview of the AI supply chain. Readers already familiar with it may wish to skip to the next section.

In 1947 at Bell Telephone Laboratories, a team led by physicists John Bardeen, Walter Brattain, and William Shockley created the first transistor, a semiconductor device used to amplify or switch electronic signals.

Until then, the electronics industry had been dominated by bulkier and less energy-efficient vacuum tubes. The invention won its creators the Nobel Prize in Physics in 1956 and laid the foundation for increasingly powerful digital systems (Schaller, 1997). That same year, Dartmouth College held a conference that established artificial intelligence as a distinct academic discipline. The conference was organized by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, whose proposal stated that “every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it” (McCarthy et al., 1955).

Although the initial optimism about AI’s potential was later met with challenges and periods of skepticism known as “AI winters”, over time the semiconductor industry and the field of artificial intelligence have developed a deeply interconnected relationship. The evolution of the semiconductor industry has allowed AI models to go from simple rule-based systems to deep learning models with billions of parameters (LeCun, Bengio & Hinton, 2015).

In 2006, Microsoft researchers (Chellapilla et al., 2006) recognized that convolutional neural networks (CNNs), designed in the 1990s to process images, could be trained more efficiently by parallelizing the training on NVIDIA’s Graphics Processing Units (GPUs), chips first designed for video games. Building on this idea, AlexNet won the ImageNet image-classification competition in 2012 (Krizhevsky et al., 2012).

With the introduction of transformers in 2017 by Google Brain researchers, natural language processing tasks – which are sequential in nature – became more easily parallelized using hardware accelerators, allowing breakthroughs in machine learning.

2.2 Inputs necessary for development of frontier AI models

State-of-the-art AI labs develop foundation models, which are large models that can be applied across a wide range of applications (see, e.g., Bommasani et al., 2021). The development of these models requires three primary inputs: data, algorithms, and computing resources (see, e.g., Buchanan, 2020). The rise of deep learning since the early 2010s has been driven by the availability of large amounts of data, advances in neural network architectures, and substantial improvements in computing power, henceforth referred to as compute.

2.2.1 Data

Vast amounts of data are necessary for training frontier AI models. AI labs commonly use large public datasets, like Common Crawl and Wikipedia, while often supplementing these with proprietary datasets, especially for niche applications. The quality of data is critical; for supervised learning approaches, datasets often need to be meticulously labeled to train the models effectively; other approaches such as self-supervised learning also commonly depend on human-labeling efforts at different stages. Over time, there has been an exponential increase in data availability, which has helped the advancement of more complex and accurate models (see, e.g., Villalobos et al., 2022).

2.2.2 Algorithms

Frontier AI models are based on different neural network architectures, designed to learn patterns in data by gradually optimizing model parameters to minimize a loss function. According to Erdil & Besiroglu (2022), the algorithmic efficiency of neural networks doubles every 9 months, much faster than Moore’s law. This means that current models can achieve performance similar to that of older ones with less compute and data. Both algorithms and data can be considered non-rival but excludable; that is, multiple users can utilize the same algorithms and data simultaneously, yet it is possible to prevent others from using them.
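To make the comparison with Moore’s law concrete, the following is a minimal arithmetic sketch. It assumes smooth exponential improvement, takes the 9-month doubling time cited above at face value, and uses the two-year doubling period commonly associated with Moore’s law. The function name `improvement_factor` is hypothetical and chosen only for illustration.

```python
def improvement_factor(months_elapsed: float, doubling_months: float) -> float:
    """Multiplicative gain after `months_elapsed`, assuming one doubling
    every `doubling_months` (an idealized, smooth exponential trend)."""
    return 2.0 ** (months_elapsed / doubling_months)


ALGORITHMIC_DOUBLING_MONTHS = 9.0   # figure cited in the text
MOORE_DOUBLING_MONTHS = 24.0        # assumption: "every two years"

for months in (9, 18, 36):
    algo = improvement_factor(months, ALGORITHMIC_DOUBLING_MONTHS)
    moore = improvement_factor(months, MOORE_DOUBLING_MONTHS)
    # 1 / algo is the share of the original compute needed to match
    # the older model's performance under this idealized trend.
    print(f"{months:>2} months: algorithms x{algo:.2f}, "
          f"Moore's law x{moore:.2f}, compute share {1 / algo:.4f}")
```

Under these assumptions, three years of algorithmic progress corresponds to four doublings, while the two-year trend yields only one and a half doublings over the same period.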

2.2.3 Compute

Computing resources (short: compute) are essential for both the training and deployment of AI models. These often include specialized hardware such as AI accelerators, which are chips optimized for AI computations. Graphics Processing Units (GPUs) are among the most widely used types of AI accelerators (Reuther et al., 2023). Field-Programmable Gate Arrays (FPGAs) are another type of accelerator, which can be reprogrammed after manufacture to suit specific computational tasks.

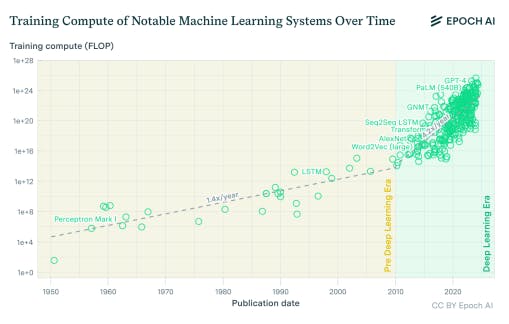

Application-Specific Integrated Circuits (ASICs), like Google’s Tensor Processing Units (TPUs), are designed for a specific function. According to Sevilla et al. (2022), the amount of compute required by frontier AI systems has increased by a factor of 4.2 every year since 2010. Unlike data and algorithms, computing resources are rivalrous: one person using a chip for a given purpose directly prevents others from using it at the same time.

This rivalry, along with the ease of measuring and tracking compute compared to the other inputs needed for AI development, positions compute as a key element in AI governance proposals. In addition to the chips themselves, the operation of data centers and the infrastructure necessary to maintain them are of great importance to the overall functioning of the industry.

Data centers are large-scale facilities that demand significant amounts of electricity and water. Data centers, often operated by cloud providers, are where the training of large foundation models actually happens, usually in the same facilities that host non-AI applications. As the size of these facilities is pushed to the limit, it is becoming increasingly important to manage how the chips are put together and kept at adequate temperatures (Pilz and Heim, 2023).
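The growth rate attributed to Sevilla et al. (2022) earlier in this subsection can be converted into a doubling time with a short calculation. The sketch below assumes steady exponential growth at the cited factor of 4.2 per year; the function names are hypothetical and used only for illustration.

```python
import math


def doubling_time_months(annual_growth_factor: float) -> float:
    """Months needed to double, assuming steady exponential growth."""
    return 12.0 * math.log(2.0) / math.log(annual_growth_factor)


def cumulative_growth(annual_growth_factor: float, years: float) -> float:
    """Total multiplicative growth after `years` at a constant annual factor."""
    return annual_growth_factor ** years


ANNUAL_FACTOR = 4.2  # figure cited in the text

print(f"Doubling time: {doubling_time_months(ANNUAL_FACTOR):.1f} months")
print(f"Growth over 5 years: {cumulative_growth(ANNUAL_FACTOR, 5):,.0f}x")
```

Under this assumption, a factor of 4.2 per year corresponds to a doubling roughly every six months, which helps explain why physical chips and data center capacity feature so prominently in discussions of the supply chain.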

2.2.4 Scaling laws

AI models generally exhibit a strong relationship between performance on their training objectives and factors such as model size, data, and compute usage (Clark et al., 2022). Kaplan et al. (2020) note that “performance has a power-law relationship with each of the three scale factors N [number of parameters], D [dataset size], C [compute utilized],” while it “depends […] weakly on model shape.”

Moreover, the relationship between pretraining compute budget and held-out loss is typically linear on a log-log scale, which is the signature of a power law (Hoffmann et al., 2022). Although performance on training objectives (usually next-token prediction) does not directly translate to real-world capabilities such as code generation or question answering, larger models are increasingly used due to the observed association between training performance and practical capabilities (see, e.g., Radford et al., 2019; Brown et al., 2020).
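The connection between a power law and a straight line on a log-log plot can be illustrated with a small sketch. The constants below (`scale` and `exponent`) are hypothetical values chosen for illustration and are not the fitted values from Kaplan et al. (2020) or Hoffmann et al. (2022). The sketch also omits any irreducible loss term, which is a simplifying assumption.

```python
import math


def power_law_loss(compute: float, scale: float = 10.0, exponent: float = 0.05) -> float:
    """Synthetic loss of the form L(C) = scale * C ** (-exponent).
    Both constants are hypothetical, not taken from any paper."""
    return scale * compute ** (-exponent)


def fit_log_log(xs, ys):
    """Ordinary least squares on (log10 x, log10 y); returns (slope, intercept)."""
    lx = [math.log10(x) for x in xs]
    ly = [math.log10(y) for y in ys]
    mean_x = sum(lx) / len(lx)
    mean_y = sum(ly) / len(ly)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    variance = sum((a - mean_x) ** 2 for a in lx)
    slope = covariance / variance
    return slope, mean_y - slope * mean_x


# Hypothetical compute budgets spanning several orders of magnitude.
budgets = [10.0 ** k for k in range(18, 25)]
losses = [power_law_loss(c) for c in budgets]

slope, intercept = fit_log_log(budgets, losses)
print(f"slope = {slope:.4f}, intercept = {intercept:.4f}")
```

Because log L = log(scale) - exponent * log C, the fitted slope recovers the negative of the exponent and the intercept recovers log10 of the scale. Each tenfold increase in compute therefore reduces the loss by the same constant factor, which is why gains appear steady on a log-log plot even as their absolute size shrinks.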

2.2.5 Talent

In addition to this triad of data, algorithms, and compute, talent is also important for the development of frontier AI models. Technical expertise and talent serve as major bottlenecks in the AI industry overall (see, e.g., Gehlhaus et al., 2023). Talent is often concentrated in a few key hotspots of expertise and innovation, as highlighted by the economic literature on technological clusters (see, e.g., Kerr & Robert-Nicoud, 2020).

2.3 Steps of the supply chain

The AI market is characterized by global reach, complexity, concentration, high fixed costs, and significant investments in research and development (R&D). Roughly from downstream to upstream, the key steps that make up this supply chain are i) AI laboratories, ii) cloud providers, iii) chip designers, iv) chip fabricators, and v) lithography companies that build the machines used in the fabrication of AI accelerators. See Pilz’s (2023) visualization of the advanced AI supply chain. As featured in that diagram, there are other relevant steps, such as core IP, OSAT (Outsourced Semiconductor Assembly and Test), and suppliers of key inputs to lithography companies, which this report does not focus on.

2.3.1 AI labs and cloud providers

AI labs design, train and deploy frontier AI models. Four major AI labs that are actively engaged in the pursuit of developing Artificial General Intelligence (AGI) are OpenAI, Google DeepMind, Anthropic and xAI. In addition to them, Microsoft, Meta, Apple, and Mistral develop large foundation models. Major tech companies like Google, Amazon, and Microsoft offer both consumer and business cloud services. Cloud services are widely used in developing and deploying AI models.

These platforms enable developers and businesses to access pre-built AI tools, frameworks, and APIs, allowing them to use AI capabilities without needing extensive infrastructure investment. The AI industry is significantly influenced by big tech in other ways. For instance, Meta created PyTorch and Google developed TensorFlow, respectively the first and second most widely used frameworks for developing AI models.

2.3.2 The semiconductor industry

The semiconductor industry involves the design and manufacturing of chips, as well as the machines needed to produce them. The process starts with getting silicon from sand and purifying it using specialized chemical methods. Silicon is a key material that allows us to make transistors, which are small electronic parts that can represent binary code and form the core of any computer system.

These chips are made from larger pieces called wafers and are carefully designed to fit as many transistors as possible (see, e.g., Proia, 2019). The semiconductor industry originated primarily in Silicon Valley during the 1960s. Gordon Moore, co-founder of Intel, famously predicted that the number of transistors on chips would double every two years, a forecast that became known as Moore’s Law.

In pursuit of this goal, companies have focused on reducing the node size of chips, a term that refers to a specific manufacturing process and the size of the features it can create, usually related to the size of a transistor’s gate. Smaller nodes allow more transistors to be packed closely together, which usually means better performance and efficiency for the chip.

While the terminology is not used consistently in the industry, the node size is typically expressed in nanometers and conveys the technological generation of the product. Early industry giants such as IBM and Texas Instruments were integrated companies, handling everything from machine manufacturing to chip design and fabrication. A significant shift occurred in 1987 with the founding of TSMC (Taiwan Semiconductor Manufacturing Company) by Morris Chang. In collaboration with the Taiwanese government and Philips, TSMC specialized in manufacturing, paving the way for the prevalence of fabless companies, which focus on design while outsourcing fabrication (Chiang, 2023).

Nowadays, the manufacturing of semiconductors is mostly concentrated in East Asia, including countries such as Taiwan, Japan, South Korea, and China (see, e.g., Thadani & Allen, 2023). In the early 2000s, the semiconductor industry saw a shift with the introduction of extreme ultraviolet (EUV) lithography technology, used for the production of advanced chips. While Nikon and Canon had been dominant players in lithography, the emergence of ASML, a company from the Netherlands, turned it into a de facto monopolist in the EUV sector, which is essential for manufacturing the most advanced chips.

This underscores how technological advancements can lead to market concentration, influencing the overall industry dynamics. In this report, the main focus will be on a type of chip called an AI accelerator. These chips are designed specifically for AI workloads and optimized for operations such as matrix multiplications and tensor operations, which are very common in AI training and inference. In contrast to CPUs, which prioritize general-purpose processing and are optimized for a broad range of computing tasks including logical, arithmetic, and control operations, AI accelerators are built specifically to handle the high-throughput, parallel computations commonly found in machine learning tasks.
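A small sketch can show why matrix multiplication lends itself to parallel hardware. In the plain-Python version below, every entry of the result is an independent dot product, so none of them needs to wait for another. This is a conceptual illustration only; the helper names are hypothetical, and real accelerators rely on specialized hardware units and libraries rather than code like this.

```python
def dot(row, col):
    """Dot product of two equal-length sequences."""
    return sum(a * b for a, b in zip(row, col))


def matmul(a, b):
    """Multiply matrix `a` (n x k) by matrix `b` (k x m), given as lists of rows.

    Each output entry depends only on one row of `a` and one column of `b`,
    so all n * m entries could in principle be computed at the same time.
    """
    columns = list(zip(*b))  # transpose `b` to iterate over its columns
    return [[dot(row, col) for col in columns] for row in a]


a = [[1, 2],
     [3, 4]]
b = [[5, 6],
     [7, 8]]

print(matmul(a, b))  # [[19, 22], [43, 50]]
```

A CPU would typically work through these dot products a few at a time, whereas an accelerator is built to evaluate very many of them concurrently.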

NVIDIA’s GPUs are the dominant hardware accelerators in the AI industry. Google has its own solution, an ASIC known as the Tensor Processing Unit (TPU), available only through cloud services. The semiconductor industry also uses ASICs for particular AI applications such as recommendation systems, computer vision, and natural language processing. Recently, there has been a trend toward creating chips specialized for either training or deployment tasks (Reuther, 2022).

2.3.3 Other relevant segments

The semiconductor supply chain includes important segments like core IP (Intellectual Property) and OSAT (Outsourced Semiconductor Assembly and Test). Core IP companies create basic reusable chip designs, known as IP cores. These designs are licensed to chip designers like NVIDIA. Key players in this area are ARM, Synopsys, and Cadence, among others (Design Reuse, 2020).

After wafer fabrication by foundries, OSAT companies handle the cutting, assembling, packaging, and testing of chips to turn them into finished products for market release. This stage is crucial for the final quality and efficiency of chips; important companies include ASE Technology and Amkor Technology. Though core IP and OSAT are important segments, this paper covers them only briefly and focuses on other parts of the supply chain.

:::info

Author:

Tomás Aguirre

:::

:::info

This paper is available on arXiv under the CC BY 4.0 Deed (Attribution 4.0 International) license.

:::