Whether we were ready for it or not, generative AI technology has circulated far and wide across the internet. Most of the major tech brands are pushing their own AI-powered tools and assistants, and some of these chatbots even outperform ChatGPT. However, in an unfortunate side effect, those tools and assistants have found their way into the hands of those who probably shouldn’t have them. Scammers of just about every variety have taken to AI quite well, using it to both enhance their existing phishing scams and escalate efforts like identity theft to worrying new heights.

The notion of a scammer using AI tools to make convincing facsimiles of you and your loved ones, or tailoring their scams directly to your interests, is naturally very frightening. However, just as nearly every online scam has a visible string to pull on, so do those enhanced by AI. AI is powerful, but it is definitely not infallible. If you know the right signs to look for and employ some common-sense safety measures, you can make it much harder for even AI-enhanced scammers to steal your money or identity.

AI-enhanced phishing scams

Phishing scams are one of the most traditional forms of online scamming. A scammer sends you an email, chat request, or other form of communication and attempts to trick you into clicking a fake link, which then solicits you for valuable information like login credentials or banking info. Regular phishing scams are already bad enough, but AI tools have allowed scammers to fine-tune their methods further.

By feeding large quantities of genuine messages and statements from major brands and companies into an LLM, scammers can swiftly generate phishing emails that closely mimic the way those particular companies talk to users and customers. Where a phishing email might once have had obvious typos or bizarre formatting, with AI assistance it can look nearly indistinguishable from the genuine article.

However, anyone who has seen enough generated text from a chatbot knows that LLMs have their own distinct quirks. If you receive a suspicious, unsolicited email from a site or business you’ve previously used, try pulling up a previous email and comparing it to the suspicious one. You may notice some small inconsistencies in language and word choice. Additionally, check the email address the suspicious message came from. If it’s different from the address that brand usually uses to contact you, that’s a big red flag.
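For readers comfortable with a little scripting, the address check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names and the example domain `example-bank.com` are hypothetical, and the "From" line of an email can be forged, so a match here does not prove a message is genuine. A mismatch, however, is the red flag described above.

```python
from email.utils import parseaddr


def sender_domain(from_header: str) -> str:
    """Return the lowercased domain of the address in a From header."""
    # parseaddr splits '"Name" <user@host>' into (display name, address).
    _display_name, address = parseaddr(from_header)
    # Everything after the last "@" is the domain; empty if there is none.
    return address.rpartition("@")[2].lower()


def matches_known_sender(from_header: str, known_domain: str) -> bool:
    """Check whether the sender's domain is the known domain or a subdomain of it.

    known_domain is an assumption: the domain you have seen in earlier,
    genuine emails from the same brand.
    """
    domain = sender_domain(from_header)
    known = known_domain.lower()
    return domain == known or domain.endswith("." + known)


if __name__ == "__main__":
    genuine = '"Example Bank" <alerts@example-bank.com>'
    lookalike = '"Example Bank" <alerts@example-bank.com.secure-login.net>'
    print(matches_known_sender(genuine, "example-bank.com"))
    print(matches_known_sender(lookalike, "example-bank.com"))
```

Note that the display name ("Example Bank") is ignored on purpose, since a scammer can set it to anything. Only the domain after the final "@" is compared, which is why the lookalike address fails the check even though it contains the real brand's domain as a prefix.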

AI-enhanced spear phishing and catfishing

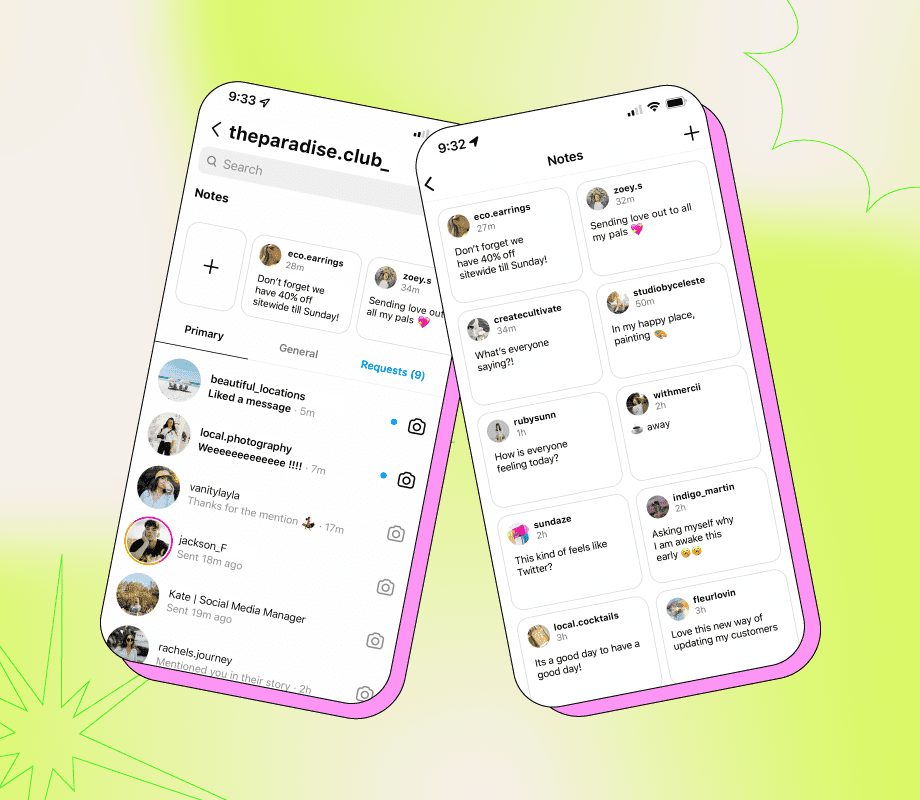

In addition to traditional phishing scams, scammers have also utilized AI to enhance their efforts at spear phishing and catfishing. Spear phishing is when a scammer tailors their scam to your publicly available personal information, such as what you’ve posted to a personal social media account. Catfishing is a similar scam in which a scammer creates a false identity in an effort to get close to you and solicit money, a tactic often used for romance scams.

As with regular phishing scams, AI can be used to enhance these other kinds of scams. Rather than feeding an LLM corporate messages, though, a scammer may feed it your information, scraped from your social media accounts, in an effort to create a virtual person who just so happens to share all of your hobbies and interests. These tactics could also be used to create a facsimile of you in an effort to target your friends or family members.

While it’s unpleasant to treat everyone on every social media platform as though they’re out to get you, it’s unfortunately something of a necessary precaution. If you’re contacted by a stranger via direct message or email, treat them with a healthy degree of skepticism, and immediately break contact if they ask you for money, try to pressure you into investing, or urge you to purchase something like shady products or crypto. In case a scammer tries to mimic you with AI, give your friends and family members a secondary, private means of contacting you ahead of time. If they ever receive a message from “you” that seems off-base, they should contact you through this second method to verify.

Voice cloning

A classic scam conducted over the phone involves the scammer calling someone, frequently an elderly individual who may be hard of hearing, and saying “it’s me, it’s me,” and waiting for them to drop a name they can latch onto. The obvious weakness of this scam is that, more often than not, the scammer sounds nothing like the person they’re claiming to be. This is another avenue where scammers have found AI quite useful.

AI has the well-advertised ability to mimic human voices by analyzing audio clips. If there is a lot of audio of your voice online from videos or social media posts, a scammer could feed that audio into a voice-cloning tool to create a fairly convincing mockup of your voice and speech patterns. This mockup could be used to solicit money from your friends or family members. In an even darker example, some scammers have used these mockups to convince individuals that their family members have been taken hostage, and then demanded ransom.

As with catfishing, a good countermeasure for cloned voices of your friends or family is to have a secondary means of communication you can use when you’re unsure whether a call is authentic. This secondary channel could also prove invaluable if you find yourself in that dark circumstance where a scammer claims to have kidnapped a loved one. If the cloned voice is merely trying to talk to you, try asking a question that only you and the person it claims to be could answer, something you’re sure they haven’t posted publicly online. Odds are good the caller will either fumble the answer and hang up or become angry and try to pressure you, which is a good time for you to hang up.

Imposter deepfakes

In addition to voices, AI has proven itself adept at generating convincing video footage. False footage of a specific individual created this way is commonly known as a deepfake. Again, this is accomplished by combing through large quantities of data to assemble the fake, specifically visual data like videos posted online. Unsurprisingly, this capability has also been put toward malicious purposes.

Scammers will create deepfakes of popular online influencers, celebrities, or other individuals who have a lot of video data to draw from. These deepfakes can then be used to send private video messages to targeted individuals, or to create public-facing videos with trap links for things like contests or giveaways. One scammer managed to trick an individual by staging a fake conference call with what appeared to be high-ranking executives from the victim’s workplace. Theoretically, this technique could also be used to duplicate individuals like friends or family, though this would probably be harder than duplicating a celebrity unless the friend or family member in question posts a comparable amount of personal video.

If you happen to receive a video from an influencer you follow that’s not coming from their actual account, it is almost certainly a fake, even if it looks like them. You should be particularly suspicious of direct, unsolicited communications from such individuals. If someone tries to host a video call with you to convince you of their authenticity, that’s an opportunity for you to expose them through one of AI’s weaknesses: ask them to turn their head or perform some manner of complex hand motion. As powerful as AI has become, it often still struggles with those kinds of fine details, and the deepfake’s face or hand may morph into an unpleasant mishmash.