The FACTS Benchmark Suite, a new industry benchmark for systematically evaluating the factual accuracy of large language models, has been released. Developed by the FACTS team in collaboration with Kaggle, the suite expands earlier work on factual grounding and introduces a broader, multi-dimensional framework for measuring how reliably language models produce factually correct responses across different usage scenarios.

The FACTS Benchmark Suite builds on the original FACTS Grounding Benchmark and adds three new benchmarks: Parametric, Search, and Multimodal. Together with an updated Grounding Benchmark v2, the suite evaluates factuality across four dimensions that reflect common real-world model usage. In total, the benchmarks comprise 3,513 curated examples, split between public and private evaluation sets. Kaggle manages the held-out private sets, evaluates participating models, and publishes results through a public leaderboard. Overall performance is reported as the FACTS Score, calculated as the average accuracy across all benchmarks and both data splits.
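As a rough illustration of how such an aggregate could be computed, the sketch below takes an unweighted mean over the four benchmarks and both splits. The accuracy values are made-up placeholders, not published results, and the `facts_score` helper and the equal weighting are assumptions; the official calculation may differ, for example by weighting splits by their number of examples.

```python
from statistics import mean

# Placeholder accuracies (fractions in [0, 1]); NOT real leaderboard numbers.
accuracies = {
    "grounding":  {"public": 0.70, "private": 0.66},
    "parametric": {"public": 0.60, "private": 0.64},
    "search":     {"public": 0.80, "private": 0.76},
    "multimodal": {"public": 0.45, "private": 0.43},
}


def facts_score(acc):
    """Hypothetical helper: unweighted mean across benchmarks and splits."""
    # Average the public and private accuracy within each benchmark first,
    # then average those per-benchmark values into a single score.
    per_benchmark = [mean(splits.values()) for splits in acc.values()]
    return mean(per_benchmark)


score = facts_score(accuracies)
print(f"Illustrative FACTS Score: {score:.1%}")
```

Because every benchmark here has exactly two splits, this gives the same result as a flat mean over all eight values. A weighted variant would need the per-split example counts, which are not given above.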

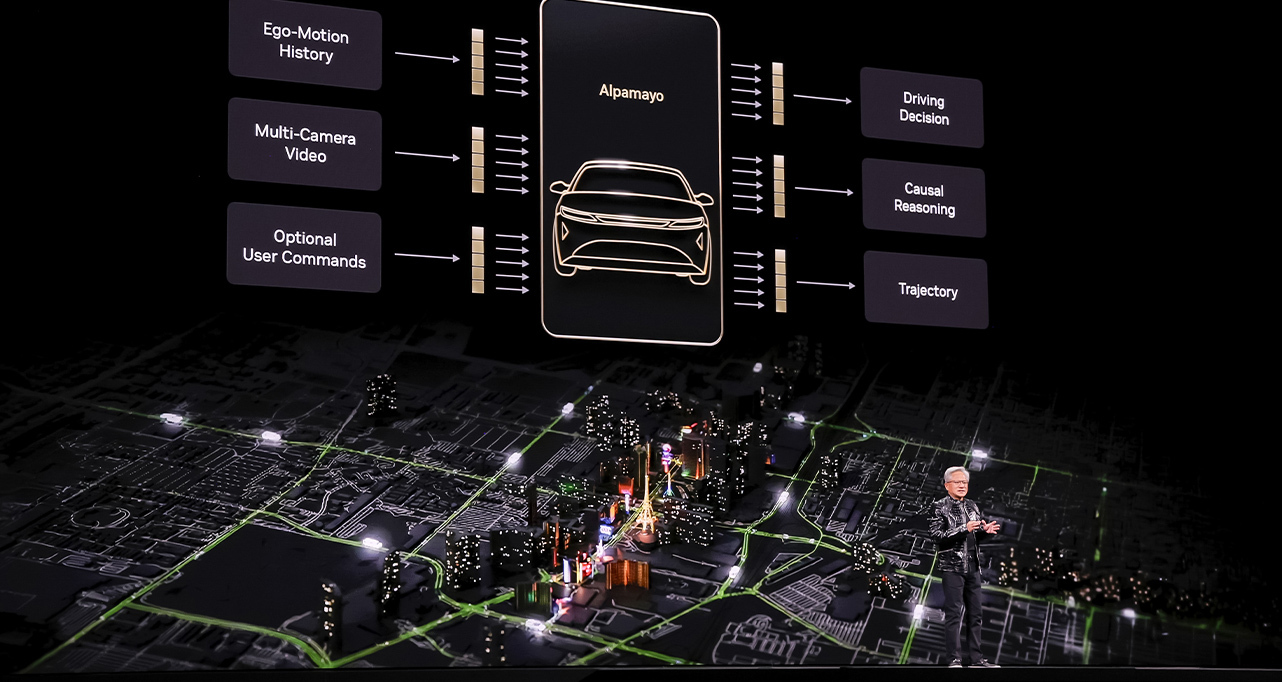

The Parametric Benchmark focuses on a model’s ability to answer fact-based questions using internal knowledge alone, without access to external tools. Questions resemble trivia-style prompts commonly answerable from sources such as Wikipedia. The Search Benchmark evaluates whether models can correctly retrieve and synthesize information using a standardized web search tool, often requiring multiple retrieval steps to resolve a single query. The Multimodal Benchmark tests factual accuracy when answering questions about images, requiring correct visual interpretation combined with background knowledge. The updated Grounding Benchmark v2 assesses whether responses are properly grounded in provided contextual information.

Early results highlight both progress and remaining challenges. Among evaluated models, Gemini 3 Pro achieved the highest overall FACTS Score at 68.8%, with notable improvements over its predecessor in parametric and search-based factuality. However, no evaluated model exceeded 70% overall accuracy, with multimodal factuality emerging as a particularly difficult area across the board.

Source: Google DeepMind Blog

The structure of the benchmark has drawn attention from practitioners. Commenting on the release, Alexey Marinin, a senior iOS engineer, noted:

> This four-dimensional view (knowledge, web, grounding, multimodal) feels much closer to how people actually use these models day to day.

The FACTS team stated that the benchmark is intended to support ongoing research rather than serve as a final measure of model quality. By releasing the public portions of the datasets and standardizing evaluation, the project aims to provide a shared reference point for measuring factual reliability as language models continue to evolve.