Ryan Haines / Android Authority

I wouldn’t say I’m a photography purist (I shoot with a Fuji after all), but one thing that continues to draw me to using my mirrorless over all but the very best smartphone cameras is a more natural look to my pictures.

It’s not that smartphones are bad by any stretch of the imagination; some smartphone cameras are absolutely brilliant. But take a closer look, and even the best handsets leave telltale signs of overprocessing, whether it’s oversharpened skin textures from the Pixel’s portrait mode or overly dark shadows from Apple’s HDR algorithm.

You don’t have to take my word for it; the internet is plastered with complaints about overprocessing and artifacts found even in some of the best camera phones. And yet, brands seem determined to cram even more processing into mobile imaging in pursuit of modest boosts in image quality, rather than taking the plunge on better camera hardware. I’m looking at you, Samsung, and your years of identical camera specs.

Virtually everywhere you look, the latest smartphones promise to use AI (or perhaps we should call it machine learning?) to enhance the appearance of your pictures, from Google’s 100x Pro Res Zoom and the “AiMAGE” features embedded in the new HONOR Magic 8 Pro, such as outpainting and restyling, to the Photonic Engine in the latest iPhones. We can’t escape it, and yet the results are mixed at best and sometimes outright atrocious.

2025 was the year that AI photography really took off, but it was mixed at best.

As impressive as Google’s and OnePlus’s AI-infused long-range zoom capabilities seem at first use, we quickly tired of their uneven application. Texture details look mostly good, but it’s a no-go for distant human subjects, and these two have some of the better AI photography implementations on the market right now. At the other end of the spectrum, last year’s HONOR Magic 7 Pro looked far too heavy-handed in every scenario where it used AI. Thankfully, the new models seem to have dialed things back a bit.

In all the cases where we’ve been let down, these phones would have performed much better simply by having better hardware, rather than attempting to plaster over the cracks with AI. The OnePlus 15 is a prime example of how even minor hardware downgrades produce an obviously inferior experience. Although the OnePlus 15 relies more heavily on its DetailMax Engine, the higher-specced OnePlus 13 came out on top during our reviews.

By contrast, the Xiaomi 15 Ultra and OPPO Find X9 Pro are two of my favorite camera phones from last year. Although they also dabble in AI, both employ some of the best hardware in the business, and not just in their primary lens. If you look at Apple, Google, or Samsung, they’re still stuck in the tiny-periscope-camera paradigm, while these rivals have moved on to large-sensor, 200MP zooms with bright apertures. Honestly, I’d take a great 20x snap over an AI 100x picture nearly every time.

I’m even more concerned about budget models that use AI as a cheap band-aid for inferior camera hardware. You don’t have to look too far to find countless complaints about overly aggressive photo processing, which has only been made worse by the inclusion of heavy-handed AI features. Extras like AI Portrait Enhancements, outpainting, and scene enhancements should be just that: extras that can boost an already good-looking photo, rather than heavily processing image data that was fundamentally compromised to begin with.

When done right, AI can help affordable phones close the gap with more expensive alternatives. I’ll be the first to trumpet computational photography’s virtues at elevating budget phones like Google’s Pixel 9a to near flagship quality. However, even Google’s pictures tend to have a certain look; for better or worse, the algorithm-heavy approach has resulted in inferior color accuracy and more aggressive noise artifacts.

AI can be useful, but when applied aggressively the results often come out looking worse.

Software, even AI, can only do so much to enhance the appearance of camera hardware beyond its physical capabilities. The more you lean into the algorithm to patch over the cracks, the more frequent and obvious the added artifacts become. You can’t beat physics, and when it comes to taking brilliant pictures that feature real detail, you still need large pixels and high-quality optics to capture that light. AI cannot replace good hardware.

There are good uses for AI photography, but they’re more subtle

As down as I seem on the AI hype, it can still be an incredibly powerful tool for photography, but it just needs to be used in the right way. Instead of hallucinating details that aren’t there, you can lean into machine learning’s mathematical qualities to improve existing software enhancements. Modern “AI” is essentially a glorified statistics model, which makes it very good at performing classification and modeling tasks.

It’s already extensively used in mobile photography for object and scene detection, allowing cameras to optimize their settings for your environment. That’s a simple, effective solution that I’m completely behind, just so long as the results are used to optimize image quality rather than completely reinvent colors or lighting.

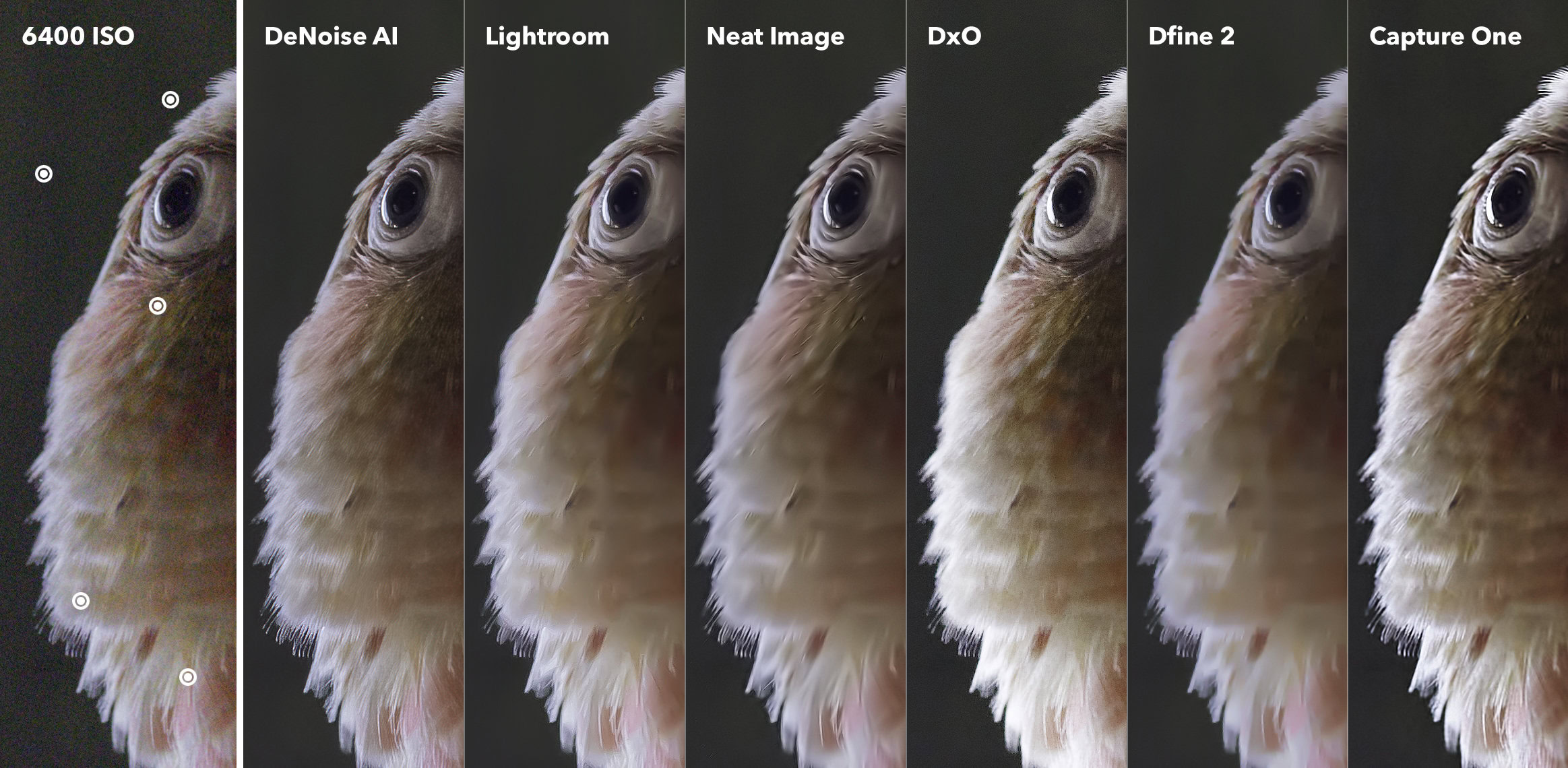

Statistical modeling makes AI highly effective at noise reduction compared to traditional algorithms, for both image quality and video audio applications. OEMs could train models on their camera hardware against larger-sensor SLRs and leverage the differences to address minor performance deficiencies, such as noise or color accuracy, without wholesale repainting your picture.
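To make the calibration idea concrete, here is a minimal sketch under loud assumptions. A plain least-squares line stands in for the trained model, and the patch readings are invented for illustration; nothing here reflects how any OEM actually tunes its cameras. It fits a gain and offset that map a phone's red-channel readings of a color chart onto the readings from a larger-sensor reference camera, then applies that gentle correction instead of repainting anything.

```python
def fit_gain_offset(phone_values, reference_values):
    """Least-squares fit of: reference = gain * phone + offset."""
    n = len(phone_values)
    mean_x = sum(phone_values) / n
    mean_y = sum(reference_values) / n
    cov = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(phone_values, reference_values))
    var = sum((x - mean_x) ** 2 for x in phone_values)
    gain = cov / var
    offset = mean_y - gain * mean_x
    return gain, offset


def apply_correction(value, gain, offset):
    """Apply the fitted correction and clamp to the 8-bit range."""
    return min(255.0, max(0.0, gain * value + offset))


# Hypothetical red-channel readings of the same color chart patches,
# shot with the phone and with a larger-sensor reference camera.
phone_red = [20.0, 60.0, 110.0, 160.0, 210.0]
reference_red = [26.0, 70.0, 125.0, 180.0, 235.0]

gain, offset = fit_gain_offset(phone_red, reference_red)
corrected = [apply_correction(v, gain, offset) for v in phone_red]
```

A real pipeline would learn a far richer mapping across all channels and lighting conditions, but the principle is the same: measure the difference against better hardware and correct only that difference.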

If you’re not convinced, check out this awesome comparison of traditional and AI denoise algorithms from Fstoppers, which is already five years old. How useful would this be for low-light photography, particularly on smaller zoom lenses?

The problem with all of these ideas is that they’re subtle by definition. Tweaking color and dynamic range just makes your pictures look cleaner, which doesn’t exactly make for a cheer-inducing presentation compared to wholesale swapping out parts of your snap for something else. It’s the same as branding fancy new multi-ISO or HDR-stacking hardware technologies; they’re great, but they don’t permeate consumer consciousness.

The good news is that the mobile industry has already begun experimenting with some of these ideas. Years ago, OPPO debuted its custom MariSilicon X chip to improve low-light snaps and video, only to shutter the idea, presumably because flagship SoCs quickly caught up. Meanwhile, Apple has been gradually improving its Deep Fusion detail enhancer to leverage its newer processors.

Likewise, Qualcomm has long used “AI” in its Snapdragon ISPs to assist with context-aware focus, exposure, and white balance. It also supports multi-frame noise reduction and 4K30 video noise reduction in its latest 8 Elite Gen 5 chip. Similarly, Google’s Tensor has been the key to boosting dynamic range for multi-frame HDR and Super Res Zoom for a long time.
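For a feel of why multi-frame noise reduction works, here is a toy simulation. It is an illustrative sketch only, not Qualcomm's or Google's pipeline: the scene, noise level, and frame count are made-up values, and the frames are assumed to be perfectly aligned, which real phones have to work hard to achieve. Averaging N frames with independent noise cuts the noise by roughly the square root of N.

```python
import random


def capture_frame(scene, noise_sigma, rng):
    """Simulate one noisy exposure: true pixel value plus Gaussian noise."""
    return [pixel + rng.gauss(0.0, noise_sigma) for pixel in scene]


def merge_frames(frames):
    """Average aligned frames pixel by pixel (the simplest possible merge)."""
    return [sum(values) / len(values) for values in zip(*frames)]


def rms_error(image, scene):
    """Root-mean-square difference between an image and the true scene."""
    total = sum((a - b) ** 2 for a, b in zip(image, scene))
    return (total / len(scene)) ** 0.5


rng = random.Random(42)   # fixed seed so the run is repeatable
scene = [100.0] * 5000    # hypothetical flat grey patch
frames = [capture_frame(scene, 10.0, rng) for _ in range(16)]

single_error = rms_error(frames[0], scene)
merged_error = rms_error(merge_frames(frames), scene)
print(f"single frame: {single_error:.2f}, 16 frames merged: {merged_error:.2f}")
```

With 16 frames, the merged error should land at around a quarter of the single-frame error. Real implementations add alignment, motion rejection, and weighting on top, which is where the dedicated ISP hardware earns its keep.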

The best uses for photographic AI are subtle, but they can be brilliant.

Some of the ideas I’m more in favor of are already here. However, you’ll need premium-tier smartphones to benefit from them, and there’s still plenty more that could be done in processing pictures in the RAW domain with some of the more cutting-edge ideas in computational photography.

If brands are serious about making meaningful rather than gimmicky improvements to smartphone camera capabilities, they’ll have to do more of this less glamorous work and less showboating. You can have both, of course, but smartphones still have plenty to improve regarding quick shutter, low-light shooting, and noise at long range. I’d rather they focus on the fundamentals first and then add AI for fine-tuning, rather than using it as a lazy, quick fix for bad hardware.
