Most Salesforce AI conversations start with models. That’s backward.

The work starts with deciding where AI can influence priority, routing, and outcomes. What follows determines whether anything holds up in production: signal quality, release discipline, and governance that applies to AI outputs with the same rigor as the underlying data.

Salesforce introduced Einstein GPT as generative AI for CRM, designed to deliver AI-created content across sales, service, marketing, commerce, and IT interactions, and positioned it as open, extensible, and trained on trusted, real-time data. That framing sets a clear expectation. AI should change workflows and remain controllable under enterprise conditions.

Einstein AI in Salesforce: A Workflow Lens

Einstein GPT is most useful when evaluated as a workflow, because workflow is where outcomes appear.

Decision moments define priority. A score matters when it changes what gets worked next. A risk signal matters when it changes escalation, queue order, or follow-up timing.

Execution moments are where Salesforce acts. Routing, assignments, and automation via Flow or Apex live here. Generative outputs, such as summaries or drafts, belong in this layer only when inputs are bounded and access rules are enforced.

Experience moments are where results become visible. Faster resolution, fewer handoffs, and more relevant guidance depend on decision and execution layers being connected.

If it is unclear what work changes next, adoption will be inconsistent, and measurement will drift toward vanity metrics.
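A small sketch makes the decision moment concrete. It assumes a hypothetical `risk_score` between 0 and 1 and an illustrative escalation threshold of 0.8; neither is a Salesforce API or a documented default. The point is only that the score earns its place by changing the order of work.

```python
from dataclasses import dataclass


@dataclass
class Case:
    case_id: str
    age_hours: float   # time since the case was opened
    risk_score: float  # hypothetical model output in [0, 1]


def work_order(cases, escalation_threshold=0.8):
    """Return case IDs in the order they should be worked.

    Cases at or above the threshold jump the queue; within each
    group, older cases come first.
    """
    ranked = sorted(
        cases,
        key=lambda c: (c.risk_score < escalation_threshold, -c.age_hours),
    )
    return [c.case_id for c in ranked]


cases = [
    Case("A", age_hours=30, risk_score=0.2),
    Case("B", age_hours=2, risk_score=0.9),
    Case("C", age_hours=10, risk_score=0.5),
]

# Without the score, the queue is simply oldest-first.
baseline = [c.case_id for c in sorted(cases, key=lambda c: -c.age_hours)]
with_score = work_order(cases)
```

If `baseline` and `with_score` come out identical for real traffic, the score is not influencing priority, and that is worth knowing before measuring anything else.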

Predictive Analytics and Personalization: Designing the Decision Layer

At enterprise scale, personalization is decisioning, not content selection.

Salesforce’s Personalization data model describes runtime objects that capture personalization requests, eligibility, placement, and delivery. That framing matters because it points to a system that operates continuously, not a static configuration.

A practical structure keeps this manageable: context, intent, next best experience.

Context captures what is true now. Channel, lifecycle stage, open cases, service tier, renewal windows, and consent constraints typically matter more than long attribute lists.

Intent reflects the likely goal, such as resolving an issue, renewing, upgrading, or comparing options.

Next best experience is the action tied to an outcome, such as routing to the correct queue, recommending knowledge, creating a renewal task, or surfacing guided scripting.
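The context, intent, next best experience structure can be sketched as three small steps. Everything here is an assumption made for illustration: the field names, the 60-day renewal window, and the action labels are hypothetical and do not come from the Salesforce Personalization data model.

```python
from dataclasses import dataclass


@dataclass
class Context:
    channel: str
    open_cases: int
    days_to_renewal: int
    has_marketing_consent: bool


def infer_intent(ctx):
    """Guess the likely goal from what is true right now."""
    if ctx.open_cases > 0:
        return "resolve_issue"
    if ctx.days_to_renewal <= 60:  # illustrative renewal window
        return "renew"
    return "explore"


def next_best_experience(ctx):
    """Map intent to an action tied to an outcome."""
    intent = infer_intent(ctx)
    if intent == "resolve_issue":
        return "route_to_support_queue"
    if intent == "renew":
        return "create_renewal_task"
    # Consent is a hard constraint: promotional actions need it.
    if ctx.has_marketing_consent:
        return "surface_upgrade_offer"
    return "recommend_knowledge"


service_visit = Context("web", open_cases=2, days_to_renewal=200, has_marketing_consent=True)
renewal_visit = Context("email", open_cases=0, days_to_renewal=30, has_marketing_consent=True)
browsing_visit = Context("web", open_cases=0, days_to_renewal=200, has_marketing_consent=False)
```

Note that the open case wins over everything else. A customer with an unresolved issue is routed to support even when a renewal or upgrade offer would otherwise apply.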

For this to work, decision outputs must move. Salesforce describes Data Cloud activation as presenting data in an actionable format, with both streaming and batch support. Without reliable activation, decision logic exists but workflows stay unchanged.

A useful check remains simple: what changes tomorrow if the prediction is correct?

Architecture and Scalability: Pressure Points Surface Early

AI increases demand for fresh, consistent signals. That pressure exposes architectural weaknesses quickly, especially fragmented identity and brittle integrations.

Multi-Org Architecture

Multi-org architecture fits when isolation requirements are persistent, such as regulatory separation, distinct governance, or independent release cadence. The cost is increased integration complexity and operational overhead.

Long-term complexity is driven less by org count and more by a few decisions: where the golden customer record lives, how identity is resolved across org boundaries, where analytics run, and how releases stay coordinated when dependencies exist.

AI raises the stakes because identity, consent, and data freshness become baseline requirements for prediction and personalization.

Event-Driven Integration for Signals

When AI depends on signals, delivery mechanics matter.

Salesforce describes Platform Events as secure, scalable messages for exchanging real-time event data between Salesforce and external systems. Change Data Capture publishes near-real-time change events for record creation, updates, and deletions to support synchronization.

Because subscribers consume events asynchronously rather than through direct calls, these primitives support safer retries, recovery after failures, and protection against spikes. They also reduce hidden coupling by separating “what changed” from “who requested it,” which simplifies downstream decisioning.
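Safer retries depend on the consumer as much as on the event bus. The sketch below shows one way a downstream subscriber might stay idempotent. The event shape is a simplified, hypothetical dictionary, not the actual Platform Events or Change Data Capture payload, and it assumes events arrive in order with increasing replay positions.

```python
class SignalConsumer:
    """Applies each change event at most once and remembers where to resume."""

    def __init__(self):
        self.last_replay_id = -1  # checkpoint to resume from after a failure
        self.state = {}           # record_id -> latest known field values

    def handle(self, event):
        """Apply one event; return False if it was already processed."""
        # Anything at or before the checkpoint is a retry or a replay.
        if event["replay_id"] <= self.last_replay_id:
            return False
        if event["change_type"] == "DELETE":
            self.state.pop(event["record_id"], None)
        else:  # CREATE or UPDATE
            self.state.setdefault(event["record_id"], {}).update(event["fields"])
        self.last_replay_id = event["replay_id"]
        return True


consumer = SignalConsumer()
events = [
    {"replay_id": 1, "change_type": "CREATE", "record_id": "001A", "fields": {"tier": "gold"}},
    {"replay_id": 2, "change_type": "UPDATE", "record_id": "001A", "fields": {"open_cases": 2}},
    # The same event delivered again, for example after a reconnect.
    {"replay_id": 2, "change_type": "UPDATE", "record_id": "001A", "fields": {"open_cases": 2}},
]
applied = [consumer.handle(e) for e in events]
```

In a real subscriber the checkpoint would be persisted alongside the state, so that a restart resumes from the last applied event instead of from the beginning.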

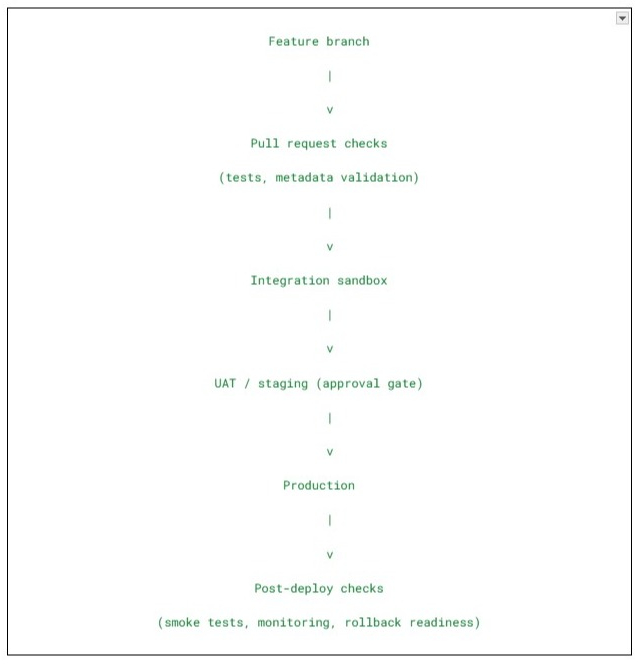

DevOps and CI/CD: Keeping Change Predictable

As AI becomes embedded, releases carry more risk because more workflows depend on automation and data. Delivery discipline turns risk into routine.

Salesforce Help documentation for DevOps Center states that each pipeline stage has an associated branch in the source control repository.

This is where many programs either reduce risk over time or accumulate it. If the pipeline cannot reproduce a release from source control and promote it through environments with traceability, AI features will magnify that weakness.
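The stage-to-branch idea can be modeled in a few lines. This is a sketch of the discipline, not of DevOps Center itself: the stage names, branch names, and the `promote` function are hypothetical, and the real product manages promotion through its own interface.

```python
from dataclasses import dataclass, field

# Hypothetical pipeline: each stage maps to exactly one branch.
STAGES = ["integration", "uat", "production"]
BRANCH_FOR_STAGE = {"integration": "integration", "uat": "uat", "production": "main"}


@dataclass
class Release:
    commit: str                                   # the source-control commit being promoted
    history: list = field(default_factory=list)   # (stage, branch, commit) audit trail


def promote(release, target_stage):
    """Promote a release to the next stage only, recording the step."""
    done = len(release.history)
    expected = STAGES[done] if done < len(STAGES) else None
    if target_stage != expected:
        raise ValueError(f"cannot promote to {target_stage!r}; next stage is {expected!r}")
    entry = (target_stage, BRANCH_FOR_STAGE[target_stage], release.commit)
    release.history.append(entry)
    return entry


release = Release(commit="abc123")
promote(release, "integration")
promote(release, "uat")
```

Two properties matter here: a release cannot skip a stage, and the history ties every promotion to a single commit, which is what makes the release reproducible from source control.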

Industry Constraints: Healthcare and Government

Some industries multiply requirements rather than add them.

In healthcare, Salesforce maintains a HIPAA compliance category and references current BAA restrictions and HIPAA-covered services. That scope matters because compliance is shaped by service boundaries and technical controls together. Strong access control, encryption strategy, auditability, and retention discipline become core design concerns. With generative workflows in play, outputs must respect access rules the same way field-level security does.

Government environments place similar pressure on identity, policy-driven access, and traceability. Logs and audit history are part of the system’s value, not an afterthought.

Emerging Tech: Clear Boundaries First

Emerging technologies fit Salesforce architectures when boundaries are explicit.

Blockchain is most defensible as a verification layer. Sensitive data stays off-chain, hashes or proofs are stored, Salesforce handles workflow and user experience, and the chain provides verification.
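The verification pattern is simple enough to sketch. Here a plain dictionary stands in for the chain, which is an assumption made to keep the example self-contained; the record fields and function names are hypothetical too. Only the SHA-256 fingerprint is stored, never the record itself.

```python
import hashlib
import json


def fingerprint(record):
    """Hash a canonical JSON form so that key order does not change the result."""
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


ledger = {}  # stand-in for the chain: record_id -> stored hash


def anchor(record_id, record):
    """Store only the fingerprint; the sensitive data stays off-chain."""
    ledger[record_id] = fingerprint(record)


def verify(record_id, record):
    """Check that the current record still matches what was anchored."""
    return ledger.get(record_id) == fingerprint(record)


contract = {"account": "Acme", "amount": 12000, "signed": "2024-01-15"}
anchor("contract-1", contract)

unchanged = verify("contract-1", dict(contract))
tampered = verify("contract-1", {**contract, "amount": 15000})
```

The canonical serialization step matters more than it looks. Without it, two systems holding the same record could produce different hashes and fail verification for no real reason.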

IoT works best as signals rather than raw telemetry. Telemetry is aggregated and analyzed upstream, converted into actionable indicators, then pushed into Salesforce so service workflows can act.

These constraints keep the CRM focused on orchestration rather than high-volume processing.
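For IoT, the upstream step might look like the following sketch. The temperature limit, the breach count, and the indicator payload are all illustrative assumptions; the idea is that a window of readings collapses into at most one indicator a service workflow can act on.

```python
from statistics import mean


def to_indicator(device_id, readings, limit_c=80.0, min_breaches=3):
    """Reduce a window of temperature readings to an indicator, or None.

    Returns None when the window is unremarkable, so nothing is
    pushed into the CRM.
    """
    breaches = sum(1 for r in readings if r > limit_c)
    if breaches < min_breaches:
        return None
    return {
        "device_id": device_id,
        "indicator": "overheating",
        "breaches": breaches,
        "avg_temp_c": round(mean(readings), 1),
    }


hot_window = [70.0, 85.0, 90.0, 88.0, 75.0]
calm_window = [70.0, 72.0, 81.0, 74.0, 73.0]

alert = to_indicator("pump-7", hot_window)
quiet = to_indicator("pump-7", calm_window)
```

Five readings become one record or none at all, which is the ratio that keeps high-volume telemetry out of the CRM.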

Security and Compliance: AI Inherits the Rules

Security defines the boundary around everything above.

Salesforce describes Salesforce Shield as including Platform Encryption, Event Monitoring, and Field Audit Trail, mapping directly to enterprise needs for encryption, visibility, and audit history. Privacy obligations reinforce the same design discipline. Salesforce’s GDPR guidance outlines expanded rights for individuals and obligations for organizations handling personal data.

In practice, this means data discovery, consent management, retention automation, access controls, and auditability. AI increases the importance of these controls because outputs can surface information in new contexts.

A non-negotiable guardrail applies. If a user cannot access a field, an AI summary should not surface it.
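One way to picture that guardrail is to filter the record before any text reaches a model. The sketch below uses a hypothetical set of readable field names per user. In a real org the set would come from the platform's own access checks, not from a hand-maintained list.

```python
def visible_fields(record, readable_fields):
    """Keep only the fields this user is allowed to read."""
    return {k: v for k, v in record.items() if k in readable_fields}


def build_summary_input(record, readable_fields):
    """Build the text handed to a summarizer from permitted fields only."""
    allowed = visible_fields(record, readable_fields)
    return "\n".join(f"{name}: {value}" for name, value in sorted(allowed.items()))


case_record = {
    "Subject": "Billing dispute",
    "Status": "Escalated",
    "Diagnosis__c": "restricted clinical note",  # hypothetical restricted field
}

# Hypothetical permission set for a support agent.
agent_can_read = {"Subject", "Status"}

prompt_text = build_summary_input(case_record, agent_can_read)
```

Filtering before generation, rather than redacting afterward, means the restricted value is never available to paraphrase.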

Conclusion: Making AI in Salesforce Operable

AI in Salesforce works when it is treated as an operable system. Models matter, but outcomes depend on signal delivery, workflow wiring, release control, and security boundaries.

A final check keeps teams grounded. Can the workflow impact be explained clearly? Can inputs be traced and recovered during failures? Can changes be reproduced and promoted with confidence? Can outputs stay within access rules and privacy obligations?

One question ties it together. Could this run during an audit, during a peak season, and during a live incident without improvisation?

If the answer is yes, the foundation is strong enough to keep adding AI without compounding risk.

:::info

This story was published under HackerNoon’s Business Blogging Program.

:::