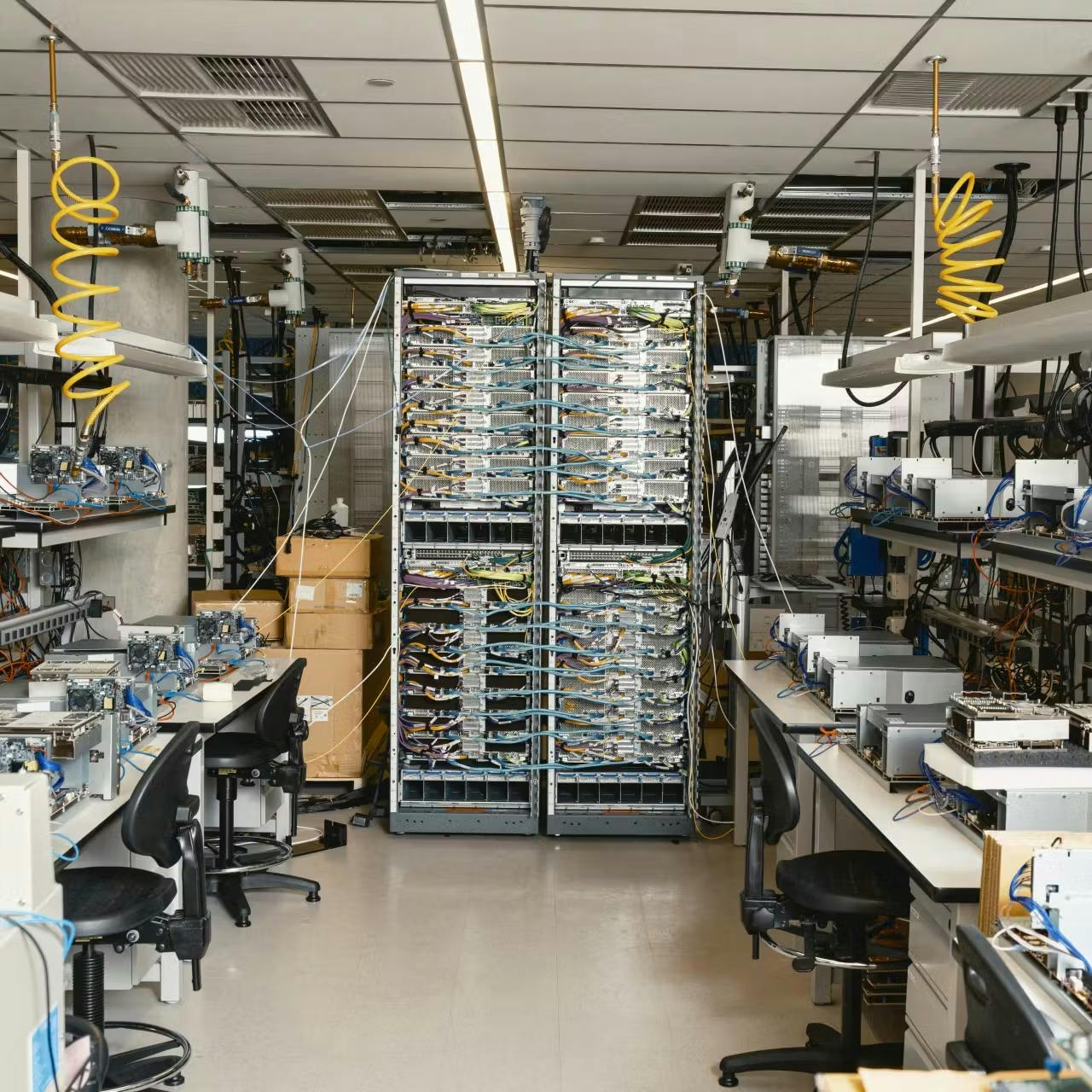

In my previous analysis of Power Stranding, I explored the multi-billion-dollar art of ensuring that massive power infrastructure doesn’t sit idle. But for a data center financial analyst, solving the power equation isn’t just about building better substations. It’s about what sits inside the racks.

Looking at a modern data center’s balance sheet in early 2026, the “Hardware” line item is undergoing a silent coup. For decades, the industry bought off the shelf: cloud providers sourced their GPUs from Nvidia and their CPUs from Intel or AMD, and focused their own engineering on the building around them.

That era is over. We have entered the age of the Vertical Data Center. Hyperscalers – AWS with Trainium 3, Google with TPU v7 (Ironwood), and Microsoft with Maia – are essentially becoming semiconductor houses that happen to own their own real estate.

The Financial Arbitrage of Custom Silicon

When I model a new data center build-out, I consider CAPEX, OPEX, and TCO. In-house chips are a “cheat code” for all three:

- Margin Reclamation: When a provider buys an Nvidia Blackwell system, they aren’t just paying for silicon; they are paying for margins that Nvidia itself has disclosed as exceeding 70% in recent earnings reports. By designing chips in-house, hyperscalers effectively “internalize” that margin. This keeps billions of dollars in CAPEX on the provider’s balance sheet rather than exporting it as profit to a third party.

- Performance per Watt: Power remains the scarcest resource in the cloud. General-purpose chips are designed for a broad market. In-house chips are specialists. Early 2026 disclosures and vendor-published benchmarks suggest that Google’s Ironwood TPUs and AWS’s Trainium 3 (built on 3nm nodes) are delivering up to 2x the performance-per-watt of previous generations. This is the ultimate hedge against power stranding: making every watt work twice as hard.

- The Supply Chain Buffer: While everyone relies on TSMC, in-house design provides a critical buffer. By dealing directly with foundries, hyperscalers bypass the “allocation logic” of external vendors. They aren’t competing with the rest of the world for a finished card; they are securing raw wafer capacity calibrated strictly to their own capacity forecasts.
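To make the first two levers concrete, here is a back-of-the-envelope sketch in Python. Every input is a hypothetical placeholder: the $1M purchase, the 100 MW power budget, and the normalized performance-per-watt values are illustrative, and only the 70% margin and the "up to 2x" ratio come from the figures cited above. The function names are mine, not part of any real model. The margin figure is also an upper bound, since it ignores the design, tape-out, and software costs a hyperscaler takes on when it builds its own chips.

```python
def vendor_margin_dollars(purchase_price: float, gross_margin: float) -> float:
    """Portion of a purchase price that is the vendor's gross profit.

    This is the ceiling on what in-house design could 'internalize';
    it ignores the buyer's own R&D and engineering costs.
    """
    if not 0.0 <= gross_margin <= 1.0:
        raise ValueError("gross_margin must be a fraction between 0 and 1")
    return purchase_price * gross_margin


def throughput_at_power_cap(power_budget_watts: float, perf_per_watt: float) -> float:
    """Total compute a site can deliver when power is the binding constraint."""
    return power_budget_watts * perf_per_watt


if __name__ == "__main__":
    # Hypothetical $1M accelerator purchase at the ~70% gross margin cited above.
    price = 1_000_000.0
    margin = vendor_margin_dollars(price, 0.70)
    print(f"Vendor gross profit in the purchase: ${margin:,.0f}")
    print(f"Implied cost of goods:               ${price - margin:,.0f}")

    # Hypothetical 100 MW site; performance-per-watt normalized so the
    # previous generation is 1.0 and the new generation is 2.0 ("up to 2x").
    site_watts = 100e6
    old = throughput_at_power_cap(site_watts, 1.0)
    new = throughput_at_power_cap(site_watts, 2.0)
    print(f"Throughput gain at the same power cap: {new / old:.1f}x")
```

The second function is the power-stranding point in one line: when the power budget is fixed, any gain in performance-per-watt flows straight through to deliverable compute.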

The Storytelling Gap: Google vs. AWS

There is a fascinating divergence in how these programs are being pitched to the Street, and the market’s report card is in.

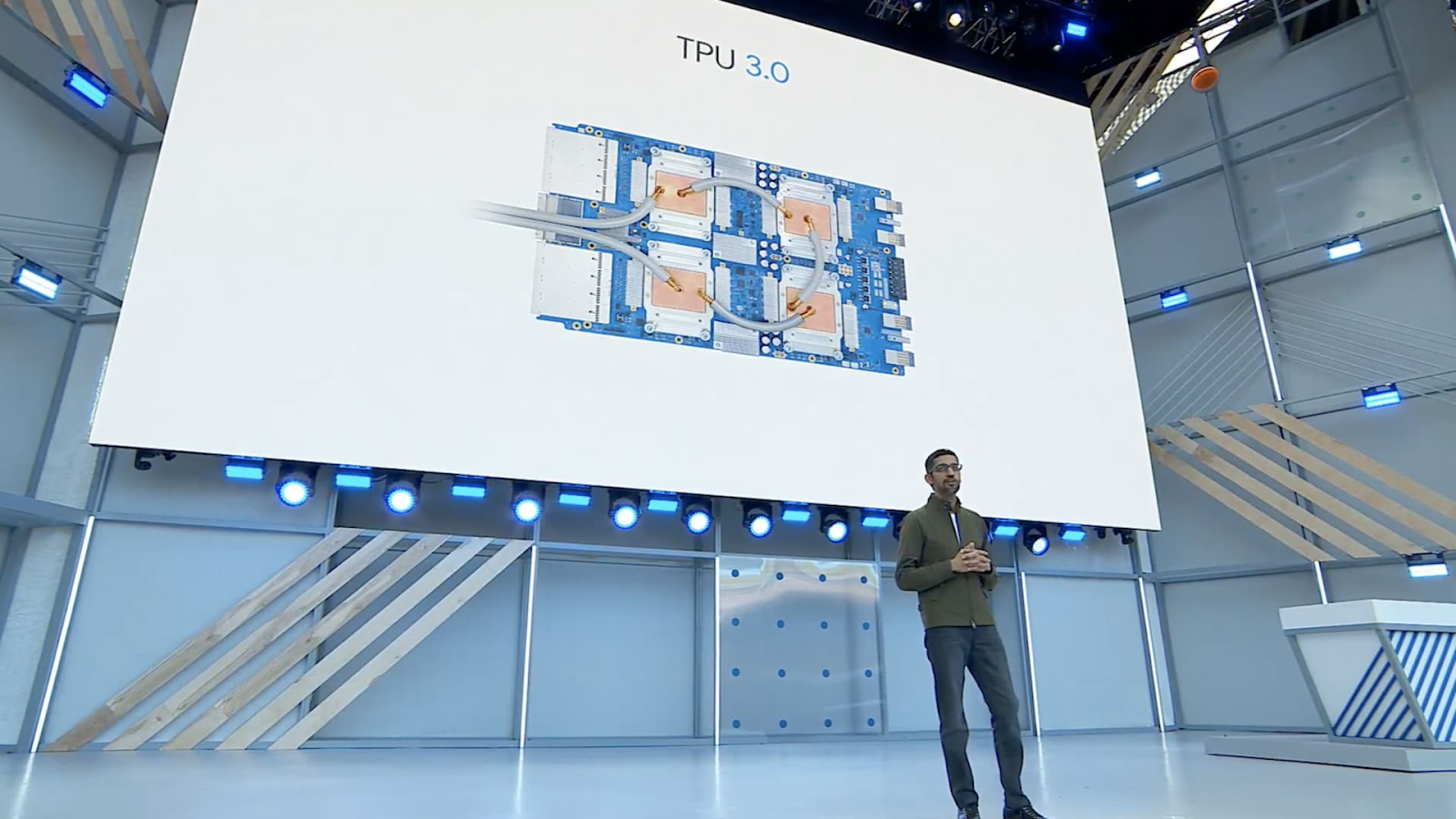

Google has mastered “The Big Reveal.” They have branded the TPU as the secret sauce behind Gemini. When Google speaks to investors, the TPU isn’t just a component; it’s a proprietary moat. They have successfully framed it as an Integrated Stack – where the silicon, the software, and the models are one single machine.

This narrative is working. Over the trailing twelve months, Alphabet (Google) has seen its stock skyrocket roughly 60%, which many investors have interpreted as evidence of successful vertical integration. Google recently confirmed that over 75% of Gemini computations are now handled by its internal TPU fleet.

AWS, by contrast, has traditionally operated as “The Infrastructure Utility.” They have treated Trainium as an option for customers – one more tool in a toolbox alongside Nvidia. While this strategy is customer-centric, it lacks the “hero” narrative. Over the same period, Amazon’s stock gains have been more modest (around 5-10%) as the market waits for AWS to prove it can maintain its lead without being taxed by the high cost of third-party silicon.

Scale vs. Integration: The “Project Rainier” Factor

Don’t let the storytelling gap fool you. While Google is more vertically integrated today, Amazon is scaling at a pace that is hard to wrap a spreadsheet around. Project Rainier, Amazon’s internally referenced effort to deploy Trainium at hyperscale (more than 1 million chips by the end of 2026), represents a transition from strategic planning to massive capacity realization.

Amazon isn’t trying to build a “walled garden.” They are building a two-lane highway: one lane for the industry-standard Nvidia stacks, and a second, high-efficiency lane for their own silicon.

The Bottom Line

In the world of data center finance, the most beautiful spreadsheet is the one where the lines don’t just meet – they reinforce each other. Custom silicon is the ultimate reinforcement. It turns the data center from a passive shell into an active participant in the compute cycle.

The race isn’t just about who can build the biggest data center anymore. It’s about who can extract the most value out of every single electron. Google is currently winning the PR battle for that electron, but AWS has the scale to win the war – if it starts talking about its chips as the brain of the data center, not just another component in the rack.

For developers and startups, this shift matters because the economics of compute are diverging. The same workload may soon have radically different cost and performance profiles depending on whether it runs on vertically integrated infrastructure or commodity accelerators.

The Silicon Moat: Why the World’s Largest Clouds are Becoming Chipmakers
