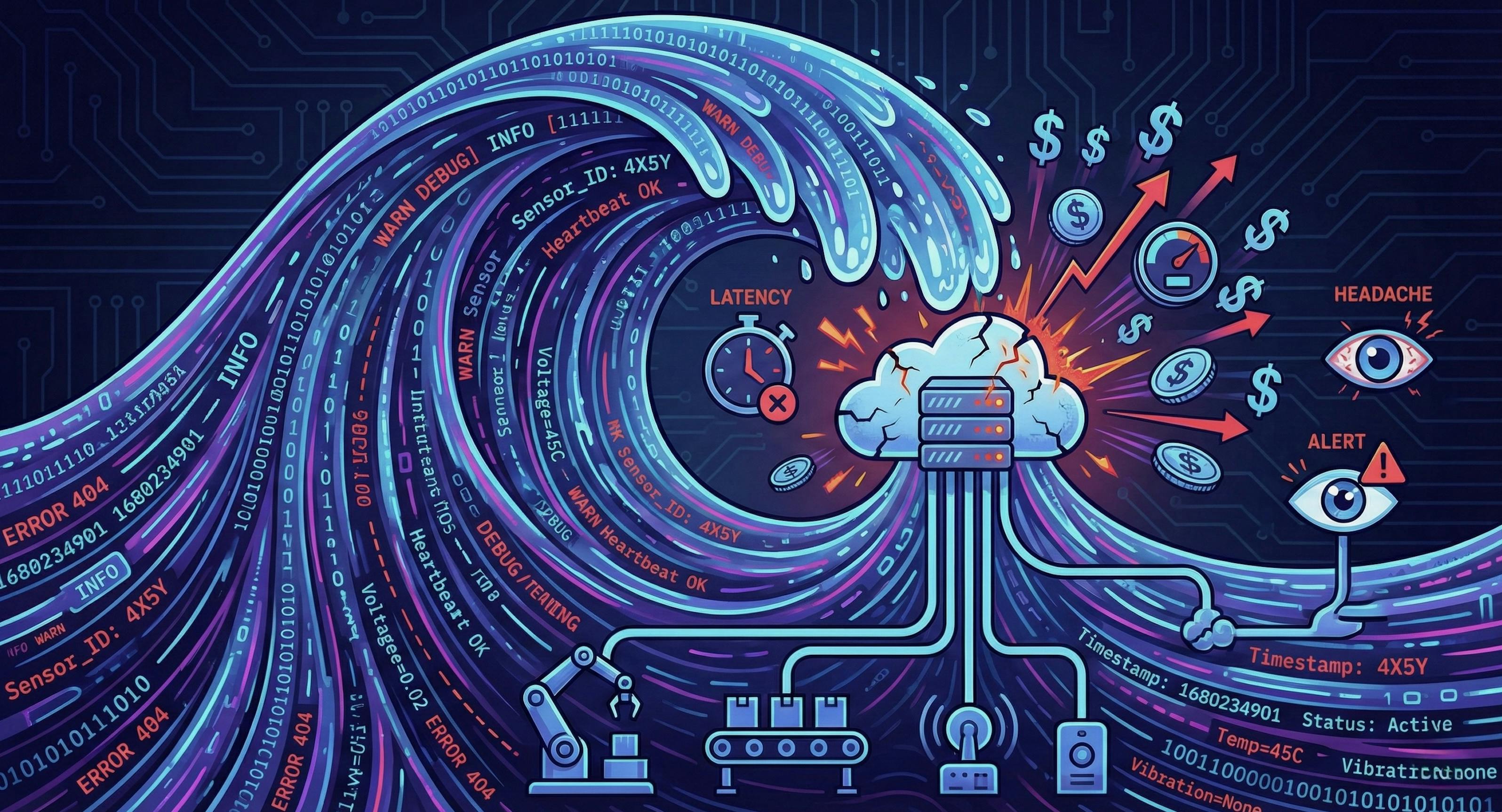

Do you remember the “Enterprise Cloud Rush” around 2015? We treated the hyperscaler cloud like an infinite dumpster for our data. Every sensor in the hospital, every vibration reading from a five-axis CNC machine, every transaction log in the bank. We piped it all to AWS S3 buckets or Azure Blob Storage. We proudly called it “Observability.” We congratulated ourselves on building “Data Lakes.”

In reality, we were building data swamps. Expensive, bandwidth-hogging, latency-ridden swamps.

I’ve spent over 22 years architecting systems for Industry 4.0 environments for companies like Apple, PayPal, and GE Digital, and if there is one hard lesson I’ve learned, it is this: The “Store Everything, Analyze Later” model is dead. That model might have worked in 2015, but it died somewhere around 2024 when AI finally stabilized enough to be trusted at the edge. Now, in 2026, continuing to dump raw data into the cloud isn’t just inefficient. It is a total waste of time, effort, and money.

Here is why the industry has to move toward the AI Edge Proxy, and why your factory (or hospital) needs to stop sending every single log to the cloud.

The “Nanosecond” Problem

To understand why the old model failed, you have to grasp the scale of modern industrial telemetry. We aren’t talking about an Apache web server logging a few hits per second.

Imagine a modern automotive manufacturing plant. Take a single robotic welding arm. It doesn’t just log “Weld Complete.” Every single millisecond, its servo motors are reporting torque, current draw, joint angle, and temperature. The welding tip is reporting voltage, wire feed speed, and gas flow rates.

Now, multiply that by 500 robots on a single production line.
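To put a rough number on that multiplication, here is a back-of-envelope sketch. The robot count and the per-millisecond reporting rate come from the text above; the six axes per arm and the 8 bytes per reading are my own illustrative assumptions, not measurements from a real plant.

```python
# Back-of-envelope telemetry volume for one production line.
# Only ROBOTS and SAMPLE_RATE_HZ come from the article; the rest are assumptions.

ROBOTS = 500              # robots on a single production line
SAMPLE_RATE_HZ = 1_000    # one report per millisecond
AXES_PER_ROBOT = 6        # assumption: six servo axes per welding arm
SERVO_CHANNELS = 4        # torque, current draw, joint angle, temperature
WELD_CHANNELS = 3         # voltage, wire feed speed, gas flow rate
BYTES_PER_READING = 8     # assumption: one 64-bit float, no protocol overhead
SECONDS_PER_DAY = 86_400


def daily_bytes() -> int:
    """Raw bytes generated per day by the whole line under the assumptions above."""
    channels_per_robot = AXES_PER_ROBOT * SERVO_CHANNELS + WELD_CHANNELS
    readings_per_second = ROBOTS * channels_per_robot * SAMPLE_RATE_HZ
    return readings_per_second * BYTES_PER_READING * SECONDS_PER_DAY


if __name__ == "__main__":
    print(f"{daily_bytes() / 1e12:.1f} TB per day")  # prints "9.3 TB per day"
```

Even with no timestamps, tags, or protocol framing counted, a single line lands in the terabytes-per-day range under these assumptions.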

In the “Pre-2024 Era” architecture, the pipeline was brutally simple and brutally inefficient:

- Source: Devices generate terabytes of raw, high-frequency logs daily.

- Transport: We clog the factory WAN sending 100% of this data to the cloud.

- Storage: We pay to store petabytes of data, 99.9% of which says “System operating normally.”

- Analysis (Too Late): A cloud-based scheduled job wakes up 4 hours later to scan for anomalies.

By the time it finds a bearing failure, the production line has already been down for three hours.

As I discuss in Chapter 1 (The New Industrial Revolution) of my upcoming book, The Trusted Machine, this is the Latency Challenge. Cloud-only AI fails in manufacturing because when a high-speed milling machine drifts off-axis, you don’t have seconds to round-trip data to a data center in Northern Virginia to ask what to do. You have milliseconds.

Furthermore, the financial cost became unsustainable. Organizations realized they were spending fortunes transferring data that no human or machine would ever look at again.

- The Reality Check: According to research cited by the CNCF in 2025, nearly 70% of collected observability data is unnecessary, leading to inflated storage bills without adding value.

- The Cost of Delay: In Manufacturing, Finance, or critical Healthcare, waiting for cloud latency isn’t just annoying. It’s financial suicide.

The Solution: “Bouncer” (AI Edge Proxy) | Moving Intelligence “Upstream”

We needed a paradigm shift. We needed intelligence that lived in the pipe, right next to the machine, not at the end of a long network connection.

We stopped treating the edge gateways as mere routers and started treating them as compute platforms. We began deploying local, lightweight Neural Networks right at the edge, running on hardware like NVIDIA Jetson modules or robust industrial PCs integrated into the OT (Operational Technology) network.

I call this the AI Edge Proxy.

Think of the AI Edge Proxy as a highly trained bouncer at an exclusive nightclub. In the old days, we let everyone (every log line) inside and hoped the security guards inside (Cloud AI) would sort the troublemakers out later. The AI Edge Proxy is the bouncer at the door, checking IDs and behavior before anyone gets in.

How It Works:

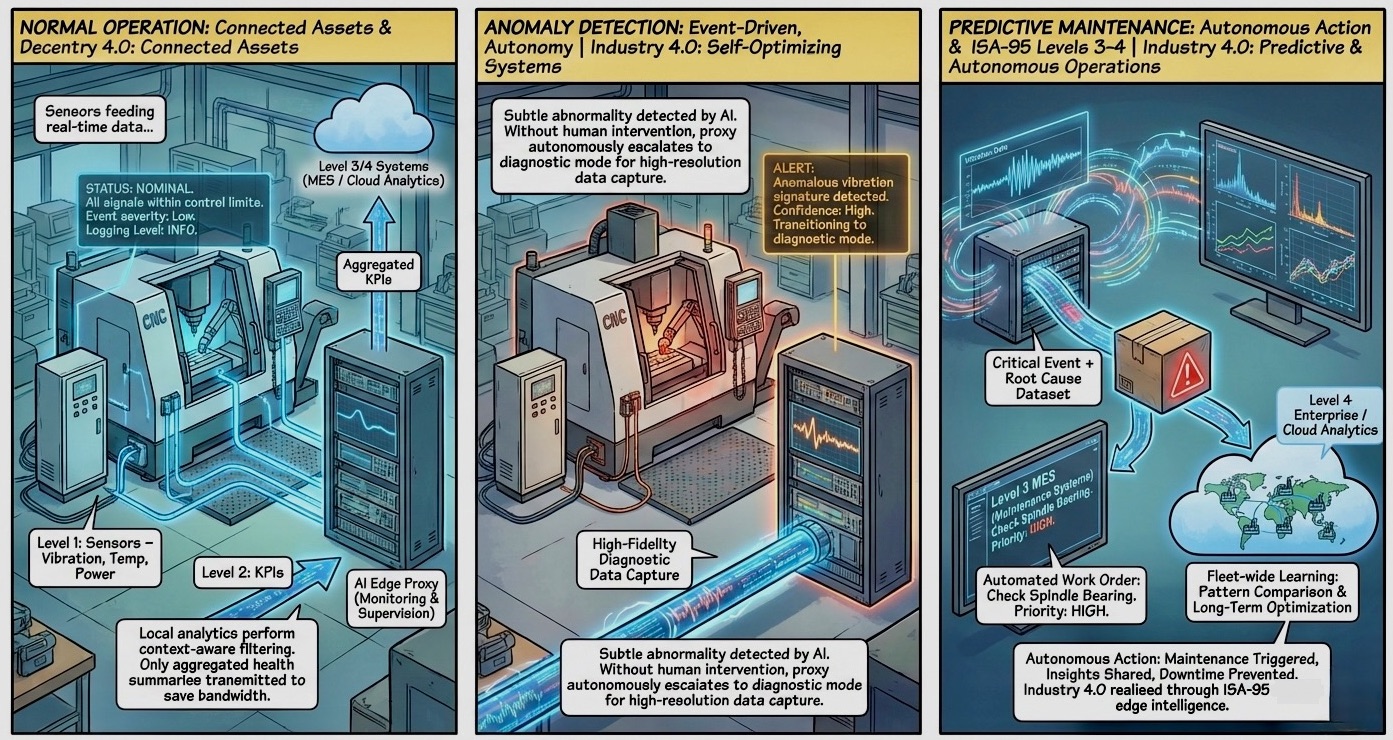

The Edge Proxy sits in the log pipeline, ingesting the firehose of raw telemetry via protocols like MQTT or OPC-UA.

1. It Tastes the Data: The Edge Proxy ingests the firehose of raw telemetry locally. The onboard neural net is trained on the standard, “healthy” operating baseline of that specific facility’s equipment. It knows what a normal Tuesday morning sounds like vibration-wise.

2. It Filters the Noise: 99.9% of logs are “System Normal.” The Proxy condenses these into a simple summary, such as “Machine A: All parameters nominal at 10:00 AM”, sends that tiny packet to the cloud for compliance dashboards, and discards the gigabytes of raw logs instantly.

3. It Elevates the Signal: It only lets the “VIPs” (the anomalies) through.
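The three steps above can be sketched in a few lines. To keep it self-contained, this sketch swaps the onboard neural net for a plain mean-and-standard-deviation baseline; the names `Baseline` and `filter_window`, the payload fields, and the 4-sigma limit are all hypothetical choices for illustration, not part of any real product.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Baseline:
    """Mean and spread of a 'healthy' signal, learned ahead of time (step 1)."""
    mu: float
    sigma: float

    @classmethod
    def fit(cls, healthy_samples: list[float]) -> "Baseline":
        # Stand-in for training: needs at least two healthy samples.
        return cls(mean(healthy_samples), stdev(healthy_samples))

    def is_anomalous(self, value: float, z_limit: float = 4.0) -> bool:
        # Assumption: anything beyond z_limit standard deviations is suspicious.
        return abs(value - self.mu) > z_limit * self.sigma


def filter_window(machine: str, window: list[float], baseline: Baseline) -> dict:
    """Return the only payload that leaves the site for this window of readings."""
    anomalies = [v for v in window if baseline.is_anomalous(v)]
    if not anomalies:
        # Step 2: raw readings are dropped; a tiny summary goes upstream.
        return {"machine": machine, "status": "nominal", "samples": len(window)}
    # Step 3: only the outliers (the "VIPs") are forwarded.
    return {"machine": machine, "status": "anomaly", "readings": anomalies}


if __name__ == "__main__":
    healthy = Baseline.fit([1.0, 1.1, 0.9, 1.0, 1.05, 0.95])
    print(filter_window("Machine A", [1.0, 1.02, 0.98], healthy))
    print(filter_window("Machine A", [1.0, 2.0], healthy))
```

In a real deployment the readings would arrive over MQTT or OPC-UA and the baseline would be a trained model; the shape of the decision (summarize the normal, forward the abnormal) stays the same.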

In Finance & Manufacturing, companies are currently hoarding petabytes of “Success” logs. Storing every single “200 OK” debug message is killing cloud storage budgets. By using the Bouncer model to filter this locally, you can reduce cloud costs by 40–60%.

The Killer Feature: Dynamic “Debug” Injection

If the only thing the Edge Proxy did was filter noise, it would be worth the investment. But the real breakthrough is its active interaction.

In a traditional setup, debugging an intermittent issue is a nightmare scenario. An incident occurs at 3 AM. An engineer wakes up, SSHs into the machine, changes the logging level from standard INFO to verbose DEBUG, and then… waits. They hope the error happens again while the disk fills up with massive debug logs. It’s reactive, slow, and rarely captures the root cause the first time. Our AI Edge Proxy automates this entirely.

A “Trusted Machine” does this automatically.

Let’s go back to that CNC machine. The local Neural Net on the Edge Proxy is monitoring vibration sensor logs.

- The Suspicion (T-Minus 60 Seconds): The AI detects a subtle shift in harmonic resonance. It’s not an error yet; it hasn’t crossed a hard threshold. But it’s a 0.5% deviation from the trained baseline. It “suspects” something is wrong.

- The Action (T-Minus 59 Seconds): The Proxy immediately acts as an orchestration agent. It sends a command to the local machine controller: “Elevate logging level to TRACE/DEBUG immediately for the next 15 minutes.”

- The Capture (The Incident): About a minute later, the bearing seizes momentarily. Because the system was already in DEBUG mode, it captures high-fidelity, millisecond-by-millisecond granular data leading up to and during the failure.

- The Revert (T-Plus 15 Minutes): Once the timer expires or the anomaly signature clears, the Proxy automatically drops the system back to standard INFO logging to save local disk space.
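Here is a minimal sketch of that suspect, elevate, revert loop. It uses Python’s standard `logging` module as a stand-in for the machine controller’s log level, which is an assumption on my part; a real proxy would issue a command to the controller instead. The class name `DebugInjector` and its parameters are hypothetical. The 0.5% threshold and the 15-minute hold come from the timeline above. Note that this version is deliberately conservative: it reverts only once the timer has expired and the signal is back under the threshold.

```python
import logging
import time
from typing import Callable, Optional


class DebugInjector:
    """Raises a logger to DEBUG on suspicion and reverts after a hold period."""

    def __init__(self, logger: logging.Logger, hold_seconds: float = 15 * 60,
                 clock: Callable[[], float] = time.monotonic):
        self.logger = logger
        self.hold_seconds = hold_seconds
        self.clock = clock  # injectable so the timer can be exercised in tests
        self.elevated_until: Optional[float] = None

    def observe(self, deviation: float, threshold: float = 0.005) -> None:
        """Feed one deviation-from-baseline reading (0.005 means 0.5%)."""
        now = self.clock()
        if abs(deviation) >= threshold:
            # Suspicion: switch to verbose logging, or extend the hold window.
            self.logger.setLevel(logging.DEBUG)
            self.elevated_until = now + self.hold_seconds
        elif self.elevated_until is not None and now >= self.elevated_until:
            # Revert: the hold expired and the signal is back within baseline.
            self.logger.setLevel(logging.INFO)
            self.elevated_until = None


if __name__ == "__main__":
    log = logging.getLogger("cnc-spindle")  # hypothetical logger name
    log.setLevel(logging.INFO)
    injector = DebugInjector(log)
    injector.observe(0.006)  # 0.6% deviation triggers DEBUG
    print(logging.getLevelName(log.level))
```

Re-arming the timer on every suspicious reading means a machine that keeps drifting stays in DEBUG, while a one-off blip costs you at most 15 minutes of verbose logs.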

We are no longer searching for needles in haystacks. We are using AI to build the haystack only when we know a needle is about to drop. This ensures that when we do send data to the cloud for deep root-cause analysis, it is the right data.

Security and the “Trusted Machine”

Beyond efficiency, this architecture is a cornerstone of modern industrial security.

As detailed in Chapter 9 (Active Defense) of The Trusted Machine, the sad reality is that the more data you ship out of your secure facility, the larger your attack surface becomes.

By processing logs locally, the AI Edge Proxy acts as a semi-permeable membrane for your data. Detailed operational blueprints, precise manufacturing tolerances, and patient-identifiable telemetry stay within the local network boundary. Only sanitized insights leave the premises.

Furthermore, by having AI at the edge, we are now implementing “Industrial SIEM” locally. The proxy can detect not just mechanical anomalies, but security anomalies like a PLC suddenly trying to communicate with an unknown external IP address over a weird port and clamp down the connection instantly.
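The simplest form of that PLC check needs no AI at all: an allowlist of destinations and ports. The sketch below is only that rule-based floor, not the learned detection described above. The network ranges, the port set, and the function name `should_block` are hypothetical examples; a real site would load them from its own network policy.

```python
import ipaddress

# Assumption: approved destinations for PLC traffic, as CIDR ranges.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),     # hypothetical plant OT network
    ipaddress.ip_network("192.0.2.10/32"),  # hypothetical historian (documentation address)
]

# Assumption: approved ports (Modbus/TCP, OPC UA, MQTT over TLS).
ALLOWED_PORTS = {502, 4840, 8883}


def should_block(dest_ip: str, dest_port: int) -> bool:
    """True if a PLC's outbound connection falls outside the allowlist."""
    addr = ipaddress.ip_address(dest_ip)
    known_host = any(addr in net for net in ALLOWED_NETWORKS)
    return not (known_host and dest_port in ALLOWED_PORTS)


if __name__ == "__main__":
    print(should_block("10.1.2.3", 4840))     # internal OPC UA traffic
    print(should_block("203.0.113.7", 4444))  # unknown external IP, odd port
```

A learned model earns its keep on the cases this rule misses, such as an approved host talking at an unusual time or volume.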

The Bottom Line

If you are still sending petabytes of “System OK” logs to the cloud in 2026, you are operating on a 2015 playbook.

The future of Industry 4.0, Finance, and Healthcare isn’t Big Data; it’s **Smart Data**. It’s about having trusted, AI-driven watchers at the edge that know the difference between noise and a signal, and have the autonomy to act on that knowledge in milliseconds.

References & Further Reading

The High Cost of Outages

High-impact outages in sectors like Finance and Media can cost enterprises over ~$2 million per hour, driving the need for faster, edge-based resolution rather than waiting on cloud latency.

Source: New Relic 2025/2026 Observability Forecast

The “Cloud Exit” Reality

By repatriating workloads from AWS to their own hardware, 37signals reported projected savings of over $10 million over five years, validating the financial case for owning your infrastructure rather than renting it.

Source: 37signals “Leaving the Cloud” Report

The Problem of Data Waste

Organizations have realized that nearly 70% of collected observability data is unnecessary, leading to massive inefficiencies in storage and processing.

Source: CNCF Blog: Observability Trends in 2025

Savings from Edge Filtering & Log Reduction

Adopting “Adaptive Logs” strategies to filter low-value data at the source can result in a 50% reduction in log volumes, supporting the financial viability of the AI Edge Proxy model.

Source: Grafana Blog: How to Cut Costs for Metrics and Logs

The Repatriation Trend

80% of CIOs expect some level of workload repatriation (moving back from cloud to on-prem/edge) to control costs, as organizations struggle to manage spiraling cloud spend.

Source: Flexera 2025 State of the Cloud Report
