Table of Links

-

Abstract and Introduction

-

System Architecture

2.1 Access via UI or HTTP

2.1.1 GUI

2.2 Input

2.3 Natural Language Processing Pipeline — The Llettuce API

2.3.1 Vector search

2.3.2 LLM

2.3.3 Concept Matches

2.4 Output

-

Case Study: Medication Dataset

3.1 Data Description

3.2 Experimental Design

3.3 Results

3.3.1 Comparison between vector search and Usagi

3.3.2 Comparison with GPT-3

3.4 Conclusions & Acknowledgement

3.5 References

2. System Architecture

Llettuce was written using Python for both a back-end and a user interface. The back-end comprises a vector store for semantic search, a local LLM to suggest mappings, and queries to a connected OMOP CDM database to provide the details of a suggested mapping to the user.

2.1 Access via UI or HTTP

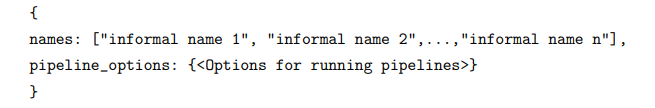

All interactions with Llettuce are made via HTTP requests to the Llettuce API. A POST request is made to the Llettuce server containing JSON with the following format:

The pipeline options are optional, so a request can be just a list of informal names.

2.1.1 GUI

For users less comfortable with the command line, a graphical user interface (GUI) is provided. This is built using the Streamlit (Streamlit, 2024) Python framework, and presents the user with two options. The first shows a text box where a user can type a comma-separated list of informal names to run through the pipeline. The second allows a user to upload a .csv file and choose a column containing names to run through the pipeline. For either option, the results of the pipeline are shown below the input.

2.2 Input

Llettuce uses FastAPI (Ramírez, 2024) to serve API endpoints. These endpoints allow different combinations of Llettuce modules to be used to find concept names.

2.3 Natural Language Processing Pipeline — The Llettuce API

2.3.1 Vector search

LLMs are good generalists for text generation. However, the OMOP CDM has a specialist vocubulary. To bridge this gap, Llettuce uses embeddings of OMOP concepts for semantic search. It has been demonstrated that encoder-only transformers produce representations of language such that semantically related concepts are close in the space of their embeddings (Bengio et al., 2003; Devlin et al., 2019). Llettuce uses sentence embeddings (Reimers & Gurevych, 2019) to generate embeddings for concepts. These are stored locally in a vector database. FastEmbed (‘Qdrant/Fastembed’, 2023, July 14/The embedded concept is compared with the stored vectors and the k embeddings with the highest dot-product with the query are retrieved from the database. Models used for embeddings are much smaller than LLMs, so generating an embedding demands less computational resources. Retrieval from the vector database also provides a score for how close the embeddings are to the query vector, so that a perfect match has a score of 1.

The top k embeddings are used for retrieval-augmented generation (RAG) (Lewis et.al., 2021). In RAG mode, the pipeline first queries the vector database. A threshold on the similarity of the embeddings has been set such that embeddings above this are exact matches. If there is an embedding with a score above this threshold, these are provided to the user. If there is no very close match, the embeddings are inserted into a prompt, which serves as input to the LLM, as discussed next. The rationale is that close embeddings may either contain the answer, which the LLM can select, or hints as to what might be close to the right answer.

2.3.2 LLM

Llettuce uses Llama.cpp (Gerganov et al., 2024) to run the latest Llama LLM from Meta (Dubey et al., 2024). Llama.cpp provides an API for running transformer inference. It detects the available hardware and uses optimisations for efficient inference running on central processing units (CPUs).

The version of Llama tested in our case study has 8 billion parameters (Llama 3.1 8B). Models are trained with each parameter as a 32-bit floating point number. Quantisation was employed to reduce the size of the model, with little loss of accuracy (Dettmers & Zettlemoyer, 2023; Jacob et al., 2017). The full precision Llama 3.1 8B requires over 32 Gb RAM to run, whereas the 4-bit quantised model requires less than 5 Gb. Most consumer laptops are therefore able to keep the model in memory

Llettuce uses Haystack (deepset GmbH, 2024) to orchestrate its LLM pipelines. For LLM-only pipelines there is a component that takes an informal name and inserts it into a prompt template, and another which delivers this prompt to the LLM. The prompt used in these cases uses techniques recommended for Llama models (‘Meta-Llama/Llama-Recipes’, 2023, July 17/2024).

For example, the prompt for RAG contains detailed, explicit instructions

You are an assistant that suggests formal RxNorm names for a medication. You will be given the name of a medication, along with some possibly related RxNorm terms. If you do not think these terms are related, ignore them when making your suggestion.

Respond only with the formal name of the medication, without any extra explanation.

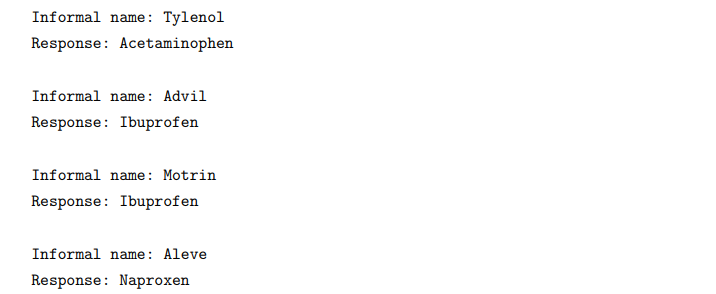

This part of the prompt also gives the LLM a role, which has been shown to improve consistency in responses(Kong et al., 2024). Importantly, the prompt includes providing examples of informal name/formal name pairs, an effective tactic for LLM prompting (Brown et al., 2020):

Once the LLM has inferred a formal name for the informal name provided, this formal name is used as a concept name in a parameterised OMOP CDM query.

2.3.3 Concept Matches

To retrieve the details of any concept through a Llettuce pipeline, an OMOP CDM database is queried. OMOP CDM queries are generated using an object-relational mapping through SQLAlchemy (Bayer, 2012). The string used for the concept name field is first preprocessed by removing punctuation and stop words and splitting up the words with the pipe character for compatibility with PostgreSQL in-database text search. For example, the string “paracetamol and caffeine” has the stop-word “and” removed, and the remaining 196 words used to build the string “paracetamol | caffeine”. The words of this search term are used for a text search query against the concept names of the selected OMOP vocabularies. Optionally, concept synonyms can be included in this query. The retrieved concept names are then compared with the input by fuzzy string matching, and any names above the threshold are presented to the user.

2.4 Output

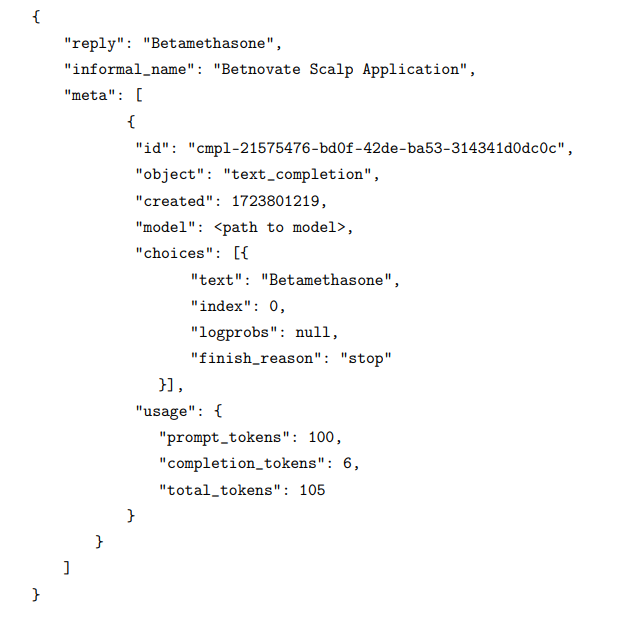

Llettuce pipelines emit JSON containing the results of the pipeline. For example, for a pipeline running the LLM and OMOP query, an input request as follows:

{“names”: [“Betnovate Scalp Application”]}

returns JSON output for the “events” llmoutput and omopoutput for each name sent to Llettuce.

The llmoutput contains a reply of the LLM’s response, the informalname supplied in the request, and meta describing metadata about the LLM’s run.

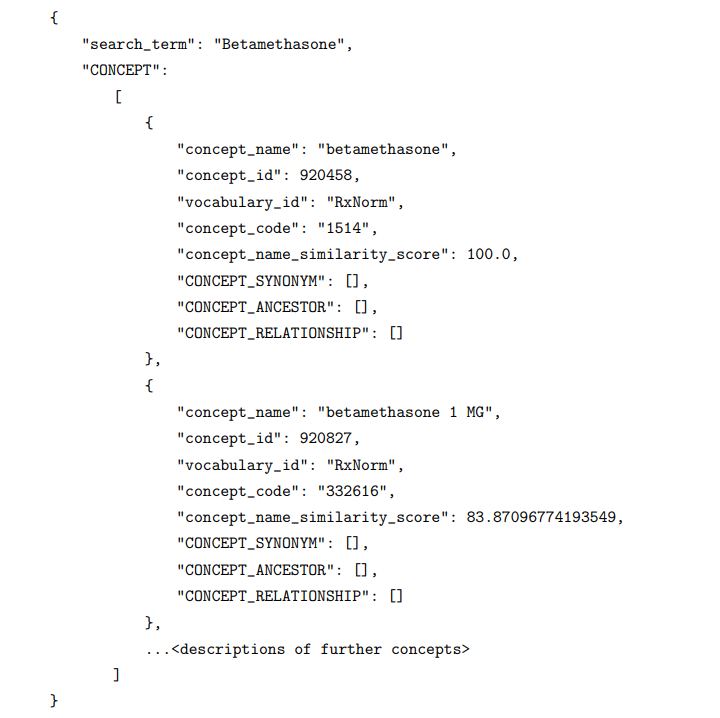

The omopoutput event contains the searchterm sent to the OMOP-CDM, then a CONCEPT array, where each item is a match meeting the threshold set on fuzzy string matching. Each item describes the conceptname, conceptid, vocabularyid, and conceptcode from the OMOP-CDM’s concept table, followed by the conceptnamesimilarity_score calculated in Llettuce. Llettuce also has the option to fetch further information from the OMOP-CDM, not enabled in the default configuration, which is included in the entry for each concept.

The GUI parses this and displays it in a more user-friendly format.

:::info

Authors:

(1) James Mitchell-White, Centre for Health Informatics, School of Medicine, The University of Nottingham, Digital Research Service, The University of Nottingham, and NIHR Nottingham Biomedical Research Centre;

(2) Reza Omdivar, Digital Research Service, The University of Nottingham, and NIHR Nottingham Biomedical Research Centre;

(3) Esmond Urwin, Centre for Health Informatics, School of Medicine, The University of Nottingham and NIHR Nottingham Biomedical Research Centre;

(4) Karthikeyan Sivakumar, Digital Research Service, The University of Nottingham;

(5) Ruizhe Li, NIHR Nottingham Biomedical Research Centre and School of Computer Science, The University of Nottingham;

(6) Andy Rae, Centre for Health Informatics, School of Medicine, The University of Nottingham;

(7) Xiaoyan Wang, Lee Kong Chian School of Medicine, Nanyang Technological University, Singapore;

(8) Theresia Mina, Lee Kong Chian School of Medicine, Nanyang Technological University, Singapore;

(9) John Chambers, Lee Kong Chian School of Medicine, Nanyang Technological University, Singapore and Department of Epidemiology and Biostatistics, School of Public Health, Imperial College London, United Kingdom;

(10) Grazziela Figueredo, Centre for Health Informatics, School of Medicine, The University of Nottingham and NIHR Nottingham Biomedical Research Centre;

(11) Philip R Quinlan, Centre for Health Informatics, School of Medicine, The University of Nottingham.

:::

:::info

This paper is available on arxiv under CC BY 4.0 license.

:::