:::info

Authors:

(1) Likun Zhang;

(2) Hao Wu;

(3) Lingcui Zhang;

(4) Fengyuan Xu;

(5) Jin Cao;

(6) Fenghua Li;

(7) Ben Niu∗.

:::

Table of Links

- Abstract and Introduction

- Background & Related Work

  - 2.1 Text-to-Image Diffusion Model

  - 2.2 Watermarking Techniques

  - 2.3 Preliminary

  - 2.3.1 Problem Statement

  - 2.3.2 Assumptions

  - 2.4 Methodology

  - 2.4.1 Research Problem

  - 2.4.2 Design Overview

  - 2.4.3 Instance-level Solution

  - 2.5 Statistical-level Solution

- Experimental Evaluation

  - 3.1 Settings

  - 3.2 Main Results

  - 3.3 Ablation Studies

  - 3.4 Conclusion & References

Abstract

The emergence of text-to-image models has recently sparked significant interest, but it is accompanied by a looming risk of infringement through violations of user terms. Specifically, an adversary may exploit data created by a commercial model to train their own model without proper authorization. To address this risk, it is crucial to investigate the attribution of a suspicious model’s training data by determining whether that data originates, wholly or partially, from a specific source model. To trace generated data, existing methods require applying extra watermarks during either the training or inference phase of the source model. However, these methods are impractical for pre-trained models that have already been released, especially when model owners lack security expertise. To tackle this challenge, we propose an injection-free training data attribution method for text-to-image models. It can identify whether a suspicious model’s training data stems from a source model, without any additional modification to the source model. The crux of our method lies in the inherent memorization characteristic of text-to-image models. Our core insight is that the memorization of the training dataset is passed down through the data generated by the source model to the model trained on that data, so that the source model and the infringing model exhibit consistent behaviors on specific samples. Our approach therefore develops algorithms to uncover these distinctive samples and uses them as inherent watermarks to verify whether a suspicious model originates from the source model. Our experiments demonstrate that our method achieves an accuracy of over 80% in identifying the source of a suspicious model’s training data, without interfering with the original training or generation process of the source model.

ACM Reference Format: Likun Zhang, Hao Wu, Lingcui Zhang, Fengyuan Xu, Jin Cao, Fenghua Li, Ben Niu. 2024. Training Data Attribution: Was Your Model Secretly Trained On Data Created By Mine?. In . ACM, New York, NY, USA, 9 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn

1 Introduction

Text-to-image generation systems based on diffusion models have become popular tools for creating digital images and artwork [16, 17]. Given an input prompt in natural language, these generative systems can synthesize digital images of high aesthetic quality. Nevertheless, training these models is resource-intensive, demanding substantial amounts of data and training resources. These costs make such models valuable intellectual property for model owners, even though the model structures are usually public.

One significant concern for such models is the unauthorized usage of their generated data [10]. As illustrated in Figure 1, an attacker could potentially query a commercial model and collect the data generated by the model, then use the generated data to train their personalized model. For simplicity in narration, we denote

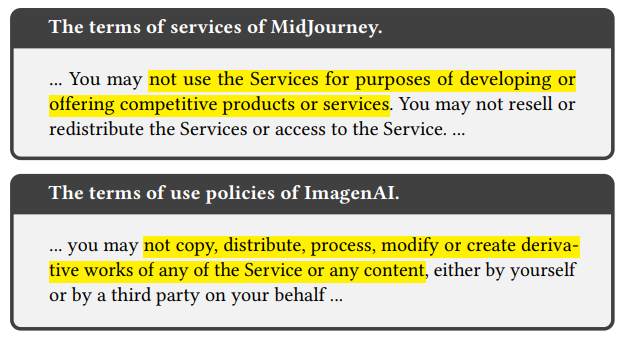

he attacker’s model as the suspicious model and denote the commercial model as the source model. This attack has already raised the alarm among commercial model developers. Some leading companies, e.g., MidJourney [14] and ImagenAI [7], have explicitly stated in their user terms that such practices are not permitted, as shown in Figure 2. It is crucial to investigate the relationship between the source model and the suspicious model. We term the task as training data attribution.

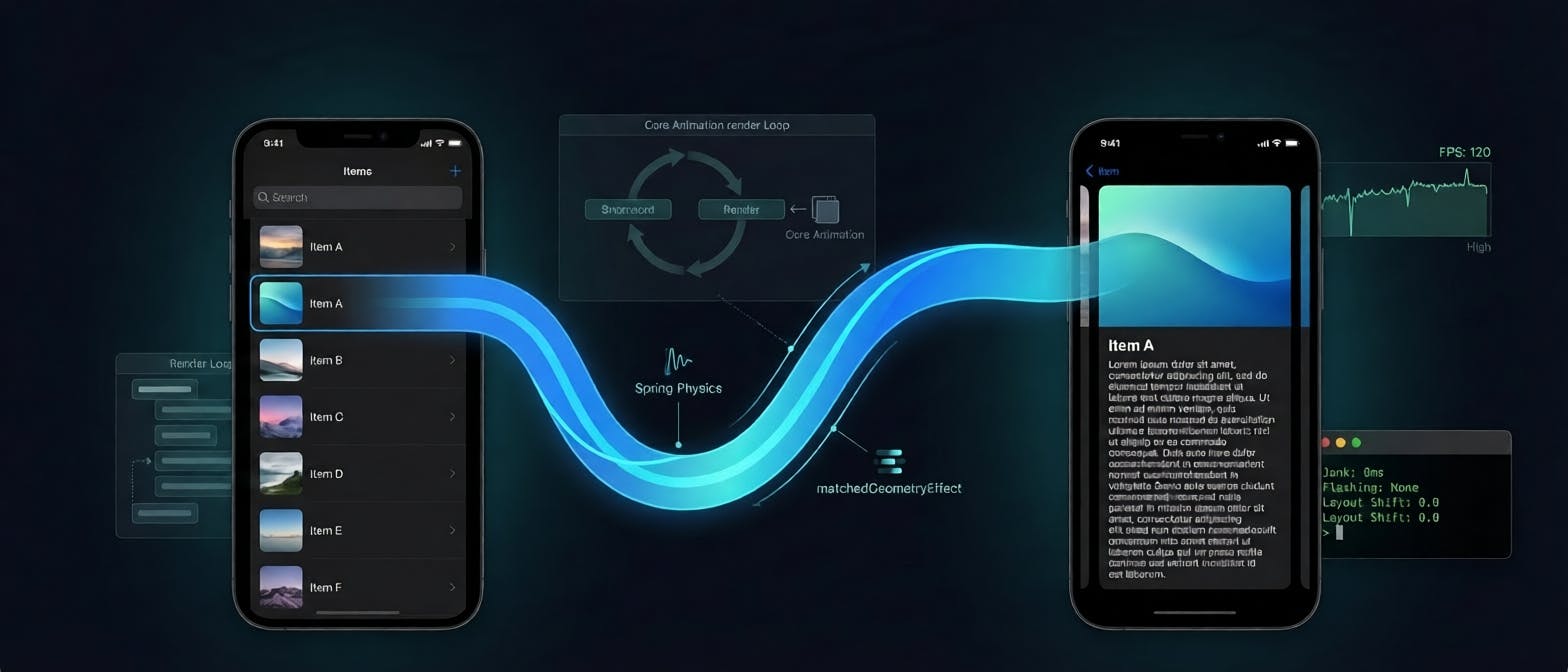

A natural way to tackle this task is to use watermarking techniques. Existing watermarking methods can generally be categorized into two types: one embeds watermarks in the training data during the model training phase [11, 12, 28], and the other adds watermarks to the model outputs after training [10], so that the generated data contains traceable watermark characteristics. However, there are two issues that existing works do not fully address. First, regarding feasibility, it remains unexplored whether a source model, once watermarked, can successfully pass its watermark to a suspicious model through generated data. Second, regarding usability, watermarking techniques could affect the generation quality of the source model [28], whether applied during or after training. Moreover, applying these techniques requires security knowledge, which raises the bar for practical application.

In this paper, our goal is to uncover the indicators naturally embedded within a source model that can be transferred to any model trained on data produced by the source model. These inherent watermarks can reveal the relationship between the source and suspicious models. Unlike artificially injected watermarks, these inherent indicators do not require modifications to the model’s training algorithm or outputs. This means that applying our attribution approach does not compromise the model’s generation quality and does not require any security knowledge.

The rationale of our approach stems from the memorization phenomenon exhibited by text-to-image generation models. Memorization refers to a model’s ability to remember and reproduce the images of certain training samples when it is prompted with the corresponding texts during inference [23]. Research has shown that such memorization in generative models is not incidental. On the contrary, models with superior performance and stronger generalization abilities exhibit more pronounced memorization [23].

Though promising, applying the memorization phenomenon to achieve our goal is not straightforward. Even if we manage to conduct a successful training data extraction on the suspicious model as proposed in [3], the information we procure is the data generated by the source model. Given that the generation space of the source model is vast, it becomes challenging to verify whether the extracted data was generated by the source model. We will detail the challenges in a formal manner in Section 3.

In this paper, we propose a practical injection-free method to ascertain whether a suspicious model has been trained using data generated by a certain source model. Our approach considers both the instance-level and statistical-level behavior characteristics of the source model, which are treated as part of the inherent indicators used to trace its generated data against unauthorized usage. In particular, at the instance level, we devise two strategies to select a set of key samples (in the form of text and image pairs) within the source model’s training data. This set is chosen to maximize the memorization phenomenon. We then use the texts in these samples to query the suspicious model at minimal cost, evaluating the relationship between the two models based on the similarity of their outputs. At the statistical level, we develop a technique that trains several shadow models on datasets that either contain or do not contain data generated by the source model. We then estimate the metric distributions to perform data attribution with high confidence.
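The instance-level query step described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration rather than the paper's actual procedure: models are stand-in callables that map a prompt to a small feature vector, cosine similarity is used as the output similarity, and `sim_threshold` and the function name `instance_level_confidence` are hypothetical. The key-sample selection strategies themselves are not shown.

```python
import math
from typing import Callable, Sequence

Vector = Sequence[float]
Model = Callable[[str], Vector]  # hypothetical stand-in: prompt -> image features


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def instance_level_confidence(
    key_prompts: Sequence[str],
    source_model: Model,
    suspicious_model: Model,
    sim_threshold: float = 0.9,  # assumed value, not taken from the paper
) -> float:
    """Fraction of key prompts on which the two models produce similar outputs."""
    if not key_prompts:
        raise ValueError("at least one key prompt is required")
    hits = 0
    for prompt in key_prompts:
        similarity = cosine_similarity(source_model(prompt), suspicious_model(prompt))
        if similarity >= sim_threshold:
            hits += 1
    return hits / len(key_prompts)


# Toy stand-ins for real models: lookup tables from prompt to feature vector.
key_prompts = ["a red fox", "a blue boat", "a green hill"]
source = {"a red fox": [1.0, 0.0, 0.0], "a blue boat": [0.0, 1.0, 0.0],
          "a green hill": [0.0, 0.0, 1.0]}
infringing = {"a red fox": [0.9, 0.1, 0.0], "a blue boat": [0.1, 0.9, 0.0],
              "a green hill": [0.0, 0.1, 0.9]}
innocent = {"a red fox": [0.0, 1.0, 0.0], "a blue boat": [0.0, 0.0, 1.0],
            "a green hill": [1.0, 0.0, 0.0]}

conf_infringing = instance_level_confidence(
    key_prompts, source.__getitem__, infringing.__getitem__)
conf_innocent = instance_level_confidence(
    key_prompts, source.__getitem__, innocent.__getitem__)
print(conf_infringing, conf_innocent)
```

In this toy setup the model whose outputs track the source model on the key prompts receives a high score, while the unrelated model does not. A real pipeline would replace the lookup tables with actual model inference and an image-level similarity measure.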

Experimental results demonstrate that our instance-level attribution solution reliably identifies an infringing model with a confidence above 0.8. Even when the infringing model uses only a small proportion of generated data, as little as 30%, the attribution confidence remains above 0.6, on par with the existing watermark-based attribution method. The statistical-level solution achieves an overall accuracy of over 85% in distinguishing the source of a suspicious model’s training data.
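To make the statistical-level idea more concrete, the sketch below shows one possible way to turn shadow-model metrics into an attribution decision. It is an illustration under stated assumptions, not the paper's algorithm: the metric values are made-up numbers standing in for some per-model score (here a distance where lower means closer to the source model), each group of shadow models is summarized by a fitted Gaussian, and the decision compares the two likelihoods under equal priors. The function names are hypothetical.

```python
from statistics import NormalDist
from typing import Sequence, Tuple


def fit_metric_distributions(
    metrics_with: Sequence[float],
    metrics_without: Sequence[float],
) -> Tuple[NormalDist, NormalDist]:
    """Fit one Gaussian per group of shadow models (assumed distribution family)."""
    return NormalDist.from_samples(metrics_with), NormalDist.from_samples(metrics_without)


def attribute(
    suspicious_metric: float,
    dist_with: NormalDist,
    dist_without: NormalDist,
) -> Tuple[bool, float]:
    """Return (decision, posterior) that the model was trained on generated data.

    Equal priors are assumed for the two hypotheses.
    """
    p_with = dist_with.pdf(suspicious_metric)
    p_without = dist_without.pdf(suspicious_metric)
    total = p_with + p_without
    posterior = 0.5 if total == 0.0 else p_with / total
    return posterior > 0.5, posterior


# Made-up metric values for shadow models trained with / without generated data.
shadow_with = [0.12, 0.15, 0.10, 0.14]
shadow_without = [0.45, 0.50, 0.40, 0.48]
dist_with, dist_without = fit_metric_distributions(shadow_with, shadow_without)

flag_a, post_a = attribute(0.13, dist_with, dist_without)  # close to the "with" group
flag_b, post_b = attribute(0.47, dist_with, dist_without)  # close to the "without" group
print(flag_a, flag_b)
```

The Gaussian fit keeps the example dependency-free. Any density estimate or hypothesis test over the two groups of shadow-model metrics could be substituted without changing the overall structure.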

Our main contributions are summarized as follows:

- Focusing on the issue of user term violations caused by the abuse of data generated by pre-trained text-to-image models, we formulate the problem as training data attribution in a realistic scenario. To the best of our knowledge, we are the first to investigate the relationship between a suspicious model and the source model.

- We propose two novel injection-free solutions to attribute training data of a suspicious model to the source model at both the instance level and statistical level. These methods can effectively and reliably identify whether a suspicious model has been trained on data produced by a source model.

- We carry out an extensive evaluation of our attribution approach. The results demonstrate that its performance is on par with an existing watermark-based attribution approach in which watermarks are injected before a model is deployed.

The rest of the paper is organized as follows. We introduce the background knowledge and related work in Section 2. Section 3 describes the preliminaries and our assumptions. Then, Section 4 presents our research question and the attribution approach in detail. Experimental evaluation results are reported in Section 5. Finally, we conclude this paper in Section 6.

:::info

This paper is available on arXiv under the CC BY 4.0 license.

:::