At Tesco Technology, designing a complex internal simulation platform for high-stakes decisions made one thing clear: even the most advanced AI tools can mislead if they are not intuitive, user-friendly, and designed around the humans who use them. This raised a fundamental question: how can we make advanced data-driven tools truly user-centric? To answer it, we set out to design a framework for complex AI systems that bridges the gap between human-centred design and AI development.

This practical framework helps designers, data scientists, and engineers find common ground. It aligns the voice and needs of users with the design of AI systems, ensuring that human insights shape not only the interfaces but also the underlying logic and behaviour of AI tools, so that users trust them and actually enjoy using them. The framework guides you through every phase of product development, from early research to post-launch feedback, highlighting where and how humans should be ‘in’ and ‘on’ the loop. The goal is to make complex AI systems not just functional but transparent, learnable, and trustworthy: systems designed to support decision-making rather than replace human decision-makers, especially in strategic contexts and for users without a data science background.

Introduction

In the design world, we often refer to human-centred design, an approach focused on empathy, usability, and solving real user problems. In data science and engineering, however, users are often considered late in the process, typically as validators of inputs. While some teams still prioritise technical feasibility, ideally a system’s design should be driven by users’ needs and understanding.

This tension is often framed in terms of technological determinism, the idea that people, culture, and the economy evolve as a result of technological progress. In contrast, the social construction of technology holds that people shape technology to bring about change, rather than the other way around. In practice, however, technology development is often constrained by data availability, system limitations, or organisational priorities rather than driven solely by ideal user needs or societal goals. This framework aligns more closely with the social construction of technology: users actively shape how the system evolves rather than passively adapting to it, which is essential in strategic contexts.

General terms

Human-in-the-Loop (HITL): Humans actively participate in the decision-making process. Ask: “Does the user have to shape or approve the AI’s actions before they are taken?”

Human-on-the-Loop (HOTL): Humans provide feedback and oversight after the AI has made a decision. Ask: “Does the user monitor, interpret or improve the AI’s output?”

Generative AI (GenAI) and large language models (LLMs): Computational methods that generate new content, such as text, images, code, video or audio, based on patterns learned from training data. They can also act as assistants, including multi-agent setups, that run multiple tasks at scale.

Double Diamond (DD): A clear, visual model of the design process, made up of four phases: Discover, Define, Develop, and Deliver.

AI systems that operate without human supervision can be powerful but also misleading, especially in high-stakes areas involving strategic decisions (e.g., healthcare, finance). HOTL structures provide a vital balance between autonomy and accountability: humans monitor AI operations, intervene when necessary, and ensure safety, ethical compliance, and continuous improvement of the system. Unlike fully automated systems, HOTL gives humans an oversight role (monitoring, guiding, and intervening at critical moments) while still allowing the AI to operate smoothly in the background.

Why it matters

Without proper controls and boundaries, AI can make biased or unintentionally harmful decisions. HOTL ensures that human experts contribute qualitative judgments and contextual insights that may not be captured in the data or available to the system. This oversight not only reduces risk but also adds nuance that AI cannot supply on its own. Human oversight also builds trust, because ongoing feedback improves the AI over time.

Framework with key actions

1. Research and exploration

DD: Discover

HOTL focus: User insight informs model and tool design.

Before writing code or designing screens, immerse yourself in the user’s world. Their context, needs, environment, problems, and behaviours should drive your technical direction and data strategy.

- Conduct qualitative and quantitative research.

- Gather user context, behavioural patterns, and user experiences.

- Map user journeys to surface pain points, needs, and motivations.

- Integrate lightweight research tools into the platform (e.g., micro-surveys, feedback on the process).

- Determine how often to ask for feedback, balancing the value of the insight against the cost of interrupting users.
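To make feedback frequency concrete, a team could enforce a minimum interval between micro-surveys for each user. The sketch below is a minimal Python example under that assumption; the class name `SurveyThrottle` and the seven-day interval are hypothetical placeholders, and the right interval should come from your own research.

```python
from datetime import datetime, timedelta
from typing import Dict, Optional

# Hypothetical default: at most one micro-survey per user every seven days.
DEFAULT_MIN_INTERVAL = timedelta(days=7)


class SurveyThrottle:
    """Decides whether a user may be shown a micro-survey right now."""

    def __init__(self, min_interval: timedelta = DEFAULT_MIN_INTERVAL) -> None:
        self.min_interval = min_interval
        self.last_shown: Dict[str, datetime] = {}  # user_id -> time of last prompt

    def should_prompt(self, user_id: str, now: datetime) -> bool:
        last: Optional[datetime] = self.last_shown.get(user_id)
        # Prompt only if the user has never been asked or the interval has elapsed.
        return last is None or now - last >= self.min_interval

    def record_prompt(self, user_id: str, now: datetime) -> None:
        self.last_shown[user_id] = now
```

Starting with a generous interval and shortening it only if response rates stay healthy is one way to avoid survey fatigue.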

2. Communications and interface design

DD: Define

HOTL: Interfaces guide and stimulate human interaction with models.

Clearly define problems and system structures for simulation tasks. Clarify interactions and demonstrate system behaviour to reduce cognitive load.

- Turn research into actionable design decisions that empower users to act, learn, and intervene in meaningful ways.

- Translate research into clear problem definitions and system interaction patterns.

- Design and implement five types of communication:
  - warnings (e.g., error alerts)
  - notices (e.g., completion alerts, email notifications)
  - status indicators (e.g., loading, success, pending)
  - training messages
  - policy messages.

- Use visual signals (colour, icons, animation) to indicate certainty, confidence or urgency and to manage users’ cognitive load.
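One way to keep the five communication types and their visual signals consistent across the platform is to define them once in code. The sketch below assumes Python; the colours, icon names, the `requires_ack` flag and the confidence cut-offs (0.8 and 0.5) are hypothetical placeholders rather than values from the platform.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class MessageType(Enum):
    WARNING = "warning"    # e.g., error alerts
    NOTICE = "notice"      # e.g., completion alerts, email notifications
    STATUS = "status"      # e.g., loading, success, pending
    TRAINING = "training"
    POLICY = "policy"


# Hypothetical visual conventions; swap in your design system's tokens.
VISUALS = {
    MessageType.WARNING: {"colour": "red", "icon": "alert-triangle", "requires_ack": True},
    MessageType.NOTICE: {"colour": "blue", "icon": "bell", "requires_ack": False},
    MessageType.STATUS: {"colour": "grey", "icon": "spinner", "requires_ack": False},
    MessageType.TRAINING: {"colour": "green", "icon": "lightbulb", "requires_ack": False},
    MessageType.POLICY: {"colour": "purple", "icon": "shield", "requires_ack": True},
}


@dataclass
class Message:
    type: MessageType
    text: str
    confidence: Optional[float] = None  # model confidence, if the message reports an output

    def render(self) -> dict:
        payload = {"type": self.type.value, "text": self.text, **VISUALS[self.type]}
        if self.confidence is not None:
            # Show a coarse label instead of a raw probability to reduce cognitive load.
            if self.confidence >= 0.8:
                payload["confidence_label"] = "high"
            elif self.confidence >= 0.5:
                payload["confidence_label"] = "medium"
            else:
                payload["confidence_label"] = "low"
        return payload
```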

2.1. Data inputs and trust mechanisms

DD: Define – Develop

2.1.1. Data preprocessing

HOTL: The system alerts users, but no active decision-making is required.

- Automate preprocessing where possible to reduce manual work.

- Notify users of upload errors or invalid formats.

- Create transparent model input guides.

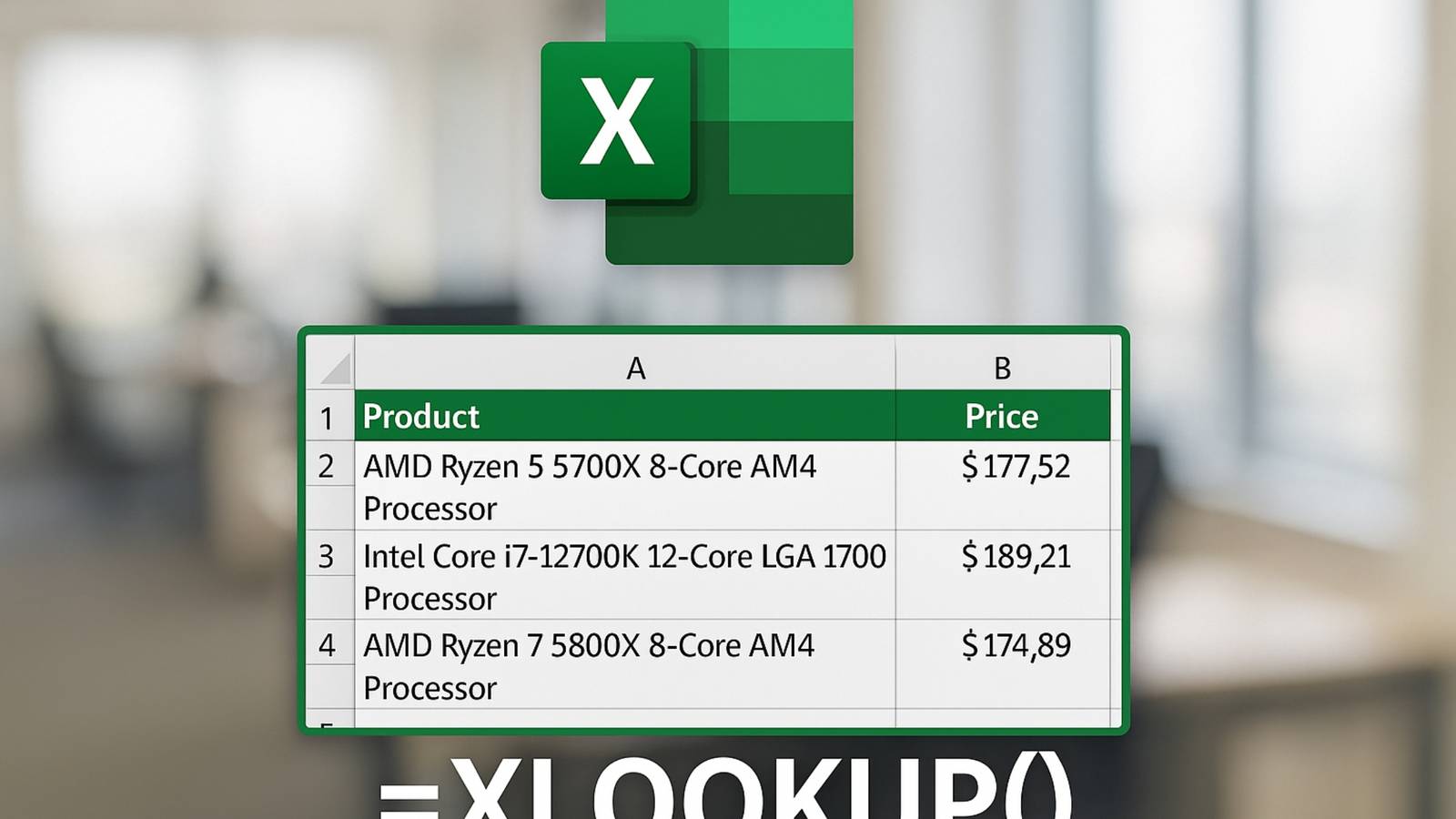
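Upload checks like these can run automatically before a file reaches the model, returning plain-language messages instead of raw errors. The Python sketch below assumes a CSV input with three hypothetical columns (`store_id`, `week`, `units`); the real schema would come from your model input guide.

```python
import csv
import io
from typing import List

# Hypothetical schema for a simulation input file.
REQUIRED_COLUMNS = {"store_id", "week", "units"}
INTEGER_COLUMNS = ("week", "units")


def validate_upload(text: str) -> List[str]:
    """Return user-facing error messages; an empty list means the upload is valid."""
    reader = csv.DictReader(io.StringIO(text))
    header = set(reader.fieldnames or [])
    missing = REQUIRED_COLUMNS - header
    if missing:
        return [f"Missing required column(s): {', '.join(sorted(missing))}."]
    errors: List[str] = []
    for line_no, row in enumerate(reader, start=2):  # line 1 is the header
        for col in INTEGER_COLUMNS:
            try:
                int(row[col])
            except (TypeError, ValueError):  # TypeError covers short rows (value is None)
                errors.append(f"Line {line_no}: '{col}' must be a whole number, got {row[col]!r}.")
    return errors
```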

2.1.2. Inputs that guide system behaviour (e.g., scenario parameters or decision boundaries)

HITL: Users shape inputs and set controls.

- Segment users based on their roles and expertise level.

- Enable expert users to manually set simulation variables.

- Provide presets for beginners.
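Role segmentation, presets and expert overrides can share one entry point. This sketch assumes two hypothetical roles (`beginner`, `expert`), two invented presets, and illustrative parameter bounds; none of these names or numbers come from the platform described here.

```python
from dataclasses import dataclass
from typing import Dict, Optional

# Hypothetical presets and bounds; real values would come from domain experts.
PRESETS: Dict[str, Dict[str, float]] = {
    "conservative": {"price_change_pct": -2.0, "promo_weeks": 1},
    "balanced": {"price_change_pct": 0.0, "promo_weeks": 2},
}
BOUNDS = {"price_change_pct": (-20.0, 20.0), "promo_weeks": (0, 8)}


@dataclass
class User:
    name: str
    role: str  # assumed segmentation: "beginner" or "expert"


def build_scenario(user: User, preset: str,
                   overrides: Optional[Dict[str, float]] = None) -> Dict[str, float]:
    """Start from a preset; let experts override variables within safe bounds."""
    params = dict(PRESETS[preset])  # copy so the shared preset is never mutated
    if overrides:
        if user.role != "expert":
            raise PermissionError("Only expert users can set simulation variables manually.")
        for key, value in overrides.items():
            if key not in BOUNDS:
                raise KeyError(f"Unknown simulation variable: {key}")
            low, high = BOUNDS[key]
            if not low <= value <= high:
                raise ValueError(f"{key}={value} is outside the allowed range [{low}, {high}].")
            params[key] = value
    return params
```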

2.1.3. Output validator

HITL: Set approval thresholds for high-risk outcomes before taking any action.

- Implement checkpoints where user approval is required.

- Create audit trails of user decisions across user segments (who approved what, and when).

- Create transparent model output guides.
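An approval checkpoint with an audit trail can be modelled as a gate that blocks high-risk outputs until a user records a decision. The Python sketch below is illustrative: the risk score, the 0.7 threshold and the segment names are assumptions, and a production audit trail would live in durable, append-only storage rather than an in-memory list.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import List, Optional

RISK_THRESHOLD = 0.7  # hypothetical: outputs scoring above this need explicit approval


@dataclass(frozen=True)
class AuditEntry:
    output_id: str
    user: str
    segment: str    # user segment, e.g. "analyst" or "manager" (hypothetical)
    decision: str   # "approved" or "rejected"
    timestamp: datetime


class ApprovalCheckpoint:
    def __init__(self, threshold: float = RISK_THRESHOLD) -> None:
        self.threshold = threshold
        self.audit_log: List[AuditEntry] = []  # append-only: who approved what, and when

    def needs_approval(self, risk_score: float) -> bool:
        return risk_score > self.threshold

    def decide(self, output_id: str, user: str, segment: str, approved: bool,
               now: Optional[datetime] = None) -> AuditEntry:
        entry = AuditEntry(output_id, user, segment,
                           "approved" if approved else "rejected",
                           now or datetime.now(timezone.utc))
        self.audit_log.append(entry)
        return entry

    def can_execute(self, output_id: str, risk_score: float) -> bool:
        if not self.needs_approval(risk_score):
            return True
        # The most recent decision for this output wins; no decision means blocked.
        for entry in reversed(self.audit_log):
            if entry.output_id == output_id:
                return entry.decision == "approved"
        return False
```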

2.1.4. Trust and security in the loop

HOTL: The team identifies anomalies and defines thresholds that require user review.

- Define thresholds that trigger mandatory user validation.

- Track validation errors over time to improve the system’s logic or to identify the need for additional training.
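As an example of a threshold that triggers mandatory validation, the sketch below flags an output when it sits more than three standard deviations from its recent history. This is a simple z-score rule swapped in for illustration; your team may choose a different anomaly test. It also tracks, week by week, how often reviewers reject flagged outputs, one possible signal that the logic needs work or that users need more training. The threshold and week labels are hypothetical.

```python
from collections import defaultdict
from statistics import mean, stdev
from typing import Dict, List

Z_THRESHOLD = 3.0  # hypothetical cut-off


def requires_review(value: float, history: List[float], z_threshold: float = Z_THRESHOLD) -> bool:
    """Return True if an output must be validated by a user before it is used."""
    if len(history) < 2:
        return True  # too little history to judge, so ask a human
    sd = stdev(history)
    if sd == 0:
        return value != history[0]  # any change from a constant history is unusual
    return abs(value - mean(history)) / sd > z_threshold


class ValidationTracker:
    """Counts, per week, how many flagged outputs were reviewed and how many were rejected."""

    def __init__(self) -> None:
        self.reviews: Dict[str, int] = defaultdict(int)
        self.rejections: Dict[str, int] = defaultdict(int)

    def record(self, week: str, rejected: bool) -> None:
        self.reviews[week] += 1
        if rejected:
            self.rejections[week] += 1

    def rejection_rate(self, week: str) -> float:
        reviewed = self.reviews.get(week, 0)
        return self.rejections.get(week, 0) / reviewed if reviewed else 0.0
```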

3. Communication processing

DD: Develop – Deliver

HOTL: Feedback must be clear and applicable in practice.

Showing results is not enough; users also need to understand what the results mean and what to do next. Consider how well each user is able to understand the communication, and provide learning experiences where users not only understand the message but also learn how to respond to it.

- Design messages with clarity, relevance, and actionability.

- Include explanations or links for further help.

- Conduct user testing on messaging effectiveness.

- Track metrics for comprehension and appropriate user response.
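A simple comprehension metric is the share of times a message is followed by an action the team considers appropriate. The sketch assumes usage logs can be reduced to `(message_id, next_action)` pairs; the message IDs and expected actions are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

# Hypothetical mapping from each message to the next actions that show it was understood.
EXPECTED_ACTIONS = {
    "upload_error": {"reupload", "open_input_guide"},
    "high_risk_output": {"approve", "reject", "open_explanation"},
}


def appropriate_response_rates(events: Iterable[Tuple[str, str]]) -> Dict[str, float]:
    """Share of times each message was followed by an expected action."""
    shown: Counter = Counter()
    appropriate: Counter = Counter()
    for message_id, next_action in events:
        shown[message_id] += 1
        if next_action in EXPECTED_ACTIONS.get(message_id, set()):
            appropriate[message_id] += 1
    return {m: appropriate[m] / shown[m] for m in shown}
```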

4. Platform hierarchy and navigation

DD: Deliver

HOTL: Help users in complex environments.

As systems become more complex, intuitive navigation and clarity become critical. A clear information architecture allows users to know where to find content (e.g., intuitive layout, accessibility).

- Develop intuitive navigation and content grouping.

- Create a sitemap with sections (e.g., training, feedback, support).

- Validate structure with card-sorting and usability tests.

- Add a search function.

- Maintain consistency across user journeys so that users can achieve their key goals.

5.1. Training

DD: Deliver

HOTL: Onboarding and training support deeper engagement.

Users need confidence to engage deeply with models, and that confidence comes from good training and reinforcement. Create different types of training materials for beginners and experienced users; these may include video content, successful case studies, and updates.

- Create multi-level learning content (from introductory to advanced).

- Record short tutorial videos, walkthroughs and showcase successful use cases.

- Send notifications to users about new or additional content (via in-app messages, email, alerts).

- Run usability tests to validate learning efficacy.

5.2. Knowledge application

Promote:

- knowledge retention, when the user is able to remember a message when a situation arises in which that knowledge needs to be applied.

- knowledge transfer, when the user is able to recognise situations in which a message is applicable and understand how to apply it.

Key actions:

- Create mechanisms for continuous learning (e.g., built-in tips, contextual prompts and guidance).

- Reinforce learning with periodic micro reminders.

- Create practice scenarios.

- Conduct user testing to ensure effectiveness.

6.1. Feedback loops

DD: Rediscover – Redefine

HOTL: User feedback helps improve the system continuously.

A living system evolves with its users, so feedback shouldn’t be an afterthought; it should be a function of the system. Set up mechanisms for continuous improvement based on user interactions and feedback: organise the collection of post-implementation information and integrate system and human feedback loops.

- Create mechanisms for continuous collection of user feedback (e.g. post-task surveys, built-in tips and guidance).

- Set up automated feedback collection based on system usage data (e.g., when an error is triggered, automatically open a window for sending feedback to the team).

- Schedule periodic feedback reviews with cross-functional teams.

- Set up dashboards for feedback analytics.

- Update the system iteratively based on real-world insights.
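Error-triggered feedback can be wired up as a small collector: when a qualifying error fires, the platform opens a feedback prompt pre-filled with context, and submissions are queued and summarised for the cross-functional review. The error codes, field names and the shape of the `summary` output below are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Dict, Iterable, List, Optional


@dataclass
class FeedbackRequest:
    user_id: str
    error_code: str
    screen: str  # where the error happened, captured automatically
    created_at: datetime = field(default_factory=lambda: datetime.now(timezone.utc))
    comment: str = ""


class FeedbackCollector:
    """Opens a feedback prompt for selected errors and queues submissions for review."""

    def __init__(self, prompt_on: Iterable[str] = ("SIMULATION_FAILED", "INVALID_INPUT")) -> None:
        self.prompt_on = set(prompt_on)  # hypothetical error codes worth asking about
        self.queue: List[FeedbackRequest] = []

    def on_error(self, user_id: str, error_code: str, screen: str) -> Optional[FeedbackRequest]:
        if error_code not in self.prompt_on:
            return None  # minor errors do not interrupt the user
        # The UI layer would open the feedback window with this pre-filled context.
        return FeedbackRequest(user_id, error_code, screen)

    def submit(self, request: FeedbackRequest, comment: str) -> None:
        request.comment = comment
        self.queue.append(request)

    def summary(self) -> Dict[str, int]:
        """Counts per error code, e.g. to feed a feedback analytics dashboard."""
        return dict(Counter(r.error_code for r in self.queue))
```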

6.2. Continuous improvement

- Document version changes with change logs visible to users.

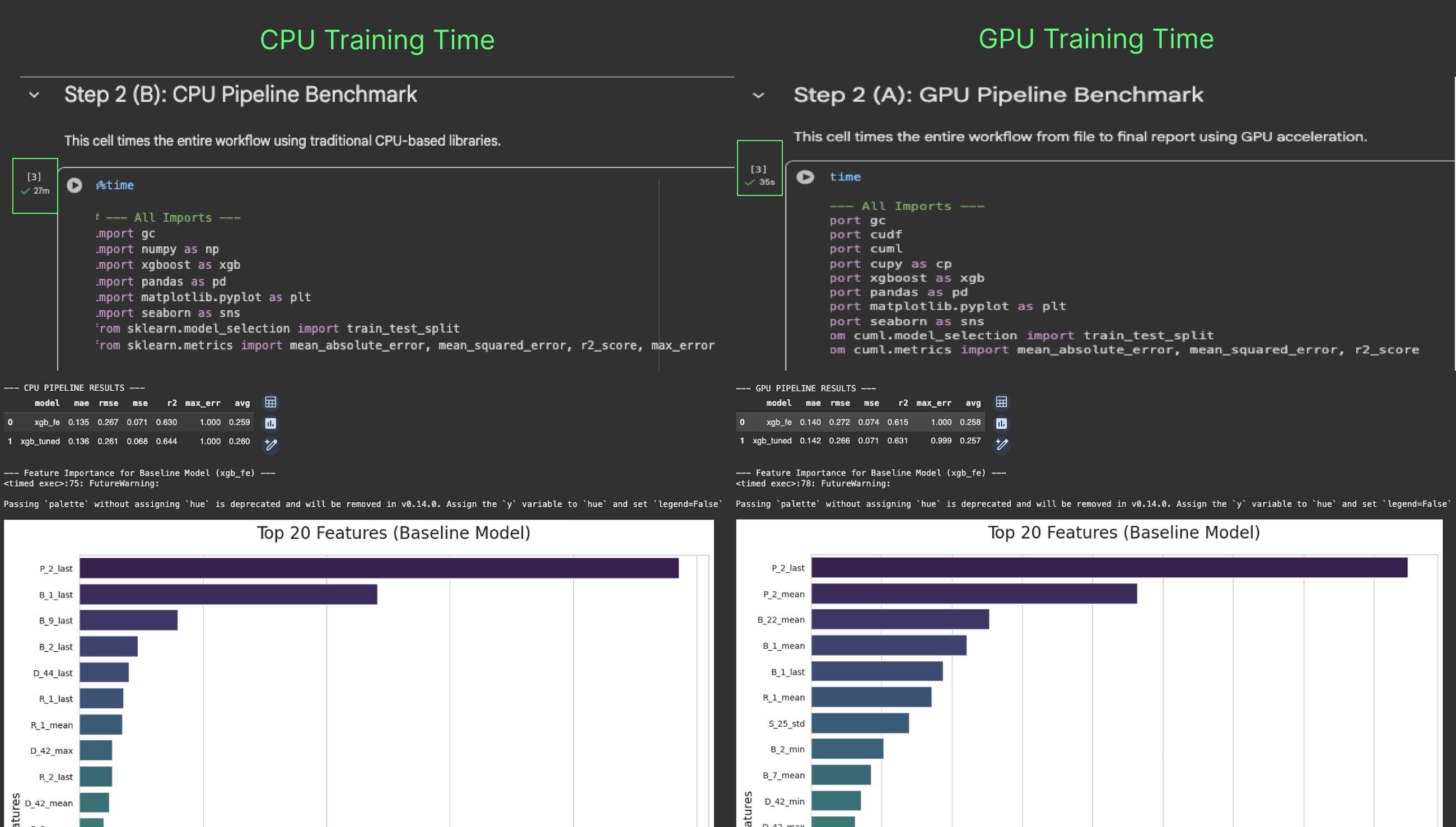

6.3. Data modelling

HITL: Users adjust or select scenarios.

- Integrate feedback loops where users correct, validate or select the best scenario.

7. Personalisation

DD: Rediscover

HOTL: Contextual relevance improves usability and comprehension.

Create personalised user experiences based on the user’s role. Tailored experiences increase adoption and satisfaction.

- Segment users and deliver role-based UI elements.

- Adapt training depth and frequency per user group.

- Recommend content dynamically based on past actions, errors, feedback or questions asked.
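Dynamic recommendations do not have to start with machine learning; a rule-based version can rank topics by how often a user recently hit errors or asked questions about them, then pick content at a depth suited to their role. In the sketch below, the catalogue, topic tags and role-to-depth mapping are hypothetical, and tagging errors or questions with topics is assumed to happen elsewhere.

```python
from collections import Counter
from typing import Iterable, List, Set

# Hypothetical catalogue: topic -> content item per depth level.
CATALOGUE = {
    "inputs": {"intro": "Video: preparing input files", "advanced": "Guide: custom input schemas"},
    "approvals": {"intro": "Walkthrough: approving outputs", "advanced": "Case study: risk thresholds"},
    "scenarios": {"intro": "Tutorial: using presets", "advanced": "Guide: manual scenario variables"},
}
ROLE_DEPTH = {"beginner": "intro", "expert": "advanced"}  # assumed role segmentation


def recommend(role: str, recent_topics: Iterable[str], viewed: Set[str], k: int = 2) -> List[str]:
    """Suggest up to k unseen items, prioritising topics the user struggled with most."""
    depth = ROLE_DEPTH.get(role, "intro")
    recs: List[str] = []
    # recent_topics: topics tagged on the user's recent errors, feedback or questions.
    for topic, _count in Counter(recent_topics).most_common():
        item = CATALOGUE.get(topic, {}).get(depth)
        if item and item not in viewed:
            recs.append(item)
        if len(recs) == k:
            break
    return recs
```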

8. Collaboration

DD: Redefine – Develop

HITL: Users are collaborators, not just validators.

Involve users and your team in the iterative improvement process. Their insights are critical to improving AI systems.

- Set up feedback sessions and co-creation workshops with different user segments.

- Encourage cross-disciplinary collaboration (design, engineering, data science, management).

- Create and maintain a shared document for ongoing user insights and feedback.

- Establish a framework for prioritising and implementing suggestions.

Conclusion

Human-centred design is more than just a workflow; it’s a philosophy that recognises users as co-creators of AI systems. By integrating design thinking and machine learning, this framework aims to ensure that technology serves people, not the other way around. In practice, this means creating intuitive interfaces that empower users through transparency, trust, and continuous feedback.

In a nutshell: