Table of Links

Abstract and 1 Introduction

2. Prior conceptualisations of intelligent assistance for programmers

3. A brief overview of large language models for code generation

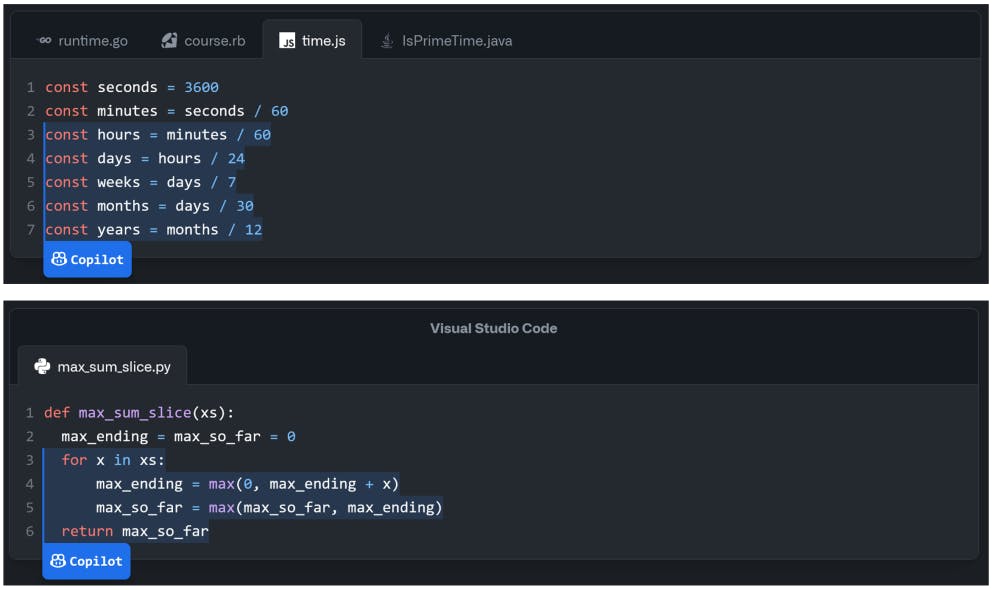

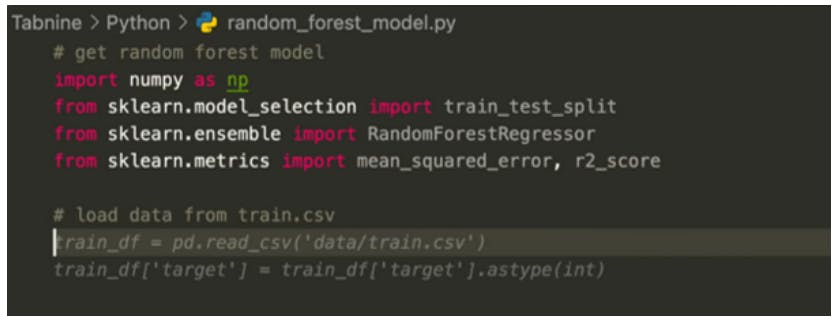

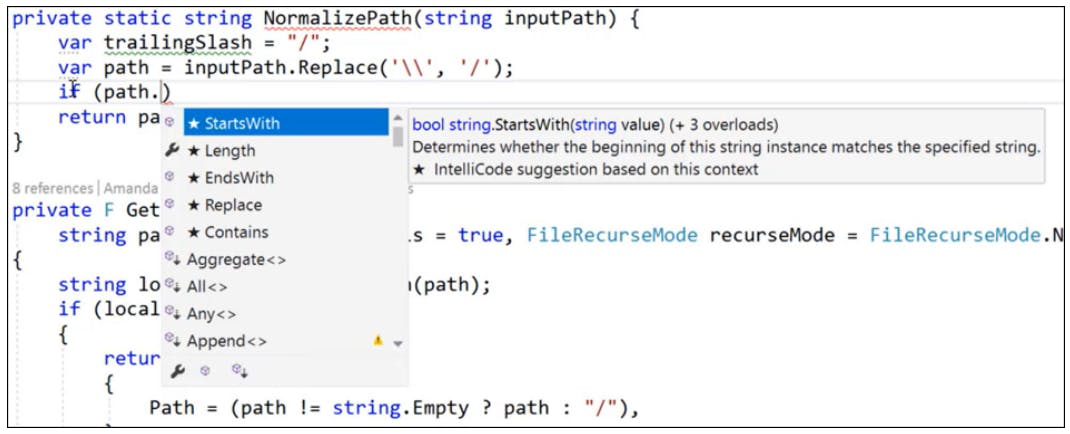

4. Commercial programming tools that use large language models

5. Reliability, safety, and security implications of code-generating AI models

6. Usability and design studies of AI-assisted programming

7. Experience reports and 7.1. Writing effective prompts is hard

7.2. The activity of programming shifts towards checking and unfamiliar debugging

7.3. These tools are useful for boilerplate and code reuse

8. The inadequacy of existing metaphors for AI-assisted programming

8.1. AI assistance as search

8.2. AI assistance as compilation

8.3. AI assistance as pair programming

8.4. A distinct way of programming

9. Issues with application to end-user programming

9.1. Issue 1: Intent specification, problem decomposition and computational thinking

9.2. Issue 2: Code correctness, quality and (over)confidence

9.3. Issue 3: Code comprehension and maintenance

9.4. Issue 4: Consequences of automation in end-user programming

9.5. Issue 5: No code, and the dilemma of the direct answer

10. Conclusion

A. Experience report sources

References

3. A brief overview of large language models for code generation

3.1. The transformer architecture and big datasets enable large pre-trained models

In the 2010s, natural language processing evolved in its language models (LMs), tasks, and evaluation. Mikolov et al. (2013) introduced Word2Vec, which assigns vectors to words such that similar words are grouped together. It relies on co-occurrences in text (like Wikipedia articles), though simple instantiations ignore the fact that words can have multiple meanings depending on context. Long short-term memory (LSTM) neural networks (Hochreiter & Schmidhuber, 1997; Sutskever et al., 2014), and later encoder-decoder networks, account for order in an input sequence. Self-attention (Vaswani et al., 2017) significantly simplified prior networks by replacing each element in the input by a weighted average of the rest of the input. Transformers combined the advantages of (multi-head) attention and word embeddings, enriched with positional encodings (which add order information to the word embeddings), into one architecture. While there are many alternatives to transformers for language modelling, in this paper when we mention a language model we will usually mean a transformer-based language model.
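
To make the "weighted average" view of self-attention concrete, the following is a minimal sketch in plain Python, not the full transformer layer. It assumes scaled dot-product attention with no learned query, key, or value projections and a single head, and, as in standard self-attention, each element also attends to itself. The embedding values and the function names `softmax`, `positional_encoding`, and `self_attention` are illustrative assumptions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def positional_encoding(position, dim):
    """Sinusoidal position vector in the style of Vaswani et al. (2017)."""
    enc = []
    for i in range(dim):
        angle = position / (10000 ** ((2 * (i // 2)) / dim))
        enc.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return enc

def self_attention(vectors):
    """Replace each vector by an attention-weighted average of all vectors.

    Simplification: queries, keys, and values are the input vectors themselves.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        outputs.append([sum(w * v[d] for w, v in zip(weights, vectors))
                        for d in range(dim)])
    return outputs

# Made-up 4-dimensional embeddings for a three-word input.
word_embeddings = [
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.5],
    [0.9, 0.1, 0.4, 0.0],
]
# Add order information to each embedding before applying attention.
inputs = [
    [e + p for e, p in zip(emb, positional_encoding(pos, 4))]
    for pos, emb in enumerate(word_embeddings)
]
contextual = self_attention(inputs)
```

Because the attention weights are positive and sum to one, every output vector is a convex combination of the input vectors, which is the sense in which each element is replaced by a weighted average.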

There are large collections of unlabelled text for some widely-spoken natural languages. For example, the Common Crawl project[1] produces around 20 TB of text data (from web pages) monthly. Labelled task-specific data is less prevalent. This makes unsupervised training appealing. Pre-trained LMs (J. Li et al., 2021) are commonly trained to perform next-word prediction (e.g., GPT (Brown et al., 2020)) or to fill a gap in a sequence (e.g., BERT (Devlin et al., 2019)).
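
The two pre-training objectives differ mainly in how training examples are cut out of unlabelled text. The sketch below illustrates this on a toy sentence. It is a simplification under stated assumptions: tokens are whitespace-separated words, only one token is hidden per gap-filling example, and the names `next_word_examples`, `gap_filling_example`, and the `[MASK]` placeholder string are chosen for illustration.

```python
import random

MASK = "[MASK]"  # placeholder token, chosen here for illustration

def next_word_examples(tokens):
    """Build (context, target) pairs for left-to-right next-word prediction."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def gap_filling_example(tokens, rng):
    """Hide one token; the model must recover it using context on both sides."""
    position = rng.randrange(len(tokens))
    masked = tokens[:position] + [MASK] + tokens[position + 1:]
    return masked, position, tokens[position]

tokens = "the cat sat on the mat".split()

# Next-word prediction: every prefix of the sentence yields one example.
for context, target in next_word_examples(tokens):
    print(" ".join(context), "->", target)

# Gap filling: one randomly chosen token is replaced by the mask.
masked, position, target = gap_filling_example(tokens, random.Random(0))
print(" ".join(masked), "->", target)
```

In both cases the "labels" come from the text itself, which is why no manual annotation is needed.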

Ideally, the “general knowledge” learnt by pre-trained LMs can then be transferred to downstream language tasks (where we have less labelled data) such as question answering, fiction generation, text summarisation, etc. Fine-tuning is the process of adapting a given pre-trained LM to different downstream tasks by introducing additional parameters and training them using task-specific objective functions. In certain cases the pre-training objective also gets adjusted to better suit the downstream task. Instead of (or on top of) fine-tuning, the downstream task can be reformulated to resemble the original training task of the large language model (LLM). In practice, this means expressing the task as a set of instructions to the LLM via a prompt. The goal is then not to define a learning objective for a given task, but to find a way to query the LLM so that it directly predicts the output for the downstream task. This is sometimes referred to as Pre-train, Prompt, Predict.[2]
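
As an illustration of reformulating a task as a prompt, the sketch below turns a translation task into text that a next-word predictor could simply continue. The template (`Input:` / `Output:` lines) and the function name `build_prompt` are assumptions made for this example and do not correspond to any particular model's required format. No model is called here.

```python
def build_prompt(instruction, examples, query):
    """Format a downstream task as text for a next-word predictor to continue."""
    lines = [instruction, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The prompt ends where the model's prediction should begin.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)

prompt = build_prompt(
    instruction="Translate English to French.",
    examples=[("cheese", "fromage"), ("good morning", "bonjour")],
    query="thank you",
)
print(prompt)
```

The task-specific work moves from choosing a training objective to choosing the instruction and the worked examples placed in the prompt.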

3.2. Language models tuned for source code generation

The downstream task of interest to us in this paper is code generation, where the input to the model is a mixture of natural language comments and code snippets, and the output is new code. Unlike other downstream tasks, a large corpus of data is available from public code repositories such as GitHub. Code generation can be divided into many sub-tasks, such as variable type generation (e.g., J. Wei et al., 2020), comment generation (e.g., Liu et al., 2021), duplicate detection (e.g., Mou et al., 2016), and code migration from one language to another (e.g., Nguyen et al., 2015). A recent benchmark that covers many tasks is CodeXGLUE (Lu et al., 2021).

LLM technology has brought us within reach of full-solution generation. Codex (Chen, Tworek, Jun, Yuan, Ponde, et al., 2021), a version of GPT-3 fine-tuned for code generation, can solve on average 47/164 problems in the HumanEval code generation benchmark in one attempt. HumanEval is a set of 164 hand-written programming problems, each of which includes a function signature, docstring, body, and several unit tests, with an average of 7.7 tests per problem. Smaller models have followed Codex, such as GPT-J[3] (fine-tuned on top of GPT-2), CodeParrot[4] (also fine-tuned on top of GPT-2, targeting Python generation), and PolyCoder (Xu, Alon, et al., 2022) (GPT-2 style but trained directly on code).
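
To show what "solving" a problem means in a benchmark of this kind, the sketch below checks a generated function body against unit tests. It is a toy under stated assumptions: the problem, its field names (`prompt`, `entry_point`, `tests`), and the helper `passes_tests` are invented for illustration and are not the benchmark's actual data or harness. It also runs generated code with `exec`, which is unsafe for untrusted model output; a real evaluation would need a sandbox.

```python
# A hypothetical problem: signature and docstring form the prompt,
# and the model is expected to generate the function body.
PROBLEM = {
    "prompt": 'def add(a, b):\n    """Return the sum of a and b."""\n',
    "entry_point": "add",
    "tests": [((1, 2), 3), ((-1, 1), 0), ((0, 0), 0)],
}

def passes_tests(problem, completion):
    """Return True if prompt + completion defines a function passing all tests."""
    namespace = {}
    try:
        # WARNING: exec runs arbitrary code; only acceptable in a toy example.
        exec(problem["prompt"] + completion, namespace)
        candidate = namespace[problem["entry_point"]]
        return all(candidate(*args) == expected
                   for args, expected in problem["tests"])
    except Exception:
        # Syntax errors, runtime errors, and missing functions all count as failures.
        return False

good_completion = "    return a + b\n"
bad_completion = "    return a - b\n"

print(passes_tests(PROBLEM, good_completion))
print(passes_tests(PROBLEM, bad_completion))
```

A problem counts as solved only if every test passes, so a completion that is nearly right is scored the same as one that does not parse.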

LLMs comparable in size to Codex include AlphaCode (Y. Li et al., 2022a) and PaLM-Coder (Chowdhery et al., 2022). AlphaCode is trained directly on GitHub data and fine-tuned on coding competition problems. It introduces a method to reduce a large number of potential solutions (up to millions) to a handful of candidates (competitions permit a maximum of 10). On a dataset of 10,000 programming problems, Codex solves around 3% of the problems within 5 attempts, whereas AlphaCode solves 4-7%. In competitions for which it was fine-tuned (CodeContests), AlphaCode achieves a 34% success rate, on par with the average human competitor.
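
One way such a reduction can work is to filter candidates on the example tests given with the problem and then group the survivors by their behaviour on extra inputs, keeping one representative per group. The sketch below loosely follows that filter-then-cluster idea and is not AlphaCode's implementation. Candidates are modelled as Python callables, and the names `select_candidates`, `safe_call`, and `probe_inputs` are assumptions made for illustration.

```python
from collections import defaultdict

ERROR = object()  # sentinel marking a candidate that raised an exception

def safe_call(candidate, value):
    """Run a candidate on one input, mapping any exception to ERROR."""
    try:
        return candidate(value)
    except Exception:
        return ERROR

def select_candidates(candidates, example_tests, probe_inputs, limit=10):
    """Reduce many candidates to at most `limit` behaviourally distinct ones."""
    # Step 1: discard candidates that fail any example test.
    survivors = [c for c in candidates
                 if all(safe_call(c, x) == y for x, y in example_tests)]
    # Step 2: group survivors that behave identically on the probe inputs.
    clusters = defaultdict(list)
    for c in survivors:
        signature = tuple(repr(safe_call(c, x)) for x in probe_inputs)
        clusters[signature].append(c)
    # Step 3: take one representative per cluster, largest clusters first.
    ranked = sorted(clusters.values(), key=len, reverse=True)
    return [group[0] for group in ranked[:limit]]

# Toy task: square a number. Two candidates are wrong in different ways.
candidates = [
    lambda x: x * x,
    lambda x: x ** 2,
    lambda x: 2 * x,                           # fails the example tests
    lambda x: x * x if x >= 0 else -(x * x),   # wrong only for negatives
]
chosen = select_candidates(
    candidates,
    example_tests=[(2, 4), (3, 9)],
    probe_inputs=[-2, 0, 5],
)
print(len(chosen))
```

Here the two correct candidates fall into one cluster, so only two submissions are spent: one on the majority behaviour and one on the candidate that differs on negative inputs.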

Despite promising results, there are known shortcomings. Models can directly copy full solutions or key parts of the solutions from the training data, rather than generating new code. Though developers make efforts to clean and retain only high-quality code, there are no guarantees of correctness and errors can be directly propagated through generations.

Codex can also produce syntactically incorrect or undefined code, and can invoke functions, variables, and attributes that are undefined or out of scope. Moreover, Codex struggles to parse through increasingly long and higher-level or system-level specifications which can lead to mistakes in binding operations to variables, especially when the number of operations and variables in the docstring is large. Various approaches have been explored to filter out bad generations or repair them, especially for syntax errors.
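
As a rough illustration of filtering out bad generations, the sketch below uses Python's standard `ast` module to reject code that does not parse and to flag names that are read but never defined. It is a deliberately crude check and not one of the approaches from the literature: it ignores scoping rules, so it can miss out-of-scope uses and cannot detect undefined attributes. The function names are chosen for this example.

```python
import ast
import builtins

def is_valid_python(source):
    """Return True if the source parses as Python."""
    try:
        ast.parse(source)
        return True
    except SyntaxError:
        return False

def undefined_names(source):
    """Rough, scope-insensitive set of names that are read but never defined."""
    tree = ast.parse(source)
    defined = set(dir(builtins))
    used = set()
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.ClassDef)):
            defined.add(node.name)
        elif isinstance(node, ast.arg):
            defined.add(node.arg)
        elif isinstance(node, ast.alias):
            # "import a.b" binds "a"; "import a as b" binds "b".
            defined.add((node.asname or node.name).split(".")[0])
        elif isinstance(node, ast.Name):
            if isinstance(node.ctx, ast.Load):
                used.add(node.id)
            else:
                defined.add(node.id)
    return used - defined

generations = [
    "def area(r):\n    return 3.14159 * r * r\n",
    "def area(r:\n    return r\n",                # syntax error
    "def area(r):\n    return pi * r * r\n",      # 'pi' is never defined
]
kept = [g for g in generations
        if is_valid_python(g) and not undefined_names(g)]
print(len(kept))
```

Checks of this kind only remove generations that are certainly broken. Code that parses and resolves all of its names can still be incorrect.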

Consistency is another issue. There is a trade-off between non-determinism and generation diversity. Some parameter settings can control the diversity of generation (i.e., how diverse the different generations for a single prompt might be), but there is no guarantee that we will get the same generation if we run the system at different times under the same settings. To alleviate this issue in measurements, metrics such as pass@k (have a solution that passes the tests within k tries) have been modified to be probabilistic.
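
A commonly used probabilistic form of pass@k, described by Chen et al. (2021), draws n ≥ k samples per problem, counts the c samples that pass the tests, and estimates the probability that at least one of k randomly chosen samples passes as 1 − C(n−c, k) / C(n, k). The sketch below computes this estimate for a single problem. The function name `pass_at_k` and the example numbers are illustrative.

```python
from math import comb

def pass_at_k(n, c, k):
    """Estimate pass@k from n samples of which c pass the tests (1 <= k <= n)."""
    if not 1 <= k <= n:
        raise ValueError("k must satisfy 1 <= k <= n")
    if n - c < k:
        # Fewer than k failing samples: any k samples include a passing one.
        return 1.0
    # Probability that all k chosen samples come from the n - c failing ones.
    all_fail = comb(n - c, k) / comb(n, k)
    return 1.0 - all_fail

# Example: 200 samples for one problem, 12 of which pass.
for k in (1, 10, 100):
    print(k, round(pass_at_k(200, 12, k), 4))
```

Averaging this quantity over all problems in a benchmark gives a score that varies less between runs than literally making k attempts per problem.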

[1] https://commoncrawl.org/

[2] http://pretrain.nlpedia.ai/

[3] https://huggingface.co/docs/transformers/main/model_doc/gptj

[4] https://huggingface.co/blog/codeparrot