In the early 2000s, after the Internet bubble burst, Google realized that the data users leave behind had immense value. That was the real turning point: on the web, our private lives became raw material to be extracted. The arrival of social networks a few years later, Facebook first among them, drove the final nail into the coffin and made privacy a permanently relative notion. Now part of the Meta empire, the company is unfortunately known as the biggest data vacuum on the web, thanks in particular to the immense reach of Meta AI.

Obviously, the predator is no longer alone. OpenAI, Google, Anthropic: all the tech giants are waging a silent war to capture every last piece of your digital existence. To do so, they are equipped with the best weapons in the contemporary arsenal: chatbots. Whether you use ChatGPT or Gemini for personal or professional reasons, you leave a trace of yourself as soon as you converse with these interfaces: a persistent digital alter ego, engraved deep within the servers of American Big Tech.

It resembles you so closely that your own reflection in the mirror would look blurry by comparison: a replica so faithful that even your friends or family could not tell it apart from you. To those who would cry conspiracy theory: unfortunately, it is nothing of the sort. A very serious study published in the journal Nature Human Behaviour on January 30, 2026 has just demonstrated it. Even if LLMs do not understand in the human sense of the term, they have razed our inner garden, depriving us of a fundamental right: the right to keep part of ourselves secret.

Language: a semantic spy

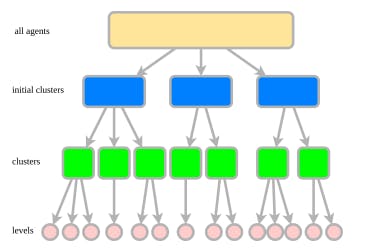

The experiment was conducted by researchers from the University of Michigan and the University of Pittsburgh on more than 160 participants. They shared their private lives with ChatGPT and Claude in the form of daily videos or raw monologues in which they simply let their minds wander out loud.

The AI models never needed subjects to fill out any questionnaires. After integrating the participants’ confidences, the machine had to respond to classic personality tests used in psychology by pretending to be them. The answers then generated by the AI models corresponded almost perfectly to reality, surpassing even the predictions that their friends or family members might have made.

“What this study demonstrates is that AI can also help us better understand ourselves, offering insight into what makes us most human: our personalities,” says Aidan Wright, first author of the study and professor of psychology. By simply counting the occurrences of words during conversations, AI models are able to predict with frightening accuracy extremely intimate psychological components: felt emotions, stress level, social behaviors and even the likelihood of a future mental disorder diagnosis.
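To make "counting the occurrences of words" concrete, here is a minimal Python sketch. It is only an illustration, not the researchers' method, which the article does not detail: the sample monologue, the `FIRST_PERSON` word list, and the function names `word_counts` and `relative_frequency` are all hypothetical.

```python
import re
from collections import Counter


def word_counts(transcript: str) -> Counter:
    """Count how often each lowercase word appears in a transcript."""
    words = re.findall(r"[a-z']+", transcript.lower())
    return Counter(words)


def relative_frequency(counts: Counter, vocabulary: set[str]) -> float:
    """Return the share of all counted words that belong to `vocabulary`."""
    total = sum(counts.values())
    if total == 0:
        return 0.0
    return sum(counts[word] for word in vocabulary) / total


# Hypothetical monologue and word list, invented for this example.
monologue = "I think I am tired today. I worry that I will not finish my work."
FIRST_PERSON = {"i", "me", "my", "mine", "myself"}

counts = word_counts(monologue)
print(counts.most_common(3))
print(round(relative_frequency(counts, FIRST_PERSON), 2))
```

Frequencies like these are only raw signals. Turning them into a personality estimate would require a model trained on real data, which this sketch does not attempt.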

For Chandra Sripada, professor of philosophy and psychiatry at the University of Michigan, these results prove that language carries clues about our psychological traits so precise that they were impossible to process before the arrival of LLMs. “Thanks to generative AI, researchers can now analyze this type of data quickly and with precision that was simply impossible before,” he emphasizes.

Keep this in mind: from the moment you regularly use a chatbot, there is nowhere to hide. This was already true 15 years ago, but with the advent of natural language processing, your informal data is now transformed into usable behavioral metadata, and the change of scale is immense. Since our passing thoughts are “imbued with our deep identity,” as Whitney Ringwald, co-author of the study, puts it, the distinction between what you choose to show and what you really are no longer exists. It is now up to us to decide whether the comfort these tools provide, very practical it must be admitted, is really worth the sacrifice.
