Authors:

(1) Nora Schneider, Computer Science Department, ETH Zurich, Zurich, Switzerland ([email protected]);

(2) Shirin Goshtasbpour, Computer Science Department, ETH Zurich, Zurich, Switzerland and Swiss Data Science Center, Zurich, Switzerland ([email protected]);

(3) Fernando Perez-Cruz, Computer Science Department, ETH Zurich, Zurich, Switzerland and Swiss Data Science Center, Zurich, Switzerland ([email protected]).

Table of Links

Abstract and 1 Introduction

2 Background

2.1 Data Augmentation

2.2 Anchor Regression

3 Anchor Data Augmentation

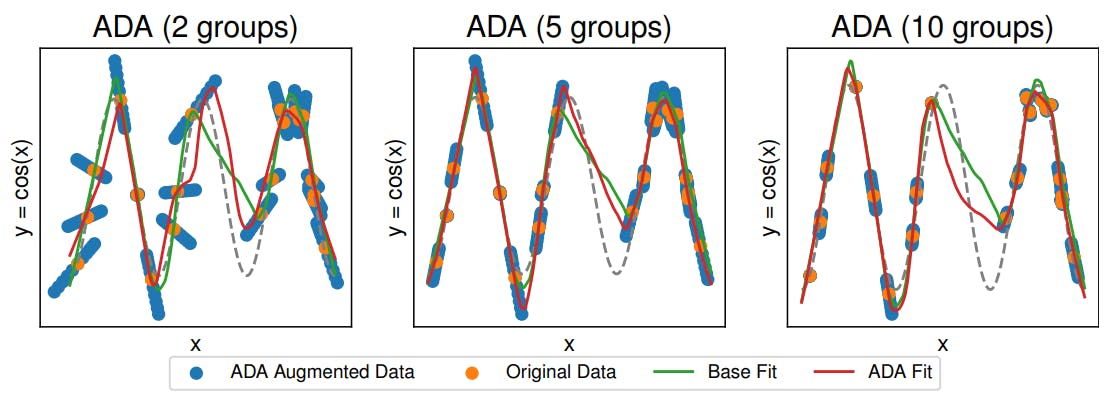

3.1 Comparison to C-Mixup and 3.2 Preserving nonlinear data structure

3.3 Algorithm

4 Experiments and 4.1 Linear synthetic data

4.2 Housing nonlinear regression

4.3 In-distribution Generalization

4.4 Out-of-distribution Robustness

5 Conclusion, Broader Impact, and References

A Additional information for Anchor Data Augmentation

B Experiments

5 Conclusion

We introduced Anchor Data Augmentation (ADA), an extension of Anchor Regression (AR) for the purpose of data augmentation. AR is a novel causal approach to increasing robustness in regression problems. In ADA, we systematically mix multiple samples based on a collective similarity criterion, which is determined via clustering. The augmented samples are modifications of the original samples that are moved towards or away from the cluster centroids, depending on the desired degree of robustness in AR. Our empirical evaluations across diverse synthetic and real-world regression problems consistently demonstrate the effectiveness of ADA, especially when training data is limited. ADA is competitive with or outperforms state-of-the-art data augmentation strategies for regression problems, although the improvements are marginal on some datasets.

ADA can be applied to any regression setting, and we have not found any case in which it degraded the results. To apply ADA, we only need to cluster the data and select a distribution for γ. We relied on vanilla k-means, and the results are robust with respect to the number of clusters. Other clustering algorithms might be more suitable for different applications. To set the parameter γ, we sampled it from a uniform distribution; we believe a gamma distribution could be equally effective.
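The two ingredients named above, a clustering step and a distribution for γ, can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes the standard Anchor Regression transformation, in which the one-hot cluster memberships form the anchor matrix A, Π_A is the projection onto its column space, and the data are mapped to X + (√γ − 1) Π_A X (and likewise for y). The helper names `kmeans_labels` and `ada_augment`, the range parameter `alpha`, and the choice to sample γ uniformly from [1/alpha, alpha] are hypothetical and only serve the example; Section 3 of the paper remains the reference for the actual procedure.

```python
import numpy as np


def kmeans_labels(X, k, n_iter=50, seed=0):
    """Plain Lloyd's k-means; returns one cluster index per row of X."""
    X = np.asarray(X, dtype=float)
    rng = np.random.default_rng(seed)
    # Initialise centroids from k distinct samples (fancy indexing copies).
    centroids = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Assign every sample to its nearest centroid.
        dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        for j in range(k):
            members = X[labels == j]
            if len(members) > 0:  # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(axis=0)
    return labels


def ada_augment(X, y, labels, gamma):
    """Apply the (assumed) anchor transformation with cluster anchors."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # One-hot anchor matrix A: row i marks the cluster of sample i.
    A = np.eye(labels.max() + 1)[labels]
    # Orthogonal projection onto the column space of A; applied to X it
    # replaces every sample by the mean of its cluster.
    P = A @ np.linalg.pinv(A)
    shift = np.sqrt(gamma) - 1.0
    return X + shift * (P @ X), y + shift * (P @ y)


# Toy usage on synthetic linear data (illustrative values only).
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 2))
y = X @ np.array([1.5, -0.5]) + 0.1 * rng.normal(size=60)

labels = kmeans_labels(X, k=3)
alpha = 2.0                              # hypothetical range parameter
gamma = rng.uniform(1.0 / alpha, alpha)  # gamma drawn from a uniform distribution
X_aug, y_aug = ada_augment(X, y, labels, gamma)
```

Under this transformation, γ = 1 returns the original samples, while other values rescale each sample's cluster-mean component by √γ and leave its within-cluster residual unchanged. Drawing a fresh γ per augmentation pass yields a family of augmented datasets that can be combined with the original data during training.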

Broader Impact

The purpose of data augmentation is to compensate for data scarcity in domains where gathering and accurately labeling data by experts is impractical, expensive, or time-consuming. If applied properly, it can effectively expand the training dataset, reduce overfitting, and improve the model's robustness, as was shown in the paper. However, it is important to note that the choice and combination of data augmentation techniques depends on the specific problem, and using the wrong augmentation method may introduce additional bias into the model. More generally, incorrect data augmentation can lead to the following problems: overfitting to the augmented data, loss of important information, introduction of unrealistic patterns, and imbalanced representation of the data. Detecting problems that emerge from data augmentation may not be straightforward. In particular, performance on a test distribution that matches the training distribution may be misleading, and the model's predictions should be used with caution on new data that reflects the distribution shifts or variations encountered in the real world.
