Authors:

(1) Soyeong Jeong, School of Computing;

(2) Jinheon Baek, Graduate School of AI;

(3) Sukmin Cho, School of Computing;

(4) Sung Ju Hwang, Korea Advanced Institute of Science and Technology;

(5) Jong C. Park, School of Computing.

Table of Links

Abstract and 1. Introduction

2 Related Work

3 Method and 3.1 Preliminaries

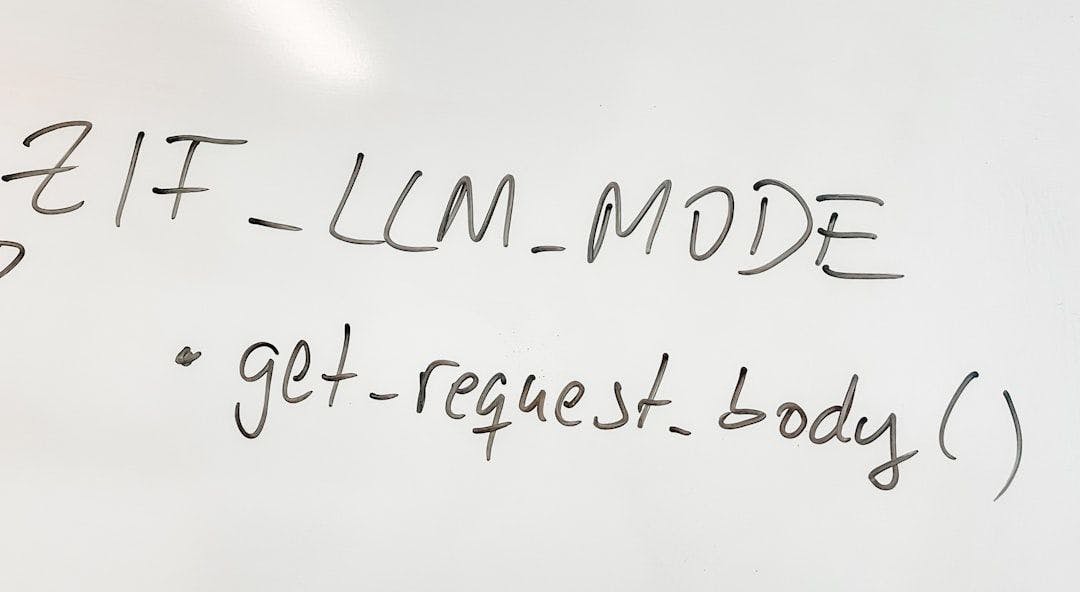

3.2 Adaptive-RAG: Adaptive Retrieval-Augmented Generation

4 Experimental Setups and 4.1 Datasets

4.2 Models and 4.3 Evaluation Metrics

4.4 Implementation Details

5 Experimental Results and Analyses

6 Conclusion, Limitations, Ethics Statement, Acknowledgements, and References

A Additional Experimental Setups

B Additional Experimental Results

5 Experimental Results and Analyses

In this section, we show the overall experimental results and offer in-depth analyses of our method.

Main Results First of all, Table 1 shows our main results averaged over all considered datasets, which corroborate our hypothesis that simple retrieval-augmented strategies are less effective than the complex strategy, while the complex one is significantly more expensive than the simple ones. In addition, we report more granular results with FLAN-T5-XL on each of the single-hop and multi-hop datasets in Table 2 (and more with different LLMs in Table 7 and Table 8 of the Appendix), which are consistent with the results observed in Table 1.

However, in a real-world scenario, not all users ask queries with the same level of complexity, which underscores the need for adaptive strategies. Note that among the adaptive strategies, our Adaptive-RAG shows remarkable effectiveness over the competitors (Table 1). This indicates that merely deciding whether or not to retrieve is suboptimal. Also, as shown in Table 2, such simple adaptive strategies are particularly inadequate for handling complex queries in multi-hop datasets, which require aggregating information and reasoning over multiple documents. Meanwhile, our approach supports a more fine-grained query handling strategy by further incorporating an iterative module for complex queries. Furthermore, in a realistic setting, we should take into account not only effectiveness but also efficiency. As shown in Table 1, compared to the complex multi-step strategy, our proposed adaptive strategy is significantly more efficient across all model sizes. This is meaningful in the era of LLMs, where the cost of accessing them is a critical factor for practical applications and scalability. Finally, to see the upper bound of our Adaptive-RAG, we report its performance with an oracle classifier, for which the classification performance is perfect. As shown in Table 1 and Table 2, it achieves the best performance while being much more efficient than our Adaptive-RAG without the oracle classifier. These results support the validity and significance of our proposal for adapting retrieval-augmented LLM strategies based on query complexity, and further suggest that developing improved classifiers is a promising direction for approaching optimal performance.
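The query-handling idea discussed above (no retrieval, a single retrieval step, or iterative multi-step retrieval, chosen per query) can be sketched as a small dispatcher. This is a minimal illustration under stated assumptions, not the authors' implementation: the three strategy functions and `toy_classifier` are hypothetical stand-ins, and the labels 'A', 'B', and 'C' follow the naming used in Figure 3.

```python
from typing import Callable, Dict

# Hypothetical stand-ins for the three strategies. A real system would call
# an LLM (and a retriever for 'B' and 'C'); here they only tag the query.
def answer_without_retrieval(query: str) -> str:
    return f"[no-retrieval] {query}"

def answer_with_single_retrieval(query: str) -> str:
    return f"[single-step] {query}"

def answer_with_multi_step_retrieval(query: str) -> str:
    return f"[multi-step] {query}"

# Complexity label -> strategy, using the 'A (No)', 'B (One)', 'C (Multi)' naming.
STRATEGIES: Dict[str, Callable[[str], str]] = {
    "A": answer_without_retrieval,
    "B": answer_with_single_retrieval,
    "C": answer_with_multi_step_retrieval,
}

def adaptive_answer(query: str, classify: Callable[[str], str]) -> str:
    """Route a query to the strategy selected by the complexity classifier."""
    label = classify(query)
    if label not in STRATEGIES:
        raise ValueError(f"unknown complexity label: {label!r}")
    return STRATEGIES[label](query)

def toy_classifier(query: str) -> str:
    """Placeholder heuristic based on word count (an assumption for this
    sketch only; the paper trains a classifier for this step)."""
    n_words = len(query.split())
    if n_words <= 4:
        return "A"
    if n_words <= 10:
        return "B"
    return "C"
```

Keeping the classifier as a plain callable means an oracle classifier (one that returns the gold label) can be swapped in without changing the routing code, mirroring the upper-bound comparison described above.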

Classifier Performance To understand how the proposed classifier works, we analyze its performance across different complexity labels. As Figure 3 (Left and Center) shows, the classification accuracy of our Adaptive-RAG is better than that of the other adaptive retrieval baselines, which leads to overall QA performance improvements. In other words, this result indicates that our Adaptive-RAG more accurately classifies complexity levels at a finer granularity, distinguishing among not performing retrieval, performing retrieval only once, and performing retrieval multiple times. In addition to the true positive performance of our classifier averaged over all three labels in Figure 3 (Left and Center), we further report its confusion matrix in Figure 3 (Right). The confusion matrix reveals some notable trends: ‘C (Multi)’ is sometimes misclassified as ‘B (One)’ (about 31%) and ‘B (One)’ as ‘C (Multi)’ (about 23%); ‘A (No)’ is often misclassified as ‘B (One)’ (about 47%) and less frequently as ‘C (Multi)’ (about 22%). While the overall results in Figure 3 show that our classifier effectively categorizes the three labels, further refining it based on such misclassifications would be a meaningful direction for future work.
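For readers who want to reproduce this kind of analysis on their own predictions, the following sketch computes a row-normalized confusion matrix, where each row gives the share of a true label that was predicted as each label. The function names are hypothetical and no data from Figure 3 is reproduced here.

```python
from collections import Counter
from typing import Dict, Sequence

LABELS = ("A", "B", "C")  # 'A (No)', 'B (One)', 'C (Multi)'

def confusion_matrix(true_labels: Sequence[str],
                     predicted_labels: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Return matrix[true][pred] as the fraction of `true` items predicted as `pred`."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label sequences must have the same length")
    counts = Counter(zip(true_labels, predicted_labels))
    matrix: Dict[str, Dict[str, float]] = {}
    for t in LABELS:
        row_total = sum(counts[(t, p)] for p in LABELS)
        # A label with no true examples yields an all-zero row.
        matrix[t] = {
            p: (counts[(t, p)] / row_total if row_total else 0.0) for p in LABELS
        }
    return matrix

def per_label_accuracy(matrix: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """The diagonal of the row-normalized matrix: the share of each true label predicted correctly."""
    return {t: matrix[t][t] for t in LABELS}
```

Off-diagonal entries such as `matrix["A"]["B"]` correspond to the misclassification rates discussed above (for example, 'A (No)' predicted as 'B (One)').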

Analyses on Efficiency for Classifier While Table 1 shows the relative elapsed time for each of the three different RAG strategies, we further provide the exact elapsed time per query for our Adaptive-RAG and the distribution of predicted labels from our query-complexity classifier in Table 3. Consistent with the relative elapsed times in Table 1, the exact times in Table 3 show that efficiency can be substantially improved by identifying simple or straightforward queries.
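The link between the predicted-label distribution and overall efficiency can be made concrete with a small calculation: the expected time per query is the average of the per-strategy times weighted by how often each label is predicted. The sketch below assumes this simple weighted-average model and a hypothetical function name; the numbers in the usage example are made up for illustration and are not the values in Table 3.

```python
from typing import Dict

def expected_time_per_query(label_share: Dict[str, float],
                            time_per_label: Dict[str, float]) -> float:
    """Weighted average of per-strategy time, weighted by predicted-label share."""
    if set(label_share) != set(time_per_label):
        raise ValueError("both mappings must cover the same labels")
    if abs(sum(label_share.values()) - 1.0) > 1e-6:
        raise ValueError("label shares must sum to 1")
    return sum(label_share[label] * time_per_label[label] for label in label_share)

if __name__ == "__main__":
    # Illustrative numbers only (not taken from the paper).
    share = {"A": 0.2, "B": 0.5, "C": 0.3}
    seconds = {"A": 1.0, "B": 2.0, "C": 10.0}
    print(expected_time_per_query(share, seconds))  # about 4.2 with these made-up inputs
```

Under this model, moving probability mass from 'C' to 'A' or 'B' lowers the expected cost, which is the effect described above for simple or straightforward queries.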

Analyses on Training Data for Classifier We have shown that the classifier plays an important role in adaptive retrieval. Here, we further analyze the different strategies for training the classifier by ablating our full training strategy, which includes two approaches: generating silver data from predicted outcomes of models and utilizing inductive bias in datasets (see Section 3.2). As Table 4 shows, compared to the training strategy relying solely on the data derived from inductive bias, ours is significantly more efficient. This efficiency is partly because ours also covers the case in which no documents are considered at all, as also implied by the classification accuracy; by contrast, queries in the existing datasets do not capture whether retrieval is required or not. On the other hand, when using only the silver data annotated from the correct predictions, the overall classification accuracy is high, yet the overall QA performance implies that relying on the silver data alone may not be optimal. This may be because the silver data does not cover complexity labels for incorrectly predicted queries, which leads to weaker generalization to such queries. Meanwhile, by also incorporating complexity labels from dataset bias (single-hop vs multi-hop), the classifier becomes more accurate in predicting multi-hop queries, leading to better performance. It is worth noting that our automatic labeling strategies are two particular instantiations for training the classifier, and that there could be other instantiations, which we leave as future work.
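The two labeling sources ablated above can be combined as in the following sketch. It is one plausible reading of the description, not the authors' code: it assumes that a silver label is the simplest strategy whose prediction was correct, and that queries with no correct prediction fall back to a dataset-level label ('B' for single-hop datasets, 'C' for multi-hop datasets). The function names and the `dataset_type` strings are hypothetical.

```python
from typing import Dict, Optional

def silver_label(correct_by_strategy: Dict[str, bool]) -> Optional[str]:
    """Pick the simplest strategy ('A' before 'B' before 'C') that answered correctly.

    Returns None when no strategy was correct, i.e. the query has no silver label.
    The simplest-first tie-break is an assumption of this sketch.
    """
    for label in ("A", "B", "C"):
        if correct_by_strategy.get(label, False):
            return label
    return None

def bias_label(dataset_type: str) -> str:
    """Fallback label from dataset bias (assumed mapping: single-hop -> 'B', multi-hop -> 'C')."""
    if dataset_type == "single-hop":
        return "B"
    if dataset_type == "multi-hop":
        return "C"
    raise ValueError(f"unknown dataset type: {dataset_type!r}")

def training_label(correct_by_strategy: Dict[str, bool], dataset_type: str) -> str:
    """Full strategy: use the silver label when available, else the dataset-bias label."""
    label = silver_label(correct_by_strategy)
    return label if label is not None else bias_label(dataset_type)
```

In this framing, the "silver only" ablation corresponds to discarding queries for which `silver_label` returns `None`, and the "inductive bias only" ablation corresponds to always calling `bias_label`, which can never produce 'A'.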

Analyses on Classifier Size To investigate how sensitive our classifier is to its size, we conducted further experiments. As shown in Table 6, we observe no significant performance differences among classifiers of various sizes, even though the smaller classifiers have reduced complexity and fewer parameters. This indicates that our proposed classifier can be used at smaller sizes in resource-constrained, real-world settings without compromising performance.

Case Study We conduct a case study to qualitatively compare our Adaptive-RAG against Adaptive Retrieval. Table 5 shows the classified complexity and the query handling patterns for both simple and complex questions. First, for the simple single-hop question, our Adaptive-RAG identifies that it is answerable by only using the LLM’s parametric knowledge about ‘Google’. By contrast, Adaptive Retrieval fetches additional documents, leading to longer processing times and occasionally producing incorrect responses due to the inclusion of partially irrelevant information about ‘Microsoft’. Meanwhile, faced with a complex question, Adaptive-RAG seeks out relevant information, including details like ‘a son of John Cabot’, which may not have been stored in LLMs, while Adaptive Retrieval fails to request such information from external sources, resulting in inaccurate answers.