The biggest breakthrough enabling mass AI adoption last year was reliable function and tool calling.

At the start of 2025, major releases – like Claude tools – triggered excitement and panic on social media. They made it easy to optimize basic routine tasks. But the real question is: why did this suddenly work?

I recently took another gadget-free day and used the time to reflect on this shift and write down my thoughts for you.

Today, LLMs have far more structured context about us and, crucially, the ability to trigger actions. Previously, when you typed into ChatGPT “help me with X,” it would respond with “tell me about A,” then “tell me about B.” In 2025, that stage largely disappeared. We learned how to give models a richer context upfront.

As a result, LLMs stopped being just chats and became orchestrators. The interaction model flipped: before, LLMs told you what to do. Now, they tell tool interfaces what to do, and those tools execute.
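To make the flip concrete, here is a minimal sketch of the host side of a tool call. It assumes the model emits a JSON object with `name` and `arguments` fields; that shape, the `get_weather` tool, and the `dispatch` helper are illustrative assumptions, not any specific vendor's API.

```python
import json

# Hypothetical tool: a stand-in for a real integration.
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

# Registry of tools the host is willing to execute.
TOOLS = {"get_weather": get_weather}

def dispatch(tool_call_json: str) -> str:
    """Execute a model-emitted call of the assumed form {"name": ..., "arguments": {...}}."""
    call = json.loads(tool_call_json)
    name = call["name"]
    if name not in TOOLS:
        # Unknown tools are refused rather than guessed at.
        return json.dumps({"error": f"unknown tool: {name}"})
    result = TOOLS[name](**call["arguments"])
    return json.dumps({"tool": name, "result": result})

# Simulated model output; a real model would produce this string.
model_output = '{"name": "get_weather", "arguments": {"city": "Lisbon"}}'
print(dispatch(model_output))
```

The model only names the action; the host decides whether and how to run it.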

Function calling itself isn’t new; it existed back in 2023. What changed is:

- Reasoning models became cheaper (and smarter).

- High-quality integrations appeared inside the existing UX. MCP-style integrations became accessible. What once required CLI work is now one click.

- Most importantly, function calling stopped breaking and became safe for production.

Consequence #1: LLMs Become Runtimes

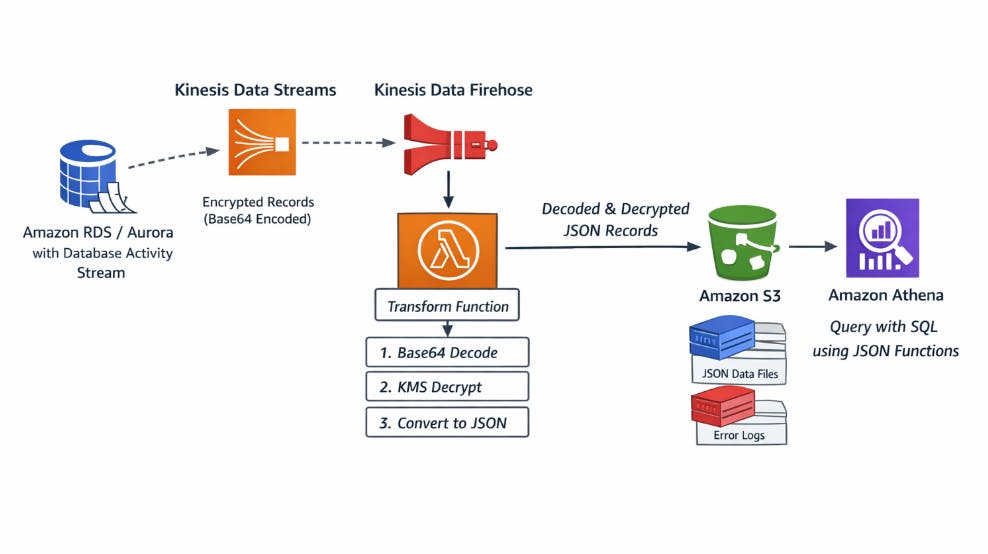

Once LLMs begin executing tasks, the state cannot live within the model. To ensure reliable execution, the state must be externalized. Otherwise, hallucinations and context window limits make repeatable outcomes impossible. This is where BPMS- or Temporal-like systems come in: explicit process definitions that survive prompt failures and allow execution to continue.

For business, it is critical to get repeatable results. In other words, instead of an orchestrator, the system needs to become a runtime. This can be done via MCP. I think it’s only a matter of time before we see a cheap product that does this.

Of course, a runtime is expected to always return the same result, whereas an LLM is inherently stochastic and influenced by many factors. Therefore, it’s not the LLM itself that becomes the runtime, but the entire surrounding system, which makes the output predictable by keeping the state outside the model.
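A minimal sketch of what externalized state can look like, assuming a JSON file as the store and a simple retry policy. The `run_workflow` helper and the step names are hypothetical; a production system would use a proper workflow engine rather than this toy.

```python
import json
import tempfile
from pathlib import Path

def run_workflow(steps, state_path: Path, max_attempts: int = 3) -> dict:
    """Run named steps in order, checkpointing to disk after each one.

    State lives in a file, not in the model's context, so a failed or
    restarted run resumes from the last completed step.
    """
    if state_path.exists():
        state = json.loads(state_path.read_text())
    else:
        state = {"done": [], "data": {}}
    for name, step in steps:
        if name in state["done"]:
            continue  # completed in an earlier run
        for attempt in range(1, max_attempts + 1):
            try:
                state["data"][name] = step(state["data"])
                break
            except Exception:
                if attempt == max_attempts:
                    raise  # give up; earlier checkpoints are preserved
        state["done"].append(name)
        state_path.write_text(json.dumps(state))  # checkpoint
    return state

# A stand-in for an LLM step that fails once (e.g. malformed output).
calls = {"n": 0}
def flaky_llm_step(data):
    calls["n"] += 1
    if calls["n"] == 1:
        raise RuntimeError("simulated malformed model output")
    return "summary of " + data["fetch"]

steps = [("fetch", lambda data: "invoice #42"), ("summarize", flaky_llm_step)]
path = Path(tempfile.mkdtemp()) / "state.json"
state = run_workflow(steps, path)
print(state["data"]["summarize"])
```

Calling `run_workflow(steps, path)` a second time skips both steps, because the process definition and its progress survive outside the prompt.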

Security Risks Increase

Attackers are now even more interested in gaining control over tools, traffic, or the LLM itself. Imagine malicious code embedded in a desktop AI tool that quietly steals crypto when you prompt: “Analyze which coins I should invest my Solana in.” This isn’t hypothetical, and it’s not limited to desktop apps. I expect trust in tooling to matter more and more.

Consequence #2: The Spread of Reasoning Models

At the beginning of 2025, reasoning models felt exotic and hallucination-prone (I tried several myself). Today, “thinking” models are the most effective part of process orchestration.

Take Claude Opus. It first reasons about what a landing page needs, then executes the work and produces results that people praise on X. Anthropic calls this a hybrid model. I don’t know exactly where the efficiency boundary lies, but from a results standpoint, it works great.

Prompt: “Create a red landing page to sell my yogurt product named Kogurt.”

Paradoxically, the worse the input, the better the results and the stronger the “wow” effect.

My assumptions here:

- Users want results, not the reasoning process (they won’t reread or analyze it)

- Results need to be repeatable

- Internal reasoning can be long and expensive (we pay per token), and users don’t want to pay for that

Most likely, reasoning will be hidden, or there will be ways to avoid starting from scratch each time, instead working more like precedent law.
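One way to picture the “precedent” idea is a cache that stores a reasoned plan once and reuses it for equivalent tasks. This is only a sketch: the `PrecedentCache` class is hypothetical, and matching tasks by normalized text is a deliberate simplification (a real system would likely need semantic matching).

```python
import hashlib

class PrecedentCache:
    """Reuse a previously reasoned plan for an equivalent task."""

    def __init__(self):
        self._plans = {}

    @staticmethod
    def _key(task: str) -> str:
        # Assumption: tasks count as "equivalent" if they match after
        # lowercasing and collapsing whitespace.
        normalized = " ".join(task.lower().split())
        return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

    def get_or_reason(self, task, reason):
        key = self._key(task)
        if key not in self._plans:
            self._plans[key] = reason(task)  # expensive reasoning runs once
        return self._plans[key]

# Stand-in for a costly reasoning pass billed per token.
reasoning_calls = []
def expensive_reasoning(task):
    reasoning_calls.append(task)
    return ["hero section", "pricing", "call to action"]

cache = PrecedentCache()
first = cache.get_or_reason("Create a landing page", expensive_reasoning)
second = cache.get_or_reason("  create a LANDING page ", expensive_reasoning)
print(len(reasoning_calls))  # 1
```

The second request pays nothing for reasoning, which also helps with repeatability: the same task yields the same plan.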

A counterargument is that humans reason too. However, simpler approaches can work just as well, such as Claude writing a Python script to perform the calculations.

Consequence #3: Coordination Between Models

Everyone wants a universal silver-bullet model. But realistically, we can’t train a single model to excel in all domains at once. Training a model that’s simultaneously an excellent programmer and an excellent doctor is technically challenging, and maintaining it long-term is even harder.

There’s also the issue of domain-specific knowledge: a programmer might require N terabytes of data, while a doctor needs M terabytes, each with different reasoning processes, guardrails, and so on.

Most likely, the world will move in a different direction: a single reasoning/router model paired with a set of specialized expert models.

Today, models communicate with each other by:

- Passing prompts or parts of prompts

- Calling other models via function calls

But this is expensive. While it benefits providers financially (charging per token), it’s slow and inefficient.

I expect the solution will be server-to-server communication: a server-side function call that transfers structured information – essentially a stateless service. For example, a router model service and a doctor service, where the router sends information in a structured format:

    {
      "request_id": "123",
      "temperature": 36.6,
      "symptoms": ["sore throat"]
    }

This way, reasoning isn’t transferred, only facts. It reduces hallucinations and prevents confusing the expert model. The router acts as an input filter before invoking an expensive model.
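On the receiving side, the expert service could enforce that contract before doing any work. A minimal sketch, assuming the three fields from the example payload are the entire schema; `TriageRequest` and `parse_request` are hypothetical names.

```python
import json
from dataclasses import dataclass

# Assumed contract between the router and a "doctor" expert service.
REQUIRED_FIELDS = {"request_id", "temperature", "symptoms"}

@dataclass(frozen=True)
class TriageRequest:
    request_id: str
    temperature: float
    symptoms: tuple

def parse_request(raw: str) -> TriageRequest:
    """Accept only the agreed facts; reject free-form reasoning or extra keys."""
    data = json.loads(raw)
    if not isinstance(data, dict) or set(data) != REQUIRED_FIELDS:
        raise ValueError(
            "payload must contain exactly: " + ", ".join(sorted(REQUIRED_FIELDS))
        )
    temperature = data["temperature"]
    if isinstance(temperature, bool) or not isinstance(temperature, (int, float)):
        raise ValueError("temperature must be a number")
    symptoms = data["symptoms"]
    if not isinstance(symptoms, list) or not all(isinstance(s, str) for s in symptoms):
        raise ValueError("symptoms must be a list of strings")
    return TriageRequest(str(data["request_id"]), float(temperature), tuple(symptoms))

request = parse_request(
    '{"request_id": "123", "temperature": 36.6, "symptoms": ["sore throat"]}'
)
print(request)
```

A payload that smuggles in a `"reasoning"` field is rejected outright, so the expert never sees the router’s chain of thought.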

It also changes monetization. The router or generalist model can live locally on the user’s device (phone, tablet), while specialist models are hosted by providers. Local calls (e.g., weather, calendar) are free, while calls to specialists, such as a chemist, doctor, or engineer, incur costs.
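The split between free local calls and paid specialist calls can be sketched as a small lookup. All names and prices below are made up for illustration, and prices are kept in integer cents to avoid floating-point rounding.

```python
# Hypothetical catalog: local tools run on-device, specialists are hosted.
LOCAL_TOOLS = {"weather", "calendar"}
SPECIALIST_PRICE_CENTS = {"doctor": 5, "chemist": 4, "engineer": 3}  # invented prices

def route(target: str) -> tuple:
    """Return (where the call runs, cost in cents) for a requested capability."""
    if target in LOCAL_TOOLS:
        return ("local", 0)
    if target in SPECIALIST_PRICE_CENTS:
        return ("hosted", SPECIALIST_PRICE_CENTS[target])
    raise KeyError(f"no handler for: {target}")

def session_cost_cents(targets) -> int:
    """Total cost of a sequence of calls made by the on-device router."""
    return sum(route(t)[1] for t in targets)

total = session_cost_cents(["weather", "doctor", "calendar", "chemist"])
print(total)  # 9
```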

Overall, I anticipate narrower specialization. Models like Claude Sonnet, which is excellent for coding, illustrate that. If we know which model to call and when, we spend less money and get results faster.

In fact, this is already happening in mixture-of-experts models, such as the excellent Kimi-K2. But everything hinges on routing quality, and debugging remains a challenge:

- If the answer is wrong, was it the router or the expert? How can we tell?

- Routers need constant iteration and efficiency measurement

- The longer the request chain, the worse the performance.
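The first question, router or expert, becomes answerable if every request leaves a trace and there is a labeled test set to compare against. A minimal sketch, assuming such labels exist; `Trace` and `attribute_failure` are hypothetical names, and exact string comparison of answers is a simplification.

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Trace:
    query: str
    routed_to: str  # which expert the router picked
    answer: str     # what that expert returned

def attribute_failure(trace: Trace, expected_expert: str, expected_answer: str) -> str:
    """Classify a labeled case as 'ok', a 'router' error, or an 'expert' error."""
    if trace.routed_to != expected_expert:
        return "router"  # wrong expert chosen, so the answer is not evaluated
    if trace.answer != expected_answer:
        return "expert"  # right expert, wrong answer
    return "ok"

# Each case: (recorded trace, expected expert, expected answer).
cases = [
    (Trace("fever and sore throat", "doctor", "see a GP"), "doctor", "see a GP"),
    (Trace("segfault in C", "doctor", "rest"), "programmer", "check the pointer"),
    (Trace("persistent rash", "doctor", "ignore it"), "doctor", "see a GP"),
]
report = Counter(attribute_failure(t, e, a) for t, e, a in cases)
print(dict(report))
```

Tracking these counts over time gives the efficiency measurement that router iteration needs.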

Mixture-of-agents shows promise, but in my opinion it has theoretical downsides, and the router remains the single point of failure mentioned above:

- In complex flows, the router may select different experts at each step.

- Even for the same task, slightly different phrasing can cause different routing outcomes.
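Sensitivity to phrasing can at least be measured. A minimal sketch: send several paraphrases of one task through the router and report how often it agrees with its own most common choice. The `keyword_router` below is a toy stand-in for a real router model.

```python
from collections import Counter

def routing_consistency(router, paraphrases) -> float:
    """Fraction of paraphrases sent to the most common expert (1.0 = fully stable)."""
    if not paraphrases:
        raise ValueError("need at least one paraphrase")
    choices = Counter(router(p) for p in paraphrases)
    return choices.most_common(1)[0][1] / len(paraphrases)

# Toy router for illustration only: routes on a single keyword.
def keyword_router(query: str) -> str:
    return "doctor" if "throat" in query.lower() else "generalist"

paraphrases = [
    "My throat hurts",
    "I have a sore throat",
    "It hurts when I swallow",
    "Throat pain since Monday",
]
score = routing_consistency(keyword_router, paraphrases)
print(score)  # 0.75
```

Here one paraphrase out of four lands on a different expert, which is exactly the instability described above.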

Whoever builds the best router that solves these problems will win the race.

Conclusions

In 2026, I expect more of everything:

- “Wow” moments like “AI made Counter-Strike for $5”

- Panic around “AI will take our jobs,” protests, and modern Luddites

- Situations like “I gave AI all my money to trade,” leading to localized financial crashes